repmgr - after a failover switch, both nodes act as a master

Solution 1

I looked into auto failover using repmgr a few months back. From what you describe, repmgr is working as expected.

IIRC, repmgr doesn't bring an old master back up as a new standby; you would need to run a standby clone with --force. You can set other standby nodes to follow a new master should failover occur (repmgr standby follow).

- Would you expect your master to recover unexpectedly?

- How do you handle failover in your application?

- Aren't you redirecting all database traffic to the new master?
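One way to notice the situation from the question (two nodes reporting as master once the old master returns) is to count the master rows in the `repmgr cluster show` output. The following is a minimal sketch, not part of repmgr: the `count_masters` helper is hypothetical, and it assumes the output keeps the "Role | Connection String" layout shown in the question.

```shell
#!/bin/sh
# Sketch: count rows whose role column says "master" in `repmgr cluster show` output.
# Assumption: the role is the first '|'-separated column, as in the question's output.
count_masters() {
    # Reads cluster-show output on stdin and prints the number of master rows.
    awk -F'|' '$1 ~ /master/ { n++ } END { print n + 0 }'
}

# Sample copied from the question; in practice pipe in the real output:
#   repmgr -f /etc/repmgr/repmgr.conf cluster show | count_masters
sample='Role      | Connection String
* master  | host=10.10.10.50 dbname=repmgr user=repmgr
* master  | host=10.10.10.60 dbname=repmgr user=repmgr
  witness | host=10.10.10.70 dbname=repmgr user=repmgr port=5499'

masters=$(printf '%s\n' "$sample" | count_masters)
if [ "$masters" -gt 1 ]; then
    echo "split brain suspected: $masters nodes report as master"
fi
```

A check like this only reports what repmgr has recorded; it does not fix anything, so the old master still has to be re-cloned or kept out of service by hand.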

Solution 2

Often it's desirable to bring a failed master back into replication as a standby. First, ensure that the master's PostgreSQL server is no longer running; then use repmgr standby clone to re-sync its data directory with the current master, e.g.:

repmgr -f /etc/repmgr/repmgr.conf --force --rsync-only -h node2 -d repmgr -U repmgr --verbose standby clone

Here it's essential to use the command line options --force, to ensure repmgr will re-use the existing data directory, and --rsync-only, which causes repmgr to use rsync rather than pg_basebackup, as the latter can only be used to clone a fresh standby.

The node can then be restarted.

The node will then need to be re-registered with repmgr; again the --force option is required to update the existing record:

repmgr -f /etc/repmgr/9.5/repmgr.conf --force standby register
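The steps above can be wrapped in a small script so they are run in the right order. This is only a sketch under stated assumptions: the `DRY_RUN` switch, the `run` helper and the `clone`/`register`/`plan` arguments are illustrative and not part of repmgr, a single `REPMGR_CONF` path is used for both commands (the two commands above use different paths, so adjust it to your installation), and stopping and restarting PostgreSQL is left to whatever service tooling the node uses.

```shell
#!/bin/sh
# Sketch of the re-sync steps above, to be run on the failed (old) master.
# Assumptions, not from the answer: DRY_RUN, run(), and the step arguments.
REPMGR_CONF="${REPMGR_CONF:-/etc/repmgr/repmgr.conf}"
NEW_MASTER="${NEW_MASTER:-node2}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

resync_old_master() {
    # PostgreSQL on this node must already be stopped.
    run repmgr -f "$REPMGR_CONF" --force --rsync-only -h "$NEW_MASTER" \
        -d repmgr -U repmgr --verbose standby clone
}

reregister_standby() {
    # Run only after PostgreSQL has been restarted on this node.
    run repmgr -f "$REPMGR_CONF" --force standby register
}

case "${1:-plan}" in
    clone)    resync_old_master ;;
    register) reregister_standby ;;
    plan)     # print the whole sequence without executing anything
              DRY_RUN=1
              resync_old_master
              echo "+ (restart PostgreSQL on this node)"
              reregister_standby ;;
esac
```

Called without arguments, the script only prints the planned commands. Running it with `DRY_RUN=0` and the `clone` argument, restarting PostgreSQL, and then running it with `register` would perform the two repmgr steps for real.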

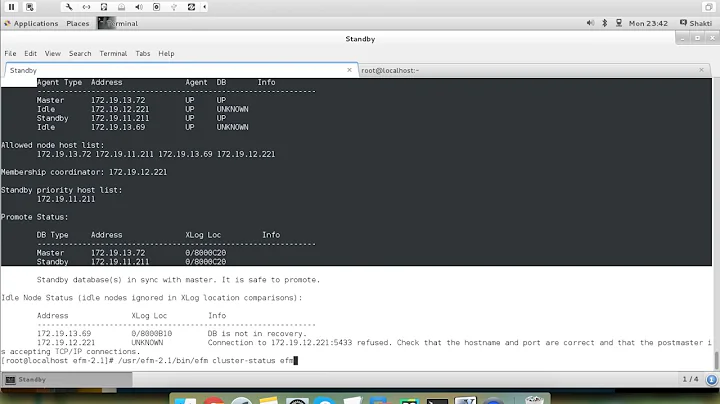

Related videos on Youtube

Admin

Updated on September 18, 2022


Admin over 1 year

I have a two-node PostgreSQL cluster configured through repmgr.

The database topology looks like this:

    db1 - 10.10.10.50 ( master )
    db2 - 10.10.10.60 ( standby )
    wit - 10.10.10.70 ( witness )

The creation of the cluster (as well as replication and automatic failover) works as expected, but the problem is the following.

Let's say that the db1 node in my cluster goes down; the expected behaviour is then that the db2 node gets promoted to a new master. That is all good and the logs prove it:

    [WARNING] connection to upstream has been lost, trying to recover... 60 seconds before failover decision
    [WARNING] connection to upstream has been lost, trying to recover... 50 seconds before failover decision
    [WARNING] connection to upstream has been lost, trying to recover... 40 seconds before failover decision
    [WARNING] connection to upstream has been lost, trying to recover... 30 seconds before failover decision
    [WARNING] connection to upstream has been lost, trying to recover... 20 seconds before failover decision
    [WARNING] connection to upstream has been lost, trying to recover... 10 seconds before failover decision
    [ERROR] unable to reconnect to upstream after 60 seconds...
    [ERROR] connection to database failed: could not connect to server: No route to host
        Is the server running on host "10.10.10.50" and accepting TCP/IP connections on port 5432?
    [ERROR] connection to database failed: could not connect to server: No route to host
        Is the server running on host "10.10.10.50" and accepting TCP/IP connections on port 5432?
    [NOTICE] promoting standby
    [NOTICE] promoting server using '/usr/lib/postgresql/9.3/bin/pg_ctl -D /var/lib/postgresql/9.3/main promote'
    [NOTICE] STANDBY PROMOTE successful. You should REINDEX any hash indexes you have.

The db2 node is now promoted to a new master, and it's all good, until the db1 node gets back up.

In that scenario, I expected db1 to become a new standby, but that is not the case: I end up with both nodes acting as master.

So my question is: after a failover, how can I prevent both nodes from acting as a master? The docs say to include a third node as a witness, which I have, but the desired effect is not there.

Here is an example of my repmgr.conf file:

    cluster=test_cluster
    node=1
    node_name=db1
    conninfo='host=10.10.10.50 dbname=repmgr user=repmgr'
    master_response_timeout=60
    reconnect_attempts=6
    reconnect_interval=10
    failover=automatic
    promote_command='repmgr standby promote -f /etc/repmgr/repmgr.conf'
    follow_command='repmgr standby follow -f /etc/repmgr/repmgr.conf'
    pg_bindir=/usr/lib/postgresql/9.3/bin

And the cluster state after the db1 node gets back up:

    repmgr -f /etc/repmgr/repmgr.conf cluster show

    Role      | Connection String
    * master  | host=10.10.10.50 dbname=repmgr user=repmgr
    * master  | host=10.10.10.60 dbname=repmgr user=repmgr
      witness | host=10.10.10.70 dbname=repmgr user=repmgr port=5499

Thanks a bunch,

Best regards

Admin over 8 years

Hi Nathan, thank you for your input. Yes, repmgr is working as expected other than in that one scenario (or is it just me?). My question is how to turn the old master into a standby automatically when it gets back online, without me having to type anything. The redirection is not important atm; it will be handled by pgbouncer later.


Nathan Foley over 8 years

Repmgr is working as expected in your case. Repmgr does not handle an old master coming back online and bringing it up as a replica. Would you really want it to? Bear in mind that this would involve dropping your database and copying over data from another server without user input.


Admin over 8 years

Ok, and is there a way I can somehow overcome that? Can I maybe demote the new master to standby again when the old master gets back online?


Nathan Foley over 8 years

Not in <=9.4; you can't stream changes from the "new_master" to the "old_master". Say your failed machine (old master) is node_1 and your new master is node_2. When node_1 is back up, you would need to drop the cluster on node_1, start streaming replication from node_2 to node_1 and wait for this to complete, shut down node_2, promote node_1, then drop the cluster on node_2 and start streaming replication from node_1 to node_2. Postgres 9.5 introduces "pg_rewind", so it will be much easier to achieve what you are looking for.
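For readers on 9.5 or later, the pg_rewind route mentioned above might look roughly like the following. This is a sketch rather than anything from the thread: the 9.5 data directory path, the reuse of the repmgr connection string, and the `print_rewind_cmd` helper are assumptions. pg_rewind itself needs the old master to be cleanly shut down and to have been running with `wal_log_hints = on` or data checksums, and the connecting user needs superuser rights on the new master.

```shell
#!/bin/sh
# Sketch only: build the pg_rewind command for a stopped old master (PostgreSQL 9.5+).
# Assumed values, adjust to your installation:
OLD_MASTER_PGDATA="${OLD_MASTER_PGDATA:-/var/lib/postgresql/9.5/main}"
NEW_MASTER_CONNINFO="${NEW_MASTER_CONNINFO:-host=10.10.10.60 dbname=repmgr user=repmgr}"

print_rewind_cmd() {
    # Optional $1 is an extra pg_rewind flag, e.g. --dry-run.
    # The command is printed, not executed, so it can be reviewed first.
    printf "pg_rewind --target-pgdata='%s' --source-server='%s' --progress%s\n" \
        "$OLD_MASTER_PGDATA" "$NEW_MASTER_CONNINFO" "${1:+ $1}"
}

# Show a dry-run invocation; pg_rewind --dry-run reports what would be
# done without modifying the target data directory.
print_rewind_cmd --dry-run
```

After a successful rewind, the node still has to be configured as a standby of the new master (on 9.5, via recovery.conf) before it is started again.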


Admin over 8 years

Ok, but for now, what can I do to overcome that 'split brain' situation? So basically the failover only works once and that's it (from the original master to the standby), and when the old master gets back up everything is screwed. Thank you for your elaborate answers.


Nathan Foley over 8 years

Avoid split brain by ensuring your failover solution sends all requests to your new master and does not return to the old master should it come back online. So we use repmgr to handle the promotion of slave to master, but then use pg_pool to send all database traffic to the "new master".


Admin over 8 years

Ok, and when the old master gets back online, I should manually re-add it as a new standby, right? Makes sense, lol. Thank you for your elaborate answers and help, it's much appreciated.
