Retrieve files from remote HDFS


Here are the steps:

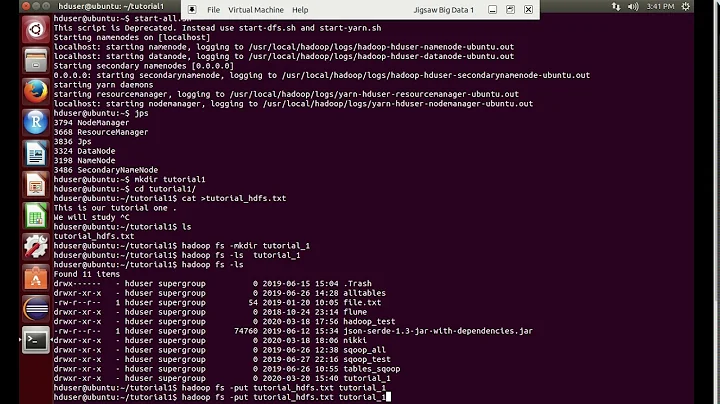

- Make sure there is network connectivity between your host and the target cluster.

- Configure your host as a client: install Hadoop binaries compatible with the cluster's version. Your host also needs to run the same operating system.

- Make sure you have the same configuration files as the cluster (core-site.xml, hdfs-site.xml).

- You can then run the `hadoop fs -get` command to copy the files directly to your host.
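The client setup above can be sketched as follows. This assumes compatible Hadoop binaries are on `PATH`, the cluster's `core-site.xml` and `hdfs-site.xml` have been copied into one local directory, and the cluster does not use Kerberos (otherwise a `kinit` is needed first). The function names and example paths are hypothetical. The directory is passed to `hadoop --config` so the command talks to the remote cluster.

```shell
#!/usr/bin/env bash
# Sketch of the "host as HDFS client" approach.
# Assumptions: compatible Hadoop binaries are on PATH, copies of the cluster's
# core-site.xml and hdfs-site.xml are in one local directory, no Kerberos.

# Succeed only if the directory holds both client config files.
has_client_conf() {
  local conf_dir="$1"
  [[ -f "$conf_dir/core-site.xml" && -f "$conf_dir/hdfs-site.xml" ]]
}

# Copy one HDFS path to the local filesystem using the given config dir.
fetch_hdfs_file() {
  local conf_dir="$1" hdfs_path="$2" local_path="$3"
  if ! has_client_conf "$conf_dir"; then
    echo "missing core-site.xml or hdfs-site.xml in $conf_dir" >&2
    return 1
  fi
  # --config makes hadoop read the copied cluster configuration, so
  # fs.defaultFS points at the remote NameNode.
  hadoop --config "$conf_dir" fs -get "$hdfs_path" "$local_path"
}

# Example (hypothetical paths):
# fetch_hdfs_file "$HOME/remote-cluster-conf" /user/alice/data.csv ./data.csv
```

Exporting `HADOOP_CONF_DIR` to point at the same directory has the same effect as passing `--config` on every call.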

There are also alternatives:

- If WebHDFS or HttpFS is configured, you can download files using curl or even your browser, and you can script those downloads in bash.
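The WebHDFS route could look roughly like this. It is a sketch assuming WebHDFS is enabled, the cluster uses simple (non-Kerberos) authentication, and the NameNode HTTP port is 9870, the Hadoop 3 default (Hadoop 2 used 50070; HttpFS listens on 14000 by default). The function names, host, user, and paths are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: download a file through the WebHDFS REST API with curl.
# Assumptions: WebHDFS enabled, simple auth via user.name, absolute HDFS
# paths containing no characters that need URL-encoding.

# Build the OPEN URL for an absolute HDFS path.
webhdfs_open_url() {
  local host="$1" port="$2" hdfs_path="$3" user="$4"
  printf 'http://%s:%s/webhdfs/v1%s?op=OPEN&user.name=%s\n' \
    "$host" "$port" "$hdfs_path" "$user"
}

# Fetch the file: -L follows the redirect to a DataNode, -f fails on
# HTTP errors, -sS hides progress but still reports errors.
webhdfs_get() {
  local host="$1" port="$2" hdfs_path="$3" user="$4" local_path="$5"
  curl -fsSL -o "$local_path" \
    "$(webhdfs_open_url "$host" "$port" "$hdfs_path" "$user")"
}

# Example (hypothetical host, user, and paths):
# webhdfs_get namenode.example.com 9870 /user/alice/data.csv alice ./data.csv
```

With WebHDFS, the NameNode answers an `OPEN` request with a redirect to a DataNode, so your host must be able to resolve and reach the DataNode hostnames. HttpFS serves the data itself, so only the HttpFS host needs to be reachable.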

If your host cannot have the Hadoop binaries installed to act as a client, you can use the following instructions instead.

- Enable passwordless (key-based) SSH login from your host to one of the nodes in the cluster.

- Run `ssh <user>@<host> "hadoop fs -get <hdfs_path> <os_path>"`, then use `scp` to copy the files from that node to your host.

- You can put these two commands in one script.
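The two commands can be combined into one script roughly like this. It is a sketch assuming passwordless SSH already works (for example set up with `ssh-keygen` and `ssh-copy-id`), `hadoop` and `mktemp` are on the remote user's `PATH`, and paths contain no single quotes. The function names and example host are hypothetical. It stages the data in a temporary directory on the node and removes that directory after the copy.

```shell
#!/usr/bin/env bash
# Sketch: pull an HDFS file or directory to this host via a cluster node.
# Assumptions: key-based SSH to the node works, `hadoop` and `mktemp` are on
# the remote PATH, and paths contain no single quotes.

# Build the command run on the node: copy the HDFS path into a staging dir.
build_remote_get() {
  local hdfs_path="$1" stage_dir="$2"
  printf "hadoop fs -get '%s' '%s/'\n" "$hdfs_path" "$stage_dir"
}

fetch_via_node() {
  local node="$1" hdfs_path="$2" local_path="$3"
  local stage name status=0
  name="$(basename "$hdfs_path")"
  # Create a temporary staging directory on the node's local disk.
  stage="$(ssh "$node" 'mktemp -d')" || return 1
  # Step 1: on the node, copy the data out of HDFS into the staging dir.
  # Step 2: if that worked, copy the staged data from the node to this host.
  ssh "$node" "$(build_remote_get "$hdfs_path" "$stage")" &&
    scp -r "$node:$stage/$name" "$local_path" || status=1
  # Step 3: remove the staging directory on the node either way.
  ssh "$node" "rm -rf '$stage'"
  return "$status"
}

# Example (hypothetical user, host, and paths):
# fetch_via_node alice@edge-node.example.com /user/alice/data.csv ./data.csv
```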


Author: savx2

Updated on September 14, 2022

Comments


savx2

My local machine does not have an HDFS installation. I want to retrieve files from a remote HDFS cluster. What's the best way to achieve this? Do I need to `get` the files from HDFS to the local filesystem of one of the cluster machines and then use ssh to retrieve them? I want to be able to do this programmatically, say through a bash script.