ruby 1.9: invalid byte sequence in UTF-8

Solution 1

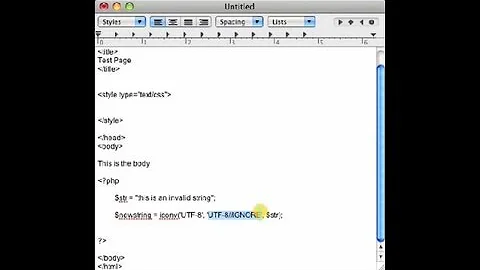

In Ruby 1.9.3 it is possible to use String.encode to "ignore" the invalid UTF-8 sequences. Here is a snippet that will work both in 1.8 (iconv) and 1.9 (String#encode) :

require 'iconv' unless String.method_defined?(:encode)

if String.method_defined?(:encode)

file_contents.encode!('UTF-8', 'UTF-8', :invalid => :replace)

else

ic = Iconv.new('UTF-8', 'UTF-8//IGNORE')

file_contents = ic.iconv(file_contents)

end

or if you have really troublesome input you can do a double conversion from UTF-8 to UTF-16 and back to UTF-8:

require 'iconv' unless String.method_defined?(:encode)

if String.method_defined?(:encode)

file_contents.encode!('UTF-16', 'UTF-8', :invalid => :replace, :replace => '')

file_contents.encode!('UTF-8', 'UTF-16')

else

ic = Iconv.new('UTF-8', 'UTF-8//IGNORE')

file_contents = ic.iconv(file_contents)

end

Solution 2

The accepted answer nor the other answer work for me. I found this post which suggested

string.encode!('UTF-8', 'binary', invalid: :replace, undef: :replace, replace: '')

This fixed the problem for me.

Solution 3

My current solution is to run:

my_string.unpack("C*").pack("U*")

This will at least get rid of the exceptions which was my main problem

Solution 4

Try this:

def to_utf8(str)

str = str.force_encoding('UTF-8')

return str if str.valid_encoding?

str.encode("UTF-8", 'binary', invalid: :replace, undef: :replace, replace: '')

end

Solution 5

I recommend you to use a HTML parser. Just find the fastest one.

Parsing HTML is not as easy as it may seem.

Browsers parse invalid UTF-8 sequences, in UTF-8 HTML documents, just putting the "�" symbol. So once the invalid UTF-8 sequence in the HTML gets parsed the resulting text is a valid string.

Even inside attribute values you have to decode HTML entities like amp

Here is a great question that sums up why you can not reliably parse HTML with a regular expression: RegEx match open tags except XHTML self-contained tags

Related videos on Youtube

Comments

-

Marc Seeger almost 2 years

I'm writing a crawler in Ruby (1.9) that consumes lots of HTML from a lot of random sites.

When trying to extract links, I decided to just use.scan(/href="(.*?)"/i)instead of nokogiri/hpricot (major speedup). The problem is that I now receive a lot of "invalid byte sequence in UTF-8" errors.

From what I understood, thenet/httplibrary doesn't have any encoding specific options and the stuff that comes in is basically not properly tagged.

What would be the best way to actually work with that incoming data? I tried.encodewith the replace and invalid options set, but no success so far...-

Marc Seeger over 12 yearssomething that might break characters, but keeps the string valid for other libraries: valid_string = untrusted_string.unpack(‘C*’).pack(‘U*’)

-

Jordan Feldstein over 12 yearsHaving the exact issue, tried the same other solutions. No love. Tried Marc's, but it seems to garble everything. Are you sure

'U*'undoes'C*'? -

Marc Seeger over 11 yearsNo, it does not :) I just used that in a webcrawler where I care about 3rd party libraries not crashing more than I do about a sentence here and there.

-

-

Marc Seeger almost 14 yearsthanks, but that's not my problem :) I only extract the host part of the URL anyway and hit only the front page. My problem is that my input apparently isn't UTF-8 and the 1.9 encoding foo goes haywire

-

Adrian almost 14 years@Marc Seeger: What do you mean by "my input"? Stdin, the URL, or the page body?

-

Eduardo almost 14 yearsHTML can be encoded in UTF-8: en.wikipedia.org/wiki/Character_encodings_in_HTML

-

Marc Seeger almost 14 yearsmy input = the page body @Eduardo: I know. My problem is that the data coming from net/http seems to have a bad encoding from time to time

-

Marc Seeger almost 14 yearsI'd love to keep the regexp since it's about 10 times faster and I really don't want to parse the html correctly but just want to extract links. I should be able to replace the invalid parts in ruby by just doing: ok_string = bad_string.encode("UTF-8", {:invalid => :replace, :undef => :replace}) but that doesn't seem to work :(

-

sunkencity over 12 yearsIt's not uncommon for webpages to actually have bad encoding for real. The response header might say it's one encoding but then actually serving another encoding.

-

Nakilon over 12 yearsI've compared with my solution and found, that mine loses some letters, at least

ё:"Alena V.\". While your solution keeps it:"Ale\u0308na V.\". Nice. -

RubenLaguna over 12 yearsWith some problematic input I also use a double conversion from UTF-8 to UTF-16 and then back to UTF-8

file_contents.encode!('UTF-16', 'UTF-8', :invalid => :replace, :replace => '')file_contents.encode!('UTF-8', 'UTF-16') -

Aaron Gibralter over 12 yearsI'm using this method in combination with

valid_encoding?which seems to detect when something is wrong.val.unpack('C*').pack('U*') if !val.valid_encoding?. -

RubenLaguna about 12 yearsThere is also the option of

force_encoding. If you have a read a ISO8859-1 as an UTF-8 (and thus that string contains invalid UTF-8) then you can "reinterpret" it as ISO8859-1 with the_string.force_encoding("ISO8859-1") and just work with that string in its real encoding. -

johnf about 12 yearsThat double encode trick just saved my Bacon! I wonder why it is required though?

-

nnyby about 12 yearsI'm using this on my mysql database of Apple's affiliate feed for app store data. The double encode works! But the formatting on the app descriptions is messed up now :/

nnyby about 12 yearsI'm using this on my mysql database of Apple's affiliate feed for app store data. The double encode works! But the formatting on the app descriptions is messed up now :/ -

Lefsler over 11 yearsWhere should i put those lines?

-

boulder_ruby over 11 yearsAgain, I just want to note that while Nakilon's (and others) fix was for Cyrillic characters originating from (haha) Cyrillia, this output is standard output for a csv which was converted from xls!

-

Jo Hund over 10 yearsI think the double conversion works because it forces an encoding conversion (and with it the check for invalid characters). If the source string is already encoded in UTF-8, then just calling

.encode('UTF-8')is a no-op, and no checks are run. Ruby Core Documentation for encode. However, converting it to UTF-16 first forces all the checks for invalid byte sequences to be run, and replacements are done as needed. -

gtd about 10 yearsIf you want an example string for which the double conversion is required, here's one I have

gtd about 10 yearsIf you want an example string for which the double conversion is required, here's one I haveURI.decode("%E2%EF%BF%BD%A6-invalid"). -

La-comadreja about 10 yearsThis fixed the problem for me and I like using non-deprecated methods (I have Ruby 2.0 now).

-

hamstar over 9 yearsThis one worked for me. Successfully converts my

\xB0back to degrees symbols. Even thevalid_encoding?comes back true but I still check if it doesn't and strip out the offending characters using Amir's answer above:string.encode!('UTF-8', 'binary', invalid: :replace, undef: :replace, replace: ''). I had also tried theforce_encodingroute but that failed. -

d_ethier over 8 yearsThis is great. Thanks.

-

Chihung Yu over 8 yearsThis one is the only one that works! I have tried all of the above solution, none of them work String that used in testing "fdsfdsf dfsf sfds fs sdf <div>hello<p>fooo??? {!@#$%^&*()_+}</p></div> \xEF\xBF\xBD \xef\xbf\x9c <div>\xc2\x90</div> \xc2\x90"

-

Aldo over 8 yearsBest answer for my case! Thanks

-

Henley over 5 yearsWhat is the second argument 'binary' for?