Running airflow tasks/dags in parallel

Solution 1

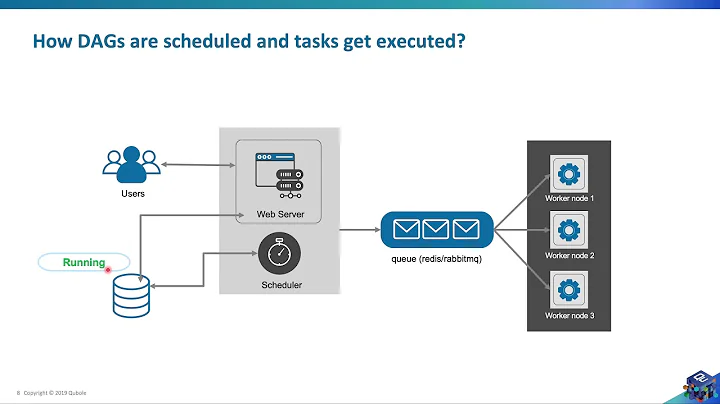

You will need to use LocalExecutor.

Check your config (airflow.cfg); you might be using SequentialExecutor, which executes tasks serially.

Airflow uses a backend database to store metadata. Check your airflow.cfg file and look for the executor keyword. By default, Airflow uses SequentialExecutor, which executes tasks sequentially no matter what. To allow Airflow to run tasks in parallel, create a database in Postgres or MySQL and configure it in airflow.cfg (the sql_alchemy_conn parameter), then change your executor to LocalExecutor in airflow.cfg, and finally run airflow initdb (airflow db init in Airflow 2.x).

Note that for using LocalExecutor you would need to use Postgres or MySQL instead of SQLite as a backend database.
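As a rough illustration of the check described above, here is a minimal Python sketch that parses an airflow.cfg-style text and flags the two settings that prevent parallel runs. The function name `check_parallel_ready` and the sample values are hypothetical, and the sketch assumes `executor` lives in the `[core]` section and `sql_alchemy_conn` in either `[core]` or `[database]` (newer releases); adjust to your own file.

```python
import configparser

# Hypothetical sample mirroring a default-style config (values are assumptions).
SAMPLE_CFG = """
[core]
executor = SequentialExecutor
sql_alchemy_conn = sqlite:////tmp/airflow/airflow.db
"""


def check_parallel_ready(cfg_text):
    """Return a list of reasons why this config cannot run tasks in parallel."""
    # Disable interpolation so '%' characters in real config values are left alone.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)

    executor = parser.get("core", "executor", fallback="SequentialExecutor")
    # Assumption: look in [database] first, then fall back to [core].
    conn = parser.get(
        "database",
        "sql_alchemy_conn",
        fallback=parser.get("core", "sql_alchemy_conn", fallback=""),
    )

    problems = []
    if executor == "SequentialExecutor":
        problems.append("executor is SequentialExecutor; tasks run one at a time")
    if conn.startswith("sqlite"):
        problems.append("metadata database is SQLite; use Postgres or MySQL")
    return problems


if __name__ == "__main__":
    for problem in check_parallel_ready(SAMPLE_CFG):
        print(problem)
```

An empty list means neither of the two blockers mentioned in this answer was found; it does not validate the rest of the configuration.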

More info: https://airflow.incubator.apache.org/howto/initialize-database.html

If you want to take a real test drive of Airflow, you should consider setting up a real database backend and switching to the LocalExecutor. As Airflow was built to interact with its metadata using the great SqlAlchemy library, you should be able to use any database backend supported as a SqlAlchemy backend. We recommend using MySQL or Postgres.

Solution 2

Try:

etl_internal_sub_dag3 >> [etl_adzuna_sub_dag, etl_adwords_sub_dag, etl_facebook_sub_dag, etl_pagespeed_sub_dag]

[etl_adzuna_sub_dag, etl_adwords_sub_dag, etl_facebook_sub_dag, etl_pagespeed_sub_dag] >> etl_combine_sub_dag
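To make clear what the list syntax expresses, here is a toy stand-in that can be run without an Airflow install. The `Task` class is hypothetical and is not Airflow's implementation; it only mimics how `>>` with a list records a fan-out and a fan-in, using the subdag names from the question.

```python
class Task:
    """Toy stand-in for an operator; records upstream/downstream names only."""

    def __init__(self, name):
        self.name = name
        self.upstream = set()
        self.downstream = set()

    def _set_downstream(self, others):
        for other in others:
            self.downstream.add(other.name)
            other.upstream.add(self.name)

    def __rshift__(self, other):
        # Handles `task >> task` and `task >> [task, ...]` (fan-out).
        others = other if isinstance(other, list) else [other]
        self._set_downstream(others)
        return other

    def __rrshift__(self, other):
        # Handles `[task, ...] >> task` (fan-in): lists have no `>>`,
        # so Python falls back to the right-hand operand.
        for upstream_task in other:
            upstream_task._set_downstream([self])
        return self


start = Task("etl_internal_sub_dag3")
branches = [
    Task("etl_adzuna_sub_dag"),
    Task("etl_adwords_sub_dag"),
    Task("etl_facebook_sub_dag"),
    Task("etl_pagespeed_sub_dag"),
]
combine = Task("etl_combine_sub_dag")

# Same shape as the two lines above, chained into one expression.
start >> branches >> combine

print(sorted(combine.upstream))
```

The list form declares the same dependencies as the eight separate lines in the question; whether the four branches actually run at the same time still depends on the executor.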

Solution 3

One simple way to declare tasks that can run in parallel is to put them in a list, using [ ] brackets.

For example:

task_start >> [task_get_users, task_get_posts, task_get_comments, task_get_todos]

For more information, you can read this article on Towards Data Science.


Mr. President

Updated on July 09, 2022

Comments


Mr. President (almost 2 years): I'm using airflow to orchestrate some python scripts. I have a "main" dag from which several subdags are run. My main dag is supposed to run according to the following overview:

I've managed to get to this structure in my main dag by using the following lines:

etl_internal_sub_dag1 >> etl_internal_sub_dag2 >> etl_internal_sub_dag3
etl_internal_sub_dag3 >> etl_adzuna_sub_dag
etl_internal_sub_dag3 >> etl_adwords_sub_dag
etl_internal_sub_dag3 >> etl_facebook_sub_dag
etl_internal_sub_dag3 >> etl_pagespeed_sub_dag
etl_adzuna_sub_dag >> etl_combine_sub_dag
etl_adwords_sub_dag >> etl_combine_sub_dag
etl_facebook_sub_dag >> etl_combine_sub_dag
etl_pagespeed_sub_dag >> etl_combine_sub_dag

What I want airflow to do is to first run the etl_internal_sub_dag1, then the etl_internal_sub_dag2, and then the etl_internal_sub_dag3. When the etl_internal_sub_dag3 is finished, I want etl_adzuna_sub_dag, etl_adwords_sub_dag, etl_facebook_sub_dag, and etl_pagespeed_sub_dag to run in parallel. Finally, when these last four scripts are finished, I want the etl_combine_sub_dag to run.

However, when I run the main dag, etl_adzuna_sub_dag, etl_adwords_sub_dag, etl_facebook_sub_dag, and etl_pagespeed_sub_dag are run one by one and not in parallel.

Question: How do I make sure that the scripts etl_adzuna_sub_dag, etl_adwords_sub_dag, etl_facebook_sub_dag, and etl_pagespeed_sub_dag are run in parallel?

Edit: My default_args and DAG look like this:

default_args = {
    'owner': 'airflow',
    'depends_on_past': False,
    'start_date': start_date,
    'end_date': end_date,
    'email': ['[email protected]'],
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 0,
    'retry_delay': timedelta(minutes=5),
}

DAG_NAME = 'main_dag'

dag = DAG(DAG_NAME, default_args=default_args, catchup=False)

Mendhak (over 5 years): Mr. President, can you edit your post and add what your DAG(...) and default_args look like?

Mr. President (over 5 years): @Mendhak Yes, I edited my question!

Mr. President (over 5 years): Thank you for your answer! Could you elaborate a bit more on what you mean? The subdags are using a mysql database but I'm not sure whether that's what you mean. Can I just change executor = SequentialExecutor to executor = LocalExecutor?

kaxil (over 5 years): Not subdags. Airflow uses a backend database to store metadata. Check your airflow.cfg file and look for the executor keyword. By default, Airflow uses SequentialExecutor, which executes tasks sequentially no matter what. So to allow Airflow to run tasks in parallel, you will need to create a database in Postgres or MySQL, configure it in airflow.cfg (sql_alchemy_conn param), and then change your executor to LocalExecutor.

Mr. President (over 5 years): All right, I think I understand what you mean! Isn't there a way to do so without creating a new database though? And what's the reason that it isn't possible with the default sqlite setup?

Mr. President (over 5 years): And one more thing; could you show me where you read that you need to use mysql or postgres in order to use LocalExecutor? Because I can't find that anywhere :)

kaxil (over 5 years): SQLite only supports 1 connection at a time. It doesn't support more than 1 connection. Hence, you need to use a different database like Postgres or MySQL. airflow.incubator.apache.org/howto/initialize-database.html - Check this link where we recommend using it. I am one of the committers to the Airflow project and based on my experience Postgres would be the best option. :)

Admin (over 2 years): Please provide additional details in your answer. As it's currently written, it's hard to understand your solution.
