Running thousands of curl background processes in parallel in bash script

Solution 1

Following the question strictly:

mycurl() {

START=$(date +%s)

curl -s "http://some_url_here/$1" > "$1.txt"

END=$(date +%s)

DIFF=$(( $END - $START ))

echo "It took $DIFF seconds"

}

export -f mycurl

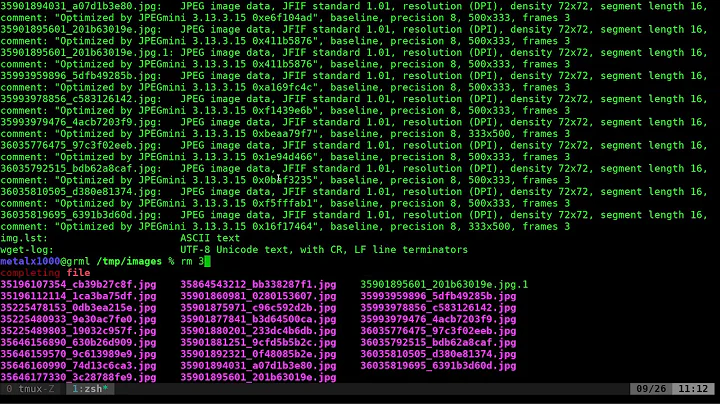

seq 100000 | parallel -j0 mycurl

Shorter if you do not need the boilerplate text around the timings:

seq 100000 | parallel -j0 --joblog log curl -s http://some_url_here/{} ">" {}.txt

cut -f 4 log
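The cut command prints the raw per-job runtimes, since field 4 of a GNU parallel joblog is the JobRuntime column. If a summary is more useful than 100000 separate numbers, something like the following may help. This is a minimal sketch, assuming the joblog is tab-separated with one header row; summarize_joblog is a hypothetical helper name, not part of GNU parallel.

```shell
#!/usr/bin/env bash
# Summarise the JobRuntime column (field 4) of a GNU parallel joblog.
# summarize_joblog is a hypothetical helper name.
summarize_joblog() {
  awk -F'\t' '
    NR == 1 { next }                          # skip the header row
    { sum += $4; if ($4 > max) max = $4; n++ }
    END {
      if (n) printf "%d jobs, mean %.3f s, max %.3f s\n", n, sum / n, max
      else   print "no jobs in log"
    }
  ' "$1"
}

# Usage, once the parallel command above has written ./log:
#   summarize_joblog log
```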

If you want to run thousands of jobs in parallel you will hit some limits (such as file handles). Raising the limit with ulimit -n or in /etc/security/limits.conf may help.
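To see where the file-handle limit currently stands before raising it, something like the following can be used. This is a minimal sketch; show_limits is a hypothetical helper name, and the values printed depend entirely on the machine.

```shell
#!/usr/bin/env bash
# Print the soft and hard limits on open file descriptors for this shell.
# show_limits is a hypothetical helper name.
show_limits() {
  echo "open files (soft): $(ulimit -S -n)"
  echo "open files (hard): $(ulimit -H -n)"
}

show_limits
```

The soft limit can be raised up to the hard limit for the current shell with ulimit -n; going beyond the hard limit needs a change such as the limits.conf one mentioned above.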

Solution 2

for i in {1..100000}

There are only 65536 ports. Throttle this.

for n in {1..100000..1000}; do # start 100 fetch loops in the background

for i in $(seq "$n" "$((n+999))"); do

echo "club $i..."

curl -s "http://some_url_here/$i" > "$i.txt"

done &

done

wait # block until all 100 fetch loops have finished

(edit: echo → curl)

(edit: strip severely dated assertion about OS limits and add the missing wait)
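Another way to throttle, instead of splitting the range into fixed chunks, is to cap how many jobs run at once. The following is a minimal sketch, assuming bash 4.3 or newer (for wait -n); run_throttled and fetch_one are hypothetical helper names, and some_url_here is the placeholder host from the question.

```shell
#!/usr/bin/env bash
# Keep at most max_jobs workers running at any time.
# run_throttled and fetch_one are hypothetical helper names.

fetch_one() {
  # Same request as in the loops above; some_url_here is a placeholder.
  curl -s "http://some_url_here/$1" > "$1.txt"
}

run_throttled() {
  local max_jobs=$1 first=$2 last=$3 worker=$4
  local i running=0
  for ((i = first; i <= last; i++)); do
    if (( running >= max_jobs )); then
      wait -n                      # block until any one job exits
      running=$((running - 1))
    fi
    "$worker" "$i" &               # start the next job in the background
    running=$((running + 1))
  done
  wait                             # let the remaining jobs finish
}

# Usage (not run here, since it would start the downloads):
#   run_throttled 100 1 100000 fetch_one
```

Compared with the fixed chunks, a slow request only holds up its own slot rather than a whole batch of 1000.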


zavg

Updated on September 18, 2022

Comments

-

zavg over 1 year

I am running thousands of curl background processes in parallel in the following bash script

START=$(date +%s)

for i in {1..100000}

do

curl -s "http://some_url_here/"$i > $i.txt&

END=$(date +%s)

DIFF=$(( $END - $START ))

echo "It took $DIFF seconds"

done

I have a 49 GB Core i7-920 dedicated server (not virtual).

I track memory consumption and CPU through the top command and they are far away from their bounds.

I am using ps aux | grep curl | wc -l to count the number of current curl processes. This number increases rapidly up to 2-4 thousand and then starts to continuously decrease.

If I add simple parsing by piping curl to awk (curl | awk > output), then the number of curl processes rises only to 1-2 thousand and then decreases to 20-30...

Why does the number of processes decrease so dramatically? Where are the bounds of this architecture?

-

Admin, over 10 years: You're probably hitting one of the limits of max running processes or max open sockets. ulimit will show some of those limits.

Admin, over 10 years: I also would suggest using parallel(1) for such tasks: manpages.debian.org/cgi-bin/…

Admin, over 10 years: Try start=$SECONDS and end=$SECONDS, and use lower case or mixed case variable names by habit in order to avoid potential name collision with shell variables. However, you're really only getting the ever-increasing time interval of the starting of each process. You're not getting how long the download took since the process is in the background (and start is only calculated once). In Bash, you can do (( diff = end - start )), dropping the dollar signs and allowing the spacing to be more flexible. Use pgrep if you have it.
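The timing advice in this comment can be sketched as follows: take both readings of SECONDS inside the background job, so each figure covers that job alone. This is a minimal sketch, assuming bash; timed is a hypothetical helper name, and sleep stands in for the download so the example runs without a network.

```shell
#!/usr/bin/env bash
# Time a command from inside the job that runs it.
# timed is a hypothetical helper name.
timed() {
  local start=$SECONDS
  "$@"                             # run the command being timed
  local end=$SECONDS diff
  (( diff = end - start ))         # no dollar signs needed inside (( ))
  echo "It took $diff seconds: $*"
}

# Stand-in workload; for the question's case the call would be something like
#   timed curl -s -o "$i.txt" "http://some_url_here/$i" &
for i in 1 2 3; do
  timed sleep 1 &
done
wait                               # wait for all background jobs
```

SECONDS has whole-second resolution, so short requests will mostly report 0 or 1.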

Admin, over 10 years: I agree with HBruijn. Notice how your process count is halved when you double the number of processes (by adding awk).

Admin, over 10 years: @zhenech @HBrujin I launched parallel and it tells me that I can run only 500 parallel tasks due to the system limit on file handles. I raised the limit in limits.conf, but now when I try to run 5000 simultaneous jobs it instantly eats all my memory (49 GB) even before starting, because every parallel perl script eats 32 MB.

Admin, about 9 years: Original question didn't specify how long a single request takes... what if the earlier instances have completed?

Admin, over 8 years: Why don't you use ab (apache benchmark)? You can set any concurrency.

-

phemmer, over 10 years: Actually the OS can handle this just fine. This is a limitation of TCP. No OS, no matter how special, will be able to get around it. But the OP's 4k connections are nowhere near 64k (or the 32k default of some distros).

-

Guy Avraham, over 6 years: And if I wish to run several commands like the one in the short answer version in parallel, how do I do that?

Ole Tange, over 6 years: Quote it: seq 100 | parallel 'echo here is command 1: {}; echo here is command 2: {}'. Spend an hour walking through the tutorial. Your command line will love you for it: man parallel_tutorial