Scrapy - Extract items from table

Solution 1

You need to slightly correct your code. Since you already select all rows within the table, you don't need to point to the table again. You can shorten your XPath to something relative like this: `td[1]//text()`.

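```python
def parse_products(self, response):
    # Note: Scrapy only calls this method on its own if it is named parse
    # or is passed as a Request callback.
    products = response.xpath('//*[@id="Year1"]/table//tr')
    # ignore the table header row
    for product in products[1:]:
        # Schooldates1Item is the project's item class (SchoolDates_1.items)
        item = Schooldates1Item()
        item['hol'] = product.xpath('td[1]//text()').extract_first()
        item['first'] = product.xpath('td[2]//text()').extract_first()
        item['last'] = product.xpath('td[3]//text()').extract_first()
        yield item
```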

Edited my answer since @stutray provided a link to the site.

Solution 2

You can use CSS selectors instead of XPath; I always find CSS selectors easier.

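```python
def parse_products(self, response):
    # Select every row of the #Y1 table; [1:] skips the header row.
    for product in response.css("#Y1 table tr")[1:]:
        item = Schooldates1Item()
        # "td:nth-child(N) ::text" (with a space) also matches text nested in <b>
        item['hol'] = product.css('td:nth-child(1) ::text').extract_first()
        item['first'] = product.css('td:nth-child(2) ::text').extract_first()
        item['last'] = product.css('td:nth-child(3) ::text').extract_first()
        yield item
```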

Also, do not use the `tbody` tag in selectors. From the Scrapy documentation:

Firefox, in particular, is known for adding `<tbody>` elements to tables. Scrapy, on the other hand, does not modify the original page HTML, so you won’t be able to extract any data if you use `<tbody>` in your XPath expressions.


stonk

Updated on September 15, 2022


stonk over 1 year

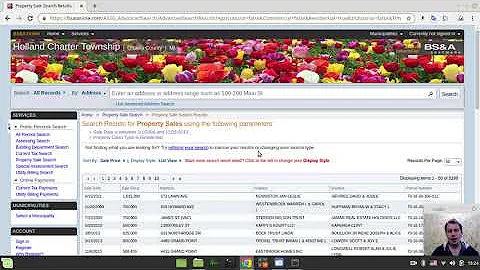

Trying to get my head around Scrapy but hitting a few dead ends.

I have two tables on a page and would like to extract the data from each one, then move on to the next page.

The tables look like this (the first one is called Y1, the second Y2), and their structures are the same.

```html
<div id="Y1" style="margin-bottom: 0px; margin-top: 15px;">
  <h2>First information</h2>
  <hr style="margin-top: 5px; margin-bottom: 10px;">
  <table class="table table-striped table-hover table-curved">
    <thead>
      <tr>
        <th class="tCol1" style="padding: 10px;">First Col Head</th>
        <th class="tCol2" style="padding: 10px;">Second Col Head</th>
        <th class="tCol3" style="padding: 10px;">Third Col Head</th>
      </tr>
    </thead>
    <tbody>
      <tr>
        <td>Info 1</td>
        <td>Monday 5 September, 2016</td>
        <td>Friday 21 October, 2016</td>
      </tr>
      <tr class="vevent">
        <td class="summary"><b>Info 2</b></td>
        <td class="dtstart" timestamp="1477094400"><b></b></td>
        <td class="dtend" timestamp="1477785600"> <b>Sunday 30 October, 2016</b></td>
      </tr>
      <tr>
        <td>Info 3</td>
        <td>Monday 31 October, 2016</td>
        <td>Tuesday 20 December, 2016</td>
      </tr>
      <tr class="vevent">
        <td class="summary"><b>Info 4</b></td>
        <td class="dtstart" timestamp="1482278400"><b>Wednesday 21 December, 2016</b></td>
        <td class="dtend" timestamp="1483315200"> <b>Monday 2 January, 2017</b></td>
      </tr>
    </tbody>
  </table>
```

As you can see, the structure is a little inconsistent, but as long as I can get each td and output it to CSV, I'll be a happy guy.

I tried using XPath, but this only confused me more.

My last attempt:

```python
import scrapy


class myScraperSpider(scrapy.Spider):
    name = "myScraper"
    allowed_domains = ["mysite.co.uk"]
    start_urls = (
        'https://mysite.co.uk/page1/',
    )

    def parse_products(self, response):
        products = response.xpath('//*[@id="Y1"]/table')
        # ignore the table header row
        for product in products[1:]:
            item = Schooldates1Item()
            item['hol'] = product.xpath('//*[@id="Y1"]/table/tbody/tr[1]/td[1]').extract()[0]
            item['first'] = product.xpath('//*[@id="Y1"]/table/tbody/tr[1]/td[2]').extract()[0]
            item['last'] = product.xpath('//*[@id="Y1"]/table/tbody/tr[1]/td[3]').extract()[0]
            yield item
```

No errors here, but it just fires back lots of information about the crawl and no actual results.

Update:

```python
import scrapy


class SchoolSpider(scrapy.Spider):
    name = "school"
    allowed_domains = ["termdates.co.uk"]
    start_urls = (
        'https://termdates.co.uk/school-holidays-16-19-abingdon/',
    )

    def parse_products(self, response):
        products = sel.xpath('//*[@id="Year1"]/table//tr')
        for p in products[1:]:
            item = dict()
            item['hol'] = p.xpath('td[1]/text()').extract_first()
            item['first'] = p.xpath('td[1]/text()').extract_first()
            item['last'] = p.xpath('td[1]/text()').extract_first()
            yield item
```

This gives me: `IndentationError: unexpected indent`

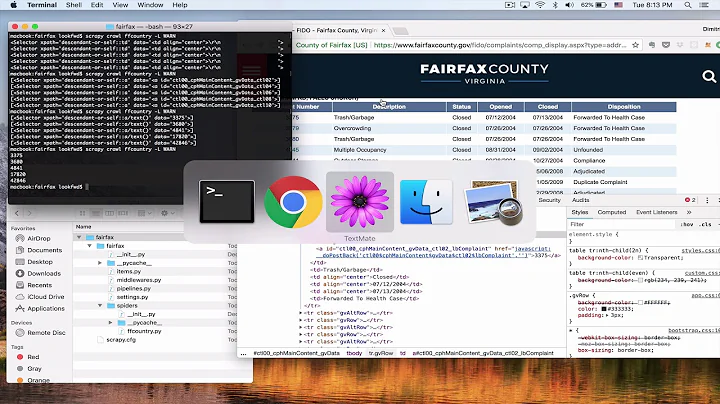

If I run the amended script below (thanks to @Granitosaurus) with CSV output (`-o schoolDates.csv`), I get an empty file:

```python
import scrapy


class SchoolSpider(scrapy.Spider):
    name = "school"
    allowed_domains = ["termdates.co.uk"]
    start_urls = ('https://termdates.co.uk/school-holidays-16-19-abingdon/',)

    def parse_products(self, response):
        products = sel.xpath('//*[@id="Year1"]/table//tr')
        for p in products[1:]:
            item = dict()
            item['hol'] = p.xpath('td[1]/text()').extract_first()
            item['first'] = p.xpath('td[1]/text()').extract_first()
            item['last'] = p.xpath('td[1]/text()').extract_first()
            yield item
```

This is the log:

```
2017-03-23 12:04:08 [scrapy.core.engine] INFO: Spider opened
2017-03-23 12:04:08 [scrapy.extensions.logstats] INFO: Crawled 0 pages (at 0 pages/min), scraped 0 items (at 0 items/min)
2017-03-23 12:04:08 [scrapy.extensions.telnet] DEBUG: Telnet console listening on ...
2017-03-23 12:04:08 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://termdates.co.uk/robots.txt> (referer: None)
2017-03-23 12:04:08 [scrapy.core.engine] DEBUG: Crawled (200) <GET https://termdates.co.uk/school-holidays-16-19-abingdon/> (referer: None)
2017-03-23 12:04:08 [scrapy.core.scraper] ERROR: Spider error processing <GET https://termdates.co.uk/school-holidays-16-19-abingdon/> (referer: None)
Traceback (most recent call last):
  File "c:\python27\lib\site-packages\twisted\internet\defer.py", line 653, in _runCallbacks
    current.result = callback(current.result, *args, **kw)
  File "c:\python27\lib\site-packages\scrapy-1.3.3-py2.7.egg\scrapy\spiders\__init__.py", line 76, in parse
    raise NotImplementedError
NotImplementedError
2017-03-23 12:04:08 [scrapy.core.engine] INFO: Closing spider (finished)
2017-03-23 12:04:08 [scrapy.statscollectors] INFO: Dumping Scrapy stats:
{'downloader/request_bytes': 467,
 'downloader/request_count': 2,
 'downloader/request_method_count/GET': 2,
 'downloader/response_bytes': 11311,
 'downloader/response_count': 2,
 'downloader/response_status_count/200': 2,
 'finish_reason': 'finished',
 'finish_time': datetime.datetime(2017, 3, 23, 12, 4, 8, 845000),
 'log_count/DEBUG': 3,
 'log_count/ERROR': 1,
 'log_count/INFO': 7,
 'response_received_count': 2,
 'scheduler/dequeued': 1,
 'scheduler/dequeued/memory': 1,
 'scheduler/enqueued': 1,
 'scheduler/enqueued/memory': 1,
 'spider_exceptions/NotImplementedError': 1,
 'start_time': datetime.datetime(2017, 3, 23, 12, 4, 8, 356000)}
2017-03-23 12:04:08 [scrapy.core.engine] INFO: Spider closed (finished)
```

Update 2 (skips rows): This pushes results to the CSV file but skips every other row.

The shell shows `{'hol': None, 'last': u'\r\n\t\t\t\t\t\t\t\t', 'first': None}`

```python
import scrapy


class SchoolSpider(scrapy.Spider):
    name = "school"
    allowed_domains = ["termdates.co.uk"]
    start_urls = ('https://termdates.co.uk/school-holidays-16-19-abingdon/',)

    def parse(self, response):
        products = response.xpath('//*[@id="Year1"]/table//tr')
        for p in products[1:]:
            item = dict()
            item['hol'] = p.xpath('td[1]/text()').extract_first()
            item['first'] = p.xpath('td[2]/text()').extract_first()
            item['last'] = p.xpath('td[3]/text()').extract_first()
            yield item
```

Solution (thanks to @vold): this crawls all pages in `start_urls` and deals with the inconsistent table layout:

```python
# -*- coding: utf-8 -*-
import scrapy
from SchoolDates_1.items import Schooldates1Item


class SchoolSpider(scrapy.Spider):
    name = "school"
    allowed_domains = ["termdates.co.uk"]
    start_urls = ('https://termdates.co.uk/school-holidays-16-19-abingdon/',
                  'https://termdates.co.uk/school-holidays-3-dimensions',)

    def parse(self, response):
        products = response.xpath('//*[@id="Year1"]/table//tr')
        # ignore the table header row
        for product in products[1:]:
            item = Schooldates1Item()
            item['hol'] = product.xpath('td[1]//text()').extract_first()
            item['first'] = product.xpath('td[2]//text()').extract_first()
            item['last'] = ''.join(product.xpath('td[3]//text()').extract()).strip()
            item['url'] = response.url
            yield item
```

rfelten about 7 years: Please provide more information: What did you try? What code? Which XPath expression confuses you? Did you read the Scrapy tutorial section on selectors?


Granitosaurus about 7 years: `./` is not necessary here, since the expression is already bound to the first level. If you were looking for any descendant, you would indeed need to use `.//`. Also, `extract_first()` is a relatively new shortcut for `extract()[0]` :)

vold about 7 years: I agree and have corrected my answer, but without a link to the site, it's all I can suggest :)


Umair Ayub about 7 years: `tbody` is added by browsers like Firefox and Chrome, and it doesn't exist in the source code of the HTML response, so your XPath won't work.


Granitosaurus about 7 years: @Umair well, in the context of OP's code it would work :P. Also, you imply that OP doesn't use a browser or some rendering to download the source. So in the context of this question my original answer would work, but I adjusted the answer nevertheless to reflect your point.


stonk about 7 years: Thanks for all your input, folks. Please see my edit with the site I'm trying to scrape.


David almost 7 years: Quick question: why is it `td[1]` for each of the XPaths? Are the `td`s being removed by `.extract_first()`?