Spark: "Truncated the string representation of a plan since it was too large." Warning when using manually created aggregation expression

Solution 1

You can safely ignore it if you are not interested in seeing the SQL schema logs. Otherwise, you can set the property to a higher value, though this might affect the performance of your job:

spark.debug.maxToStringFields=100

Default value is: DEFAULT_MAX_TO_STRING_FIELDS = 25
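As a minimal sketch of one way to apply this from application code, assuming Spark 2.x (where the property is read from the SparkEnv conf) and a locally run job: the object name, app name and local master below are placeholders, not part of the original answer.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.sql.SparkSession

object MaxToStringFieldsConf {
  // Builds a SparkConf with the raised limit. The app name and the local
  // master are placeholders for illustration only.
  def build(limit: Int): SparkConf =
    new SparkConf()
      .setAppName("max-to-string-fields-sketch")
      .setMaster("local[*]")
      .set("spark.debug.maxToStringFields", limit.toString)

  def main(args: Array[String]): Unit = {
    // The property is placed in the conf before the session is created,
    // so that it is part of the conf the running application starts with.
    val spark = SparkSession.builder().config(build(100)).getOrCreate()

    // Read the value back from the running context to check it was picked up.
    println(spark.sparkContext.getConf.get("spark.debug.maxToStringFields"))

    spark.stop()
  }
}
```

Setting the value on the conf up front, rather than after the session exists, is the cautious choice here, since this property is not a SQL runtime setting in these versions.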

The performance overhead of creating and logging strings for wide schemas can be large. To limit the impact, we bound the number of fields to include by default. This can be overridden by setting the 'spark.debug.maxToStringFields' conf in SparkEnv.

Taken from: https://github.com/apache/spark/blob/master/core/src/main/scala/org/apache/spark/util/Utils.scala#L90
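To illustrate why the warning only affects logging, here is a simplified sketch of the kind of truncation the comment describes. It is not Spark's actual code; the object, the function name and the exact output format are assumptions made for illustration.

```scala
object TruncationSketch {
  // Renders a list of field names, keeping at most (maxFields - 1) of them
  // and summarising the rest. Only the string changes; the data does not.
  def truncated(fields: Seq[String], maxFields: Int): String =
    if (fields.length > maxFields) {
      val kept = math.max(0, maxFields - 1)
      val suffix = ", ... " + (fields.length - kept) + " more fields]"
      fields.take(kept).mkString("[", ", ", suffix)
    } else {
      fields.mkString("[", ", ", "]")
    }

  def main(args: Array[String]): Unit = {
    val wide = (0 until 30).map(i => s"c$i")
    // 30 fields against a limit of 25: the tail is summarised.
    println(truncated(wide, 25))
    // 3 fields against a limit of 25: printed in full.
    println(truncated(wide.take(3), 25)) // [c0, c1, c2]
  }
}
```

The point of the sketch is that the limit bounds the text that gets built and logged for a plan, which is why raising it costs some overhead on wide schemas and ignoring the warning leaves results unchanged.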

Solution 2

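In newer Spark versions (Spark 3), this config, along with many others, has been moved to SQLConf (sql/catalyst/src/main/scala/org/apache/spark/sql/internal/SQLConf.scala), where it is named spark.sql.debug.maxToStringFields.

It can be set in the config file, on the command line, or at runtime on the Spark session, for example: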

spark.conf.set("spark.sql.debug.maxToStringFields", 1000)
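For context, a small self-contained sketch of the same call inside an application, assuming Spark 3.x and a local session. The object name, the helper `raise`, the app name and the master are hypothetical and only there to make the example runnable.

```scala
import org.apache.spark.sql.SparkSession

object SqlMaxToStringFields {
  // Sets the SQL-level limit on an existing session and returns the value
  // the session now reports for that key.
  def raise(spark: SparkSession, limit: Long): String = {
    spark.conf.set("spark.sql.debug.maxToStringFields", limit)
    spark.conf.get("spark.sql.debug.maxToStringFields")
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .appName("sql-max-to-string-fields-sketch") // placeholder name
      .master("local[*]")                         // placeholder master
      .getOrCreate()

    println(raise(spark, 1000L))

    spark.stop()
  }
}
```

Because this is a session-level SQL setting, it can be changed after the session has started, which is not the case for the older spark.debug.maxToStringFields property.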

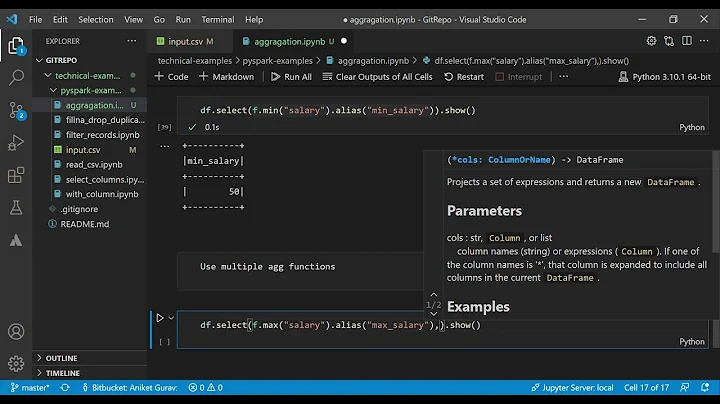

Related videos on Youtube

Rami


Updated on July 09, 2022

Comments

- Rami, almost 2 years ago: I am trying to build, for each of my users, a vector containing the average number of records per hour of day. Hence the vector has to have 24 dimensions.

My original DataFrame has userID and hour columns, and I am starting by doing a groupBy and counting the number of records per user per hour as follows:

val hourFreqDF = df.groupBy("userID", "hour").agg(count("*") as "hfreq")

Now, in order to generate a vector per user, I am doing the following, based on the first suggestion in this answer.

val hours = (0 to 23).map(_.toString).toArray
val assembler = new VectorAssembler()
  .setInputCols(hours)
  .setOutputCol("hourlyConnections")
val exprs = hours.map(c => avg(when($"hour" === c, $"hfreq").otherwise(lit(0))).alias(c))
val transformed = assembler.transform(
  hourFreqDF.groupBy($"userID").agg(exprs.head, exprs.tail: _*))

When I run this example, I get the following warning:

Truncated the string representation of a plan since it was too large. This behavior can be adjusted by setting 'spark.debug.maxToStringFields' in SparkEnv.conf.

I presume this is because the expression is too long?

My question is: can I safely ignore this warning?

- Thom Rogers, over 6 years ago: Can this be set via command line as part of a spark-submit command?

- Arnab, over 6 years ago: Where can I find the SparkEnv file? I tried setting that in spark-env.sh but it did not help.

- Sangram Gaikwad, over 5 years ago: @Arnab I just added spark.debug.maxToStringFields=100 to spark-defaults.conf and it worked for me. (I'm using Spark 2.3.x)

- Rakshith, over 3 years ago: This would be in Spark 3 I believe
