Spark job fails on YARN cluster with exitCode=13

Solution 1

It seems that you have set the master to local in your code:

new SparkConf().setMaster("local[*]")

Leave the master unset in the code and set it when you run spark-submit instead:

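spark-submit --master yarn-client ...

On Spark 2.0 and later, the yarn-client master URL is deprecated; use --master yarn --deploy-mode client (or --deploy-mode cluster) instead.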
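Before submitting, it can help to check the sources for a hard-coded local master. Spark gives properties set directly on a SparkConf precedence over flags passed to spark-submit, so a leftover setMaster("local[*]") wins over --master yarn. This is a minimal shell sketch, not a definitive tool. It assumes the application sources live in a local directory. The function name find_hardcoded_master is hypothetical, and the pattern only catches the common setMaster("local…") and .master("local…") spellings:

```shell
#!/usr/bin/env bash
# Hypothetical pre-submit check (not part of Spark): print every source line
# that hard-codes a local master. A master set on SparkConf in code takes
# precedence over the --master flag passed to spark-submit.
# Exits 0 when at least one such line is found, non-zero otherwise.
find_hardcoded_master() {
  local src_dir="$1"
  # Matches e.g. new SparkConf().setMaster("local[*]")
  # and SparkSession.builder.master("local[2]")
  grep -rnE '(setMaster|\.master)\("local' "$src_dir"
}
```

For example, assuming a standard sbt/Maven layout, run `find_hardcoded_master src/main/scala` before `spark-submit --master yarn --deploy-mode cluster ...`. Every line it prints is a candidate for removal.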

Solution 2

In case it helps someone:

Another possible cause of this error is an incorrect --class parameter.

Solution 3

I had exactly the same problem, but the above answer didn't work.

However, when I ran it with spark-submit --deploy-mode client, everything worked fine.

Solution 4

I got this same error running a SparkSQL job in cluster mode. None of the other solutions worked for me, but in the Hadoop job history server logs I found this stack trace:

20/02/05 23:01:24 INFO hive.metastore: Connected to metastore.
20/02/05 23:03:03 ERROR yarn.ApplicationMaster: Uncaught exception:
java.util.concurrent.TimeoutException: Futures timed out after [100000 milliseconds]
    at scala.concurrent.impl.Promise$DefaultPromise.ready(Promise.scala:223)
    at scala.concurrent.impl.Promise$DefaultPromise.result(Promise.scala:227)
    at org.apache.spark.util.ThreadUtils$.awaitResult(ThreadUtils.scala:220)
    at org.apache.spark.deploy.yarn.ApplicationMaster.runDriver(ApplicationMaster.scala:468)
    at org.apache.spark.deploy.yarn.ApplicationMaster.org$apache$spark$deploy$yarn$ApplicationMaster$$runImpl(ApplicationMaster.scala:305)
    at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$1.apply$mcV$sp(ApplicationMaster.scala:245)
    at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$1.apply(ApplicationMaster.scala:245)
    at org.apache.spark.deploy.yarn.ApplicationMaster$$anonfun$run$1.apply(ApplicationMaster.scala:245)
    ...

Looking at the Spark source code, the ApplicationMaster (AM) timed out waiting for the spark.driver.port property to be set by the thread executing the user class.

So it is either a transient issue, or something in your code is delaying the SparkContext creation and you should investigate your code to find the cause of the timeout.
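The 100000 ms in the trace matches the default of spark.yarn.am.waitTime (100s). In cluster mode, this setting controls how long the ApplicationMaster waits for the SparkContext to be initialized. This is a minimal shell sketch for pulling the application ID out of the submit output and narrowing the aggregated YARN logs to error lines. The helper names and the file submit.log are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Spark or YARN) for digging into exit code 13.

# Print the first YARN application ID found on stdin,
# e.g. "... Application application_1459460037715_23007 failed ..."
app_id_from_text() {
  grep -oE 'application_[0-9]+_[0-9]+' | head -n 1
}

# Keep only ERROR/Exception lines, prefixed with their line numbers.
error_lines() {
  grep -nE 'ERROR|Exception'
}

# Typical use (needs the yarn CLI and log aggregation; not executed here):
#   app_id=$(app_id_from_text < submit.log)
#   yarn logs -applicationId "$app_id" | error_lines
#
# If the driver is only slow to create its SparkContext, the wait can be
# raised; this does not help if the code never creates a YARN-backed context:
#   spark-submit --master yarn --deploy-mode cluster \
#     --conf spark.yarn.am.waitTime=300s ...
```

Raising the wait time only buys time. The earlier ERROR lines usually show why the context was not created.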

user_not_found

Updated on May 19, 2020

Comments

user_not_found (almost 4 years ago):

I am a Spark/YARN newbie, and I run into exitCode=13 when I submit a Spark job to a YARN cluster. When the Spark job runs in local mode, everything is fine.

The command I used is:

/usr/hdp/current/spark-client/bin/spark-submit --class com.test.sparkTest \
  --master yarn --deploy-mode cluster \
  --num-executors 40 --executor-cores 4 \
  --driver-memory 17g --executor-memory 22g \
  --files /usr/hdp/current/spark-client/conf/hive-site.xml \
  /home/user/sparkTest.jar

Spark error log:

16/04/12 17:59:30 INFO Client:
    client token: N/A
    diagnostics: Application application_1459460037715_23007 failed 2 times due to AM Container for appattempt_1459460037715_23007_000002 exited with exitCode: 13
For more detailed output, check application tracking page: http://b-r06f2-prod.phx2.cpe.net:8088/cluster/app/application_1459460037715_23007 Then, click on links to logs of each attempt.
Diagnostics: Exception from container-launch.
Container id: container_e40_1459460037715_23007_02_000001
Exit code: 13
Stack trace: ExitCodeException exitCode=13:
    at org.apache.hadoop.util.Shell.runCommand(Shell.java:576)
    at org.apache.hadoop.util.Shell.run(Shell.java:487)
    at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:753)
    at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:211)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)
    at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

YARN logs:

16/04/12 23:55:35 INFO mapreduce.TableInputFormatBase: Input split length: 977 M bytes.
16/04/12 23:55:41 INFO yarn.ApplicationMaster: Waiting for spark context initialization ...
16/04/12 23:55:51 INFO yarn.ApplicationMaster: Waiting for spark context initialization ...
16/04/12 23:56:01 INFO yarn.ApplicationMaster: Waiting for spark context initialization ...
16/04/12 23:56:11 INFO yarn.ApplicationMaster: Waiting for spark context initialization ...
16/04/12 23:56:11 INFO client.ConnectionManager$HConnectionImplementation: Closing zookeeper sessionid=0x152f0b4fc0e7488
16/04/12 23:56:11 INFO zookeeper.ZooKeeper: Session: 0x152f0b4fc0e7488 closed
16/04/12 23:56:11 INFO zookeeper.ClientCnxn: EventThread shut down
16/04/12 23:56:11 INFO executor.Executor: Finished task 0.0 in stage 1.0 (TID 2). 2003 bytes result sent to driver
16/04/12 23:56:11 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 1.0 (TID 2) in 82134 ms on localhost (2/3)
16/04/12 23:56:17 INFO client.ConnectionManager$HConnectionImplementation: Closing zookeeper sessionid=0x4508c270df09803
16/04/12 23:56:17 INFO zookeeper.ZooKeeper: Session: 0x4508c270df09803 closed
...
16/04/12 23:56:21 ERROR yarn.ApplicationMaster: SparkContext did not initialize after waiting for 100000 ms. Please check earlier log output for errors. Failing the application.
16/04/12 23:56:21 INFO yarn.ApplicationMaster: Final app status: FAILED, exitCode: 13, (reason: Timed out waiting for SparkContext.)
16/04/12 23:56:21 INFO spark.SparkContext: Invoking stop() from shutdown hook

user1314742 (about 8 years ago): Could you share the YARN logs as well (not the whole logs, just the error messages)?

user1314742 (about 8 years ago): You can get the YARN logs with:

$ yarn logs -applicationId application_1459460037715_18191

user_not_found (about 8 years ago): Thanks for the response. It turns out exitCode 10 was caused by a ClassNotFound issue. After a quick fix for that, I ran into a new issue with exit code 13 when the Spark job runs on the YARN cluster. It works well in local mode. I have updated the question and the logs so they won't confuse people :)

user1314742 (about 8 years ago): Have you set the master in your code, like doing

SparkConf.setMaster("local[*]")?

user_not_found (about 8 years ago): You are totally right! :) Thanks a lot. I made the same mistake before in another place, and the exit code was 15. So when it was 13 this time, I didn't even look back at my code, only at the log. So dumb.

user1314742 (about 8 years ago): Good. I'll post it as an answer so you can mark your question as answered :)

mahendra maid (over 5 years ago): What if I want to submit with --master yarn --deploy-mode cluster ... It's giving an error.

user1314742 (over 5 years ago): What is the error? This is the new way to use spark-submit in version 2+, so it shouldn't give an error.

Omkar Neogi (over 4 years ago): Does anyone understand the reason for this?

Eli Borodach (almost 4 years ago): What is this class parameter? And how can you find out what your class parameter is?

Jhon Mario Lotero (almost 4 years ago): This is the main class that you want to execute with Spark. Maybe this question can help you: stackoverflow.com/questions/50205621/…

Sajal (about 3 years ago): Yes, this solved my problem. I was using spark-submit --deploy-mode cluster, but when I changed it to client, it worked fine. In my case, I was executing SQL scripts from Python code, so my code was not "Spark dependent", but I am not sure what the implications of doing this are when you want multiprocessing.

Mark Korzhov (almost 3 years ago): You saved my day!

BeerIsGood (over 2 years ago): --deploy-mode client only runs Spark on the master driver node. This does not use Spark's worker (core & task) nodes. Instead, use --deploy-mode cluster to distribute work across workers.

Nolan Barth (almost 2 years ago): @BeerIsGood, that's only true of the single-threaded code you run. Any actual Spark operations (reads, writes, maps, filters, etc.) are distributed by the master node across the entire cluster, even in client mode. The difference between client and cluster modes is how the work gets submitted to the cluster and which nodes get used for what.

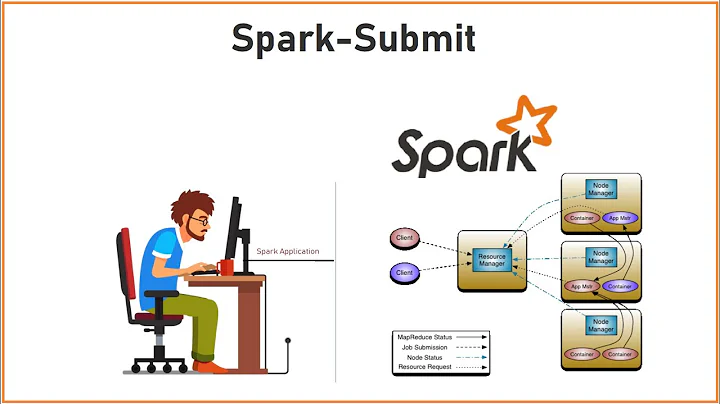
