Spring Batch: Which ItemReader implementation to use for high volume & low latency

Solution 1

To process that kind of data, you're probably going to want to parallelize it if that is possible (the only thing preventing it would be if the output file needed to retain an order from the input). Assuming you are going to parallelize your processing, you are then left with two main options for this type of use case (from what you have provided):

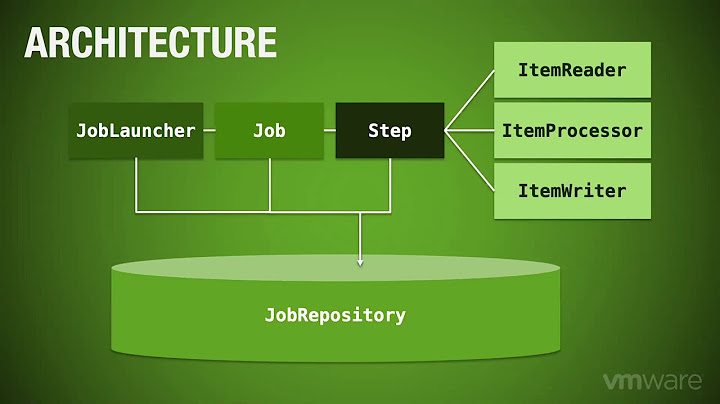

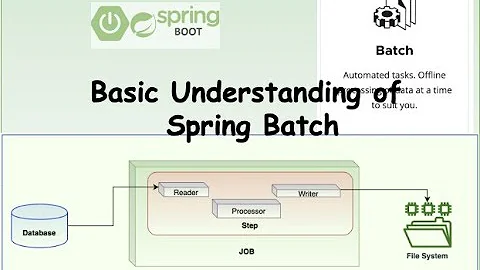

- Multithreaded step - This will process a chunk per thread until complete. This allows for parallelization in a very easy way (simply add a TaskExecutor to your step definition). With this, you do lose restartability out of the box, because you will need to turn off state persistence on either of the ItemReaders you have mentioned (there are ways around this, such as flagging records in the database as having been processed).

- Partitioning - This breaks up your input data into partitions that are processed by step instances in parallel (master/slave configuration). The partitions can be executed locally in threads (using a TaskExecutor) or remotely via remote partitioning. In either case, you gain restartability along with parallelization (each step processes its own data, so there is no stepping on state from partition to partition).
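A rough plain-Java analogue of a multithreaded step can make the trade-off concrete. This is a sketch, not Spring Batch code: the hypothetical SharedReader stands in for a thread-safe ItemReader (its synchronized read() is the assumption that makes sharing it safe), and appending to a list stands in for the ItemWriter. Each worker thread keeps pulling a chunk until the input runs out.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical illustration of "a chunk per thread until complete".
public class ChunkPerThread {

    /** Shared reader; synchronized so two threads never receive the same item. */
    static final class SharedReader {
        private final Iterator<Integer> source;

        SharedReader(Iterator<Integer> source) {
            this.source = source;
        }

        synchronized Integer read() {
            return source.hasNext() ? source.next() : null; // null signals end of input
        }
    }

    /** Each worker repeatedly reads a chunk and hands it to the stand-in writer. */
    public static List<Integer> run(List<Integer> input, int threads, int chunkSize)
            throws InterruptedException {
        SharedReader reader = new SharedReader(input.iterator());
        List<Integer> written = Collections.synchronizedList(new ArrayList<>());
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.submit(() -> {
                while (true) {
                    List<Integer> chunk = new ArrayList<>(chunkSize);
                    Integer item;
                    while (chunk.size() < chunkSize && (item = reader.read()) != null) {
                        chunk.add(item);
                    }
                    if (chunk.isEmpty()) {
                        return; // input exhausted, this worker is done
                    }
                    written.addAll(chunk); // stand-in for the ItemWriter
                }
            });
        }
        pool.shutdown();
        pool.awaitTermination(1, TimeUnit.MINUTES);
        return written;
    }
}
```

Because threads interleave, the written order generally differs from the input order, and the reader's position no longer tells you which items were actually written. That is the same reason a multithreaded step gives up output ordering and out-of-the-box restartability.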

I did a talk on processing data in parallel with Spring Batch. Specifically, the example I present is a remote partitioned job. You can view it here: https://www.youtube.com/watch?v=CYTj5YT7CZU

To your specific questions:

- Which ItemReader implementation among JdbcCursorItemReader & JdbcPagingItemReader would be suggested? What would be the reason? - Either of these two options can be tuned to meet many performance needs. It really depends on the database you're using, the driver options available, and the processing models you can support. Another consideration is: do you need restartability?

- Which would be better performing (fast) in the above use case? - Again it depends on your processing model chosen.

- Would the selection be different in case of a single-process vs multi-process approach? - This has more to do with how you manage jobs than with what Spring Batch can handle. The question is: do you want to manage partitioning external to the job (passing the data description to the job as parameters), or do you want the job to manage it (via partitioning)?

- In case of a multi-threaded approach using TaskExecutor, which one would be better & simple? - I won't deny that remote partitioning adds a level of complexity that local partitioning and multithreaded steps don't have.

I'd start with the basic step definition. Then try a multithreaded step. If that doesn't meet your needs, then move to local partitioning, and finally remote partitioning if needed. Keep in mind that Spring Batch was designed to make that progression as painless as possible. You can go from a regular step to a multithreaded step with only configuration updates. To go to partitioning, you need to add a single new class (a Partitioner implementation) and some configuration updates.
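As an illustration of what that Partitioner class often does, the sketch below splits a numeric key range (for example, a primary-key column) into contiguous, non-overlapping ranges, one per partition. It is plain Java so it runs without Spring Batch on the classpath; in a real job the same logic would sit inside a Partitioner's partition(int gridSize) method, which returns a Map<String, ExecutionContext>, with each range stored in its partition's ExecutionContext. The class name RangeSplitter, the minId/maxId names, and the assumption of a reasonably dense numeric key are all hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper showing the core of a range-based Partitioner:
// split the inclusive key range [minId, maxId] into at most gridSize
// contiguous, non-overlapping slices, one per partition.
public class RangeSplitter {

    /** Inclusive bounds of one partition's slice of the key space. */
    public static final class Range {
        public final long minId;
        public final long maxId;

        Range(long minId, long maxId) {
            this.minId = minId;
            this.maxId = maxId;
        }

        @Override
        public String toString() {
            return minId + ".." + maxId;
        }
    }

    public static Map<String, Range> split(long minId, long maxId, int gridSize) {
        if (gridSize < 1 || maxId < minId) {
            throw new IllegalArgumentException("need gridSize >= 1 and maxId >= minId");
        }
        long total = maxId - minId + 1;
        // Ceiling division: every slice holds sliceSize keys except possibly the
        // last one, so no more than gridSize partitions are created.
        long sliceSize = (total + gridSize - 1) / gridSize;

        Map<String, Range> partitions = new LinkedHashMap<>();
        long start = minId;
        for (int i = 0; ; i++) {
            long end = Math.min(start + sliceSize - 1, maxId);
            // In Spring Batch, these bounds would go into partition i's ExecutionContext.
            partitions.put("partition" + i, new Range(start, end));
            if (end == maxId) {
                break;
            }
            start = end + 1;
        }
        return partitions;
    }
}
```

For example, split(1, 10_000_000, 4) yields four ranges of 2,500,000 ids each, the first being 1..2500000. Each worker step's reader would then query only its own range, which is what keeps partition state independent.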

One final note. Most of this has talked about parallelizing the processing of this data. Spring Batch's FlatFileItemWriter is not thread safe. Your best bet would be to write to multiple files in parallel, then aggregate them afterwards if speed is your number one concern.
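If each thread or partition writes its own part file, the aggregation afterwards can be as simple as concatenating the parts in order. The sketch below is one hedged way to do that with java.nio; CsvPartMerger is a hypothetical name, and it assumes every part file contains data rows only (no header) and ends with a newline, so that an optional header line can be written once at the top.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardOpenOption;
import java.util.List;

// Hypothetical final-step helper: concatenates CSV part files into one file.
public class CsvPartMerger {

    /**
     * Writes the optional header once, then appends each part in the order given.
     * Assumes parts have no header row and end with a "\n" line separator.
     */
    public static void merge(List<Path> parts, Path target, String headerOrNull) throws IOException {
        try (OutputStream out = Files.newOutputStream(target,
                StandardOpenOption.CREATE,
                StandardOpenOption.TRUNCATE_EXISTING,
                StandardOpenOption.WRITE)) {
            if (headerOrNull != null) {
                out.write((headerOrNull + "\n").getBytes(StandardCharsets.UTF_8));
            }
            for (Path part : parts) {
                // Streams bytes rather than loading whole files, so large parts are fine.
                Files.copy(part, out);
            }
        }
    }
}
```

Passing the parts in partition order (for example, sorted by range) keeps the merged file in key order even though the parts were written concurrently.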

Solution 2

You should profile this in order to make a choice. In plain JDBC I would start with something that:

- prepares statements with ResultSet.TYPE_FORWARD_ONLY and ResultSet.CONCUR_READ_ONLY. Several JDBC drivers "simulate" cursors on the client side unless you use those two, and for large result sets you don't want that, as it will probably lead to an OutOfMemoryError because your JDBC driver buffers the entire data set in memory. By using those options you increase the chance of getting server-side cursors and having the results "streamed" to you bit by bit, which is what you want for large result sets. Note that some JDBC drivers always "simulate" cursors on the client side, so this tip might be useless for your particular DBMS.

- sets a reasonable fetch size to minimize the impact of network round trips. 50-100 is often a good starting value for profiling. As the fetch size is only a hint, this might also be useless for your particular DBMS.
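In plain JDBC, the two suggestions above might look roughly like the following sketch. StreamingExport and its methods are hypothetical names, and the SQL, fetch size, and Connection are whatever you supply. Whether rows are really streamed from a server-side cursor is still up to the driver; for example, the PostgreSQL driver only honors the fetch size when auto-commit is turned off.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.function.Consumer;

// Hypothetical plain-JDBC helper for reading a large result set front to back.
public class StreamingExport {

    /** Opens a forward-only, read-only statement with a fetch-size hint. */
    public static PreparedStatement prepareStreaming(Connection conn, String sql, int fetchSize)
            throws SQLException {
        PreparedStatement ps = conn.prepareStatement(
                sql, ResultSet.TYPE_FORWARD_ONLY, ResultSet.CONCUR_READ_ONLY);
        // Only a hint: the driver may ignore it or apply its own rules.
        ps.setFetchSize(fetchSize);
        return ps;
    }

    /** Iterates the result set once, handing each row to the callback; returns the row count. */
    public static long forEachRow(Connection conn, String sql, int fetchSize,
                                  Consumer<ResultSet> rowHandler) throws SQLException {
        long rows = 0;
        try (PreparedStatement ps = prepareStreaming(conn, sql, fetchSize);
             ResultSet rs = ps.executeQuery()) {
            while (rs.next()) {
                rowHandler.accept(rs);
                rows++;
            }
        }
        return rows;
    }
}
```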

JdbcCursorItemReader seems to cover both things, but as said before, these options are not guaranteed to give you the best performance on every DBMS, so I would start with it and then, if performance is inadequate, try JdbcPagingItemReader.

I don't think simple processing with JdbcCursorItemReader will be slow for your data set size unless you have very strict performance requirements. If you really need to parallelize, using JdbcPagingItemReader might be easier, but the interfaces of the two are very similar, so I would not count on it being much easier.
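Paging suits parallel readers because each page is an independent query that resumes after the last sort-key value seen, rather than a position in one long-lived cursor. The sketch below builds such keyset-style queries for a hypothetical table and sort key, using the LIMIT syntax of databases such as PostgreSQL, MySQL, or H2. It only illustrates the idea; it is not JdbcPagingItemReader's own code, which generates database-specific SQL through a PagingQueryProvider and requires a unique sort key.

```java
// Hypothetical illustration of keyset paging; table, columns, and LIMIT syntax are assumptions.
public class KeysetPageQueries {

    /** First page: there is no lower bound on the sort key yet. */
    public static String firstPage(String selectClause, String table, String sortKey, int pageSize) {
        return selectClause + " FROM " + table
                + " ORDER BY " + sortKey + " ASC LIMIT " + pageSize;
    }

    /** Later pages: resume strictly after the previous page's last key, bound as a parameter. */
    public static String nextPage(String selectClause, String table, String sortKey, int pageSize) {
        return selectClause + " FROM " + table
                + " WHERE " + sortKey + " > ?"
                + " ORDER BY " + sortKey + " ASC LIMIT " + pageSize;
    }
}
```

The sort key must be unique: with a strict ">" on a non-unique column, rows that share the boundary value of a page would be skipped.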

Anyway, profile.


Comments

-

ram almost 2 years

Use case: Read 10 million rows [10 columns] from database and write to a file (csv format).

Which ItemReader implementation among JdbcCursorItemReader & JdbcPagingItemReader would be suggested? What would be the reason?

Which would be better performing (fast) in the above use case?

Would the selection be different in case of a single-process vs multi-process approach?

In case of a multi-threaded approach using TaskExecutor, which one would be better & simple?

-

Luca Basso Ricci over 10 years: partition to create parts of csv + extra step to concat csv parts into single csv?


ram over 10 years: @bellabax Please consider a single-process approach [for now]. Updated the question to ask whether it matters for single-process vs multi-process.

-

ram over 10 years: You mentioned a multithreaded step before partitioning. However, there is a possibility of a ResultSetExhaustedException with a JdbcCursorItemReader in a multithreaded step. How could it be tackled?

Michael Minella over 10 years: JdbcCursorItemReader isn't considered thread safe. Use the JdbcPagingItemReader in a multithreaded step. You can read the details as to why here: jira.springsource.org/browse/…
