Spring WebFlux differences when Netty vs. Tomcat is used under the hood

Solution 1

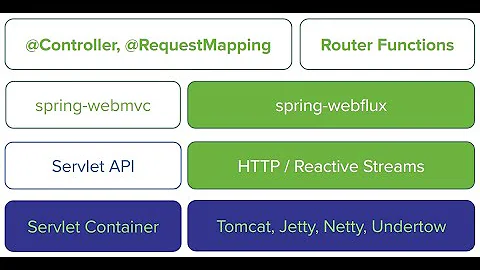

When using Servlet 2.5, Servlet containers will assign a request to a thread until that request has been fully processed.

When using Servlet 3.0 async processing, the server can dispatch the request processing in a separate thread pool while the request is being processed by the application. However, when it comes to I/O, work always happens on a server thread and it is always blocking. This means that a "slow client" can monopolize a server thread, since the server is blocked while reading/writing to that client with a poor network connection.

With Servlet 3.1, async I/O is allowed, and in that case the "one request/thread" model no longer applies. At any point, a bit of request processing can be scheduled on a different thread managed by the server.

Servlet 3.1+ containers offer all those possibilities with the Servlet API. It's up to the application to leverage async processing, or non-blocking I/O. In the case of non-blocking I/O, the paradigm change is important and it's really challenging to use.
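To make the Servlet 3.0 hand-off more concrete, here is a minimal sketch that uses only JDK executors. It is an analogy, not the Servlet API: the `container` and `app` pools, the thread names, and the `handle()` method are all hypothetical. In a real servlet the hand-off would go through `request.startAsync()` and `AsyncContext.complete()`.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class AsyncHandoffSketch {

    /**
     * Simulates one request: a "container" thread accepts it, hands the work to an
     * "application" thread and returns right away. Returns the names of the thread
     * that accepted the request and the thread that completed the response.
     */
    public static String[] handle() throws Exception {
        // Hypothetical stand-ins for the server's pool and the application's pool.
        ExecutorService container = Executors.newSingleThreadExecutor(r -> new Thread(r, "container-1"));
        ExecutorService app = Executors.newSingleThreadExecutor(r -> new Thread(r, "app-1"));
        try {
            CompletableFuture<String> response = new CompletableFuture<>();
            Future<String> accepted = container.submit(() -> {
                // Hand off the actual processing; the container thread does not wait for it.
                app.execute(() -> response.complete(Thread.currentThread().getName()));
                return Thread.currentThread().getName();
            });
            return new String[] { accepted.get(), response.get() };
        } finally {
            container.shutdown();
            app.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        String[] names = handle();
        System.out.println("accepted on " + names[0] + ", completed on " + names[1]);
    }
}
```

The request is accepted on one thread and completed on another, so "one request/thread" no longer describes what happens. What the sketch does not show is the I/O itself, which under Servlet 3.0 would still be blocking.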

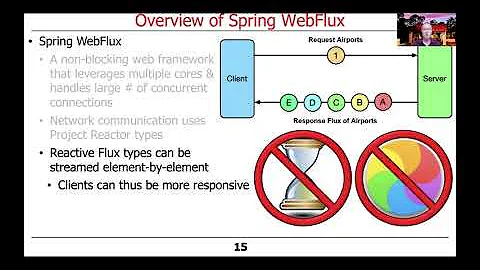

When running Spring WebFlux, the servers Tomcat, Jetty and Netty don't have exactly the same runtime model, but they all support reactive backpressure and non-blocking I/O.

Solution 2

Currently there are two basic concepts for handling parallel access to a web server, each with its own advantages and disadvantages:

- Blocking

- Non-Blocking

Blocking Web-Servers

The first concept, the blocking multi-threaded server, has a fixed number of threads in a pool. Every request is assigned to a specific thread, and that thread stays assigned until the request has been fully served. This is basically how the checkout queues in a supermarket work: one customer at a time, with possibly several parallel lines. In most circumstances a request in a web server is CPU-idle for the majority of its processing time, because it has to wait for I/O: reading from the socket, writing to the database (which is also basically I/O), reading the result, and writing to the socket. Additionally, creating and using many threads is slow (context switching) and requires a lot of memory. Therefore this concept often does not use the available hardware very efficiently, and it has a hard limit on how many clients can be served in parallel. This property is exploited in so-called starvation attacks such as Slowloris, where a single client can usually DoS a big multi-threaded web server with little effort.
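The hard limit described above can be illustrated with a small sketch using a fixed JDK thread pool. This is an assumption-laden simulation, not how any particular server is implemented: the class name, the `maxConcurrent` helper, and the 50 ms sleep that stands in for blocking I/O are all made up for illustration.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

public class BlockingPoolSketch {

    /**
     * Submits `requests` simulated requests to a pool of `poolSize` threads and
     * returns the highest number of requests that were in progress at the same time.
     */
    public static int maxConcurrent(int poolSize, int requests) throws InterruptedException {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        AtomicInteger inFlight = new AtomicInteger();
        AtomicInteger peak = new AtomicInteger();
        CountDownLatch done = new CountDownLatch(requests);
        for (int i = 0; i < requests; i++) {
            pool.submit(() -> {
                // Record how many requests are being served right now.
                peak.accumulateAndGet(inFlight.incrementAndGet(), Math::max);
                try {
                    Thread.sleep(50); // stands in for blocking socket or database I/O
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                } finally {
                    inFlight.decrementAndGet();
                    done.countDown();
                }
            });
        }
        done.await(); // wait until every simulated request has finished
        pool.shutdown();
        return peak.get();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("peak concurrent requests: " + maxConcurrent(4, 20));
    }
}
```

With 4 threads and 20 requests, the peak never exceeds 4. The other requests wait in the queue even though the busy threads are only sleeping, which is the inefficiency the paragraph describes.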

Summary

- (+) simpler code

- (-) hard limit of parallel clients

- (-) requires more memory

- (-) inefficient use of hardware for usual web-server work

- (-) easy to DoS

Most "conventional" web servers work that way, e.g. older Tomcat, the Apache web server, and everything based on Servlet older than 3.0 or 3.1.

Non-Blocking Web-Servers

In contrast, a non-blocking web server can serve multiple clients with only a single thread, because it uses the kernel's non-blocking I/O features. These are kernel calls that return immediately and notify the caller when something can be read or written, leaving the CPU free to do other work in the meantime. To reuse the supermarket metaphor: when a cashier needs the supervisor to solve a problem, the cashier does not wait and block the whole lane, but starts checking out the next customer until the supervisor arrives and solves the first customer's problem.

This is often done in an event loop, or with higher-level abstractions such as green threads or fibers. In essence, such a server thread can't really process anything concurrently (although you can of course have multiple non-blocking threads), but the server is able to serve thousands of clients in parallel, because memory consumption does not scale as drastically as with the multi-threaded concept (read: there is no hard limit on the maximum number of parallel clients). Also, there is little to no thread context switching. The downside is that non-blocking code is often more complex to read and write (e.g. callback hell) and doesn't perform well when a request does a lot of CPU-expensive work.
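As a rough illustration of such an event loop, here is a minimal echo server built on the JDK's `java.nio` `Selector`. It is a sketch under simplifying assumptions, not how Netty or any servlet container is actually implemented: the class name and the `roundTrip` helper are invented, error handling is minimal, and writes spin instead of registering for `OP_WRITE`.

```java
import java.io.DataInputStream;
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;
import java.util.Iterator;

public class SelectorEchoSketch implements Runnable, AutoCloseable {
    private final Selector selector;
    private final ServerSocketChannel server;

    public SelectorEchoSketch() throws IOException {
        selector = Selector.open();
        server = ServerSocketChannel.open();
        server.bind(new InetSocketAddress("127.0.0.1", 0)); // port 0: the OS picks a free port
        server.configureBlocking(false);
        server.register(selector, SelectionKey.OP_ACCEPT);
    }

    public int port() {
        return server.socket().getLocalPort();
    }

    @Override
    public void run() {
        ByteBuffer buffer = ByteBuffer.allocate(1024);
        try {
            while (selector.isOpen()) {
                selector.select(); // the single thread waits here for events on ALL channels
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove();
                    if (key.isAcceptable()) {
                        SocketChannel client = server.accept();
                        if (client != null) {
                            client.configureBlocking(false);
                            client.register(selector, SelectionKey.OP_READ);
                        }
                    } else if (key.isReadable()) {
                        echo((SocketChannel) key.channel(), buffer);
                    }
                }
            }
        } catch (IOException | IllegalStateException e) {
            // close() was called (closed selector or cancelled key) or I/O failed: leave the loop
        }
    }

    private static void echo(SocketChannel client, ByteBuffer buffer) throws IOException {
        buffer.clear();
        if (client.read(buffer) < 0) { // the client closed its side of the connection
            client.close();
            return;
        }
        buffer.flip();
        while (buffer.hasRemaining()) {
            client.write(buffer); // simplification: a real server would register OP_WRITE
        }
    }

    @Override
    public void close() throws IOException {
        selector.close(); // also wakes up a thread parked in select()
        server.close();
    }

    /** Sends msg over the socket and blocks until the same number of bytes comes back. */
    public static String roundTrip(Socket socket, String msg) throws IOException {
        byte[] out = msg.getBytes(StandardCharsets.UTF_8);
        socket.getOutputStream().write(out);
        byte[] in = new byte[out.length];
        new DataInputStream(socket.getInputStream()).readFully(in);
        return new String(in, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws Exception {
        try (SelectorEchoSketch echo = new SelectorEchoSketch()) {
            new Thread(echo, "event-loop").start();
            try (Socket a = new Socket("127.0.0.1", echo.port());
                 Socket b = new Socket("127.0.0.1", echo.port())) {
                // Both connections are open at once and served by the same thread.
                System.out.println(roundTrip(b, "second client"));
                System.out.println(roundTrip(a, "first client"));
            }
        }
    }
}
```

Both client connections stay open at the same time, yet only the one `event-loop` thread serves them. An idle connection costs a registered channel rather than a parked thread, which is where the memory advantage comes from.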

Summary

- (-) more complex code

- (-) performance worse with cpu intensive tasks

- (+) uses resources much more efficiently as web server

- (+) many more parallel clients with no hard-limit (except max memory)

Most modern "fast" web servers and frameworks use non-blocking concepts: Netty, Vert.x, WebFlux, nginx, Servlet 3.1+, Node, Go web servers.

As a side note, looking at this benchmark page you will see that most of the fastest web-servers are usually non-blocking ones: https://www.techempower.com/benchmarks/

See also

- https://stackoverflow.com/a/21155697/774398

- https://www.quora.com/What-exactly-does-it-mean-for-a-web-server-to-be-blocking-versus-non-blocking

gstackoverflow

Updated on July 09, 2022

Comments

-

gstackoverflow almost 2 years

I am learning Spring WebFlux and I've read the following series of articles (first, second, third).

In the third article I came across the following text:

Remember the same application code runs on Tomcat, Jetty or Netty. Currently, the Tomcat and Jetty support is provided on top of Servlet 3.1 asynchronous processing, so it is limited to one request per thread. When the same code runs on the Netty server platform that constraint is lifted, and the server can dispatch requests sympathetically to the web client. As long as the client doesn’t block, everyone is happy. Performance metrics for the netty server and client probably show similar characteristics, but the Netty server is not restricted to processing a single request per thread, so it doesn’t use a large thread pool and we might expect to see some differences in resource utilization. We will come back to that later in another article in this series.

First of all, I don't see a newer article in the series, although it was written in 2016. It is clear to me that Tomcat has 100 threads by default for handling requests and that one thread handles one request at a time, but I don't understand the phrase "it is limited to one request per thread". What does it mean?

Also, I would like to know how Netty works in this concrete case (I want to understand the difference from Tomcat). Can it handle 2 requests per thread?

-

Brian Clozel almost 5 years: I think it's a typo and it's conflating Servlet 3.0 async and Servlet 3.1 non-blocking I/O. I'll get in touch with the author to fix that.

-

gstackoverflow almost 5 years: @Brian Clozel but anyway, I am a bit confused, and I would like to ask you to provide the correct text, because I don't understand how the phrase "it is limited to one request per thread" relates to Servlet 3.0. As I currently see it, it relates to Servlet 2.5 rather than 3+.

-

Brian Clozel almost 5 years: the article is being fixed as we speak

-

gstackoverflow almost 5 years: @Brian Clozel That is fantastic! I contacted the right person. The world is so small.

-

-

gstackoverflow almost 5 years: What you're writing is correct, but my question is about Spring WebFlux. For Tomcat, Spring WebFlux works over Servlet 3.1 (async support + NIO), but for Netty it works somehow differently. I want to know how. Please follow the link and find the phrase I mentioned in the topic.

-

gstackoverflow almost 5 years: "Remember the same application code runs on Tomcat, Jetty or Netty. Currently, the Tomcat and Jetty support is provided on top of Servlet 3.1 asynchronous processing, so it is limited to one request per thread." I created the topic because I don't understand that phrase.

-

Patrick almost 5 years: Tomcat and Jetty are servlet containers, so they are restricted by how the servlet spec defines them. Servlet 3.1 should enable non-blocking I/O, but I don't think it is mandatory. I think it's just a complicated way of saying they don't support non-blocking concepts?

-

Patrick almost 5 years: "For tomcat spring web flux works over the servlet 3.1 (async support + NIO) but for Netty it work somehow different." Servlet 3.1 NIO and Netty should both use the same principle.

-

gstackoverflow almost 5 years: As far as I know, Tomcat 8.5+ supports Servlet 3.1 and thus it supports async + non-blocking.

-

gstackoverflow almost 5 years: It is possible to find videos like this: youtube.com/watch?v=o0nyGrSk8Fs so I don't have any doubts about it.

-

gstackoverflow almost 5 years: "work always happens on a server thread and it is always blocking" Do you mean container thread?

-

Brian Clozel almost 5 years: Yes, I meant the same.

-

gstackoverflow almost 5 years: You are saying that the "one request/thread" model is no longer used since Servlet 3.1. But what about Servlet 3.0? Do you consider it a "one request/thread" model? I agree that with NIO in Servlet 3.1 a single request is more distributed across threads than in 3.0, but 3.0 is not single thread per request either.

-

Brian Clozel almost 5 years: Tomcat has supported Servlet 3.1 for quite some time now, but most applications don't leverage that since using the Servlet non-blocking I/O API is challenging. I'd say most Servlet apps are still "one request/thread" these days (unless they're using 3.0 async processing, which invalidates that, but is still blocking).

-

gstackoverflow almost 5 years: But from your point of view, does Servlet 3.0 use the "one request/thread" model? From my view, it does not.

-

gstackoverflow almost 5 years: any thoughts about my question?

-

Brian Clozel almost 5 years: Already described that in my answer. It's more about "is the application using Servlet 3.0 async processing?" than "is the application running on a Servlet 3.0+ container?". If async processing is being used, the "one request per thread" model does not apply anymore.

-

gstackoverflow almost 5 years: Thanks, it is clear now. Also, I would be thankful if you could share any example of a Spring "hello world" where Servlet 3.1 is used (without WebFlux).

-

Brian Clozel almost 5 years: I don't have any example handy, but this presentation youtube.com/watch?v=uGXsnB2S_vc and our reactive adapter github.com/spring-projects/spring-framework/blob/master/… should give you some ideas of the complexity involved.