Transferring millions of files from one server to another

Solution 1

As you say, use rsync:

rsync -azP /var/www/html/txt/ username@ip-address:/var/www/html/txt

The options are:

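-a : enables archive mode, which works recursively and preserves symbolic links, permissions, modification times, group, owner, and device and special files

-z : compresses file data during the transfer to minimise network usage

-P : equivalent to --partial --progress; shows progress during the transfer and keeps partially transferred files, so an interrupted transfer can be resumed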
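If the connection keeps dropping, as in the question, you can simply re-run the same command. rsync skips files whose size and modification time already match on the destination, and with -P the partially transferred file is kept and reused. Below is a minimal sketch that automates the re-running, assuming a POSIX-style shell such as dash or bash (`local` is a widely supported extension). The `retry` function and the MAX_ATTEMPTS/RETRY_DELAY variables are hypothetical names for this example, not rsync features:

```shell
#!/bin/sh
# Hypothetical helper: re-run a command until it succeeds or the attempt limit is hit.
# MAX_ATTEMPTS and RETRY_DELAY (seconds) are illustrative settings, not rsync options.
retry() {
  local max_attempts="${MAX_ATTEMPTS:-10}"
  local delay="${RETRY_DELAY:-30}"
  local attempt=1
  # "$@" is the full command to run, e.g. an rsync invocation.
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts" >&2
      return 1
    fi
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    sleep "$delay"
    attempt=$((attempt + 1))
  done
  return 0
}

# Example: keep re-running the transfer from Solution 1 until it completes.
# retry rsync -azP /var/www/html/txt/ username@ip-address:/var/www/html/txt
```

Because every run only sends what is still missing, the loop is safe to repeat. The delay gives a flaky link time to recover instead of hammering the server.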

As @aim says in his answer, make sure you have a trailing / on the source directory (adding one to the destination as well is fine).

More info from the man page

Solution 2

Just use rsync over ssh!

rsync -av username@ip:/var/www/html/txt /var/www/html/

From the man page:

-a, --archive : This is equivalent to -rlptgoD. It is a quick way of saying you want recursion and want to preserve almost everything (with -H being a notable omission). The only exception to the above equivalence is when --files-from is specified, in which case -r is not implied.

-v, --verbose : This option increases the amount of information you are given during the transfer. By default, rsync works silently. A single -v will give you information about what files are being transferred and a brief summary at the end. Two -v options will give you information on what files are being skipped and slightly more information at the end. More than two -v options should only be used if you are debugging rsync.

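Note where I used slashes: the source (txt) has no trailing slash, so rsync creates the txt directory inside /var/www/html/. This matters: with a trailing slash on the source, the files would land directly in /var/www/html/ instead.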

Solution 3

Use lftp: it's much faster than rsync and it's well suited to mirroring websites (many small files). It can also transfer in parallel using multiple connections:

lftp -u username,password sftp://ip-address -e 'mirror --only-newer --no-dereference --parallel=5 /remote/path/ /destination/;quit'

If a connection breaks, lftp reconnects and continues. If you interrupt the transfer and run the command again, it skips files that already exist and continues.

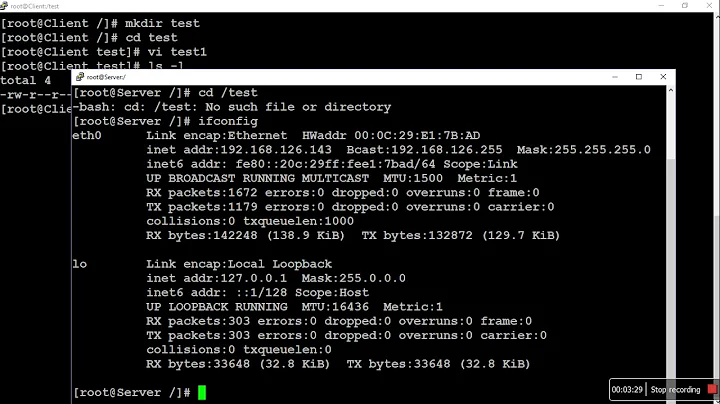


yuli chika

Updated on September 18, 2022

Comments


yuli chika over 1 year

I have two servers. One of them has 15 million text files (about 40 GB). I am trying to transfer them to another server. I considered zipping them and transferring the archive, but I realized that this is not a good idea.

So I used the following command:

scp -r username@ip-address:/var/www/html/txt /var/www/html/txt

But I noticed that this command transfers only about 50,000 files before the connection is lost.

Is there a better solution that lets me transfer the entire collection of files? I mean something like rsync, which would transfer the files that weren't transferred when the connection was lost. If the connection dropped again, I would run the command again and it would transfer the remaining files, skipping those that had already been transferred successfully. This isn't possible with scp, because it always starts again from the first file.

nyuszika7h over 9 years

You should probably add more information on the significance of the trailing slash. From the rsync manual page: "A trailing slash on the source changes this behavior to avoid creating an additional directory level at the destination. You can think of a trailing / on a source as meaning 'copy the contents of this directory' as opposed to 'copy the directory by name', but in both cases the attributes of the containing directory are transferred to the containing directory on the destination."

nyuszika7h over 9 years

I suggest adding the -h flag to use human-readable units. And if you want more verbosity, you have a few options: -i and/or -v/-vv.

vensires over 9 years

There is generally very little reason not to always use rsync instead of scp. Its basic usage is the same and it offers many additional handy features.


Skaperen about 9 years

If ssh is not an option for you and you don't need or want the encryption, you can try my script: s3.amazonaws.com/skaperen/rsend