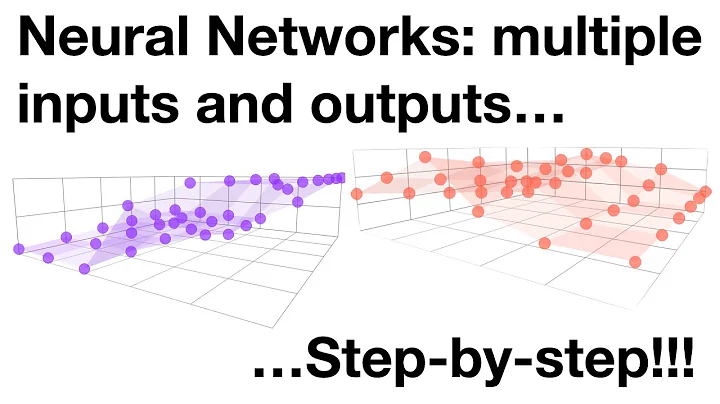

Understanding input/output dimensions of neural networks

Yes, we just have a bunch of neurons through which single numbers flow.

But: if you must give your network 5 numbers as input, it's then convenient to give these numbers in an array with length 5.

And if you're giving 30 thousand examples for your network to train, then it's convenient to create an array with 30 thousand elements, each element being an array of 5 numbers.

In the end, this input with 30 thousand examples of 5 numbers is an array with shape (30000,5).

Each layer then has its own output shape. Each layer's output is directly related to its own number of neurons. Each neuron will throw out a number (or sometimes an array, depending on which layer type you're using). So 10 neurons together will throw out 10 numbers per example, which will then be packed in an array shaped (30000,10).

The word "None" in those shapes stands for the batch size (the number of examples you give for training or predicting). You don't define that number; it is inferred automatically when you pass a batch.
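To make these shapes concrete, here is a minimal NumPy sketch of what a fully-connected layer with 10 neurons does to a (30000,5) input. The names `inputs`, `weights` and `biases` and the random values are assumptions for illustration only; this is not how Keras stores or initialises its parameters.

```python
import numpy as np

rng = np.random.default_rng(0)

# 30 thousand examples, each an array of 5 numbers.
inputs = rng.normal(size=(30000, 5))

# Hypothetical parameters of a layer with 10 neurons:
# one weight per (input, neuron) pair plus one bias per neuron.
weights = rng.normal(size=(5, 10))
biases = np.zeros(10)

# Each neuron produces one number per example.
outputs = inputs @ weights + biases

print(inputs.shape)   # (30000, 5)
print(outputs.shape)  # (30000, 10)
```

The first axis (the examples) passes through untouched; only the last axis changes from the number of inputs to the number of neurons.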

Looking at your network:

When you have an input of 5 units, you get an input shape of (None,5). But you only tell your model (5,), because the None part is the batch size, which is only known once you actually pass data for training or predicting.

This shape means: you have to give your network an array with a number of samples, each sample being an array of 5 numbers.

Then, your hidden layer with 10 neurons will calculate and give you 10 numbers as output, in an array shaped as (None, 10).
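The sketch below illustrates why the batch dimension can stay undefined: the same layer parameters work for any number of samples. It is a plain NumPy stand-in rather than Keras code, and the function name `hidden_layer` and the ReLU activation are assumptions made for the example.

```python
import numpy as np

def hidden_layer(batch, weights, biases):
    """Apply a 10-neuron layer (ReLU activation assumed) to a batch of samples."""
    return np.maximum(batch @ weights + biases, 0.0)

rng = np.random.default_rng(0)
weights = rng.normal(size=(5, 10))  # 5 inputs, 10 neurons
biases = np.zeros(10)

# The batch size is not part of the layer; any number of samples works.
for batch_size in (1, 32, 30000):
    batch = rng.normal(size=(batch_size, 5))
    print(batch.shape, "->", hidden_layer(batch, weights, biases).shape)
```

Whatever replaces the first number in the input shape shows up unchanged in the output shape, which is what the None placeholder stands for.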

What is a (None,5,300)?

If you're saying that each word is a 300d vector, there are a few different ways to translate a word into that.

One of the common ways is to ask: how many words do you have in your dictionary? If you have a dictionary with 300 words, you can then make each word a vector with 300 elements, all zeros except for one of them.

- Say the word "hello" is the first word in your dictionary; its vector will be [1,0,0,0, ...., 0]

- Say the word "my" is the second word in your dictionary; its vector will be [0,1,0,0, ...., 0]

- And if the word "fly" is the last one in the dictionary, its vector will be [0,0,0,0, ...., 1]

You do this for your entire dictionary, and whenever you have to pass the word "hello" to your network, you will pass [1,0,0,0 ..., 0] instead.

A sentence with five words will then be an array with five of these arrays. This means a sentence with five words will be shaped as (5, 300). If you pass 30 thousand sentences as examples: (30000,5,300). In the model, "None" appears as the batch size: (None, 5, 300).
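The one-hot scheme described above can be sketched in NumPy as follows. The dictionary contents (apart from "hello", "my" and "fly"), the filler words `word0`, `word1`, ... and the helper `one_hot_sentence` are made up for illustration.

```python
import numpy as np

# Hypothetical 300-word dictionary: "hello" first, "my" second, "fly" last.
dictionary = ["hello", "my"] + [f"word{i}" for i in range(297)] + ["fly"]
position = {word: i for i, word in enumerate(dictionary)}

def one_hot_sentence(words):
    """Return an array of shape (len(words), len(dictionary))."""
    encoded = np.zeros((len(words), len(dictionary)))
    for row, word in enumerate(words):
        encoded[row, position[word]] = 1.0
    return encoded

sentence = one_hot_sentence(["hello", "my", "word0", "word1", "fly"])
print(sentence.shape)  # (5, 300)

# Several sentences of the same length stack into (examples, 5, 300).
batch = np.stack([sentence, sentence, sentence])
print(batch.shape)  # (3, 5, 300)
```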

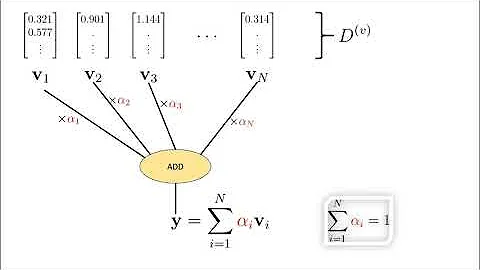

There are also other options, such as creating a word embedding, which will translate the words into vectors of meanings, meanings that only the network will understand. (There is the Embedding layer in Keras for that.)

There are also things called CBOW (continuous bag of words).

You have to know what you want to do first, so you can translate your words into some array that fits the network's requirements.

How many neurons do I have for an output of (None,5,300)?

This only tells you about the last layer. The outputs of the earlier layers were consumed and transformed by the layers that followed them, so they don't show up in the final shape. Each layer has its own output. (When you have a model, you can do a model.summary() and see the output of each layer.)

Even so, it's impossible to answer that question without knowing which types of layers you're using.

There are layers such as Dense that throw out things like (BatchSize,NumberOfNeurons).

But there are layers such as Convolution2D that throw out things like (BatchSize, numberOfChannels, pixelsInX, pixelsInY) when the channels come first (with the channels-last data format, the channel axis moves to the end). For instance, a regular image has three channels: red, green and blue. An array for passing a regular image would be like (3,sizeX,sizeY).
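As a small illustration of the image shapes, the NumPy sketch below builds a batch in the channels-first layout used above and converts it to a channels-last layout, which is the other ordering Keras supports. The 32x32 image size and the batch of 8 are arbitrary values chosen for the example.

```python
import numpy as np

# One RGB image in channels-first layout: (channels, sizeX, sizeY).
image = np.zeros((3, 32, 32))

# A batch of 8 such images: (BatchSize, numberOfChannels, pixelsInX, pixelsInY).
batch_channels_first = np.stack([image] * 8)
print(batch_channels_first.shape)  # (8, 3, 32, 32)

# The same data with the channel axis moved to the end.
batch_channels_last = np.transpose(batch_channels_first, (0, 2, 3, 1))
print(batch_channels_last.shape)  # (8, 32, 32, 3)
```

Which of the two orderings a convolution layer expects depends on how the library is configured, so it is worth checking the shape reported by model.summary().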

It all depends on which layer type you're using.

Using a word embedding

To use an embedding, it's worth reading the Keras documentation about it.

For that you will have to transform your words into indices.

Instead of saying that each word in your dictionary is a vector, you say it's a number.

- Word "hello" is 1

- Word "my" is 2

- Word "fly" is theSizeOfYourDictionary

If you want each sentence to have 100 words, then your input shape will be (None, 100), where each array of 100 numbers contains numbers representing the words in your dictionary.
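A possible way to build such index arrays is sketched below. The tiny dictionary, the helper `encode` and the choice of 0 as a padding value for short sentences are assumptions for illustration; the text above does not say how sentences shorter than 100 words should be handled.

```python
import numpy as np

# Hypothetical dictionary; indices start at 1 so that 0 can be used for padding
# (the padding convention is an assumption, not something the text specifies).
dictionary = ["hello", "my", "name", "is", "fly"]
word_to_index = {word: i for i, word in enumerate(dictionary, start=1)}

def encode(sentence, length=100):
    """Turn a sentence into exactly `length` word indices, padding with 0."""
    indices = [word_to_index[w] for w in sentence.split()][:length]
    return indices + [0] * (length - len(indices))

sentences = ["hello my name is fly", "hello fly"]
batch = np.array([encode(s) for s in sentences])

print(batch.shape)   # (2, 100)
print(batch[0, :6])  # [1 2 3 4 5 0]
```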

The first layer in your model will be an Embedding layer.

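from keras.models import Sequential
from keras.layers import Embedding

model = Sequential()

model.add(Embedding(theSizeOfYourDictionary + 1, 300, input_length=100))

This way, you're creating vectors of size 300 for each word, passing sequences of 100 words. The first argument is theSizeOfYourDictionary + 1 because the word indices above run from 1 to theSizeOfYourDictionary, and the embedding needs one entry for every index up to the largest one, counting from 0. (I'm not used to embeddings, but it seems 300 is a big number, it could be less).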

The output of this embedding will be (None, 100, 300).
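Conceptually, an embedding is a lookup table with one row per word index, and the shape change from (None, 100) to (None, 100, 300) is just that lookup. The NumPy sketch below imitates this; it is a stand-in, not the Keras implementation (in Keras the table holds trainable weights), and the dictionary size, batch size and random values are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

dictionary_size = 5125   # arbitrary example value
vector_size = 300
sentence_length = 100

# One row per possible index. Indices run from 1 to dictionary_size, so the
# table needs dictionary_size + 1 rows (row 0 is unused or used for padding).
table = rng.normal(size=(dictionary_size + 1, vector_size))

# A batch of 4 sentences, each a sequence of 100 word indices.
indices = rng.integers(1, dictionary_size + 1, size=(4, sentence_length))

# The lookup: each index is replaced by its row of the table.
embedded = table[indices]

print(indices.shape)   # (4, 100)
print(embedded.shape)  # (4, 100, 300)
```

Note that the dictionary size only determines how many rows the table has; it never appears in the input or output shape.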

Then you connect other layers after it.


Comments

-

null over 1 year

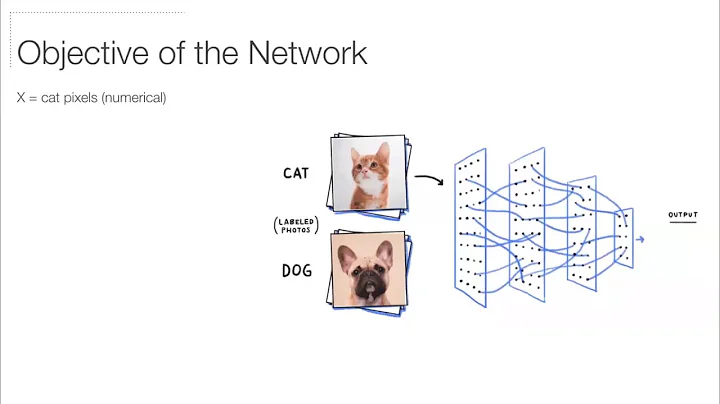

Let's take a fully-connected neural network with one hidden layer as an example. The input layer consists of 5 units that are each connected to all hidden neurons. In total there are 10 hidden neurons.

Libraries such as Theano and Tensorflow allow multidimensional input/output shapes. For example, we could use sentences of 5 words where each word is represented by a 300d vector.

How is such an input mapped on the described neural network? I do not understand what an output shape of (None, 5, 300) (just an example) means. In my imagination we just have a bunch of neurons through which single numbers flow.

When I have an output shape of (None, 5, 300), how many neurons do I have in the corresponding network? How do I connect the words to my neural network?

-

null almost 7 years

Thanks. When using word embeddings the input dimension equals the size of my dictionary. Currently, I am using pretrained embeddings by word2vec. The overall size of my dictionary is 5125. Doesn't this mean that the input layer has 5125 neurons (your first argument)? Each of these 5125 neurons is connected to 300 neurons of the next layer. But how could I connect a sentence with 300d words to a nn with 5125 input neurons?

-

Daniel Möller almost 7 years

No, your input is the size of your "sentence": the size of each example you want to give your network to train. The size of your dictionary, on the other hand, is a parameter for the embedding, so that it calculates things internally in a proper way.

-

Daniel Möller almost 7 years

Your input is (None, 100). The embedding will automatically transform it into (None, 100, 300), and the next layer you add to the model will have to adapt to this shape.

-

Daniel Möller almost 7 years

I suppose the embedding itself has 300 neurons, but I have no idea how it works internally....

-

Daniel Möller almost 7 years

But if your words are already vectors, you can't use an embedding. For an embedding, each word should be a number.
