Unique (non-repeating) random numbers in O(1)?

Solution 1

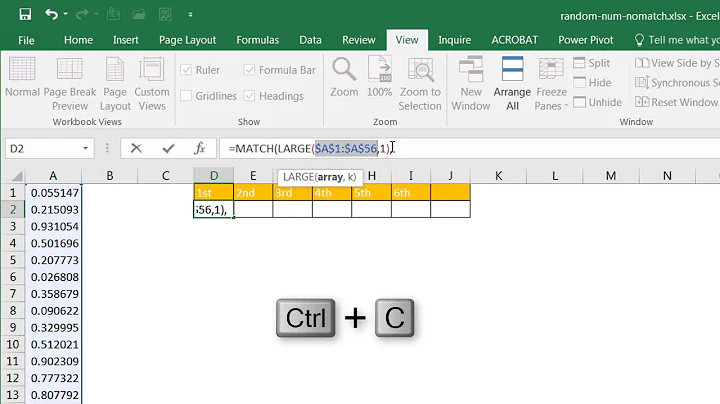

Initialize an array of 1001 integers with the values 0-1000 and set a variable, max, to the current max index of the array (starting with 1000). Pick a random number, r, between 0 and max, swap the number at the position r with the number at position max and return the number now at position max. Decrement max by 1 and continue. When max is 0, set max back to the size of the array - 1 and start again without the need to reinitialize the array.

Update: Although I came up with this method on my own when I answered the question, after some research I realize this is a modified version of Fisher-Yates known as Durstenfeld-Fisher-Yates or Knuth-Fisher-Yates. Since the description may be a little difficult to follow, I have provided an example below (using 11 elements instead of 1001):

Array starts off with 11 elements initialized to array[n] = n, max starts off at 10:

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 1| 2| 3| 4| 5| 6| 7| 8| 9|10|

+--+--+--+--+--+--+--+--+--+--+--+

^

max

At each iteration, a random number r is selected between 0 and max, array[r] and array[max] are swapped, the new array[max] is returned, and max is decremented:

max = 10, r = 3

+--------------------+

v v

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 1| 2|10| 4| 5| 6| 7| 8| 9| 3|

+--+--+--+--+--+--+--+--+--+--+--+

max = 9, r = 7

+-----+

v v

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 1| 2|10| 4| 5| 6| 9| 8| 7: 3|

+--+--+--+--+--+--+--+--+--+--+--+

max = 8, r = 1

+--------------------+

v v

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 8| 2|10| 4| 5| 6| 9| 1: 7| 3|

+--+--+--+--+--+--+--+--+--+--+--+

max = 7, r = 5

+-----+

v v

+--+--+--+--+--+--+--+--+--+--+--+

| 0| 8| 2|10| 4| 9| 6| 5: 1| 7| 3|

+--+--+--+--+--+--+--+--+--+--+--+

...

After 11 iterations, all numbers in the array have been selected, max == 0, and the array elements are shuffled:

+--+--+--+--+--+--+--+--+--+--+--+

| 4|10| 8| 6| 2| 0| 9| 5| 1| 7| 3|

+--+--+--+--+--+--+--+--+--+--+--+

At this point, max can be reset to 10 and the process can continue.

Solution 2

You can do this:

- Create a list, 0..1000.

- Shuffle the list. (See Fisher-Yates shuffle for a good way to do this.)

- Return numbers in order from the shuffled list.

So this doesn't require a search of old values each time, but it still requires O(N) for the initial shuffle. But as Nils pointed out in comments, this is amortised O(1).

Solution 3

Use a Maximal Linear Feedback Shift Register.

It's implementable in a few lines of C and at runtime does little more than a couple test/branches, a little addition and bit shifting. It's not random, but it fools most people.

Solution 4

You could use Format-Preserving Encryption to encrypt a counter. Your counter just goes from 0 upwards, and the encryption uses a key of your choice to turn it into a seemingly random value of whatever radix and width you want. E.g. for the example in this question: radix 10, width 3.

Block ciphers normally have a fixed block size of e.g. 64 or 128 bits. But Format-Preserving Encryption allows you to take a standard cipher like AES and make a smaller-width cipher, of whatever radix and width you want, with an algorithm which is still cryptographically robust.

It is guaranteed to never have collisions (because cryptographic algorithms create a 1:1 mapping). It is also reversible (a 2-way mapping), so you can take the resulting number and get back to the counter value you started with.

This technique doesn't need memory to store a shuffled array etc, which can be an advantage on systems with limited memory.

AES-FFX is one proposed standard method to achieve this. I've experimented with some basic Python code which is based on the AES-FFX idea, although not fully conformant--see Python code here. It can e.g. encrypt a counter to a random-looking 7-digit decimal number, or a 16-bit number. Here is an example of radix 10, width 3 (to give a number between 0 and 999 inclusive) as the question stated:

000 733

001 374

002 882

003 684

004 593

005 578

006 233

007 811

008 072

009 337

010 119

011 103

012 797

013 257

014 932

015 433

... ...

To get different non-repeating pseudo-random sequences, change the encryption key. Each encryption key produces a different non-repeating pseudo-random sequence.

Solution 5

You could use A Linear Congruential Generator. Where m (the modulus) would be the nearest prime bigger than 1000. When you get a number out of the range, just get the next one. The sequence will only repeat once all elements have occurred, and you don't have to use a table. Be aware of the disadvantages of this generator though (including lack of randomness).

Related videos on Youtube

Comments

-

dicroce over 3 years

I'd like to generate unique random numbers between 0 and 1000 that never repeat (i.e. 6 doesn't show up twice), but that doesn't resort to something like an O(N) search of previous values to do it. Is this possible?

-

jk. over 15 yearsIsn't this the same question as stackoverflow.com/questions/158716/…

-

Pete Kirkham over 15 yearsIs 0 between 0 and 1000?

-

jww over 9 yearsIf you are prohibiting anything over constant time (like

jww over 9 yearsIf you are prohibiting anything over constant time (likeO(n)in time or memory), then many of the answer below are wrong, including the accepted answer. -

Colonel Panic over 9 yearsHow would you shuffle a pack of cards?

-

ivan_pozdeev over 7 yearsYou cannot have

O(1)if there areNelements in the answer. So the requirements are unclear. -

ivan_pozdeev over 7 yearsWARNING! Many of the answers given below to not produce truly random sequences, are slower than O(n) or otherwise defective! codinghorror.com/blog/archives/001015.html is an essential read before you use any of them or try to concoct your own!

-

ivan_pozdeev over 7 yearsFlagging as an inferior duplicate as per meta.stackoverflow.com/questions/334325/…

-

-

Kirk Strauser over 15 yearsI disagree that it's amortized. The total algorithm is O(N) because of the shuffling. I guess you could say it's O(.001N) because each value only consumes 1/1000th of a O(N) shuffle, but that's still O(N) (albeit with a tiny coefficient).

Kirk Strauser over 15 yearsI disagree that it's amortized. The total algorithm is O(N) because of the shuffling. I guess you could say it's O(.001N) because each value only consumes 1/1000th of a O(N) shuffle, but that's still O(N) (albeit with a tiny coefficient). -

Guvante over 15 years@Just Some Guy N = 1000, so you are saying that it is O(N/N) which is O(1)

-

Mitch Wheat over 15 yearsIf the array doesn't start off shuffled (and not with the naive algorithm; see Jeff's post on shuffling), doesn't this introduce a subtle bias?

Mitch Wheat over 15 yearsIf the array doesn't start off shuffled (and not with the naive algorithm; see Jeff's post on shuffling), doesn't this introduce a subtle bias? -

B Bulfin over 15 yearsNo bias. This is just a spread-out version of the same algorithm.

-

pro over 15 yearsJeff's post on shuffling suggests this will not return good random numbers.. codinghorror.com/blog/archives/001015.html

-

mikeramos over 15 years@Peter Rounce: I think not; this looks to me like the Fisher Yates algorithm, also quoted in Jeff's post (as the good guy).

-

Kibbee over 15 yearsIf each insert into the shuffled array is an operation, then after inserting 1 value, you can get 1 random value. 2 for 2 values, and so on, n for n values. It takes n operations to generate the list, so the entire algorithm is O(n). If you need 1,000,000 random values, it will take 1,000,000 ops

-

Kibbee over 15 yearsThink about it this way, if it was constant time, it would take the same amount of time for 10 random numbers as it would for 10 billion. But due to the shuffling taking O(n), we know this is not true.

-

user2522201 over 15 years@Brad: I'm not really sure what you are talking about. What "initial hit" are you referring to and how will Perl6/Parrot avoid that exactly?

-

Pete Kirkham over 15 yearsAny method which requires you to initialise an array of size N with values is going to have an O(N) initialisation cost; moving the shuffle to the initialise or doing one iteration of the shuffle per request doesn't make any difference.

-

Aleksey Balenko about 15 years"It's not random, but it fools most people". That applies to all pseudo-random number generators and all feasible answers to this question. But most people won't think about it. So omitting this note would maybe result in more upvotes...

Aleksey Balenko about 15 years"It's not random, but it fools most people". That applies to all pseudo-random number generators and all feasible answers to this question. But most people won't think about it. So omitting this note would maybe result in more upvotes... -

starblue over 14 yearsThat would fool fewer people than an LFSR.

-

sellibitze almost 14 years"bitmask" within 512...1023 is OK, too. For a little more fake randomness see my answer. :-)

-

Charles over 13 yearsThis actually takes amortized time O(log n), since you need to generate n lg n random bits.

-

Charles over 13 yearsThe algorithm you describe takes O(n log n) time to generate the list, or O(log n) amortized time per element.

-

user2522201 over 13 years@Charles: How do you come up with that?

-

Charles over 13 years@Robert: Because you're generating a (lg n)-bit random number for each number on the list, and this takes Omega(log n) time.

-

user2522201 over 13 years@Charles: I think your logic is faulty, it may take O(n) time to generate the original list of numbers if you do not already have this set up but it takes O(1) time to generate each number from the list which is that was being asked for.

-

Charles over 13 years@Robert: This method does not generate the list in O(n), it takes O(n log n). I can't imagine that the request meant that we're allowed infinite pre-processing time, because in that case any method works -- just do whatever you need to do, then generate each item and store it in a table. It's clear to me that the time must include the upfront cost, which is log n per element. (Actually, the algorithm can be modified to generate each element in O(log n) time rather than O(log n) amortized time.)

-

user2522201 over 13 years@Charles: The initial list takes O(n) time to initialize (it takes twice as long to initialize 2 integers of the same size as it does initialize one such integer). The time that the random number generation takes depends on the implementation. Once you have the random number it takes constant time to execute the algorithm I presented. The OP asked for something "that doesn't resort to something like an O(N) search of previous values to do it" which indicates that the O(1) part should be the selection. Since this is the accepted answer, I think it met the OP's requirements.

-

Charles over 13 years@Robert: The method requires Theta(n log n) random bits: actually, precisely lg n! bits. Are you claiming that you can generate Theta(n log n) bits in time O(n)?

-

user2522201 over 13 years@Charles, the claim is what I put in my answer. Read the question and my answer, if you feel you have a better answer you are more than free to post it.

-

Charles over 13 years@robert: I just wanted to point out that it doesn't produce, as in the name of the question, "unique random numbers in O(1)".

-

user2522201 over 13 years@Charles: Fair enough, although nobody ever said it did and the the question elaborates pretty well on what was desired which should prevent confusion although I guess the title could be updated to better reflect the question that was actually asked and answered.

-

Charles over 13 years@Robert: Yep. (In fairness, I didn't claim that anyone claimed this was O(1) -- I just pointed out the complexity, since it hadn't been mentioned up to that point.)

-

mikera over 13 years@Charles/Robert - assuming you are dealing with fixed size 32-bit machine integers (which is a reasonable assumption given the question and scales of integers discussed) then you only need at most 32 random bits per sample. Generating that is an O(1) operation, hence this should be regarded as an O(1) algorithm for practical (as opposed to theoretical) purposes.

-

Charles over 13 years@mikera: Agreed, although technically if you're using fixed-size integers the whole list can be generated in O(1) (with a large constant, viz. 2^32). Also, for practical purposes, the definition of "random" is important -- if you really want to use your system's entropy pool, the limit is the computation of the random bits rather than calculations themselves, and in that case n log n is relevant again. But in the likely case that you'll use (the equivalent of) /dev/urandom rather than /dev/random, you're back to 'practically' O(n).

-

Seph over 12 yearsI'm a little confused, wouldn't the fact that you have to perform

Niterations (11 in this example) to get the desired result each time mean it'sO(n)? As you need to to doNiterations to getN!combinations from the same initial state, otherwise your output will only be one of N states. -

Nuoji about 11 years@Phob No that won't happen, because a 10 bit PRNG based on a Linear Feedback Shift Register is typically made from a construct that assumes all values (except one) once, before returning to the first value. In other words, it will only pick 1001 exactly once during a cycle.

-

bobobobo about 11 yearsWhy fake it when you can do it correctly?

bobobobo about 11 yearsWhy fake it when you can do it correctly? -

Nuoji about 11 years@Phob the whole point of this question is to select each number exactly once. And then you complain that 1001 won't occur twice in a row? A LFSR with an optimal spread will traverse all numbers in its space in a pseudo random fashion, then restart the cycle. In other words, it is not used as a usual random function. When used as a random, we typically only use a subset of the bits. Read a bit about it and it'll soon make sense.

-

Ash about 11 years@bobobobo: O(1) memory is why.

-

bobobobo about 11 yearsTo save 4KB of RAM? For this particular problem, I see no reason to fake it. Other problems maybe.

bobobobo about 11 yearsTo save 4KB of RAM? For this particular problem, I see no reason to fake it. Other problems maybe. -

Paul Hankin about 11 yearsNit: it's O(log N) memory.

Paul Hankin about 11 yearsNit: it's O(log N) memory. -

C. K. Young over 10 yearsI've been tempted for years to amend @AdamRosenfield's edit to say "amortised" instead of "amortized", but I can't bring myself to make such a small edit with no other changes, especially since said edit is 5 years old. Still, I should at least state so for the record.

C. K. Young over 10 yearsI've been tempted for years to amend @AdamRosenfield's edit to say "amortised" instead of "amortized", but I can't bring myself to make such a small edit with no other changes, especially since said edit is 5 years old. Still, I should at least state so for the record. -

gbarry about 10 yearsClearly, this is the Gamble-Durstenfeld-Fisher-Yates algorithm :)

-

C. K. Young almost 10 yearsAnd now, I have all the justification to do it! meta.stackoverflow.com/q/252503/13

C. K. Young almost 10 yearsAnd now, I have all the justification to do it! meta.stackoverflow.com/q/252503/13 -

Teepeemm almost 10 years1009 is the first prime after 1000.

-

tigrou almost 8 yearsUsing that method, how do you generate numbers let's say between 0 and 800000 ? Some might use a LFSR which period is 1048575 (2^20 - 1) and get next one if number is out of range but this won't be efficient.

-

Exectron over 7 yearsCould that method work for a decimal value, e.g. scrambling a 3-digit decimal counter to always have a 3-digit decimal result?

-

ivan_pozdeev over 7 yearsIt's completely unnecessary to perform all the "11 iterations" before starting over. So, the method is strictly inferior to Fisher-Yates with no gain. Just the thing Jeff warned about in his blog as pointed out by pro.

-

ivan_pozdeev over 7 yearsAn LCG has high correlation between consecutive numbers, thus combinations will not be quite random at large (e.g. numbers farther than

kapart in the sequence can never occur together). -

ivan_pozdeev over 7 yearsThis is essentially a simple mapping, thus not any different from LCG and LFSR, with all the relevant kinks (e.g. values more than

kapart in the sequence can never occur together). -

ivan_pozdeev over 7 yearsAs an example of Xorshift algorithm, it's an LFSR, with all related kinks (e.g. values more than

kapart in the sequence can never occur together). -

ivan_pozdeev over 7 yearsThis one is O(k^2), what with a number of additional steps proportional on average to the number of values selected so far.

-

ivan_pozdeev over 7 yearsEssentially equivalent to stackoverflow.com/a/16097246/648265, also fails randomness for sequences.

-

ivan_pozdeev over 7 yearsAs an LFSR, this doesn't produce uniformly distributed sequences: the entire sequence that would be generated is defined by the first element.

-

ivan_pozdeev over 7 yearsO(n^2), as the number of retries is proportional on average to the number of elements selected so far.

-

ivan_pozdeev over 7 yearsIt's not necessary to keep 2 lists - or exhaust a list before staring over. Fisher-Yates gives uniformly random results from any initial state. See stackoverflow.com/a/158742/648265 for explanation.

-

Exectron over 7 years@ivan_pozdeev: I'm having difficulty understanding the meaning of your comment. Can you explain what is wrong with this mapping, what are "all the relevant kinks", and what is

k? -

Khaled.K over 7 years@ivan_pozdeev Yes, it's the same result, but my idea here is to make it amortized O(1) by making the shuffle part of the drawing action.

-

ivan_pozdeev over 7 yearsYou didn't understand. You don't need to reset the list at all before shuffling again. Shuffling

[1,3,4,5,2]will produce the same result as shuffling[1,2,3,4,5]. -

ivan_pozdeev over 7 yearsAll the "encryption" effectively does here is replace the sequence

1,2,...,Nwith a sequence of the same numbers in some other, but still constant, order. The numbers are then pulled from this sequence one by one.kis the number of values picked (the OP didn't specify a letter for it so I had to introduce one). -

ivan_pozdeev over 7 yearsSince the sequence is constant and every number in it is unique, the combination returned is fully defined by the first number. Thus it's not fully random - only a small subset of possible combinations can be generated.

-

ivan_pozdeev over 7 yearsLFSRs and LCGs have the same property, so their other defects related to it also apply.

-

ivan_pozdeev over 7 yearsThe only problem is that a given LFSR has only one sequence, thus giving strong correlation between the picked numbers - in particular, not generating every possible combination.

-

Exectron over 7 yearsI see. However, in the case of format-preserving encryption, you can obtain a different "random" sequence for every chosen encryption key. WIth a 128-bit or 256-bit key, that gives you plenty of possible sequences. For any chosen key, the generated sequence is guaranteed to be the complete set of numbers effectively shuffled.

-

Exectron over 7 yearsRegarding the "values more than

kapart" consideration — you just pick a suitable radixrand widthwto suit your needs to getk = rʷ, and then generate as manynvalues as you want, wheren ≤ k. For example, in my answer I showed how to get 16 non-repeating numbers in the range 0..999. -

Erez Robinson over 7 yearsThink about it, if you select min=0 max=10000000 and N=5, retries ~=0 no matter how many selected. But yes you have a point that if max-min is small, o(N) breaks up.

-

ivan_pozdeev over 7 yearsIf N<<(max-min) then it's still proportional, it's just the coefficient is very small. And coefficients don't matter for an asymptotic estimate.

-

ivan_pozdeev over 7 yearsOf course, the deficiencies of a constant-sequence PRNG (strong correlation between results) only manifest themselves when you use the same setup more than once. If you use it once and never again, it's random alright. But generating a new setup each time (randomly, with a better RNG!) pretty much defeats the purpose of building one's own PRNG.

-

ivan_pozdeev over 7 yearsThat's because the "key" roughly equals to the same amount of "good" random numbers (must have

N!possible values to cover all possible sequences) in addition to all the extra work. -

ivan_pozdeev over 7 yearsSame as stackoverflow.com/a/16097246/648265, fails randomness for sequences just the same.

-

ivan_pozdeev over 7 yearsSince this generates combinations rather than permutations, it's more appropriate for stackoverflow.com/questions/2394246/…

-

ivan_pozdeev over 7 yearsTesting shows this has a bias towards lower numbers: the measured probabilities for 2M samples with

(top,n)=(100,10)are :(0.01047705, 0.01044825, 0.01041225, ..., 0.0088324, 0.008723, 0.00863635). I tested in Python, so slight differences in math might play a role here (I did make sure all operations for calculatingrare floating-point). -

ivan_pozdeev over 7 yearsThis is an LCG, like stackoverflow.com/a/196164/648265, non-random for sequences as well as other related kinks just the same.

-

sh1 over 7 years@ivan_pozdeev mine's better than an LCG because it ensures that it won't return a duplicate on the 1001st call.

-

sh1 over 7 years@ivan_pozdeev It's not the case that FPE must implement a specific static mapping, or that "the combination returned is fully defined by the first number". Since the configuration parameter is much larger than the size of the first number (which has only a thousand states), there should be multiple sequences that start with the same initial value and then proceed to different subsequent values. Any realistic generator is going to fail to cover the entire possible space of permutations; it's not worth raising that failure mode when the OP didn't ask for it.

-

Ilmari Karonen over 7 years+1. When implemented correctly, using a secure block cipher with a key chosen uniformly at random, the sequences generated using this method will be computationally indistinguishable from a true random shuffle. That is to say, there is no way to distinguish the output of this method from a true random shuffle significantly faster than by testing all possible block cipher keys and seeing if any of them generates the same output. For a cipher with a 128-bit keyspace, this is probably beyond the computing power currently available to mankind; with 256-bit keys, it will probably forever remain so.

Ilmari Karonen over 7 years+1. When implemented correctly, using a secure block cipher with a key chosen uniformly at random, the sequences generated using this method will be computationally indistinguishable from a true random shuffle. That is to say, there is no way to distinguish the output of this method from a true random shuffle significantly faster than by testing all possible block cipher keys and seeing if any of them generates the same output. For a cipher with a 128-bit keyspace, this is probably beyond the computing power currently available to mankind; with 256-bit keys, it will probably forever remain so. -

Ilmari Karonen over 7 years@ivan_pozdeev: In theory, assuming infinite computing power, yes. However, assuming that

Ilmari Karonen over 7 years@ivan_pozdeev: In theory, assuming infinite computing power, yes. However, assuming thathash(), as used in the code above, is a secure pseudorandom function, this construction will provably (Luby & Rackoff, 1988) yield a pseudorandom permutation, which cannot be distinguished from a true random shuffle using significantly less effort than an exhaustive search of the entire key space, which is exponential in the key length. Even for reasonably sized keys (say, 128 bits), this is beyond the total computing power available on Earth. -

Ilmari Karonen over 7 years(BTW, just to make this argument a bit more rigorous, I'd prefer to replace the ad hoc

Ilmari Karonen over 7 years(BTW, just to make this argument a bit more rigorous, I'd prefer to replace the ad hochash( key + "/" + int2str(temp) )construction above with HMAC, whose security in turn can be provably reduced to that of the underlying hash compression function. Also, using HMAC might make it less likely for someone to mistakenly try to use this construction with an insecure non-crypto hash function.) -

salva over 7 yearsYes, in order for this method to work correctly, the upper limit must be much bigger than the number of values to be extracted.

-

ivan_pozdeev over 7 years@IlmariKaronen Still, the answer is incomplete without specifying that one needs a new random key for each permutation, some points on its required length and the "doesn't generate all possible permutations but indistinguishable" speech.

-

Max Abramovich over 7 yearsm should be the number of elements 1001 (1000 + 1 for zero) and you may use Next = (1002 * Current + 757) mod 1001;

-

paparazzo about 7 yearsThis is not O(n). Each time the set contains the value this is and extra loop.

paparazzo about 7 yearsThis is not O(n). Each time the set contains the value this is and extra loop. -

paparazzo about 7 yearsThere is already an answer with this but it is fairly long winded and does not recognize you can stop at 1 (not 0)

paparazzo about 7 yearsThere is already an answer with this but it is fairly long winded and does not recognize you can stop at 1 (not 0) -

tactoth over 6 yearsMaybe this is not exactly the asker wants, but it's what I'm looking for ;)

-

ivan_pozdeev over 6 yearsIt won't work "correctly" even if "the upper limit [is] much bigger than the number of values". The probabilities will still be uneven, just by a lesser margin.

-

Mac almost 6 yearsI have no idea if this can actually meet the OPs needs, but props for a COBOL contribution!

-

Emanuel Landeholm about 5 yearsGuys, please! The Fisher-Yates method is broken. You select the first one with probability 1/N and the second one with probability 1/(N-1) != 1/N. This is a biased sampling method! You really need the Vittter's algorithm to resolve the bias.

-

Pablo H over 2 yearsI think this is a great idea. On the other hand, it is not clear to me that the resulting distribution (in the random variable sense) is uniform. For some applications at least, a uniform distribution of permutations (i.e. each permutation has equal probability) is desired/required. Also, if there're more permutations than keys, some are not generated. If there're more keys than permutations, some are repeated.

-

Exectron over 2 years@PabloH Statistically it is a uniform distribution, as good-quality cryptographic algorithms are.