Using awk to remove the Byte-order mark

Solution 1

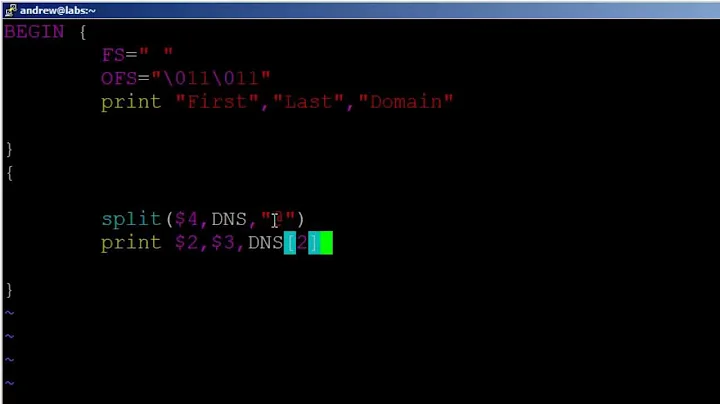

Try this:

awk 'NR==1{sub(/^\xef\xbb\xbf/,"")}{print}' INFILE > OUTFILE

On the first record (line), remove the BOM characters. Print every record.

Or slightly shorter, using the knowledge that the default action in awk is to print the record:

awk 'NR==1{sub(/^\xef\xbb\xbf/,"")}1' INFILE > OUTFILE

1 is the shortest condition that always evaluates to true, so each record is printed.
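The \x escapes used above may not be understood by every awk implementation. A minimal sketch of a more portable variant follows, assuming an awk that accepts octal escapes in regular expressions (\357\273\277 are the same three bytes as EF BB BF). The function name strip_utf8_bom is made up for illustration, and LC_ALL=C is set so that the pattern is matched byte by byte:

```shell
# strip_utf8_bom: hypothetical helper, reads stdin and writes stdout.
# Removes a leading UTF-8 BOM (octal 357 273 277 = hex EF BB BF) from line 1.
strip_utf8_bom() {
    LC_ALL=C awk 'NR==1{sub(/^\357\273\277/,"")}1'
}

# Demo: feed two lines, the first one prefixed with a BOM.
printf '\357\273\277hello\nworld\n' | strip_utf8_bom
```

Input without a BOM passes through unchanged, because sub() simply finds nothing to replace.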

Enjoy!

-- ADDENDUM --

The Unicode Byte Order Mark (BOM) FAQ includes the following table listing the exact BOM bytes for each encoding:

Bytes       | Encoding Form
--------------------------------------
00 00 FE FF | UTF-32, big-endian
FF FE 00 00 | UTF-32, little-endian
FE FF       | UTF-16, big-endian
FF FE       | UTF-16, little-endian
EF BB BF    | UTF-8

Thus, you can see how \xef\xbb\xbf corresponds to the EF BB BF bytes of the UTF-8 BOM in the table above.
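The byte sequences listed in the FAQ table can also be used to check which BOM, if any, a file starts with. This is only a sketch: detect_bom is a hypothetical name, and it assumes head -c and od are available. Note that FF FE 00 00 is ambiguous in principle (it could also be a UTF-16 little-endian BOM followed by a NUL character); the sketch treats it as UTF-32.

```shell
# detect_bom FILE: hypothetical helper that prints the encoding form
# implied by the first bytes of FILE, following the FAQ table.
detect_bom() {
    # First four bytes as lowercase hex without separators, e.g. "efbbbf68".
    head4=$(head -c 4 "$1" | od -An -tx1 | tr -d ' \n')
    case "$head4" in
        0000feff) echo "UTF-32, big-endian" ;;
        fffe0000) echo "UTF-32, little-endian" ;;  # tested before the UTF-16 pattern
        feff*)    echo "UTF-16, big-endian" ;;
        fffe*)    echo "UTF-16, little-endian" ;;
        efbbbf*)  echo "UTF-8" ;;
        *)        echo "no BOM" ;;
    esac
}
```

Calling detect_bom INFILE before running the awk command shows whether the \xef\xbb\xbf pattern can match at all.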

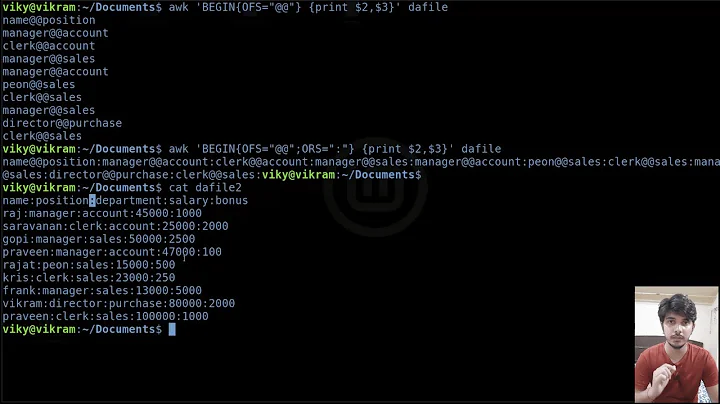

Solution 2

Using GNU sed (on Linux or Cygwin):

# Removing BOM from all text files in current directory:

sed -i '1 s/^\xef\xbb\xbf//' *.txt

On FreeBSD:

sed -i .bak '1 s/^\xef\xbb\xbf//' *.txt

Advantage of using GNU or FreeBSD sed: the -i parameter means "in place", and will update files without the need for redirections or weird tricks.

On Mac:

The awk solution in the other answer works, but the sed command above does not. At least on macOS Sierra, the sed documentation does not mention support for hexadecimal escapes such as \xef.

In-place editing can be achieved with any program by piping its output to the sponge tool from moreutils:

awk '…' INFILE | sponge INFILE
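Where neither an in-place sed nor sponge is available, a temporary file gives the same effect. The following is a sketch under assumptions: strip_bom_in_place is a hypothetical name, mktemp is assumed to exist, and the awk program uses octal escapes (\357\273\277 = EF BB BF) rather than \x escapes for portability.

```shell
# strip_bom_in_place FILE: hypothetical helper that rewrites FILE without
# a leading UTF-8 BOM, going through a temporary file.
strip_bom_in_place() {
    tmp=$(mktemp) || return 1
    # Write the cleaned copy first; touch FILE only if awk succeeded.
    if LC_ALL=C awk 'NR==1{sub(/^\357\273\277/,"")}1' "$1" > "$tmp"; then
        cat "$tmp" > "$1"   # overwrite the contents, keeping FILE's permissions
    fi
    rm -f "$tmp"
}
```

Unlike redirecting awk's output straight back onto its input, this never truncates the file before it has been read.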

Solution 3

Not awk, but simpler:

tail -c +4 UTF8 > UTF8.nobom

To check for BOM:

hd -n 3 UTF8

If BOM is present you'll see: 00000000 ef bb bf ...
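Note that tail -c +4 drops the first three bytes unconditionally, so it also damages files that have no BOM. The check and the removal can be combined; this is a sketch with a hypothetical function name, using head -c and od instead of hd because hd is not available everywhere:

```shell
# strip_bom_if_present FILE: hypothetical helper that writes FILE to stdout,
# skipping the first three bytes only when they are the UTF-8 BOM.
strip_bom_if_present() {
    if [ "$(head -c 3 "$1" | od -An -tx1 | tr -d ' \n')" = "efbbbf" ]; then
        tail -c +4 "$1"   # start output at byte 4, i.e. right after EF BB BF
    else
        cat "$1"          # no BOM: pass the file through untouched
    fi
}
```

Usage mirrors the original command: strip_bom_if_present UTF8 > UTF8.nobom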

Solution 4

In addition to converting CRLF line endings to LF, dos2unix also removes BOMs:

dos2unix *.txt

dos2unix also converts UTF-16 files with a BOM (but not UTF-16 files without a BOM) to UTF-8 without a BOM:

$ printf '\ufeffä\n'|iconv -f utf-8 -t utf-16be>bom-utf16be

$ printf '\ufeffä\n'|iconv -f utf-8 -t utf-16le>bom-utf16le

$ printf '\ufeffä\n'>bom-utf8

$ printf 'ä\n'|iconv -f utf-8 -t utf-16be>utf16be

$ printf 'ä\n'|iconv -f utf-8 -t utf-16le>utf16le

$ printf 'ä\n'>utf8

$ for f in *;do printf '%11s %s\n' $f $(xxd -p $f);done

bom-utf16be feff00e4000a

bom-utf16le fffee4000a00

bom-utf8 efbbbfc3a40a

utf16be 00e4000a

utf16le e4000a00

utf8 c3a40a

$ dos2unix -q *

$ for f in *;do printf '%11s %s\n' $f $(xxd -p $f);done

bom-utf16be c3a40a

bom-utf16le c3a40a

bom-utf8 c3a40a

utf16be 00e4000a

utf16le e4000a00

utf8 c3a40a
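Where dos2unix is not installed, iconv (already used in the transcript above) may cover the UTF-16-with-BOM case. This is a sketch resting on one assumption: that the local iconv's generic UTF-16 decoder reads the BOM to pick the byte order and does not pass it on, which is how common implementations behave. The function name is hypothetical.

```shell
# utf16_to_utf8: hypothetical helper, reads UTF-16 with a BOM on stdin
# and writes UTF-8 on stdout. Assumes an iconv whose generic "UTF-16"
# decoder uses the BOM to choose the byte order and does not emit it.
utf16_to_utf8() {
    iconv -f UTF-16 -t UTF-8
}

# Demo: the bytes FE FF 00 E4 00 0A are a big-endian BOM, "ä", newline.
printf '\376\377\000\344\000\012' | utf16_to_utf8 | od -An -tx1
```

Unlike dos2unix, this does not detect the encoding of each file by itself, so it should only be given input known to be UTF-16.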

Solution 5

I know the question was directed at Unix/Linux, but I thought it would be worth mentioning a good option for the Unix-challenged (on Windows, with a UI).

I ran into the same issue on a WordPress project (a BOM was causing problems with the RSS feed and page validation), and I had to look through all the files in a fairly large directory tree to find the ones that had a BOM. I found an application called Replace Pioneer, and in it:

Batch Runner -> Search (to find all the files in the subfolders) -> Replace Template -> Binary remove BOM (there is a ready made search and replace template for this).

It was not the most elegant solution, and it did require installing a program, which is a downside. But once I found my way around it, it worked like a charm (and found 3 files out of about 2300 that had a BOM).


Boldewyn


Updated on July 08, 2022

Comments

-

Boldewyn almost 2 years

What would an awk script (presumably a one-liner) for removing a BOM look like?

Specification:

- print every line after the first (NR > 1)
- for the first line: if it starts with #FE #FF or #FF #FE, remove those and print the rest

-

Boldewyn almost 15 years

It seems that the dot in the middle of the sub statement is too much (at least, my awk complains about it). Besides that, it's exactly what I was looking for, thanks!

-

Boldewyn almost 15 years

This solution, however, works only for UTF-8 encoded files. For others, like UTF-16, see Wikipedia for the corresponding BOM representation: en.wikipedia.org/wiki/Byte_order_mark

-

Brandon Rhodes over 14 years

I agree with the earlier comment; the dot does not belong in the middle of this statement and makes this otherwise great little snippet an example of an awk syntax error.

-

Axel M. Garcia about 14 years

So:

awk '{if(NR==1)sub(/^\xef\xbb\xbf/,"");print}' INFILE > OUTFILE

and make sure INFILE and OUTFILE are different!

-

tchrist over 12 years

BOMs are 2 bytes for UTF-16 and 4 bytes for UTF-32, and of course have no business being in UTF-8 in the first place.

-

tchrist over 12 years

If you used

perl -i.orig -pe 's/^\x{FEFF}//' badfile

you could rely on your PERL_UNICODE and/or PERLIO envariables for the encoding. PERL_UNICODE=SD would work for UTF-8; for the others, you’d need PERLIO.

-

Karoly Horvath about 12 years

@tchrist: from Wikipedia: "The Unicode Standard does permit the BOM in UTF-8, but does not require or recommend its use. Byte order has no meaning in UTF-8 so in UTF-8 the BOM serves only to identify a text stream or file as UTF-8."

-

tchrist about 12 years

@KarolyHorvath Yes, precisely. Its use is not recommended. It breaks stuff. The encoding should be specified by a higher-level protocol.

-

Karoly Horvath about 12 years

@tchrist: you mean it breaks broken stuff? :) Proper apps should be able to handle that BOM.

-

tchrist about 12 years

@KarolyHorvath I mean it breaks lots of programs. Isn’t that what I said? When you open a stream in the UTF-16 or UTF-32 encodings, the decoder knows not to count the BOM. When you use UTF-8, decoders present the BOM as data. This is a syntax error in innumerable programs. Even Java’s decoder behaves this way, BY DESIGN! BOMs on UTF-8 files are misplaced and a pain in the butt: they are an error! They break many things. Even just

cat file1.utf8 file2.utf8 file3.utf8 > allfiles.utf8

will be broken. Never use a BOM on UTF-8. Period.

-

Karoly Horvath about 12 years

@tchrist: that's what you said... and I said something else. BTW, Java's decoder isn't broken by design. It's a bug, and they kept it for backward compatibility.

-

mklement0 over 11 years

hd is not available on OS X (as of 10.8.2), so to check for a UTF-8 BOM there, you can use the following:

head -c 3 file | od -t x1

-

Hakanai over 11 years

I tried the second command precisely on Mac OS X and the result was "success", but the substitution didn't actually occur.

-

Denilson Sá Maia over 11 years

It is worth noting these commands replace one specific byte sequence, which is one of the possible byte-order marks. Maybe your file had a different BOM sequence. (I can't help other than that, as I don't have a Mac.)

-

TrueY almost 11 years

Maybe a little bit shorter version:

awk 'NR==1{sub(/^\xef\xbb\xbf/,"")}1'

-

Benoit Duffez over 9 years

if [[ "$(file a.txt | grep -o 'with BOM')" == "with BOM" ]];

can also be used.

-

Hoàng Long almost 9 years

I was so happy when I found your solution; however, I don't have the privilege to install software on my company computer. It took a lot of time today until I figured out the alternative: using Notepad++ with the PythonScript plugin. superuser.com/questions/418515/… Thanks anyway!

-

John Wiseman over 8 years

When I tried the second command on OS X on a file that used 0xef 0xbb 0xbf as the BOM, it did not actually do the substitution.

-

Seldom 'Where's Monica' Needy almost 8 years

hexdump and xxd should work in place of hd, if that's not available on your system.

-

Ian over 7 years

In OS X, I could only get this to work via perl, as shown here: stackoverflow.com/a/9101056/2063546

-

user963601 over 7 years

Works great on OS X El Capitan 10.11.6.

-

user963601 over 7 years

On OS X El Capitan 10.11.6, this doesn't work, but the official answer stackoverflow.com/a/1068700/9636 works fine.

-

OroshiX almost 6 years

/!\ Both commands erased my file, as a "side-effect" of changing the encoding... Quite fortunate to have had them backed up first.

-

Blayzeing over 3 years

If you're trying to just change the file (not create a new one) and for some reason can't use sed (as per the answer below), make sure to use -i inplace and not put the input file as the output file, which will erase the file!