Using tryCatch and rvest to deal with 404 and other crawling errors

Solution 1

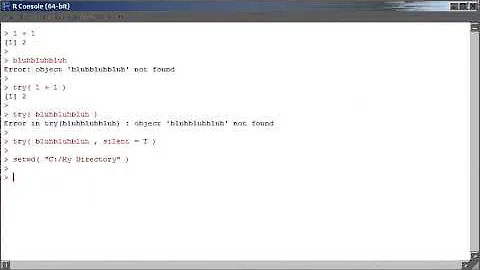

You're looking for try or tryCatch, which are how R handles error catching.

With try, you just need to wrap the thing that might fail in try(), and it will return the error and keep running:

library(rvest)

sapply(Data$Pages, function(url){

try(

url %>%

as.character() %>%

read_html() %>%

html_nodes('h1') %>%

html_text()

)

})

# [1] "'Spam King' Sanford Wallace gets 2.5 years in prison for 27 million Facebook scam messages"

# [2] "OMG, this Japanese Trump Commercial is everything"

# [3] "Omar Mateen posted to Facebook during Orlando mass shooting"

# [4] "Error in open.connection(x, \"rb\") : HTTP error 404.\n"

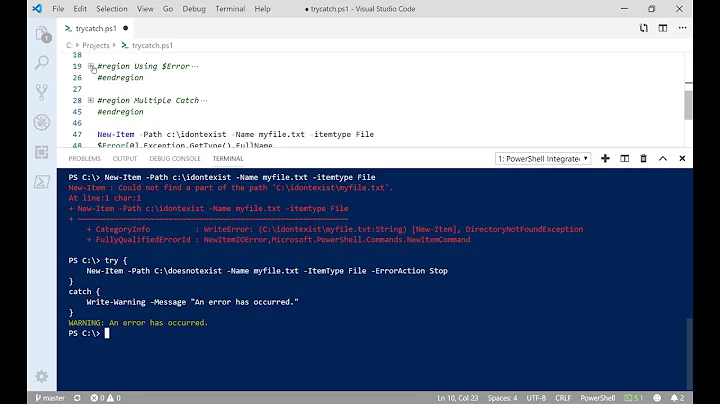

However, while that will get everything, it will also insert bad data into our results. tryCatch allows you to configure what happens when an error is called by passing it a function to run when that condition arises:

sapply(Data$Pages, function(url){

tryCatch(

url %>%

as.character() %>%

read_html() %>%

html_nodes('h1') %>%

html_text(),

error = function(e){NA} # a function that returns NA regardless of what it's passed

)

})

# [1] "'Spam King' Sanford Wallace gets 2.5 years in prison for 27 million Facebook scam messages"

# [2] "OMG, this Japanese Trump Commercial is everything"

# [3] "Omar Mateen posted to Facebook during Orlando mass shooting"

# [4] NA

There we go; much better.

Update

In the tidyverse, the purrr package offers two functions, safely and possibly, which work like try and tryCatch. They are adverbs, not verbs, meaning they take a function, modify it so as to handle errors, and return a new function (not a data object) which can then be called. Example:

library(tidyverse)

library(rvest)

df <- Data %>% rowwise() %>% # Evaluate each row (URL) separately

mutate(Pages = as.character(Pages), # Convert factors to character for read_html

title = possibly(~.x %>% read_html() %>% # Try to take a URL, read it,

html_nodes('h1') %>% # select header nodes,

html_text(), # and collect text inside.

NA)(Pages)) # If error, return NA. Call modified function on URLs.

df %>% select(title)

## Source: local data frame [4 x 1]

## Groups: <by row>

##

## # A tibble: 4 × 1

## title

## <chr>

## 1 'Spam King' Sanford Wallace gets 2.5 years in prison for 27 million Facebook scam messages

## 2 OMG, this Japanese Trump Commercial is everything

## 3 Omar Mateen posted to Facebook during Orlando mass shooting

## 4 <NA>

Solution 2

You can see this Question for explanation here

urls<-c(

"http://boingboing.net/2016/06/16/spam-king-sanford-wallace.html",

"http://boingboing.net/2016/06/16/omg-the-japanese-trump-commer.html",

"http://boingboing.net/2016/06/16/omar-mateen-posted-to-facebook.html",

"http://boingboing.net/2016/06/16/omar-mateen-posted-to-facdddebook.html")

readUrl <- function(url) {

out <- tryCatch(

{

message("This is the 'try' part")

url %>% as.character() %>% read_html() %>% html_nodes('h1') %>% html_text()

},

error=function(cond) {

message(paste("URL does not seem to exist:", url))

message("Here's the original error message:")

message(cond)

return(NA)

}

}

)

return(out)

}

y <- lapply(urls, readUrl)

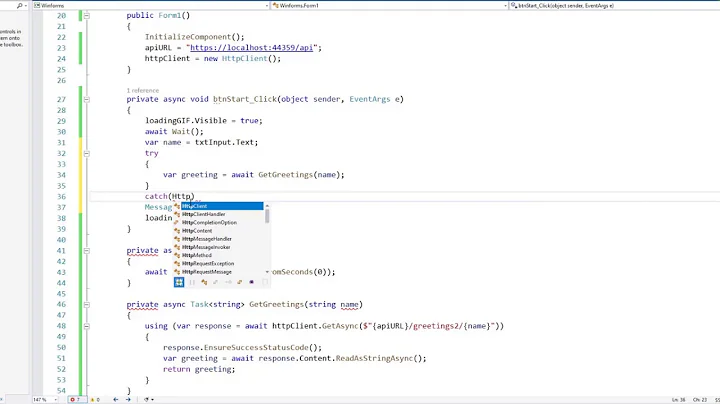

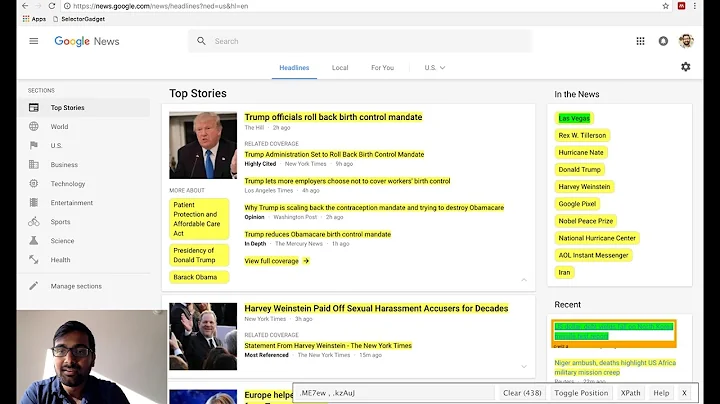

Related videos on Youtube

Blas

Updated on June 04, 2022Comments

-

Blas almost 2 years

When retrieving the h1 title using

rvest, I sometimes run into 404 pages. This stop the process and returns this error.Error in open.connection(x, "rb") : HTTP error 404.

See the example below

Data<-data.frame(Pages=c( "http://boingboing.net/2016/06/16/spam-king-sanford-wallace.html", "http://boingboing.net/2016/06/16/omg-the-japanese-trump-commer.html", "http://boingboing.net/2016/06/16/omar-mateen-posted-to-facebook.html", "http://boingboing.net/2016/06/16/omar-mateen-posted-to-facdddebook.html"))Code used to retrieve h1

library (rvest) sapply(Data$Pages, function(url){ url %>% as.character() %>% read_html() %>% html_nodes('h1') %>% html_text() })Is there a way to include an argument to ignore errors and continue the process ?