UTF8 to/from wide char conversion in STL

Solution 1

I asked this question 5 years ago. This thread was very helpful to me back then; I came to a conclusion and moved on with my project. Funnily enough, I recently needed something similar for a completely unrelated project, and while researching possible solutions I stumbled upon my own question :)

The solution I chose this time is based on C++11. The Boost libraries that Constantin mentions in his answer are now part of the standard. If we replace std::wstring with the new string type std::u16string, then the conversions look like this:

UTF-8 to UTF-16

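    #include <codecvt>  // std::codecvt_utf8_utf16
    #include <locale>   // std::wstring_convert
    #include <string>

    std::string source;
    ...
    std::wstring_convert<std::codecvt_utf8_utf16<char16_t>, char16_t> convert;
    std::u16string dest = convert.from_bytes(source);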

UTF-16 to UTF-8

    std::u16string source;
    ...
    std::wstring_convert<std::codecvt_utf8_utf16<char16_t>, char16_t> convert;
    std::string dest = convert.to_bytes(source);
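For reference, the two snippets can be wrapped into a self-contained round trip. This is only a minimal sketch: the helper names utf8_to_utf16 and utf16_to_utf8 are made up for illustration, and both std::wstring_convert and std::codecvt_utf8_utf16 are deprecated since C++17, so a compiler may warn about them.

```cpp
#include <codecvt>  // std::codecvt_utf8_utf16 (deprecated since C++17)
#include <locale>   // std::wstring_convert (deprecated since C++17)
#include <string>

// Hypothetical helper names, not part of the standard library.
// With a default-constructed converter, both calls throw std::range_error
// when the input cannot be converted.
std::u16string utf8_to_utf16(const std::string& utf8)
{
    std::wstring_convert<std::codecvt_utf8_utf16<char16_t>, char16_t> convert;
    return convert.from_bytes(utf8);
}

std::string utf16_to_utf8(const std::u16string& utf16)
{
    std::wstring_convert<std::codecvt_utf8_utf16<char16_t>, char16_t> convert;
    return convert.to_bytes(utf16);
}
```

The std::u16string holds native 16-bit code units, so byte order only becomes a question once those units are serialized to bytes.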

As seen from the other answers, there are multiple approaches to the problem. That's why I refrain from picking an accepted answer.

Solution 2

The problem definition explicitly states that the 8-bit character encoding is UTF-8. That makes this a trivial problem; all it requires is a little bit-twiddling to convert from one UTF spec to another.

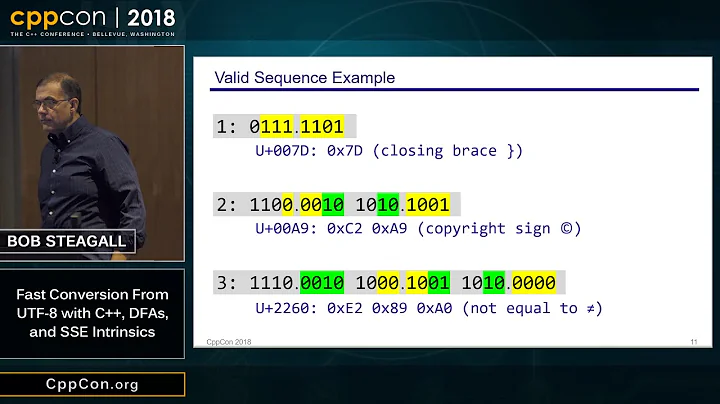

Just look at the encodings on these Wikipedia pages for UTF-8, UTF-16, and UTF-32.

The principle is simple - go through the input and assemble a 32-bit Unicode code point according to one UTF spec, then emit the code point according to the other spec. The individual code points need no translation, as would be required with any other character encoding; that's what makes this a simple problem.
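To make the two halves of that principle concrete, here is a small sketch that decodes one UTF-8 sequence into a code point and then emits that code point as UTF-16. The function names decode_first_utf8 and code_point_to_utf16 are hypothetical, and both assume well-formed input.

```cpp
#include <cstddef>
#include <string>

// Hypothetical helper: assemble the code point encoded by the first UTF-8
// sequence in s. Assumes s is non-empty and well formed.
char32_t decode_first_utf8(const std::string& s)
{
    const unsigned char lead = static_cast<unsigned char>(s[0]);
    const std::size_t len = lead < 0x80 ? 1 : lead < 0xE0 ? 2 : lead < 0xF0 ? 3 : 4;
    // Payload bits of the lead byte: 7, 5, 4 or 3 depending on the length.
    char32_t cp = (len == 1) ? lead : (lead & (0xFF >> (len + 1)));
    for (std::size_t i = 1; i < len; ++i)
        cp = (cp << 6) | (static_cast<unsigned char>(s[i]) & 0x3F);
    return cp;
}

// Hypothetical helper: emit one code point as UTF-16 code units.
// Assumes cp <= 0x10FFFF and that cp is not itself a surrogate.
std::u16string code_point_to_utf16(char32_t cp)
{
    std::u16string out;
    if (cp < 0x10000) {
        out.push_back(static_cast<char16_t>(cp));
    } else {
        cp -= 0x10000;  // 20 bits remain, split 10/10 across the pair
        out.push_back(static_cast<char16_t>(0xD800 + (cp >> 10)));
        out.push_back(static_cast<char16_t>(0xDC00 + (cp & 0x3FF)));
    }
    return out;
}
```

For example, the UTF-8 bytes F0 9F 98 80 decode to U+1F600, which is emitted as the surrogate pair D83D DE00.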

Here's a quick implementation of wchar_t to UTF-8 conversion and vice versa. It assumes that the input is already properly encoded - the old saying "Garbage in, garbage out" applies here. I believe that verifying the encoding is best done as a separate step.

    std::string wchar_to_UTF8(const wchar_t * in)
    {
        std::string out;
        unsigned int codepoint = 0;
        for (; *in != 0; ++in)
        {
            if (*in >= 0xd800 && *in <= 0xdbff)
                // High surrogate: keep the upper 10 bits, wait for the low half
                codepoint = ((*in - 0xd800) << 10) + 0x10000;
            else
            {
                if (*in >= 0xdc00 && *in <= 0xdfff)
                    // Low surrogate: fill in the lower 10 bits
                    codepoint |= *in - 0xdc00;
                else
                    codepoint = *in;

                if (codepoint <= 0x7f)
                    out.append(1, static_cast<char>(codepoint));
                else if (codepoint <= 0x7ff)
                {
                    out.append(1, static_cast<char>(0xc0 | ((codepoint >> 6) & 0x1f)));
                    out.append(1, static_cast<char>(0x80 | (codepoint & 0x3f)));
                }
                else if (codepoint <= 0xffff)
                {
                    out.append(1, static_cast<char>(0xe0 | ((codepoint >> 12) & 0x0f)));
                    out.append(1, static_cast<char>(0x80 | ((codepoint >> 6) & 0x3f)));
                    out.append(1, static_cast<char>(0x80 | (codepoint & 0x3f)));
                }
                else
                {
                    out.append(1, static_cast<char>(0xf0 | ((codepoint >> 18) & 0x07)));
                    out.append(1, static_cast<char>(0x80 | ((codepoint >> 12) & 0x3f)));
                    out.append(1, static_cast<char>(0x80 | ((codepoint >> 6) & 0x3f)));
                    out.append(1, static_cast<char>(0x80 | (codepoint & 0x3f)));
                }
                codepoint = 0;
            }
        }
        return out;
    }

The above code works for both UTF-16 and UTF-32 input, simply because the values in the range d800 through dfff are not valid code points; they indicate that you're decoding UTF-16. If you know that wchar_t is 32 bits, you could remove some code to optimize the function.
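As a rough illustration of what is left once the surrogate handling is removed, here is a sketch that takes input known to be one code point per element. It uses std::u32string rather than wchar_t so the 32-bit assumption is explicit; the name utf32_to_utf8 is hypothetical, and like the code above it performs no validation.

```cpp
#include <string>

// Hypothetical helper: every element of `in` is assumed to be a whole,
// valid code point, so no surrogate branches are needed.
std::string utf32_to_utf8(const std::u32string& in)
{
    std::string out;
    for (char32_t cp : in) {
        if (cp <= 0x7F) {
            out += static_cast<char>(cp);
        } else if (cp <= 0x7FF) {
            out += static_cast<char>(0xC0 | ((cp >> 6) & 0x1F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else if (cp <= 0xFFFF) {
            out += static_cast<char>(0xE0 | ((cp >> 12) & 0x0F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        } else {
            out += static_cast<char>(0xF0 | ((cp >> 18) & 0x07));
            out += static_cast<char>(0x80 | ((cp >> 12) & 0x3F));
            out += static_cast<char>(0x80 | ((cp >> 6) & 0x3F));
            out += static_cast<char>(0x80 | (cp & 0x3F));
        }
    }
    return out;
}
```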

    std::wstring UTF8_to_wchar(const char * in)
    {
        std::wstring out;
        unsigned int codepoint = 0;
        while (*in != 0)
        {
            unsigned char ch = static_cast<unsigned char>(*in);
            if (ch <= 0x7f)
                codepoint = ch;                             // single byte (ASCII)
            else if (ch <= 0xbf)
                codepoint = (codepoint << 6) | (ch & 0x3f); // continuation byte
            else if (ch <= 0xdf)
                codepoint = ch & 0x1f;                      // lead byte of 2
            else if (ch <= 0xef)
                codepoint = ch & 0x0f;                      // lead byte of 3
            else
                codepoint = ch & 0x07;                      // lead byte of 4
            ++in;
            // The code point is complete once the next byte is not a continuation
            if (((*in & 0xc0) != 0x80) && (codepoint <= 0x10ffff))
            {
                if (sizeof(wchar_t) > 2)
                    out.append(1, static_cast<wchar_t>(codepoint));
                else if (codepoint > 0xffff)
                {
                    // Surrogate pairs encode the offset from 0x10000
                    codepoint -= 0x10000;
                    out.append(1, static_cast<wchar_t>(0xd800 + (codepoint >> 10)));
                    out.append(1, static_cast<wchar_t>(0xdc00 + (codepoint & 0x03ff)));
                }
                else if (codepoint < 0xd800 || codepoint >= 0xe000)
                    out.append(1, static_cast<wchar_t>(codepoint));
            }
        }
        return out;
    }

Again, if you know that wchar_t is 32 bits, you could remove some code from this function, but in this case it shouldn't make any difference. The expression sizeof(wchar_t) > 2 is known at compile time, so any decent compiler will recognize the dead code and remove it.
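If you would rather state the compile-time choice explicitly than rely on the optimizer, C++17's if constexpr can express it. The following is only a sketch with a hypothetical helper name, append_code_point, and it assumes the code point has already been checked to be valid and outside the surrogate range.

```cpp
#include <string>

// Hypothetical helper: append one code point to a std::wstring, selecting
// UTF-32 or UTF-16 output at compile time. Requires C++17.
void append_code_point(std::wstring& out, char32_t cp)
{
    if constexpr (sizeof(wchar_t) > 2) {
        // wchar_t can hold any code point directly
        out.push_back(static_cast<wchar_t>(cp));
    } else {
        if (cp > 0xFFFF) {
            cp -= 0x10000;  // surrogate pairs encode the offset from 0x10000
            out.push_back(static_cast<wchar_t>(0xD800 + (cp >> 10)));
            out.push_back(static_cast<wchar_t>(0xDC00 + (cp & 0x3FF)));
        } else {
            out.push_back(static_cast<wchar_t>(cp));
        }
    }
}
```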

Solution 3

UTF8-CPP: UTF-8 with C++ in a Portable Way

Solution 4

You can extract utf8_codecvt_facet from the Boost serialization library.

Their usage example:

    typedef wchar_t ucs4_t;

    std::locale old_locale;
    std::locale utf8_locale(old_locale, new utf8_codecvt_facet<ucs4_t>);

    // Set a new global locale
    std::locale::global(utf8_locale);

    // Send the UCS-4 data out, converting to UTF-8
    {
        std::wofstream ofs("data.ucd");
        ofs.imbue(utf8_locale);
        std::copy(ucs4_data.begin(), ucs4_data.end(),
                  std::ostream_iterator<ucs4_t, ucs4_t>(ofs));
    }

    // Read the UTF-8 data back in, converting to UCS-4 on the way in
    std::vector<ucs4_t> from_file;
    {
        std::wifstream ifs("data.ucd");
        ifs.imbue(utf8_locale);
        ucs4_t item = 0;
        while (ifs >> item) from_file.push_back(item);
    }

Look for the utf8_codecvt_facet.hpp and utf8_codecvt_facet.cpp files in the Boost sources.
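The same facet-on-a-stream pattern can be sketched without Boost by using std::codecvt_utf8 from C++11 (deprecated since C++17). This is a swapped-in standard facet, not the Boost one, and the helper name write_utf8_file is hypothetical. The sketch imbues before opening the file, which sidesteps any question of whether a facet imbued on an already open stream is honoured.

```cpp
#include <codecvt>  // std::codecvt_utf8 (deprecated since C++17)
#include <fstream>
#include <locale>
#include <string>

// Hypothetical helper: write `text` to `path` encoded as UTF-8.
// Assumes wchar_t holds whole code points (true where wchar_t is 32 bits;
// with a 16-bit wchar_t, std::codecvt_utf8 only covers the UCS-2 range).
bool write_utf8_file(const std::string& path, const std::wstring& text)
{
    std::wofstream ofs;
    // The locale takes ownership of the facet allocated here.
    ofs.imbue(std::locale(ofs.getloc(), new std::codecvt_utf8<wchar_t>));
    ofs.open(path, std::ios::binary);
    if (!ofs)
        return false;
    ofs << text;
    ofs.close();  // flush so conversion errors surface before returning
    return !ofs.fail();
}
```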

Solution 5

There are several ways to do this, but the results depend on what the character encodings are in the string and wstring variables.

If you know the string is ASCII, you can simply use wstring's iterator constructor:

    string s = "This is surely ASCII.";
    wstring w(s.begin(), s.end());

If your string has some other encoding, however, you'll get very bad results. If the encoding is Unicode, you could take a look at the ICU project, which provides a cross-platform set of libraries that convert to and from all sorts of Unicode encodings.

If your string contains characters in a code page, then may $DEITY have mercy on your soul.
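Since the iterator-constructor trick is only safe for ASCII, one option is to check that assumption before widening. A minimal sketch, with a hypothetical helper name widen_ascii:

```cpp
#include <algorithm>
#include <stdexcept>
#include <string>

// Hypothetical helper: widen a string only if every byte is 7-bit ASCII,
// and throw otherwise instead of silently producing wrong characters.
std::wstring widen_ascii(const std::string& s)
{
    const bool ascii = std::all_of(s.begin(), s.end(), [](char c) {
        return static_cast<unsigned char>(c) < 0x80;
    });
    if (!ascii)
        throw std::invalid_argument("widen_ascii: input contains non-ASCII bytes");
    return std::wstring(s.begin(), s.end());
}
```

Throwing is just one possible policy; returning an empty string or an error code would work equally well.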


Vladimir Grigorov

Updated on July 05, 2022

Comments

-

Vladimir Grigorov almost 2 years

Is it possible to convert a UTF-8 string in a std::string to a std::wstring and vice versa in a platform-independent manner? In a Windows application I would use MultiByteToWideChar and WideCharToMultiByte. However, the code is compiled for multiple OSes and I'm limited to the standard C++ library.

-

C. K. Young over 15 years

Incidentally, the standard C++ library is not called STL; the STL is just a small subsection of the standard C++ library. In this case, I believe you are asking for functionality in the standard C++ library, and I've answered accordingly.

-

josesuero over 15 years

You haven't specified which encoding you want to end up with. wstring doesn't specify any particular encoding. Of course it'd be natural to convert to utf32 on platforms where wchar_t is 4 bytes wide, and utf16 if wchar_t is 2 bytes. Is that what you want?

-

kirelagin about 4 years

@jalf Your comment is misleading. std::wstring is std::basic_string<wchar_t>. wchar_t is an opaque data type that represents a Unicode character (the fact that on Windows it is 16 bits long only means that Windows does not follow the standard). There is no “encoding” for abstract Unicode characters; they are just characters.

-

Martin York over 15 years

ICU converts to/from every character encoding I have ever come across. It's huge.

-

Nemanja Trifunovic over 15 years

I don't see that he said anything about std::string containing UTF-8 encoded strings in the original question: "Is it possible to convert std::string to std::wstring and vice versa in a platform independent manner?"

-

Mark Ransom over 15 years

UTF-8 is specified in the title of the post. You are correct that it is missing from the body of the text.

-

Vladimir Grigorov over 15 years

Thank you for the correction, I did intend to use UTF8. I edited the question to be more clear.

-

moogs over 15 years

But "widechar" does not necessarily mean UTF16

-

Martin York over 15 years

I thought you had to imbue the stream before it is opened, otherwise the imbue is ignored!

-

Alex S over 15 years

Martin, it seems to work with Visual Studio 2005: 0x41a is successfully converted to the {0xd0, 0x9a} UTF-8 sequence.

-

Exectron almost 13 years

What you've got may be a good "proof of concept". It's one thing to convert valid encodings successfully. It is another level of effort to handle conversion of invalid encoding data (e.g. unpaired surrogates in UTF-16) correctly according to the specifications. For that you really need some more thoroughly designed and tested code.

-

Mark Ransom almost 13 years

@Craig McQueen, you're absolutely right. I made the assumption that the encoding was already correct, and it was just a mechanical conversion. I'm sure there are situations where that's the case, and this code would be adequate - but the limitations should be stated explicitly. It's not clear from the original question if this should be a concern or not.

-

Tyler Liu about 11 years

Doing encoding/decoding according to locale is a bad idea. Just as you said: "does not guarantee".

-

Tyler Liu about 11 years

The question says "UTF8", so "the encoding of its multibyte characters" is known.

-

Tyler Liu about 11 years

I have the same feeling as you. The question already states "UTF8", so it is an encoding/decoding issue. It has nothing to do with locale. Whoever mentioned locale in their answer didn't get the point at all.

-

Chawathe Vipul S about 11 years

wstring implies 2 or 4 bytes instead of single byte characters. Where's the question to switch from utf8 encoding?

-

Basilevs almost 10 years

@TylerLong obviously one should configure a std::locale instance specifically for the required conversion.

-

Xtra Coder over 9 years

I've got some strange poor performance with codecvt, look here for details: stackoverflow.com/questions/26196686/…

-

Tyler Liu over 9 years

@Basilevs I still think using locale to encode/decode is wrong. The correct way is to configure encoding instead of locale. As far as I can tell, there is no locale that can represent every single Unicode character. Let's say I want to encode a string which contains all of the Unicode characters; which locale do you suggest I configure? Correct me if I am wrong.

-

Basilevs over 9 years

@TylerLong Locale in C++ is a very abstract concept that covers far more than just regional settings and encodings. Basically one can do everything with it. While codecvt_facet indeed handles more than just simple recoding, absolutely nothing prevents it from making simple Unicode transformations.

-

Navin almost 9 years

I think you should accept this answer. Sure there are multiple ways to solve this, but this is the only portable solution that does not need a library.

-

thomthom over 8 years

Is this UTF-16 with LE or BE?

-

HojjatJafary almost 7 years

std::wstring_convert is deprecated in C++17.

-

Mark Ransom over 6 years

@moogs after all these years I just realized how close this was to working for both UTF-16 and UTF-32 wchar_t. I've updated the answer.

-

jakar about 4 years

@HojjatJafary, what is the replacement?

-

kirelagin about 4 years

What do you mean by “It assumes that the input is already properly encoded”? Your input is made of wchar_t, which is an opaque data type that represents a Unicode character. You are not allowed to make any assumptions about its internal representation; the only thing you can do is call provided library functions on it, like wctomb, which will encode the character using the current system locale encoding.

-

kirelagin about 4 years

@HojjatJafary None :). codecvt_utf8_utf16 is deprecated too, by the way (and, no, there is no replacement either).

-

Mark Ransom about 4 years

@kirelagin it's my code, I'm allowed to make any assumptions I want. I added that statement to make it clear that there wasn't any error checking in the code, and if you fed it invalid input I couldn't guarantee the correctness of the result. By "invalid input" I mean for example a code point greater than 0x10ffff.

-

kirelagin about 4 years

@MarkRansom The author of the question is asking for “a platform independent manner”. Your manner is not only not platform independent, it is not even independent of the standard library implementation on a single platform. You cannot make any assumptions about the numbers in a variable of type wchar_t; any error checking you can add will be incorrect and will be triggered by valid inputs on some platforms/implementations (potentially).

-

Mark Ransom about 4 years

@kirelagin which is exactly why I suggest in the answer that conversion and validation should be two separate operations. If you want to assert that my code is incorrect you'll have to be more specific about the conditions under which it would be incorrect. The only assumption I make about wchar_t is that it holds a range of integers appropriate for the platform on which it is compiled.

-

kirelagin about 4 years

@MarkRansom I’m sorry, I just got very confused by your statement that “The above code works for both UTF-16 and UTF-32 input”, because the input is wchar_ts, which are abstract code points (according to the C standard), and the concept of encoding (UTF-16 or UTF-32) does not apply to them in any meaningful way. I see what you meant now: basically, this code works both with wchar_t that represent all of Unicode and platforms like Windows that hack wchar_t for their purposes.

-

Mark Ransom about 4 years

@kirelagin wchar_t was intended to hold code points, but that's not how it worked out in practice. As a concrete example, when Windows first got Unicode all the code points fit into 16 bits, so wchar_t was made a 16-bit integer. Later, when Unicode was extended, they were forced to use UTF-16 encoding to make it work, and that's what Windows uses to this day with wchar_t still 16 bits. Don't look down on Windows; their problems stem from being an early adopter and they aren't alone.

-

John Thoits over 3 years

And the include you will want to use for this answer is #include <codecvt>
