Volume claim on GKE / Multi-Attach error for volume Volume is already exclusively attached

Solution 1

Based on your description, what you are experiencing is exactly what is supposed to happen.

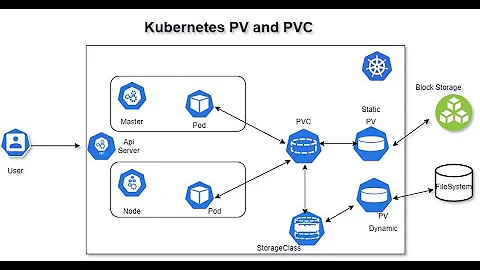

You are using gcePersistentDisk in your PV/PVC definition. The accessMode is ReadWriteOnce, which means that this PV can only be attached to a single Node at a time (the emphasis is on Node: multiple Pods running on the same Node can use the same PV). There is not much you can do about this; a gcePersistentDisk behaves like a remote block device, so it cannot be mounted on multiple Nodes simultaneously (unless it is read-only).

The Kubernetes documentation on persistent volumes has a nice table that shows which PV types support ReadWriteMany (that is, write access on multiple Nodes at the same time). It also includes this note:

Important! A volume can only be mounted using one access mode at a time, even if it supports many. For example, a GCEPersistentDisk can be mounted as ReadWriteOnce by a single node or ReadOnlyMany by many nodes, but not at the same time.
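As an illustration of the read-only case, here is a minimal sketch of a PV/PVC pair that exposes an existing disk as ReadOnlyMany, so Pods on several Nodes can mount it at once. The names shared-data-ro, shared-data-ro-claim, and my-data-disk and the 10Gi size are hypothetical placeholders, and the in-tree gcePersistentDisk source is used only because the question uses it; newer clusters typically go through the Compute Engine persistent disk CSI driver instead.

```yaml
# Sketch only: names, disk, and size are assumptions, not taken from the question.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: shared-data-ro            # hypothetical PV name
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadOnlyMany                # many Nodes may attach, but nobody may write
  persistentVolumeReclaimPolicy: Retain
  gcePersistentDisk:
    pdName: my-data-disk          # hypothetical, pre-created and pre-formatted disk
    fsType: ext4
    readOnly: true
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-data-ro-claim      # hypothetical claim name
spec:
  accessModes:
    - ReadOnlyMany
  storageClassName: ""            # empty string disables dynamic provisioning
  volumeName: shared-data-ro      # bind to the PV defined above
  resources:
    requests:
      storage: 10Gi
```

The disk has to be populated beforehand, since no Pod can write to it while it is mounted in this mode.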

Solution 2

Your Deployment YAML shows 5 replicas, which will not work with a GCE PD in ReadWriteOnce mode as soon as the replicas are scheduled onto more than one node. A GCE PD can only be attached to multiple nodes in ReadOnlyMany mode.

If you need shared, writable storage across all replicas, then you should look into a multi-writer solution like NFS or Gluster.
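A rough sketch of what the multi-writer option could look like with NFS follows. It assumes an NFS server already exists and is reachable from the cluster; the server address nfs.example.internal, the export path /exports/shared, the object names, and the size are all hypothetical.

```yaml
# Sketch only: server, path, names, and size are assumptions.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: shared-nfs                # hypothetical PV name
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteMany               # writable from Pods on any number of Nodes
  nfs:
    server: nfs.example.internal  # hypothetical NFS server address
    path: /exports/shared         # hypothetical export path
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: shared-nfs-claim          # hypothetical claim name
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ""            # empty string disables dynamic provisioning
  volumeName: shared-nfs          # bind to the PV defined above
  resources:
    requests:
      storage: 10Gi
```

All replicas of the Deployment can then reference shared-nfs-claim, regardless of which node they land on.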

If you want each replica to have its own disk, then you can use StatefulSets, which will have a PVC per replica.
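The per-replica option can be sketched with volumeClaimTemplates, which make the StatefulSet controller create one PVC for each replica (data-web-0, data-web-1, and so on). The name web, the image, the mount path, and the size are hypothetical, and the sketch assumes the cluster has a default StorageClass that provisions the disks.

```yaml
# Sketch only: names, image, mount path, and size are assumptions.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web                       # hypothetical name
spec:
  serviceName: web                # headless Service expected to exist under this name
  replicas: 5
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25       # hypothetical image
          volumeMounts:
            - name: data
              mountPath: /data    # hypothetical mount path
  volumeClaimTemplates:
    - metadata:
        name: data                # yields PVCs data-web-0 ... data-web-4
      spec:
        accessModes:
          - ReadWriteOnce         # each disk is attached to exactly one Node
        resources:
          requests:
            storage: 10Gi
```

Because every replica owns its disk, ReadWriteOnce is sufficient and the Multi-Attach conflict does not arise, but the replicas no longer share data.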

Comments

-

Ben almost 2 years

The problem seems to have been solved a long time ago, but since the answer and the comments do not provide real solutions, I would like to get some help from experienced users.

The error is the following (when describing the pod, which stays in the ContainerCreating state):

Multi-Attach error for volume "pvc-xxx" Volume is already exclusively attached to one node and can't be attached to another

This all runs on GKE. I had a previous cluster, and the problem never occurred. I have reused the same disk when creating this new cluster; not sure if it is related.

Here are the full YAML config files (I have commented out the relevant part to highlight it; it is not commented out in actual use).

Thanks in advance if there are obvious workarounds.

-

Janos Lenart over 6 years

Is it actually attached to any Pods? Can you please show how you are using this PVC, and how you created it? Please include more information so we can help.

Ben over 6 years

@JanosLenart I added the full config. The cluster has two nodes. Let's say I had two replicas. It seems that the disk is locked to one of the two nodes, and if one of the pods goes to the other node, the described error appears; I'm just not sure where to look. At the same time, this is the issue that seems to have been solved long ago, so I am wondering whether my cluster is broken somewhere. I formatted the disk as advised by Google and used it without problems on the previous cluster, with the very same PV/PVC/Deployment config.

-

Ben over 6 years

OK, it makes 100% sense now. I missed the point; I just did not set replicas on the previous one.

-

ChrHansen over 6 years

I'm having the same error, but I don't really understand how to determine which node the PVC is bound to and how I "move" it to the pod that needs the PVC (a pod I have pinned to one node by label). I used helm install stable/jenkins, and have deleted that deployment and re-run helm install a few times. It's probably dead simple once I understand "who" or what has laid the claim.

Ben over 6 years

Coming back to this one: would it be strongly advised to use nodeSelector on your pods/deployments, so that they land on the related node? Also, what happens when you eventually change the node? You would probably need to attach the disk manually. It feels like using a disk is harder than it looks at first, and far from being "automated". Taking this example (cloud.google.com/kubernetes-engine/docs/tutorials/…), which is more or less for beginners, they just don't mention those two very likely issues; it kind of feels misleading.

-

jaksky about 6 years

Is there a way to detach the disk and move it?
