wget - How to download recursively and only specific mime-types/extensions (i.e. text only)

Solution 1

I tried a totally different approach, using Scrapy, but it has the same problem. Here's how I solved it (see the Stack Overflow question "Python Scrapy - mimetype based filter to avoid non-text file downloads?"):

The solution is to set up a Node.js proxy and configure Scrapy to use it through the `http_proxy` environment variable.

What the proxy should do is:

- Take HTTP requests from Scrapy and send them to the server being crawled, then pass the server's response back to Scrapy, i.e. intercept all HTTP traffic.

- For binary files (based on a heuristic you implement), send a `403 Forbidden` error to Scrapy and immediately close the request/response. This saves time and traffic, and Scrapy won't crash.

Sample proxy code that actually works:

    var http = require('http');

    http.createServer(function(clientReq, clientRes) {
        // Forward the incoming request to the server named in the Host header.
        var options = {
            host: clientReq.headers['host'],
            port: 80,
            path: clientReq.url,
            method: clientReq.method,
            headers: clientReq.headers
        };

        var proxyReq = http.request(options, function(proxyRes) {
            var contentType = proxyRes.headers['content-type'] || '';

            // Heuristic: anything that is not text/* is treated as binary.
            if (!contentType.startsWith('text/')) {
                proxyRes.destroy();
                var httpForbidden = 403;
                clientRes.writeHead(httpForbidden);
                clientRes.write('Binary download is disabled.');
                clientRes.end();
                return; // stop here so a second response is not written
            }

            // Text response: relay status, headers and body unchanged.
            clientRes.writeHead(proxyRes.statusCode, proxyRes.headers);
            proxyRes.pipe(clientRes);
        });

        proxyReq.on('error', function(e) {
            console.log('problem with proxied request: ' + e.message);
        });

        proxyReq.end();
    }).listen(8080);
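The `text/` prefix check in the proxy is only one possible heuristic. As a hedged sketch, the check could be pulled into a helper that also lets through a few textual `application/*` types. The function name `isTextContentType` and the allow-list are assumptions made for illustration; they are not part of the original answer.

```javascript
// Hypothetical helper: decide whether a Content-Type header value looks textual.
// The allow-list is an assumption; adjust it to the sites you crawl.
var TEXTUAL_APPLICATION_TYPES = [
    'application/json',
    'application/xml',
    'application/xhtml+xml',
    'application/javascript'
];

function isTextContentType(headerValue) {
    // Strip parameters such as "; charset=utf-8" and normalise case.
    var mimeType = String(headerValue || '').split(';')[0].trim().toLowerCase();
    if (mimeType === '') {
        return false; // no Content-Type header: treat as binary, like the proxy above
    }
    return mimeType.startsWith('text/') ||
        TEXTUAL_APPLICATION_TYPES.indexOf(mimeType) !== -1;
}

console.log(isTextContentType('text/html; charset=utf-8'));
console.log(isTextContentType('image/png'));
```

Inside the proxy, the condition `!contentType.startsWith('text/')` could then be replaced by `!isTextContentType(contentType)`.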

Solution 2

You could specify a list of allowed or disallowed filename patterns:

Allowed:

    -A LIST
    --accept LIST

Disallowed:

    -R LIST
    --reject LIST

LIST is a comma-separated list of filename patterns/extensions.

You can use the following reserved characters to specify patterns:

*?[]

Examples:

- only download PNG files: `-A png`

- don't download CSS files: `-R css`

- don't download PNG files that start with "avatar": `-R 'avatar*.png'` (quoted so that the shell does not expand the pattern itself)

If the file has no extension, or the file name has no pattern you could make use of, you'd need MIME type parsing, I guess (see Lars Kotthoff's answer).
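To make the matching rules concrete, here is a rough JavaScript model of how an accept/reject LIST could be applied to a file name. It is a sketch under stated assumptions, not wget's actual code: entries containing `*` or `?` are treated as patterns and all other entries as plain suffixes, bracket expressions (`[]`) are left out for brevity, and the names `globToRegExp`, `matchesEntry`, `parseList` and `shouldDownload` are made up for this example.

```javascript
// Convert a simple shell-style pattern (* and ? only) into an anchored RegExp.
function globToRegExp(pattern) {
    var source = pattern
        .replace(/[.+^${}()|[\]\\]/g, '\\$&') // escape regex metacharacters
        .replace(/\*/g, '.*')                  // * matches any run of characters
        .replace(/\?/g, '.');                  // ? matches exactly one character
    return new RegExp('^' + source + '$');
}

// Assumption: wildcard entries are patterns, everything else is a suffix.
function matchesEntry(fileName, entry) {
    if (/[*?]/.test(entry)) {
        return globToRegExp(entry).test(fileName);
    }
    return fileName.endsWith(entry);
}

// Split a comma-separated LIST into its non-empty entries.
function parseList(list) {
    return list ? list.split(',').filter(function (s) { return s !== ''; }) : [];
}

// A file is kept if it matches the accept list (when one is given)
// and does not match the reject list.
function shouldDownload(fileName, acceptList, rejectList) {
    var accepts = parseList(acceptList);
    var rejects = parseList(rejectList);
    var matchesAny = function (entries) {
        return entries.some(function (entry) { return matchesEntry(fileName, entry); });
    };
    if (accepts.length > 0 && !matchesAny(accepts)) return false;
    if (rejects.length > 0 && matchesAny(rejects)) return false;
    return true;
}

console.log(shouldDownload('logo.png', 'png', ''));              // true
console.log(shouldDownload('style.css', '', 'css'));             // false
console.log(shouldDownload('avatar_42.png', '', 'avatar*.png')); // false
console.log(shouldDownload('photo.png', '', 'avatar*.png'));     // true
```

The model only looks at file names, which is exactly the limitation mentioned above: a URL without a usable extension cannot be classified this way.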

Solution 3

You could try patching wget with this (also here) to filter by MIME type. This patch is quite old now though, so it might not work anymore.

Solution 4

The new Wget (Wget2) already has this feature:

    --filter-mime-type    Specify a list of mime types to be saved or ignored

### `--filter-mime-type=list`

Specify a comma-separated list of MIME types that will be downloaded. Elements of list may contain wildcards. If a MIME type starts with the character '!' it won't be downloaded, this is useful when trying to download something with exceptions. For example, download everything except images:

    wget2 -r https://<site>/<document> --filter-mime-type=*,\!image/*

It is also useful to download files that are compatible with an application of your system. For instance, download every file that is compatible with LibreOffice Writer from a website using the recursive mode:

    wget2 -r https://<site>/<document> --filter-mime-type=$(sed -r '/^MimeType=/!d;s/^MimeType=//;s/;/,/g' /usr/share/applications/libreoffice-writer.desktop)
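The `sed` expression in that command keeps only the `MimeType=` line of the desktop entry, strips the key, and turns the semicolons into commas. As an illustration only, the same transformation can be sketched in JavaScript; the function name `mimeTypesFromDesktopEntry` and the sample desktop entry are assumptions made up for this example, not the contents of the real LibreOffice file.

```javascript
// Mirror of the sed expression: drop every line that does not start with
// "MimeType=", remove that prefix, and replace each ';' with ','.
function mimeTypesFromDesktopEntry(text) {
    return text.split('\n')
        .filter(function (line) { return line.startsWith('MimeType='); })
        .map(function (line) {
            return line.slice('MimeType='.length).replace(/;/g, ',');
        })
        .join('\n');
}

// Hypothetical, shortened desktop entry used only as sample input.
var sample = [
    '[Desktop Entry]',
    'Name=Example Writer',
    'MimeType=application/vnd.oasis.opendocument.text;application/msword;',
    ''
].join('\n');

console.log(mimeTypesFromDesktopEntry(sample));
// prints: application/vnd.oasis.opendocument.text,application/msword,
```

Like the `sed` version, this keeps the trailing comma that comes from the final semicolon of the `MimeType=` line.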

Wget2 has not been released as of today, but will be soon. Debian unstable already has an alpha version shipped.

Look at https://gitlab.com/gnuwget/wget2 for more info. You can post questions/comments directly to [email protected].

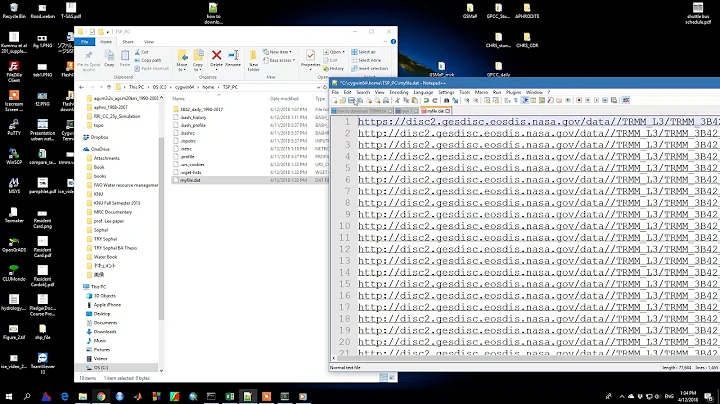

Related videos on Youtube

Omar Al-Ithawi


Updated on September 18, 2022

Comments

- Omar Al-Ithawi almost 2 years

  How to download a full website, but ignoring all binary files.

  `wget` has this functionality using the `-r` flag, but it downloads everything, and some websites are just too much for a low-resources machine. Downloading everything is also of no use for the specific reason I'm downloading the site.

  Here is the command line I use (my own blog):

      wget -P 20 -r -l 0 http://www.omardo.com/blog

- James Andino over 11 years

  Giving this a shot... ftp.gnu.org/gnu/wget I rolled the dice on just patching the newest version of wget with this, but no luck (of course). I would try to update the patch, but I frankly don't have the chops yet in C++ for it to not be a time sink. I did manage to grab the version of wget it was written for and get that running. I had trouble compiling with SSL support, though, because I could not figure out what version of OpenSSL I needed to grab.


- David Portabella almost 8 years

  This looks great. Any idea why this patch has not yet been accepted (four years later)?