What's the fastest algorithm for sorting a linked list?

Solution 1

It is reasonable to expect that you cannot do any better than O(N log N) in running time.

However, the interesting part is to investigate whether you can sort it in-place, stably, its worst-case behavior and so on.

Simon Tatham, of Putty fame, explains how to sort a linked list with merge sort. He concludes with the following comments:

Like any self-respecting sort algorithm, this has running time O(N log N). Because this is Mergesort, the worst-case running time is still O(N log N); there are no pathological cases.

Auxiliary storage requirement is small and constant (i.e. a few variables within the sorting routine). Thanks to the inherently different behaviour of linked lists from arrays, this Mergesort implementation avoids the O(N) auxiliary storage cost normally associated with the algorithm.

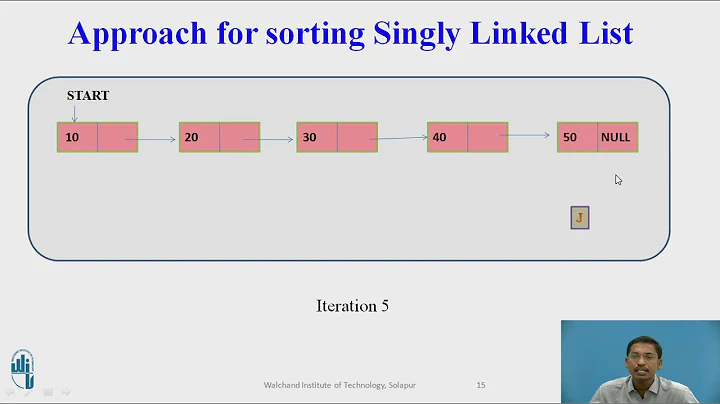

There is also an example implementation in C that work for both singly and doubly linked lists.

As @Jørgen Fogh mentions below, big-O notation may hide some constant factors that can cause one algorithm to perform better because of memory locality, because of a low number of items, etc.

Solution 2

Depending on a number of factors, it may actually be faster to copy the list to an array and then use a Quicksort.

The reason this might be faster is that an array has much better cache performance than a linked list. If the nodes in the list are dispersed in memory, you may be generating cache misses all over the place. Then again, if the array is large you will get cache misses anyway.

Mergesort parallelises better, so it may be a better choice if that is what you want. It is also much faster if you perform it directly on the linked list.

Since both algorithms run in O(n * log n), making an informed decision would involve profiling them both on the machine you would like to run them on.

--- EDIT

I decided to test my hypothesis and wrote a C-program which measured the time (using clock()) taken to sort a linked list of ints. I tried with a linked list where each node was allocated with malloc() and a linked list where the nodes were laid out linearly in an array, so the cache performance would be better. I compared these with the built-in qsort, which included copying everything from a fragmented list to an array and copying the result back again. Each algorithm was run on the same 10 data sets and the results were averaged.

These are the results:

N = 1000:

Fragmented list with merge sort: 0.000000 seconds

Array with qsort: 0.000000 seconds

Packed list with merge sort: 0.000000 seconds

N = 100000:

Fragmented list with merge sort: 0.039000 seconds

Array with qsort: 0.025000 seconds

Packed list with merge sort: 0.009000 seconds

N = 1000000:

Fragmented list with merge sort: 1.162000 seconds

Array with qsort: 0.420000 seconds

Packed list with merge sort: 0.112000 seconds

N = 100000000:

Fragmented list with merge sort: 364.797000 seconds

Array with qsort: 61.166000 seconds

Packed list with merge sort: 16.525000 seconds

Conclusion:

At least on my machine, copying into an array is well worth it to improve the cache performance, since you rarely have a completely packed linked list in real life. It should be noted that my machine has a 2.8GHz Phenom II, but only 0.6GHz RAM, so the cache is very important.

Solution 3

This is a nice little paper on this topic. His empirical conclusion is that Treesort is best, followed by Quicksort and Mergesort. Sediment sort, bubble sort, selection sort perform very badly.

A COMPARATIVE STUDY OF LINKED LIST SORTING ALGORITHMS by Ching-Kuang Shene

http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.31.9981

Solution 4

Comparison sorts (i.e. ones based on comparing elements) cannot possibly be faster than n log n. It doesn't matter what the underlying data structure is. See Wikipedia.

Other kinds of sort that take advantage of there being lots of identical elements in the list (such as the counting sort), or some expected distribution of elements in the list, are faster, though I can't think of any that work particularly well on a linked list.

Solution 5

As stated many times, the lower bound on comparison based sorting for general data is going to be O(n log n). To briefly resummarize these arguments, there are n! different ways a list can be sorted. Any sort of comparison tree that has n! (which is in O(n^n)) possible final sorts is going to need at least log(n!) as its height: this gives you a O(log(n^n)) lower bound, which is O(n log n).

So, for general data on a linked list, the best possible sort that will work on any data that can compare two objects is going to be O(n log n). However, if you have a more limited domain of things to work in, you can improve the time it takes (at least proportional to n). For instance, if you are working with integers no larger than some value, you could use Counting Sort or Radix Sort, as these use the specific objects you're sorting to reduce the complexity with proportion to n. Be careful, though, these add some other things to the complexity that you may not consider (for instance, Counting Sort and Radix sort both add in factors that are based on the size of the numbers you're sorting, O(n+k) where k is the size of largest number for Counting Sort, for instance).

Also, if you happen to have objects that have a perfect hash (or at least a hash that maps all values differently), you could try using a counting or radix sort on their hash functions.

Related videos on Youtube

Comments

-

Dirk almost 2 years

I'm curious if O(n log n) is the best a linked list can do.

-

MAK over 14 yearsJust so that you know, O(nlogn) is the bound for comparison based sorts. There are non-comparison based sorts than can give O(n) performance (e.g. counting sort), but they require additional constraints on the data.

-

Abhijit Sarkar over 3 yearsThose were the days when questions unlike "why this code doesn't work?????" were acceptable on SO.

Abhijit Sarkar over 3 yearsThose were the days when questions unlike "why this code doesn't work?????" were acceptable on SO.

-

-

Dominic Rodger over 14 yearsSee Simon Tatham's chiark.greenend.org.uk/~sgtatham/algorithms/listsort.html

-

Artelius over 14 yearsIt's been proven that no comparison-based sort algorthms exist that are faster than n log n.

-

Pete Kirkham over 14 yearsNo, it's been proven that no comparison-based sort algorithms on general data are faster than n log n

-

csl over 14 yearsIt would be a better answer if you would clarify why.

-

csl over 14 yearsGood comments, but you should consider the non-constant cost of copying the data from a list to an array (you'd have to traverse the list), as well as the worst case running time for quicksort.

-

Dean J over 14 yearsO(n * log n) is theoretically the same as O(n * log n + n), which would be including the cost of the copy. For any sufficiently large n, the cost of the copy really shouldn't matter; traversing a list once to the end should be n time.

-

DivineWolfwood over 14 yearsIt's not a hack algorithm, and it doesn't run in O(n). It runs in O(cn), where c is the largest value you're sorting (well, really it's the difference between the highest and lowest values) and only works on integral values. There's a difference between O(n) and O(cn), as unless you can give a definitive upper bound for the values you're sorting (and thus bound it by a constant), you have two factors complicating the complexity.

-

bdonlan over 14 yearsexpected

O(lg N)search time - but not guaranteed, as skip lists rely on randomness. If you are receiving untrusted input, be sure the supplier of the input cannot predict your RNG, or they could send you data that triggers its worst case performance -

bdonlan over 14 yearsNo, any sort algorithm faster than

O(n lg n)would not be comparison-based (eg, radix sort). By definition, comparison sort applies to any domain that has a total order (ie, can be compared). -

bdonlan over 14 yearsStrictly speaking, it runs in

O(n lg c). If all of your elements are unique, thenc >= n, and therefore it takes longer thanO(n lg n). -

Pete Kirkham over 14 years@bdonlan the point of "general data" is that there are algorithms which are faster for constrained input, rather than random input. At the limiting case, you can write a trivial O(1) algorithm which sorts a list given the input data is constrained to be already sorted

-

csl over 14 years@DeanJ: Theoretically, yes, but remember that the original poster is putting forth the case where micro-optimizations matter. And in that case, the time spent turning a linked-list into an array has to be considered. The comments are insightful, but I'm not completely convinced it would provide performance gain in reality. It might work for a very small N, perhaps.

-

Steve Jessop over 14 years@csl: Actually, I'd expect the benefits of locality to kick in for large N. Assuming that cache misses are the dominant performance effect, then the copy-qsort-copy approach results in about 2*N cache misses for the copying, plus the number of misses for the qsort, which will be a small fraction of Nlog(N) (since most accesses in qsort are to an element close to a recently-accessed element). The number of misses for the merge sort is a larger fraction of Nlog(N), since a higher proportion of comparisons cause a cache miss. So for large N, this term dominates and slows down mergesort.

-

Steve Jessop over 14 yearsAs against this, mergesort is stable and quicksort isn't. Plus you need to conditionally bail out of quicksort to avoid O(N^2) worst-case. So it may not always be a drop-in replacement, since "faster but gives a different answer" sometimes isn't acceptable. But Jørgen's results certainly show a marked benefit with a decent-sized N.

-

Steve Jessop over 14 yearsAnd that would not be a comparison-based sort. The modifier "on general data" is redundant, since comparison sorts already handle general data (and the big-O notation is for the number of comparisons made).

-

Jørgen Fogh over 14 years@Steve: You are right that qsort is not a drop-in replacement, but my point is not really about qsort vs. mergesort. I just didn't feel like writing another version of the mergesort when qsort was readily available. The standard library is way more convenient than rolling your own.

-

L.E. about 11 yearsThis is not for single linked list. His C code is using *prev and *next.

-

csl over 10 years@L.E. It's actually for both. If you see the signature for

listsort, you'll see you can switch by using the parameterint is_double. -

jfs over 10 years@L.E.: here's a Python version of the

listsortC code that supports only singly-linked lists -

LoveToCode over 8 yearsCan you please explain more on this topic or give any resource link for radix sort in linked list.

LoveToCode over 8 yearsCan you please explain more on this topic or give any resource link for radix sort in linked list. -

greybeard over 8 yearsHaving run creation perform well with "reversed input" is a nice touch.

O(log m)additional memory should not be needed - just add runs to two list alternately until one is empty. -

Richard over 7 yearsThis is an exceptionally thorough answer: thanks! Question, though: how did you generate the fragmented vs. packed lists?

Richard over 7 yearsThis is an exceptionally thorough answer: thanks! Question, though: how did you generate the fragmented vs. packed lists? -

Jørgen Fogh over 7 yearsEach node in the fragmented list was allocated with whichever version of

malloc()came with GLibc on Linux when I ran the test. I just allocated them inside a loop, so the memory is likely to have been less fragmented than it would have been in practice. The packed list was stored in a single contiguous piece of memory where each node originally pointed to the one immediately succeeding it. This means that the entire linked list could be traversed in its original order in a single linear scan. -

Adam almost 7 yearsO(kn) is theoretically linear, and can be achieved with bucket sort. Assuming a reasonable k (number of bits /size of object you are sorting), it might be a bit faster

Adam almost 7 yearsO(kn) is theoretically linear, and can be achieved with bucket sort. Assuming a reasonable k (number of bits /size of object you are sorting), it might be a bit faster -

rcgldr almost 6 yearsThe packed list with merge sort seems unusually quick, is there a chance that the packed list merge sort was operating on an already sorted list?

rcgldr almost 6 yearsThe packed list with merge sort seems unusually quick, is there a chance that the packed list merge sort was operating on an already sorted list? -

Jørgen Fogh almost 6 yearsI am fairly confident that it was not operating on a sorted list, although it is hard to be 100% certain after so many years and without access to the source code. I would probably not even have performed this experiment today, since there could be a myriad reasons why one implementation might be faster than another, regardless of which algorithm is actually "better".

-

greybeard about 5 yearsWhat does this add over Jørgen Fogh's answer?

![[Linked List] - Sắp xếp trong danh sách liên kết đơn bằng Quick Sort.](https://i.ytimg.com/vi/o1C0hLaZiIg/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLAs2QJw47Sz6L_UTrOkXk-vlShs_A)