What are Kernel PMU event-s in perf_events list?

Googling and ack-ing is over! I've got some answer.

But firstly let me clarify the aim of the question a little more: I want clearly distinguish independent processes in the system and their performance counters. For instance, a core of a processor, an uncore device (learned about it recently), kernel or user application on the processor, a bus (= bus controller), a hard drive are all independent processes, they are not synchronized by a clock. And nowadays probably all of them have some Process Monitoring Counter (PMC). I'd like to understand which processes the counters come from. (It is also helpful in googling: the "vendor" of a thing zeros it better.)

Also, the gear used for the search: Ubuntu 14.04, linux 3.13.0-103-generic, processor Intel(R) Core(TM) i5-3317U CPU @ 1.70GHz (from /proc/cpuinfo, it has 2 physical cores and 4 virtual -- the physical matter here).

Terminology, things the question involves

From Intel:

processor is a

coredevice (it's 1 device/process) and a bunch ofuncoredevices,coreis what runs the program (clock, ALU, registers etc),uncoreare devices put on die, close to the processor for speed and low latency (the real reason is "because the manufacturer can do it"); as I understood it is basically the Northbridge, like on PC motherboard, plus caches; and AMD actually calls these devices NorthBridgeinstead ofuncore`;-

uboxwhich shows up in mysysfs$ find /sys/devices/ -type d -name events /sys/devices/cpu/events /sys/devices/uncore_cbox_0/events /sys/devices/uncore_cbox_1/events-- is an

uncoredevice, which manages Last Level Cache (LLC, the last one before hitting RAM); I have 2 cores, thus 2 LLC and 2ubox; -

Processor Monitoring Unit (PMU) is a separate device which monitors operations of a processor and records them in Processor Monitoring Counter (PMC) (counts cache misses, processor cycles etc); they exist on

coreanduncoredevices; thecoreones are accessed withrdpmc(read PMC) instruction; theuncore, since these devices depend on actual processor at hand, are accessed via Model Specific Registers (MSR) viardmsr(naturally);apparently, the workflow with them is done via pairs of registers -- 1 register sets which events the counter counts, 2 register is the value in the counter; the counter can be configured to increment after a bunch of events, not just 1; + there are some interupts/tech noticing overflows in these counters;

-

more one can find in Intel's "IA-32 Software Developer's Manual Vol 3B" chapter 18 "PERFORMANCE MONITORING";

also, the MSR's format concretely for these

uncorePMCs for version "Architectural Performance Monitoring Version 1" (there are versions 1-4 in the manual, I don't know which one is my processor) is described in "Figure 18-1. Layout of IA32_PERFEVTSELx MSRs" (page 18-3 in mine), and section "18.2.1.2 Pre-defined Architectural Performance Events" with "Table 18-1. UMask and Event Select Encodings for Pre-Defined Architectural Performance Events", which shows the events which show up asHardware eventinperf list.

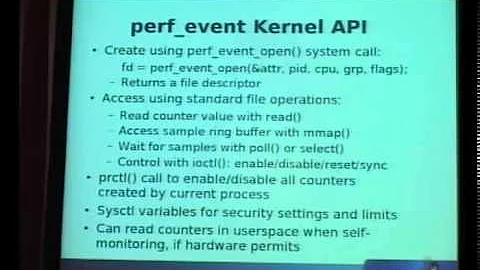

From linux kernel:

-

kernel has a system (abstraction/layer) for managing performance counters of different origin, both software (kernel's) and hardware, it is described in

linux-source-3.13.0/tools/perf/design.txt; an event in this system is defined asstruct perf_event_attr(filelinux-source-3.13.0/include/uapi/linux/perf_event.h), the main part of which is probably__u64 configfield -- it can hold both a CPU-specific event definition (the 64bit word in the format described on those Intel's figures) or a kernel's eventThe MSB of the config word signifies if the rest contains [raw CPU's or kernel's event]

the kernel's event defined with 7 bits for type and 56 for event's identifier, which are

enum-s in the code, which in my case are:$ ak PERF_TYPE linux-source-3.13.0/include/ ... linux-source-3.13.0/include/uapi/linux/perf_event.h 29: PERF_TYPE_HARDWARE = 0, 30: PERF_TYPE_SOFTWARE = 1, 31: PERF_TYPE_TRACEPOINT = 2, 32: PERF_TYPE_HW_CACHE = 3, 33: PERF_TYPE_RAW = 4, 34: PERF_TYPE_BREAKPOINT = 5, 36: PERF_TYPE_MAX, /* non-ABI */(

akis my alias toack-grep, which is the name forackon Debian; andackis awesome);in the source code of kernel one can see operations like "register all PMUs dicovered on the system" and structure types

struct pmu, which are passed to something likeint perf_pmu_register(struct pmu *pmu, const char *name, int type)-- thus, one could just call this system "kernel's PMU", which would be an aggregation of all PMUs on the system; but this name could be interpreted as monitoring system of kernel's operations, which would be misleading;let's call this subsystem

perf_eventsfor clarity; as any kernel subsystem, this subsystem can be exported into

sysfs(which is made to export kernel subsystems for people to use); and that's what are thoseeventsdirectories in my/sys/-- the exported (parts of?)perf_eventssubsystem;also, the user-space utility

perf(built into linux) is still a separate program and has its' own abstractions; it represents an event requested for monitoring by user asperf_evsel(fileslinux-source-3.13.0/tools/perf/util/evsel.{h,c}) -- this structure has a fieldstruct perf_event_attr attr;, but also a field likestruct cpu_map *cpus;that's howperfutility assigns an event to all or particular CPUs.

Answer

-

Indeed,

Hardware cache eventare "shortcuts" to the events of the cache devices (uboxof Intel'suncoredevices), which are processor-specific, and can be accessed via the protocolRaw hardware event descriptor. AndHardware eventare more stable within architecture, which, as I understand, name the events from thecoredevice. There no other "shortcuts" in my kernel3.13to some otheruncoreevents and counters. All the rest --SoftwareandTracepoints-- are kernel's events.I wonder if the

core'sHardware events are accessed via the sameRaw hardware event descriptorprotocol. They might not -- since the counter/PMU sits oncore, maybe it is accessed differently. For instance, with thatrdpmuinstruction, instead ofrdmsr, which accessesuncore. But it is not that important. -

Kernel PMU eventare just the events, which are exported intosysfs. I don't know how this is done (automatically by kernel all discovered PMCs on the system, or just something hard-coded, and if I add akprobe-- is it exported? etc). But the main point is that these are the same events asHardware eventor any other in the internalperf_eventsystem.And I don't know what those

$ ls /sys/devices/uncore_cbox_0/events clockticksare.

Details on Kernel PMU event

Searching through the code leads to:

$ ak "Kernel PMU" linux-source-3.13.0/tools/perf/

linux-source-3.13.0/tools/perf/util/pmu.c

629: printf(" %-50s [Kernel PMU event]\n", aliases[j]);

-- which happens in the function

void print_pmu_events(const char *event_glob, bool name_only) {

...

while ((pmu = perf_pmu__scan(pmu)) != NULL)

list_for_each_entry(alias, &pmu->aliases, list) {...}

...

/* b.t.w. list_for_each_entry is an iterator

* apparently, it takes a block of {code} and runs over some lost

* Ruby built in kernel!

*/

// then there is a loop over these aliases and

loop{ ... printf(" %-50s [Kernel PMU event]\n", aliases[j]); ... }

}

and perf_pmu__scan is in the same file:

struct perf_pmu *perf_pmu__scan(struct perf_pmu *pmu) {

...

pmu_read_sysfs(); // that's what it calls

}

-- which is also in the same file:

/* Add all pmus in sysfs to pmu list: */

static void pmu_read_sysfs(void) {...}

That's it.

Details on Hardware event and Hardware cache event

Apparently, the Hardware event come from what Intel calls "Pre-defined Architectural Performance Events", 18.2.1.2 in IA-32 Software Developer's Manual Vol 3B. And "18.1 PERFORMANCE MONITORING OVERVIEW" of the manual describes them as:

The second class of performance monitoring capabilities is referred to as architectural performance monitoring. This class supports the same counting and Interrupt-based event sampling usages, with a smaller set of available events. The visible behavior of architectural performance events is consistent across processor implementations. Availability of architectural performance monitoring capabilities is enumerated using the CPUID.0AH. These events are discussed in Section 18.2.

-- the other type is:

Starting with Intel Core Solo and Intel Core Duo processors, there are two classes of performance monitoring capa-bilities. The first class supports events for monitoring performance using counting or interrupt-based event sampling usage. These events are non-architectural and vary from one processor model to another...

And these events are indeed just links to underlying "raw" hardware events, which can be accessed via perf utility as Raw hardware event descriptor.

To check this one looks at linux-source-3.13.0/arch/x86/kernel/cpu/perf_event_intel.c:

/*

* Intel PerfMon, used on Core and later.

*/

static u64 intel_perfmon_event_map[PERF_COUNT_HW_MAX] __read_mostly =

{

[PERF_COUNT_HW_CPU_CYCLES] = 0x003c,

[PERF_COUNT_HW_INSTRUCTIONS] = 0x00c0,

[PERF_COUNT_HW_CACHE_REFERENCES] = 0x4f2e,

[PERF_COUNT_HW_CACHE_MISSES] = 0x412e,

...

}

-- and exactly 0x412e is found in "Table 18-1. UMask and Event Select Encodings for Pre-Defined Architectural Performance Events" for "LLC Misses":

Bit Position CPUID.AH.EBX | Event Name | UMask | Event Select

...

4 | LLC Misses | 41H | 2EH

-- H is for hex. All 7 are in the structure, plus [PERF_COUNT_HW_REF_CPU_CYCLES] = 0x0300, /* pseudo-encoding *. (The naming is a bit different, addresses are the same.)

Then the Hardware cache events are in structures like (in the same file):

static __initconst const u64 snb_hw_cache_extra_regs

[PERF_COUNT_HW_CACHE_MAX]

[PERF_COUNT_HW_CACHE_OP_MAX]

[PERF_COUNT_HW_CACHE_RESULT_MAX] =

{...}

-- which should be for sandy bridge?

One of these -- snb_hw_cache_extra_regs[LL][OP_WRITE][RESULT_ACCESS] is filled with SNB_DMND_WRITE|SNB_L3_ACCESS, where from the def-s above:

#define SNB_L3_ACCESS SNB_RESP_ANY

#define SNB_RESP_ANY (1ULL << 16)

#define SNB_DMND_WRITE (SNB_DMND_RFO|SNB_LLC_RFO)

#define SNB_DMND_RFO (1ULL << 1)

#define SNB_LLC_RFO (1ULL << 8)

which should equal to 0x00010102, but I don't know how to check it with some table.

And this gives an idea how it is used in perf_events:

$ ak hw_cache_extra_regs linux-source-3.13.0/arch/x86/kernel/cpu/

linux-source-3.13.0/arch/x86/kernel/cpu/perf_event.c

50:u64 __read_mostly hw_cache_extra_regs

292: attr->config1 = hw_cache_extra_regs[cache_type][cache_op][cache_result];

linux-source-3.13.0/arch/x86/kernel/cpu/perf_event.h

521:extern u64 __read_mostly hw_cache_extra_regs

linux-source-3.13.0/arch/x86/kernel/cpu/perf_event_intel.c

272:static __initconst const u64 snb_hw_cache_extra_regs

567:static __initconst const u64 nehalem_hw_cache_extra_regs

915:static __initconst const u64 slm_hw_cache_extra_regs

2364: memcpy(hw_cache_extra_regs, nehalem_hw_cache_extra_regs,

2365: sizeof(hw_cache_extra_regs));

2407: memcpy(hw_cache_extra_regs, slm_hw_cache_extra_regs,

2408: sizeof(hw_cache_extra_regs));

2424: memcpy(hw_cache_extra_regs, nehalem_hw_cache_extra_regs,

2425: sizeof(hw_cache_extra_regs));

2452: memcpy(hw_cache_extra_regs, snb_hw_cache_extra_regs,

2453: sizeof(hw_cache_extra_regs));

2483: memcpy(hw_cache_extra_regs, snb_hw_cache_extra_regs,

2484: sizeof(hw_cache_extra_regs));

2516: memcpy(hw_cache_extra_regs, snb_hw_cache_extra_regs, sizeof(hw_cache_extra_regs));

$

The memcpys are done in __init int intel_pmu_init(void) {... case:...}.

Only attr->config1 is a bit odd. But it is there, in perf_event_attr (same linux-source-3.13.0/include/uapi/linux/perf_event.h file):

...

union {

__u64 bp_addr;

__u64 config1; /* extension of config */

};

union {

__u64 bp_len;

__u64 config2; /* extension of config1 */

};

...

They are registered in kernel's perf_events system with calls to int perf_pmu_register(struct pmu *pmu, const char *name, int type) (defined in linux-source-3.13.0/kernel/events/core.c: ):

static int __init init_hw_perf_events(void)(filearch/x86/kernel/cpu/perf_event.c) with callperf_pmu_register(&pmu, "cpu", PERF_TYPE_RAW);static int __init uncore_pmu_register(struct intel_uncore_pmu *pmu)(filearch/x86/kernel/cpu/perf_event_intel_uncore.c, there are alsoarch/x86/kernel/cpu/perf_event_amd_uncore.c) with callret = perf_pmu_register(&pmu->pmu, pmu->name, -1);

So finally, all events come from hardware and everything is ok. But here one could notice: why do we have LLC-loads in perf list and not ubox1 LLC-loads, since these are HW events and they actualy come from uboxes?

That's a thing of the perf utility and its' perf_evsel structure: when you request a HW event from perf you define the event which processors you want it from (default is all), and it sets up the perf_evsel with the requested event and processors, then at aggregation is sums the counters from all processors in perf_evsel (or does some other statistics with them).

One can see it in tools/perf/builtin-stat.c:

/*

* Read out the results of a single counter:

* aggregate counts across CPUs in system-wide mode

*/

static int read_counter_aggr(struct perf_evsel *counter)

{

struct perf_stat *ps = counter->priv;

u64 *count = counter->counts->aggr.values;

int i;

if (__perf_evsel__read(counter, perf_evsel__nr_cpus(counter),

thread_map__nr(evsel_list->threads), scale) < 0)

return -1;

for (i = 0; i < 3; i++)

update_stats(&ps->res_stats[i], count[i]);

if (verbose) {

fprintf(output, "%s: %" PRIu64 " %" PRIu64 " %" PRIu64 "\n",

perf_evsel__name(counter), count[0], count[1], count[2]);

}

/*

* Save the full runtime - to allow normalization during printout:

*/

update_shadow_stats(counter, count);

return 0;

}

(So, for the utility perf a "single counter" is not even a perf_event_attr, which is a general form, fitting both SW and HW events, it is an event of your query -- the same events may come from different devices and they are aggregated.)

Also a notice: struct perf_evsel contains only 1 struct perf_evevent_attr, but it also has a field struct perf_evsel *leader; -- it is nested.

There is a feature of "(hierarchical) groups of events" in perf_events, when you can dispatch a bunch of counters together, so that they can be compared to each other and so on. Not sure how it works with independent events from kernel, core, ubox. But this nesting of perf_evsel is it. And, most likely, that's how perf manages a query of several events together.

Related videos on Youtube

xealits

Updated on September 18, 2022Comments

-

xealits over 1 year

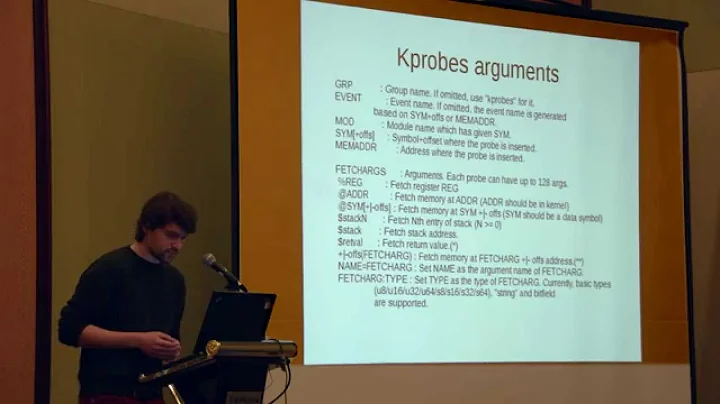

Searching for what one can monitor with

perf_eventson Linux, I cannot find whatKernel PMU eventare? Namely, withperf version 3.13.11-ckt39theperf listshows events like:branch-instructions OR cpu/branch-instructions/ [Kernel PMU event]Overall there are:

Tracepoint event Software event Hardware event Hardware cache event Raw hardware event descriptor Hardware breakpoint Kernel PMU eventand I would like to understand what they are, where they come from. I have some kind of explanation for all, but

Kernel PMU eventitem.From perf wiki tutorial and Brendan Gregg's page I get that:

-

Tracepointsare the clearest -- these are macros on the kernel source, which make a probe point for monitoring, they were introduced withftraceproject and now are used by everybody -

Softwareare kernel's low level counters and some internal data-structures (hence, they are different from tracepoints) -

Hardware eventare some very basic CPU events, found on all architectures and somehow easily accessed by kernel -

Hardware cache eventare nicknames toRaw hardware event descriptor-- it works as followsas I got it,

Raw hardware event descriptorare more (micro?)architecture-specific events thanHardware event, the events come from Processor Monitoring Unit (PMU) or other specific features of a given processor, thus they are available only on some micro-architectures (let's say "architecture" means "x86_64" and all the rest of the implementation details are "micro-architecture"); and they are accessible for instrumentation via these strange descriptorsrNNN [Raw hardware event descriptor] cpu/t1=v1[,t2=v2,t3 ...]/modifier [Raw hardware event descriptor] (see 'man perf-list' on how to encode it)-- these descriptors, which events they point to and so on is to be found in processor's manuals (PMU events in perf wiki);

but then, when people know that there is some useful event on a given processor they give it a nickname and plug it into linux as

Hardware cache eventfor ease of access-- correct me if I'm wrong (strangely all

Hardware cache eventare aboutsomething-loadsorsomething-misses-- very like the actual processor's cache..) -

now, the

Hardware breakpointmem:<addr>[:access] [Hardware breakpoint]is a hardware feature, which is probably common to most modern architectures, and works as a breakpoint in a debugger? (probably it is googlable anyway)

-

finally,

Kernel PMU eventI don't manage to google on;it also doesn't show up in the listing of Events in Brendan's perf page, so it's new?

Maybe it's just nicknames to hardware events specifically from PMU? (For ease of access it got a separate section in the list of events in addition to the nickname.) In fact, maybe

Hardware cache eventsare nicknames to hardware events from CPU's cache andKernel PMU eventare nicknames to PMU events? (Why not call itHardware PMU eventthen?..) It could be just new naming scheme -- the nicknames to hardware events got sectionized?And these events refer to things like

cpu/mem-stores/, plus since some linux version events got descriptions in/sys/devices/and:# find /sys/ -type d -name events /sys/devices/cpu/events /sys/devices/uncore_cbox_0/events /sys/devices/uncore_cbox_1/events /sys/kernel/debug/tracing/events--

debug/tracingis forftraceand tracepoints, other directories match exactly whatperf listshows asKernel PMU event.

Could someone point me to a good explanation/documentation of what

Kernel PMU eventsor/sys/..events/systems are? Also, is/sys/..events/some new effort to systemize hardware events or something alike? (Then, Kernel PMU is like "the Performance Monitoring Unit of Kernel".)PS

To give better context, not-privileged run of

perf list(tracepoints are not shown, but all 1374 of them are there) with full listings ofKernel PMU events andHardware cache events and others skipped:$ perf list List of pre-defined events (to be used in -e): cpu-cycles OR cycles [Hardware event] instructions [Hardware event] ... cpu-clock [Software event] task-clock [Software event] ... L1-dcache-load-misses [Hardware cache event] L1-dcache-store-misses [Hardware cache event] L1-dcache-prefetch-misses [Hardware cache event] L1-icache-load-misses [Hardware cache event] LLC-loads [Hardware cache event] LLC-stores [Hardware cache event] LLC-prefetches [Hardware cache event] dTLB-load-misses [Hardware cache event] dTLB-store-misses [Hardware cache event] iTLB-loads [Hardware cache event] iTLB-load-misses [Hardware cache event] branch-loads [Hardware cache event] branch-load-misses [Hardware cache event] branch-instructions OR cpu/branch-instructions/ [Kernel PMU event] branch-misses OR cpu/branch-misses/ [Kernel PMU event] bus-cycles OR cpu/bus-cycles/ [Kernel PMU event] cache-misses OR cpu/cache-misses/ [Kernel PMU event] cache-references OR cpu/cache-references/ [Kernel PMU event] cpu-cycles OR cpu/cpu-cycles/ [Kernel PMU event] instructions OR cpu/instructions/ [Kernel PMU event] mem-loads OR cpu/mem-loads/ [Kernel PMU event] mem-stores OR cpu/mem-stores/ [Kernel PMU event] ref-cycles OR cpu/ref-cycles/ [Kernel PMU event] stalled-cycles-frontend OR cpu/stalled-cycles-frontend/ [Kernel PMU event] uncore_cbox_0/clockticks/ [Kernel PMU event] uncore_cbox_1/clockticks/ [Kernel PMU event] rNNN [Raw hardware event descriptor] cpu/t1=v1[,t2=v2,t3 ...]/modifier [Raw hardware event descriptor] (see 'man perf-list' on how to encode it) mem:<addr>[:access] [Hardware breakpoint] [ Tracepoints not available: Permission denied ] -