What does 'COLLATE SQL_Latin1_General_CP1_CI_AS' do?

Solution 1

It sets how the database server sorts (compares pieces of text). in this case:

SQL_Latin1_General_CP1_CI_AS

breaks up into interesting parts:

-

latin1makes the server treat strings using charset latin 1, basically ascii -

CP1stands for Code Page 1252 -

CIcase insensitive comparisons so 'ABC' would equal 'abc' -

ASaccent sensitive, so 'ü' does not equal 'u'

P.S. For more detailed information be sure to read @solomon-rutzky's answer.

Solution 2

Please be aware that the accepted answer is a bit incomplete. Yes, at the most basic level Collation handles sorting. BUT, the comparison rules defined by the chosen Collation are used in many places outside of user queries against user data.

If "What does COLLATE SQL_Latin1_General_CP1_CI_AS do?" means "What does the COLLATE clause of CREATE DATABASE do?", then:

The COLLATE {collation_name} clause of the CREATE DATABASE statement specifies the default Collation of the Database, and not the Server; Database-level and Server-level default Collations control different things.

Server (i.e. Instance)-level controls:

- Database-level Collation for system Databases:

master,model,msdb, andtempdb. - Due to controlling the DB-level Collation of

tempdb, it is then the default Collation for string columns in temporary tables (global and local), but not table variables. - Due to controlling the DB-level Collation of

master, it is then the Collation used for Server-level data, such as Database names (i.e.namecolumn insys.databases), Login names, etc. - Handling of parameter / variable names

- Handling of cursor names

- Handling of

GOTOlabels - Default Collation used for newly created Databases when the

COLLATEclause is missing

Database-level controls:

- Default Collation used for newly created string columns (

CHAR,VARCHAR,NCHAR,NVARCHAR,TEXT, andNTEXT-- but don't useTEXTorNTEXT) when theCOLLATEclause is missing from the column definition. This goes for bothCREATE TABLEandALTER TABLE ... ADDstatements. - Default Collation used for string literals (i.e.

'some text') and string variables (i.e.@StringVariable). This Collation is only ever used when comparing strings and variables to other strings and variables. When comparing strings / variables to columns, then the Collation of the column will be used. - The Collation used for Database-level meta-data, such as object names (i.e.

sys.objects), column names (i.e.sys.columns), index names (i.e.sys.indexes), etc. - The Collation used for Database-level objects: tables, columns, indexes, etc.

Also:

- ASCII is an encoding which is 8-bit (for common usage; technically "ASCII" is 7-bit with character values 0 - 127, and "ASCII Extended" is 8-bit with character values 0 - 255). This group is the same across cultures.

- The Code Page is the "extended" part of Extended ASCII, and controls which characters are used for values 128 - 255. This group varies between each culture.

-

Latin1does not mean "ASCII" since standard ASCII only covers values 0 - 127, and all code pages (that can be represented in SQL Server, and evenNVARCHAR) map those same 128 values to the same characters.

If "What does COLLATE SQL_Latin1_General_CP1_CI_AS do?" means "What does this particular collation do?", then:

-

Because the name start with

SQL_, this is a SQL Server collation, not a Windows collation. These are definitely obsolete, even if not officially deprecated, and are mainly for pre-SQL Server 2000 compatibility. Although, quite unfortunatelySQL_Latin1_General_CP1_CI_ASis very common due to it being the default when installing on an OS using US English as its language. These collations should be avoided if at all possible.Windows collations (those with names not starting with

SQL_) are newer, more functional, have consistent sorting betweenVARCHARandNVARCHARfor the same values, and are being updated with additional / corrected sort weights and uppercase/lowercase mappings. These collations also don't have the potential performance problem that the SQL Server collations have: Impact on Indexes When Mixing VARCHAR and NVARCHAR Types. -

Latin1_Generalis the culture / locale.- For

NCHAR,NVARCHAR, andNTEXTdata this determines the linguistic rules used for sorting and comparison. - For

CHAR,VARCHAR, andTEXTdata (columns, literals, and variables) this determines the:- linguistic rules used for sorting and comparison.

- code page used to encode the characters. For example,

Latin1_Generalcollations use code page 1252,Hebrewcollations use code page 1255, and so on.

- For

-

CP{code_page}or{version}- For SQL Server collations:

CP{code_page}, is the 8-bit code page that determines what characters map to values 128 - 255. While there are four code pages for Double-Byte Character Sets (DBCS) that can use 2-byte combinations to create more than 256 characters, these are not available for the SQL Server collations. -

For Windows collations:

{version}, while not present in all collation names, refers to the SQL Server version in which the collation was introduced (for the most part). Windows collations with no version number in the name are version80(meaning SQL Server 2000 as that is version 8.0). Not all versions of SQL Server come with new collations, so there are gaps in the version numbers. There are some that are90(for SQL Server 2005, which is version 9.0), most are100(for SQL Server 2008, version 10.0), and a small set has140(for SQL Server 2017, version 14.0).I said "for the most part" because the collations ending in

_SCwere introduced in SQL Server 2012 (version 11.0), but the underlying data wasn't new, they merely added support for supplementary characters for the built-in functions. So, those endings exist for version90and100collations, but only starting in SQL Server 2012.

- For SQL Server collations:

- Next you have the sensitivities, that can be in any combination of the following, but always specified in this order:

-

CS= case-sensitive orCI= case-insensitive -

AS= accent-sensitive orAI= accent-insensitive -

KS= Kana type-sensitive or missing = Kana type-insensitive -

WS= width-sensitive or missing = width insensitive -

VSS= variation selector sensitive (only available in the version 140 collations) or missing = variation selector insensitive

-

-

Optional last piece:

-

_SCat the end means "Supplementary Character support". The "support" only affects how the built-in functions interpret surrogate pairs (which are how supplementary characters are encoded in UTF-16). Without_SCat the end (or_140_in the middle), built-in functions don't see a single supplementary character, but instead see two meaningless code points that make up the surrogate pair. This ending can be added to any non-binary, version 90 or 100 collation. -

_BINor_BIN2at the end means "binary" sorting and comparison. Data is still stored the same, but there are no linguistic rules. This ending is never combined with any of the 5 sensitivities or_SC._BINis the older style, and_BIN2is the newer, more accurate style. If using SQL Server 2005 or newer, use_BIN2. For details on the differences between_BINand_BIN2, please see: Differences Between the Various Binary Collations (Cultures, Versions, and BIN vs BIN2). -

_UTF8is a new option as of SQL Server 2019. It's an 8-bit encoding that allows for Unicode data to be stored inVARCHARandCHARdatatypes (but not the deprecatedTEXTdatatype). This option can only be used on collations that support supplementary characters (i.e. version 90 or 100 collations with_SCin their name, and version 140 collations). There is also a single binary_UTF8collation (_BIN2, not_BIN).PLEASE NOTE: UTF-8 was designed / created for compatibility with environments / code that are set up for 8-bit encodings yet want to support Unicode. Even though there are a few scenarios where UTF-8 can provide up to 50% space savings as compared to

NVARCHAR, that is a side-effect and has a cost of a slight hit to performance in many / most operations. If you need this for compatibility, then the cost is acceptable. If you want this for space-savings, you had better test, and TEST AGAIN. Testing includes all functionality, and more than just a few rows of data. Be warned that UTF-8 collations work best when ALL columns, and the database itself, are usingVARCHARdata (columns, variables, string literals) with a_UTF8collation. This is the natural state for anyone using this for compatibility, but not for those hoping to use it for space-savings. Be careful when mixing VARCHAR data using a_UTF8collation with eitherVARCHARdata using non-_UTF8collations orNVARCHARdata, as you might experience odd behavior / data loss. For more details on the new UTF-8 collations, please see: Native UTF-8 Support in SQL Server 2019: Savior or False Prophet?

-

Solution 3

The CP1 means 'Code Page 1' - technically this translates to code page 1252

Solution 4

The COLLATE keyword specify what kind of character set and rules (order, confrontation rules) you are using for string values.

For example in your case you are using Latin rules with case insensitive (CI) and accent sensitive (AS)

You can refer to this Documentation

Solution 5

This specifies the default collation for the database. Every text field that you create in tables in the database will use that collation, unless you specify a different one.

A database always has a default collation. If you don't specify any, the default collation of the SQL Server instance is used.

The name of the collation that you use shows that it uses the Latin1 code page 1, is case insensitive (CI) and accent sensitive (AS). This collation is used in the USA, so it will contain sorting rules that are used in the USA.

The collation decides how text values are compared for equality and likeness, and how they are compared when sorting. The code page is used when storing non-unicode data, e.g. varchar fields.

Related videos on Youtube

Comments

-

Thunder over 3 years

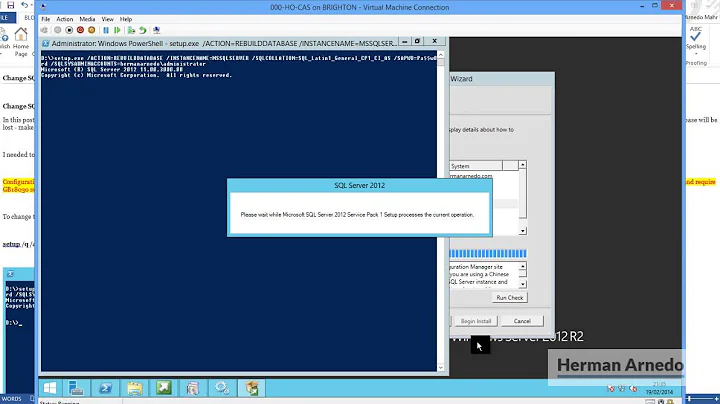

I have an SQL query to create the database in SQLServer as given below:

create database yourdb on ( name = 'yourdb_dat', filename = 'c:\program files\microsoft sql server\mssql.1\mssql\data\yourdbdat.mdf', size = 25mb, maxsize = 1500mb, filegrowth = 10mb ) log on ( name = 'yourdb_log', filename = 'c:\program files\microsoft sql server\mssql.1\mssql\data\yourdblog.ldf', size = 7mb, maxsize = 375mb, filegrowth = 10mb ) COLLATE SQL_Latin1_General_CP1_CI_AS; goIt runs fine.

While rest of the SQL is clear to be I am quite confused about the functionality of

COLLATE SQL_Latin1_General_CP1_CI_AS.Can anyone explain this to me? Also, I would like to know if creating the database in this way is a best practice?

-

RichardTheKiwi about 13 yearswrong (you cannot

notspecify a collation, although you can accept the default) wrong (it is used for unicode data too) -

Guffa about 13 years@Richard aka cyberkiwi: Check the documentation: msdn.microsoft.com/en-us/library/ms176061.aspx Specifying the collation is optional. The code page is not used for storing Unicode data, as that is stored as 16 bit Unicode code points, not as 8 bit code page indexes.

-

RichardTheKiwi about 13 yearsI read your answer wrong, but it is still wrong. A database always has a default collation = SERVER collation, not specifically

Latin1_General_CI_AS. Now I read it wrong because I half expected the statement to be about SERVER collation which does require acceptance of default in the UI. For the 2nd point, you seem to imply that collation is not used for sorting unicode data (even though you switch fromsortingtostoringin the last 2 sentences). Unicode text data also obeys collations. -

Guffa about 13 years@Richard aka cyberkiwi: I changed the paragraph about the default collation to correspond with the specific documentation that I linked to. (It differs depending on the version of server.) Regarding the second point, I can't see how I could make it clearer. The text says that the code page is used when storing non-unicode data. A code page is not used to determine sorting, neither for unicode data nor for non-unicode data.

-

Kad over 10 yearsWhat would be the difference between this and

SQL_Latin1_General_CI_AS. Specifically, CP1 got me wondering. -

Riley Major about 10 years@Kad: There doesn't seem to be a

Riley Major about 10 years@Kad: There doesn't seem to be aSQL_Latin1_General_CI_AS. Rather, there is aLatin1_General_CI_AS. SeeSELECT * FROM fn_helpcollations() where name IN ('SQL_Latin1_General_CP1_CI_AS','Latin1_General_CI_AS','SQL_Latin1_General_CI_AS');. There are subtle differences regarding sorting and comparison as between the two collations. See olcot.co.uk/sql-blogs/…. -

slartibartfast over 7 years@Kad: CP1 stands for Code Page 1252. A code page is a lookup table to map the hex value to a specific character in a character set. CP1 is shorthand for CP1252 in the Microsoft sub-culture. Windows is the only platform that uses CP1252 indigenously as it is a hold-over from DOS days. Though it is very similar to ISO 8859-1, they are not the same. There are differences in mapped characters like the euro and a few others that are not in ISO 8859-1.

-

Kris over 6 yearsWhile I did upvote this for containing so much information and effort, My answer is definitely not wrong (databases store data, database servers act on this data, sorting is acting). I chose brevity over complete mathematic precision because the OP was probably looking for enough, not all possible information.

-

Solomon Rutzky over 6 yearsHi @Kris. Thanks. To be fair, I didn't say that your answer was entirely wrong, just woefully incomplete. I have updated to hopefully clarify that. I get what you're saying, but the OP asked what the

Solomon Rutzky over 6 yearsHi @Kris. Thanks. To be fair, I didn't say that your answer was entirely wrong, just woefully incomplete. I have updated to hopefully clarify that. I get what you're saying, but the OP asked what theCOLLATEclause ofCREATE DATABASEdoes. You said one of several things that it does. Why do you assume that the OP only wants to know 10% of the answer? If all of the info is presented, each person can decide how much of it to take. But if only some info is given, then the choice was made for them. I choose to provide as much info as possible because most of it is not well known. (continued) -

Solomon Rutzky over 6 yearsWhen it comes to Collations (and encodings) most of what's out there is either incomplete or incorrect. So most folks walk away without knowing enough or thinking that they know something but being entirely wrong. People make better decisions when they have all of the info, so I find it best to offer as complete of an answer as possible. By choosing brevity, you potentially leave readers confused when they get parse errors, etc in a DB with case-sensitive or binary Collation, because name resolution wasn't mentioned. So while you're correct about sorting, I feel that by itself it's misleading.

Solomon Rutzky over 6 yearsWhen it comes to Collations (and encodings) most of what's out there is either incomplete or incorrect. So most folks walk away without knowing enough or thinking that they know something but being entirely wrong. People make better decisions when they have all of the info, so I find it best to offer as complete of an answer as possible. By choosing brevity, you potentially leave readers confused when they get parse errors, etc in a DB with case-sensitive or binary Collation, because name resolution wasn't mentioned. So while you're correct about sorting, I feel that by itself it's misleading. -

Kris over 6 yearsI think I see what you mean but I aim to give enough information rather than too much. too much information quickly becomes too complicated for a lot of people. and when I fail to give enough information for any circumstance I'll expect followup questions. (I also didn't expect quite this much attention to the topic)

-

Solomon Rutzky over 5 years@Kris I have been meaning for a while to say "Thanks!" for showing such maturity and professionalism. I am somewhat accustomed to people taking personal offense to someone saying that they are wrong, and then becoming "difficult" (or even more difficult) to interact with. But, your measured response to my, "the accepted answer is WRONG" inspired me to tone down my intro, and should serve as an example to others here on how to communicate properly and productively 😺.

Solomon Rutzky over 5 years@Kris I have been meaning for a while to say "Thanks!" for showing such maturity and professionalism. I am somewhat accustomed to people taking personal offense to someone saying that they are wrong, and then becoming "difficult" (or even more difficult) to interact with. But, your measured response to my, "the accepted answer is WRONG" inspired me to tone down my intro, and should serve as an example to others here on how to communicate properly and productively 😺. -

Kris over 5 yearsYou're welcome and nice to hear I somehow made a positive impact, but I enjoy being "wrong", it opens up opportunities to learn new things, which is great!

-

Chanky Mallick about 4 years@Kris Is there any UTF-8 alternative is there for SQL_Latin1_General_CP1_CI_AS in SQL2019 ?

Chanky Mallick about 4 years@Kris Is there any UTF-8 alternative is there for SQL_Latin1_General_CP1_CI_AS in SQL2019 ? -

Arno Hilke almost 3 years@RileyMajor archive link as the original is dead

-

Solomon Rutzky almost 3 years@Kad As Riley mentioned, that collation doesn't exist. The name is a mix of the two types of collations: 1) SQL Server collations which all have names starting with

Solomon Rutzky almost 3 years@Kad As Riley mentioned, that collation doesn't exist. The name is a mix of the two types of collations: 1) SQL Server collations which all have names starting withSQL_and include the code page number in the name (e.g.CP1,CP1255). These use older, non-Unicode sorting/comparison rules forVARCHARdata. 2) Windows collations have neitherSQL_nor the CP number in their name. These use Unicode sort/comparison rules forVARCHARdata. Please see: sqlquantumleap.com/2019/11/22/… and collations.info -

Solomon Rutzky almost 3 years@slartibartfast I just posted an article on how

Solomon Rutzky almost 3 years@slartibartfast I just posted an article on howCP1is actually a mistake that didn't get caught early enough to fix, similar to the misspelledrefererHTTP header. It means ISO-8859-1 even though that code page isn't supported anywhere in SQL Server, but someone initially thought that it was synonymous with Windows-1252. For anyone who's interested, that post is: What does “CP1” mean in “SQL_Latin1_General_CP1_CI_AS”?. Also, 1252 isn't from DOS days, that'ld be 437 and 850. -

Solomon Rutzky almost 3 years@Chanky Depending on exactly what you mean by "alternative", you are probably looking for the

Solomon Rutzky almost 3 years@Chanky Depending on exactly what you mean by "alternative", you are probably looking for theLatin1_General_100_CI_AS_SC_UTF8collation. Please also see my post on the UTF-8 collations: Native UTF-8 Support in SQL Server 2019: Savior or False Prophet?. -

Chanky Mallick almost 3 years@SolomonRutzky Yes man its been 1 year, we tried with SQL2019 utf-8 collation to make the application supports non-English without changing existing varchar columns, but it was not feasible, restricting application only to a specific 2019 version was a bad idea, then we converted the whole thing into NVARCHAR, it was tough compared to utf-8, but worthy.

Chanky Mallick almost 3 years@SolomonRutzky Yes man its been 1 year, we tried with SQL2019 utf-8 collation to make the application supports non-English without changing existing varchar columns, but it was not feasible, restricting application only to a specific 2019 version was a bad idea, then we converted the whole thing into NVARCHAR, it was tough compared to utf-8, but worthy.