What does "Memory allocated at compile time" really mean?

Solution 1

Memory allocated at compile-time means the compiler resolves at compile-time where certain things will be allocated inside the process memory map.

For example, consider a global array:

```cpp
int array[100];
```

The compiler knows at compile time the size of the array and the size of an int, so it knows the entire size of the array at compile time. Also, a global variable has static storage duration by default: it is allocated in the static memory area of the process memory space (the .data/.bss sections). Given that information, the compiler decides during compilation at what address in that static memory area the array will be.

Of course, those memory addresses are virtual addresses. The program assumes that it has its own entire memory space (from 0x00000000 to 0xFFFFFFFF, for example). That's why the compiler can make assumptions like "Okay, the array will be at address 0x00A33211". At run time, those addresses are translated to real/hardware addresses by the MMU and the OS.

Static storage objects that are initialized with values are a bit different. For example:

```cpp
int array[] = { 1 , 2 , 3 , 4 };
```

In our first example, the compiler only decides where the array will be allocated, storing that information in the executable.

In the case of initialized objects, the compiler also injects the initial values of the array into the executable and records that, once the array is allocated at program start, it must hold these values. This is data stored in the executable for the program loader to use, not extra instructions.

Here is the assembly generated by the compiler for both cases (GCC 4.8.1 with an x86-64 target):

C++ code:

```cpp
int a[4];
int b[] = { 1 , 2 , 3 , 4 };

int main()
{}
```

Output assembly:

```asm
a:
        .zero   16
b:
        .long   1
        .long   2
        .long   3
        .long   4
main:
        pushq   %rbp
        movq    %rsp, %rbp
        movl    $0, %eax
        popq    %rbp
        ret
```

As you can see, the values are directly injected into the assembly. For the array a, the compiler emits 16 zero bytes (.zero 16), because the Standard says that objects with static storage duration are zero-initialized by default:

8.5.9 (Initializers) [Note]:

Every object of static storage duration is zero-initialized at program startup before any other initialization takes place. In some cases, additional initialization is done later.

I always suggest that people disassemble their code to see what the compiler really does with the C++ code. This applies to everything from storage classes/duration (like this question) to advanced compiler optimizations. You can instruct your compiler to generate the assembly (with GCC, the -S option), but there are wonderful tools on the Internet that do this in a friendly manner. My favourite is GCC Explorer (now called Compiler Explorer, at godbolt.org).

Solution 2

Memory allocated at compile time simply means there will be no further allocation at run time: no calls to malloc, new, or other dynamic allocation methods. You'll have a fixed amount of memory usage even if you don't need all of that memory all of the time.

Isn't memory allocation by definition a runtime concept?

The memory is not in use before run time, but its allocation is handled by the system immediately before execution starts.

If I make a 1KB statically allocated variable in my C/C++ code, will that increase the size of the executable by the same amount?

Simply declaring the static will not increase the size of your executable by more than a few bytes; rather, the linker simply adds this 1KB to the memory requirement that the system's loader sets up for you immediately before execution. Declaring it with a non-zero initial value will increase the size (in order to hold that initial value).

Solution 3

Memory allocated at compile time means that when you load the program, some part of the memory will be immediately allocated, and the size and (relative) position of this allocation are determined at compile time.

```cpp
char a[32];
char b;
char c;
```

Those 3 variables are "allocated at compile time": the compiler calculates their sizes (which are fixed) at compile time. The variable a will be an offset in memory, let's say pointing to address 0; since a occupies 32 bytes (addresses 0 to 31), b will be at address 32 and c at 33 (assuming no alignment padding). So allocating 1 KB of static data will not increase the size of your code, since it will just change an offset inside it. The actual space will be allocated at load time.

Real memory allocation always happens at run time, because the kernel needs to keep track of it and to update its internal data structures (how much memory is allocated for each process, pages and so on). The difference is that the compiler already knows the size of each piece of data you are going to use, and this is allocated as soon as your program is executed.

Remember also that we are talking about relative addresses. The real address where the variable will be located will be different. At load time the kernel will reserve some memory for the process, let's say at address x, and all the hard-coded addresses contained in the executable file will be incremented by x bytes, so that variable a in the example will be at address x, b at address x+32, and so on.

Solution 4

Adding variables on the stack that take up N bytes doesn't (necessarily) increase the bin's size by N bytes. In fact, most of the time it will add only a few bytes, if any.

Let's start off with an example of how adding 1000 chars to your code will increase the bin's size in a linear fashion.

If the 1K is a string of a thousand chars, declared like so

```cpp
const char *c_string = "Here goes a thousand chars...999"; // implicit \0 at end
```

and you then opened your_compiled_bin in vim, you'd actually be able to see that string in the bin somewhere. In that case, yes: the executable will be 1K bigger, because it contains the string in full.

If, however, you allocate an array of ints, chars or longs on the stack and assign it in a loop, something along these lines

```cpp
int big_arr[1000];
for (int i = 0; i < 1000; ++i) big_arr[i] = some_computation_func(i);
```

then no, it won't increase the bin's size by 1000*sizeof(int).

Allocation at compile time means what you've now come to understand it means (based on your comments): the compiled bin contains the information the system needs to know how much memory each function/block will need when it gets executed, along with information on the stack size your application requires. That's what the system will allocate when it executes your bin and your program becomes a process.

Of course, I'm not painting the full picture here. The bin can contain information about how big a stack it will need. Based on this information (among other things), the system will reserve a chunk of memory, called the stack, over which the program has more or less free rein. Stack memory is still allocated by the system when the process (the result of your bin being executed) is started; the process then manages the stack memory for you. When a function or loop (any type of block) is executed, the variables local to that block are pushed onto the stack, and when the block ends they are removed (the stack memory is "freed", so to speak) so it can be used by other functions/blocks. So declaring int some_array[100] will add at most a few bytes to the bin (typically it just changes the constant in the function's prologue that reserves stack space), telling the system that function X will require 100*sizeof(int) plus some extra bookkeeping space.

Solution 5

On many platforms, all of the global or static allocations within each module will be consolidated by the compiler into three or fewer consolidated allocations (one for uninitialized data (often called "bss"), one for initialized writable data (often called "data"), and one for constant data ("const")), and all of the global or static allocations of each type within a program will be consolidated by the linker into one allocation for each type. For example, assuming int is four bytes, suppose a module has the following as its only static allocations:

```cpp
int a;
const int b[6] = {1,2,3,4,5,6};
char c[200];
const int d = 23;
int e[4] = {1,2,3,4};
int f;
```

it would tell the linker that it needed 208 bytes for bss, 16 bytes for "data", and 28 bytes for "const". Further, any reference to a variable would be replaced with an area selector and offset, so a, b, c, d, e, and f would be replaced by bss+0, const+0, bss+4, const+24, data+0, and bss+204, respectively.

When a program is linked, all of the bss areas from all the modules are concatenated together; likewise the data and const areas. For each module, the address of any bss-relative variables will be increased by the size of all preceding modules' bss areas (again, likewise with data and const). Thus, when the linker is done, any program will have one bss allocation, one data allocation, and one const allocation.

When a program is loaded, one of four things will generally happen depending upon the platform:

1. The executable will indicate how many bytes it needs for each kind of data and, for the initialized data area, where the initial contents can be found. It will also include a list of all the instructions which use a bss-, data-, or const-relative address. The operating system or loader will allocate the appropriate amount of space for each area and then add the starting address of that area to each instruction which needs it.

2. The operating system will allocate a chunk of memory to hold all three kinds of data, and give the application a pointer to that chunk of memory. Any code which uses static or global data will access it relative to that pointer (in many cases, the pointer will be kept in a register for the lifetime of the application).

3. The operating system will initially not allocate any memory to the application, except for what holds its binary code, but the first thing the application does will be to request a suitable allocation from the operating system, whose address it will keep in a register from then on.

4. The operating system will initially not allocate space for the application, but the application will request a suitable allocation on startup (as in 3). The application will include a list of instructions with addresses that need to be updated to reflect where memory was allocated (as in 1), but rather than having the OS loader patch the application, the application will include enough code to patch itself.

All four approaches have advantages and disadvantages. In every case, however, the compiler will consolidate an arbitrary number of static variables into a fixed small number of memory requests, and the linker will consolidate all of those into a small number of consolidated allocations. Even though an application will have to receive a chunk of memory from the operating system or loader, it is the compiler and linker which are responsible for allocating individual pieces out of that big chunk to all the individual variables that need it.


Comments


Talha Sayed (over 3 years ago): In programming languages like C and C++, people often refer to static and dynamic memory allocation. I understand the concept, but the phrase "All memory was allocated (reserved) during compile time" always confuses me.

Compilation, as I understand it, converts high-level C/C++ code to machine language and outputs an executable file. How is memory "allocated" in a compiled file? Isn't memory always allocated in RAM, with all the virtual memory management stuff?

Isn't memory allocation by definition a runtime concept?

If I make a 1KB statically allocated variable in my C/C++ code, will that increase the size of the executable by the same amount?

This is one of the pages where the phrase is used under the heading "Static allocation".


Cholthi Paul Ttiopic (over 8 years ago): The code and data are totally segregated in most modern architectures. While source files contain both code and data in the same place, the bin only has references to data. This means static data in the source is only resolved as references.


Talha Sayed (over 10 years ago): Thanks. This clarifies a lot. So the compiler outputs something equivalent to "reserve memory from 0xABC till 0xXYZ for variable array[] etc." and then the loader uses that to really allocate it just before it runs the program?


Manu343726 (over 10 years ago): @TalhaSayed Exactly. See the edit to look at the example.


Talha Sayed (over 10 years ago): Thanks a lot. One more question: do local variables for functions also get allocated the same way during compile time?


Elias Van Ootegem (over 10 years ago): @TalhaSayed: Yes, that's what I meant when I said: "information the system requires to know how much memory what function/block will be requiring." The moment you call a function, the system will allocate the required memory for that function. The moment the function returns, that memory will be freed again.


TiTAnSMG (over 10 years ago): I don't agree with: "The program is written in a way that it thinks it has its own entire memory space (From 0x00000000 to 0xFFFFFFFF for example)."


phant0m (over 10 years ago): @Secko Can you elaborate? Thanks!


Manu343726 (over 10 years ago): @Secko I have simplified things. It's only a mention that the program works through virtual memory, but as the question is not about virtual memory I have not expanded on the topic. I was only pointing out that the compiler can make assumptions about memory addresses at compile time, thanks to virtual memory.


TiTAnSMG (over 10 years ago): @Manu343726 OK, then I have just one thing left to ask about in that phrase. If what you have said is just a simplification, then what do you mean by "program is written"?


TiTAnSMG (over 10 years ago): @phant0m Manu343726 has already explained my doubts about it.


Manu343726 (over 10 years ago): @Secko I mean the program (as a concept, the binary code for example) is written in a way that it (the program) works as if it had the entire memory space to itself (even more memory), although that's not true.


TiTAnSMG (over 10 years ago): @Manu343726 BTW, I agree completely with this: "assumptions about memory addresses at compile-time, thanks to virtual memory". If this had been in the answer, I wouldn't have commented. Somehow the phrase I commented on wasn't as clear to me.


phant0m (over 10 years ago): As for the comments in your C code: that's not actually/necessarily what happens. For instance, the string will most likely be allocated only once, at compile time. Thus it is never "freed" (also, I think that terminology is usually only used when you allocate something dynamically), and i isn't "freed" either. If i were to reside in memory, it'd just get pushed to the stack, something that's not freed in that sense of the word, disregarding that i or c will be held in registers the entire time. Of course, this all depends on the compiler, which means it's not that black and white.

phant0m (over 10 years ago): @Secko Ah sorry, I thought that bit was in the post from the beginning, hence my confusion about what you were disagreeing with.


TiTAnSMG (over 10 years ago): @Manu343726 Correct me if I'm wrong, but by "written" you actually mean compiled and linked together into binary code.


Elias Van Ootegem (over 10 years ago): @phant0m: I never said the string is allocated on the stack, only that the pointer to it would be; the string itself would reside in read-only memory. I know the memory associated with the local variables doesn't get freed in the sense of free() calls, but the stack memory they used is free for use by other functions once the function I listed returns. I removed the code, since it may be confusing to some.

Manu343726 (over 10 years ago): @Secko Yes. Mmm, "generated" is a better term, I think.


phant0m (over 10 years ago): Ah I see. In that case take my comment to mean "I was confused by your wording."


Radiodef (over 10 years ago): "Its allocated in the static mamory area of the process memory space" Reading that allocated some static mammary areas in my process memory space.


Manu343726 (over 10 years ago): @Radiodef LOL... Fixed :)


Quuxplusone (over 10 years ago): I like answers that use the Socratic method, but I still downvoted you for the erroneous conclusion that "the compiler generates instructions to allocate that memory somehow at runtime". Check out the top-voted answer to see how a compiler can "allocate memory" without generating any runtime "instructions". (Note that "instructions" in an assembly-language context has a specific meaning, i.e., executable opcodes. You might have been using the word colloquially to mean something like "recipe", but in this context that'll just confuse the OP.)


Quuxplusone (over 10 years ago): This answer is confusing (or confused) in that it talks about "the application heap", "the OS heap", and "the GC heap" as if these were all meaningful concepts. I infer that by #1 you were trying to say that some programming languages might (hypothetically) use a "heap allocation" scheme that allocates memory out of a fixed-size buffer in the .data section, but that seems unrealistic enough to be harmful to the OP's understanding. Re #2 and #3, the presence of a GC doesn't really change anything. And re #5, you omitted the relatively MUCH more important distinction between .data and .bss.

jmoreno (over 10 years ago): @Quuxplusone: I read (and upvoted) that answer. And no, my answer doesn't specifically address the issue of initialized variables. It also doesn't address self-modifying code. While that answer is excellent, it didn't address what I consider an important issue: putting things in context. Hence my answer, which I hope will help the OP (and others) stop and think about what is or can be going on when they have issues they do not understand.


Mercurial (over 10 years ago): "Every object of static storage duration is zero-initialized at program startup before any other initialization takes place. In some cases, additional initialization is done later." Is this compiler-dependent or a language rule? I suppose dynamically created objects don't get zero-initialized because C++ prefers performance over everything else?


Manu343726 (over 10 years ago): @Mercurial It's a language rule. It's point 8.5.9 of the ISO C++ Standard.


Manu343726 (over 10 years ago): @Mercurial That's exactly why zero-initialization is not performed on local or dynamic objects. Note that the Standard entry (and this question) talks about static storage duration.


jnovacho (over 10 years ago): Does this mean I can create a hello_world executable a few gigabytes big, if I allocate a big enough static array?

Manu343726 (over 10 years ago): @jnovacho I think that's implementation-defined. From the OS point of view, on most common architectures the OS gives a process 4GB of memory space. But I don't know what the maximum of that space is for each memory segment. Again, that depends on the OS. OTOH, the compiler has limits on initializer sizes, raw-string lengths, etc.


Dwayne Towell (over 10 years ago): Not to muddy the water too much, but I feel it needs to be pointed out that compilers don't actually determine where variables will be allocated at compile time. Technically the linker does that by collecting all the items in the same segment together and ordering them. Only after they have been ordered can the "final" address (which doesn't include relocatable binaries and virtual memory and so on) be determined.


Elias Van Ootegem (over 10 years ago): I honestly don't mind/care being down-voted, but please explain why. If I'm making false assumptions/assertions, I'd like to know, too, so I can mend my ways and write better code.


Elias Van Ootegem (over 10 years ago): @Quuxplusone: Sorry if I'm making false allegations here, but I take it you were one of the people who -1'ed my answer, too. If so, would you mind pointing out which part of my answer was the main reason for doing so, and would you also care to check my edit? I know I've skipped a few bits about the true internals of how the stack memory is managed, so I have now added a note to my answer saying it isn't 100% accurate :)


Quuxplusone (over 10 years ago): Can't speak for anyone else, but my downvote was because your answer didn't explain the why or how of the binary file's size increase, only the what, and even some of that was incorrect. It feels like you were just collecting data points via ls -l a.out and then making up abstract "explanations" that you thought fitted the data, rather than seriously having deep knowledge of the true answer and attempting to convey relevant parts of that knowledge to the questioner.

Quuxplusone (over 10 years ago): Sample factual errors: Adding a local (stack) variable just changes a constant in the relevant function prologue (i.e., instructions in .text); once you have a stack frame at all, adding more variables doesn't usually change the size of the binary. Adding a zero-initialized static (in .bss) doesn't change the size of the binary at all (only the value stored as "size of .bss" in the binary), and certainly doesn't generate any executable instructions.

Elias Van Ootegem (over 10 years ago): @Quuxplusone: I admit, I don't know how the stack memory is allocated, and I don't know assembly. I know I took a couple of short-cuts, and I know I stated a couple of things that weren't entirely true. I never claimed my answer would be 100% accurate. But in my defense, the OP's question actually boiled down to what compile-time allocation means, and whether it changes the bin size, and I did answer that to an extent. But could you tell me where I said that adding a stack var increases the bin size? (The first lines of my answer always started with "adding a variable doesn't necessarily...")


Dan (over 10 years ago): @DwayneTowell - you aren't muddying the waters, you are clearing them IMO. On a deeply embedded system, typically all addresses are fixed at link time, and the image is loaded (flashed, etc.) into same. More elaborate systems might have a loader which copies or moves code, and some systems implement both a loader and virtual memory. This question actually touches on a host of concepts: static vs. dynamic vs. automatic allocation, compilers, linkers, loaders, virtual memory, operating system vs. bare-metal, bootloaders, etc.


Manu343726 (over 10 years ago): @Dan That's exactly why I have not expanded the answer. As you pointed out, this question covers a lot of topics (from compilers to program loaders, OS/compiler-dependent things). My intention was only to provide a clear picture to the OP. I think adding all that specific information hides the concept.


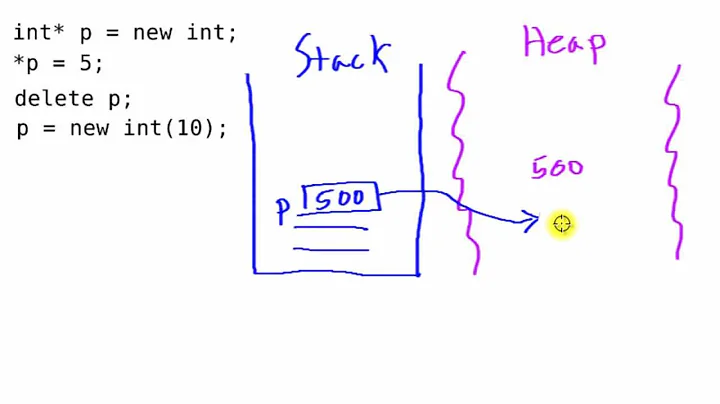

Bartek Banachewicz (over 10 years ago): If those diagrams showed the difference between static and dynamic memory, they would be more useful IMHO.


user3258051 (over 10 years ago): I deliberately avoided this to keep things simple. My focus is to explain this fundamental concept clearly, without much technical clutter. As for static variables, this point has been established well by previous answers, so I skipped it.


Bartek Banachewicz (over 10 years ago): Eh, this concept isn't particularly complicated, so I don't see why you'd make it simpler than it needs to be, but since it's meant only as a complementary answer, OK.


Talha Sayed (about 10 years ago): @jmoreno The point you made about "Can it mean that memory on chips that have not yet been manufactured, for computers that have not yet been designed, is somehow being reserved? No." is exactly the false meaning that the word "allocation" implies, which confused me from the start. I like this answer because it refers to exactly the problem that I was trying to point out. None of the answers here really touched that particular point. Thanks.


Suraj Jain (over 7 years ago): @Manu343726 If I change int a[4] to static int a[4], then .zero 16 is not there in the assembly output.

Suraj Jain (over 7 years ago): @Manu343726 Why is that?


Suraj Jain (over 7 years ago): @Manu343726 The compiler should generate a zero initialization of 16 bytes, because the Standard says that static stored things should be initialized to zero by default. So what really happens?


Manu343726 (over 7 years ago): @SurajJain Maybe the compiler optimized the array out, since it's not used in this translation unit and, now that you made it private to the TU with static, it cannot be referenced externally. Check if you can find the array in the executable's symbol table.


Suraj Jain (over 7 years ago): @Manu343726 I cannot find any reference to it in the GCC Explorer-generated assembly output. Link: godbolt.org/g/c4lN4x


Suraj Jain (over 7 years ago): If I write static int i[4] = {2 , 3 , 5 ,5 }, will it increase my executable size by 16 bytes? You said "Simply declaring the static will not increase the size of your executable more than a few bytes. Declaring it with an initial value that is non-zero will". What does "declaring it with an initial value will" mean?

mah (over 7 years ago): Your executable has two areas for static data: one for uninitialized statics and one for initialized statics. The uninitialized area is really just a size indication; when your program is run, that size is used to grow the static storage area, but the program itself didn't have to hold anything more than how much uninitialized data is used. For initialized statics, your program must hold not only the size of (each) static, but also what it gets initialized to. Thus in your example, your program will have 2, 3, 5, and 5 in it.


mah (over 7 years ago): It's implementation-defined as to where it gets placed / how it gets allocated, but I'm not sure I understand the need to know.


mdo123 (over 7 years ago): @Manu343726 Let's say I have three computers, A, B, and C. All three computers are identical in hardware, software, OS, etc. They are all brand new and have never been used. If I write a simple program on computer A and compile it, then take that executable and run it on computer B and computer C, will computer B and computer C use the same exact memory addresses and still be identical, or will the memory usage be different on computers B and C? I'm still trying to visualize from point A to Z how you write a program and it ends up in memory.