What is the fastest way to parse a line in Delphi?

Solution 1

- Use PChar incrementing for speed of processing

- If some tokens are not needed, only copy token data on demand

- Copy PChar to local variable when actually scanning through characters

- Keep source data in a single buffer unless you must handle line by line, and even then, consider handling line processing as a separate token in the lexer recognizer

- Consider processing a byte array buffer that has come straight from the file, if you definitely know the encoding; in Delphi 2009 or later (where PChar is a PWideChar), use PAnsiChar instead of PChar, unless of course you know the encoding is UTF-16LE.

- If you know that the only whitespace is going to be #32 (ASCII space), or a similarly limited set of characters, there may be some clever bit manipulation hacks that can let you process 4 bytes at a time using Integer scanning. I wouldn't expect big wins here though, and the code will be as clear as mud.
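A couple of the points above (scanning a raw single-byte buffer through PAnsiChar, and folding the whitespace test into one unsigned compare) can be sketched as follows. This is only an illustration under assumptions: `CountTokens` and the sample text are made up for the example, the buffer is assumed to be #0-terminated, and it does not attempt the 4-bytes-at-a-time trick.

```pascal
program AnsiScan;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}

// Hypothetical helper: counts whitespace-separated tokens in a
// #0-terminated buffer of single-byte characters.
function CountTokens(Buf: PAnsiChar): Integer;
var
  cp: PAnsiChar; // local copy so the compiler can keep it in a register
begin
  Result := 0;
  cp := Buf;
  while True do
  begin
    // Ord(cp^) - 1 is below 32 exactly for #1..#32. For #0 the result
    // is -1, which the Cardinal cast turns into a huge value, so the
    // terminator and all printable characters fail this one comparison.
    while Cardinal(Ord(cp^) - 1) < 32 do
      Inc(cp);
    if cp^ = #0 then
      Break;
    Inc(Result);
    // token body: everything above #32
    while cp^ > #32 do
      Inc(cp);
  end;
end;

const
  Sample: AnsiString = 'Token-1   Here-is-the-Next-Token      Last-Token-on-Line';

begin
  WriteLn(CountTokens(PAnsiChar(Sample))); // prints 3
end.
```

Nothing is copied here; a token only needs to become a string when the caller actually asks for its text.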

Here's a sample lexer that should be pretty efficient, but it assumes that all source data is in a single string. Reworking it to handle buffers is moderately tricky due to very long tokens.

    type
      TLexer = class
      private
        FData: string;
        FTokenStart: PChar;
        FCurrPos: PChar;
        function GetCurrentToken: string;
      public
        constructor Create(const AData: string);
        function GetNextToken: Boolean;
        property CurrentToken: string read GetCurrentToken;
      end;

    { TLexer }

    constructor TLexer.Create(const AData: string);
    begin
      FData := AData;
      FCurrPos := PChar(FData);
      FTokenStart := FCurrPos; // CurrentToken is empty until GetNextToken is called
    end;

    function TLexer.GetCurrentToken: string;
    begin
      // the token text is only copied when somebody asks for it
      SetString(Result, FTokenStart, FCurrPos - FTokenStart);
    end;

    function TLexer.GetNextToken: Boolean;
    var
      cp: PChar;
    begin
      cp := FCurrPos; // copy to local to permit register allocation

      // skip whitespace; this test could be converted to an unsigned int
      // subtraction and compare for only a single branch
      while (cp^ > #0) and (cp^ <= #32) do
        Inc(cp);

      // using null terminator for end of file
      Result := cp^ <> #0;

      if Result then
      begin
        FTokenStart := cp;
        Inc(cp);
        while cp^ > #32 do
          Inc(cp);
      end;

      FCurrPos := cp;
    end;

Solution 2

Here is a lame ass implementation of a very simple lexer. This might give you an idea.

Note the limitations of this example - no buffering involved, no Unicode (this is an excerpt from a Delphi 7 project). You would probably need those in a serious implementation.

    { Implements a simple lexer class. }
    unit Simplelexer;

    interface

    uses Classes, Sysutils, Types, dialogs;

    type
      ESimpleLexerFinished = class(Exception) end;

      TProcTableProc = procedure of object;

      // A very simple lexer that can handle numbers, words, symbols - no comment handling
      TSimpleLexer = class(TObject)
      private
        FLineNo: Integer;
        Run: Integer;
        fOffset: Integer;
        fRunOffset: Integer; // helper for fOffset
        fTokenPos: Integer;
        fSource: string;     // keeps the fed text alive while pSource points into it
        pSource: PChar;
        fProcTable: array[#0..#255] of TProcTableProc;
        fUseSimpleStrings: Boolean;
        fIgnoreSpaces: Boolean;
        procedure MakeMethodTables;
        procedure IdentProc;
        procedure NewLineProc;
        procedure NullProc;
        procedure NumberProc;
        procedure SpaceProc;
        procedure SymbolProc;
        procedure UnknownProc;
      public
        constructor Create;
        destructor Destroy; override;
        procedure Feed(const S: string);
        procedure Next;
        function GetToken: string;
        function GetLineNo: Integer;
        function GetOffset: Integer;
        property IgnoreSpaces: boolean read fIgnoreSpaces write fIgnoreSpaces;
        property UseSimpleStrings: boolean read fUseSimpleStrings write fUseSimpleStrings;
      end;

    implementation

    { TSimpleLexer }

    constructor TSimpleLexer.Create;
    begin
      MakeMethodTables;
      fUseSimpleStrings := false;
      fIgnoreSpaces := false;
    end;

    destructor TSimpleLexer.Destroy;
    begin
      inherited;
    end;

    procedure TSimpleLexer.Feed(const S: string);
    begin
      Run := 0;
      FLineNo := 1;
      fOffset := 1;
      fRunOffset := 0; // reset so offsets are right when the lexer is fed again
      fTokenPos := 0;
      fSource := S;    // hold a reference; pSource must not outlive the string
      pSource := PChar(fSource);
    end;

    procedure TSimpleLexer.Next;
    begin
      fTokenPos := Run;
      fOffset := Run - fRunOffset + 1;
      fProcTable[pSource[Run]]; // dispatch on the current character
    end;

    function TSimpleLexer.GetToken: string;
    begin
      SetString(Result, (pSource + fTokenPos), Run - fTokenPos);
    end;

    function TSimpleLexer.GetLineNo: Integer;
    begin
      Result := FLineNo;
    end;

    function TSimpleLexer.GetOffset: Integer;
    begin
      Result := fOffset;
    end;

    procedure TSimpleLexer.MakeMethodTables;
    var
      I: Char;
    begin
      for I := #0 to #255 do
        case I of
          '@', '&', '}', '{', ':', ',', ']', '[', '*',
          '^', ')', '(', ';', '/', '=', '-', '+', '#', '>', '<', '$',
          '.', '"', #39:
            fProcTable[I] := SymbolProc;
          #13, #10: fProcTable[I] := NewLineProc;
          'A'..'Z', 'a'..'z', '_': fProcTable[I] := IdentProc;
          #0: fProcTable[I] := NullProc;
          '0'..'9': fProcTable[I] := NumberProc;
          #1..#9, #11, #12, #14..#32: fProcTable[I] := SpaceProc;
        else
          fProcTable[I] := UnknownProc;
        end;
    end;

    procedure TSimpleLexer.UnknownProc;
    begin
      Inc(Run);
    end;

    procedure TSimpleLexer.SymbolProc;
    begin
      if fUseSimpleStrings then
      begin
        if pSource[Run] = '"' then
        begin
          Inc(Run);
          while pSource[Run] <> '"' do
          begin
            // test for the terminator before advancing, so an unterminated
            // string never reads past the end of the source
            if pSource[Run] = #0 then
              NullProc;
            Inc(Run);
          end;
        end;
        Inc(Run);
      end
      else
        Inc(Run);
    end;

    procedure TSimpleLexer.IdentProc;
    begin
      while pSource[Run] in ['_', 'A'..'Z', 'a'..'z', '0'..'9'] do
        Inc(Run);
    end;

    procedure TSimpleLexer.NumberProc;
    begin
      while pSource[Run] in ['0'..'9'] do
        Inc(Run);
    end;

    procedure TSimpleLexer.SpaceProc;
    begin
      while pSource[Run] in [#1..#9, #11, #12, #14..#32] do
        Inc(Run);
      if fIgnoreSpaces then Next;
    end;

    procedure TSimpleLexer.NewLineProc;
    begin
      Inc(FLineNo);
      Inc(Run);
      // treat CR LF as a single line break
      case pSource[Run - 1] of
        #13:
          if pSource[Run] = #10 then Inc(Run);
      end;
      fOffset := 1;
      fRunOffset := Run;
    end;

    procedure TSimpleLexer.NullProc;
    begin
      // end of input is signalled to the caller by an exception
      raise ESimpleLexerFinished.Create('');
    end;

    end.

Solution 3

I made a lexical analyser based on a state engine (DFA). It works with a table and is pretty fast, though there are possibly faster options.

It also depends on the language. A simple language can possibly have a smart algorithm.

The table is an array of records each containing 2 chars and 1 integer. For each token the lexer walks through the table, starting at position 0:

    state := 0;
    result := tkNoToken;
    while (result = tkNoToken) do begin
      if table[state].c1 > table[state].c2 then
        result := table[state].value
      else if (table[state].c1 <= c) and (c <= table[state].c2) then begin
        c := GetNextChar();
        state := table[state].value;
      end else
        Inc(state);
    end;

It is simple and works like a charm.
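To make the table walk concrete, here is a small self-contained sketch with one possible table. Everything specific in it is an assumption made for illustration: the `TRow` layout, the token constants, and the two-rule grammar (numbers and identifiers) are not from the original lexer. Rows for one state sit next to each other and end with a sentinel row (c1 > c2) whose value is the token recognised when no range matches.

```pascal
program DfaLexer;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}

const
  tkNoToken = 0;
  tkError   = 1;
  tkNumber  = 2;
  tkIdent   = 3;

type
  TRow = record
    c1, c2: AnsiChar; // inclusive range; c1 > c2 marks a sentinel row
    value: Integer;   // row index to jump to, or token kind on a sentinel
  end;

const
  Table: array[0..11] of TRow = (
    (c1: '0'; c2: '9'; value: 5),        // 0: start state
    (c1: 'A'; c2: 'Z'; value: 7),
    (c1: 'a'; c2: 'z'; value: 7),
    (c1: '_'; c2: '_'; value: 7),
    (c1: #1;  c2: #0;  value: tkError),  // nothing matched at the start
    (c1: '0'; c2: '9'; value: 5),        // 5: inside a number
    (c1: #1;  c2: #0;  value: tkNumber),
    (c1: '0'; c2: '9'; value: 7),        // 7: inside an identifier
    (c1: 'A'; c2: 'Z'; value: 7),
    (c1: 'a'; c2: 'z'; value: 7),
    (c1: '_'; c2: '_'; value: 7),
    (c1: #1;  c2: #0;  value: tkIdent)
  );

// Walks the table from row 0 and leaves cp just past the token.
function NextToken(var cp: PAnsiChar): Integer;
var
  state: Integer;
  c: AnsiChar;
begin
  state := 0;
  c := cp^;
  Result := tkNoToken;
  while Result = tkNoToken do
  begin
    if Table[state].c1 > Table[state].c2 then
      Result := Table[state].value
    else if (Table[state].c1 <= c) and (c <= Table[state].c2) then
    begin
      Inc(cp);      // plays the role of GetNextChar
      c := cp^;
      state := Table[state].value;
    end
    else
      Inc(state);
  end;
end;

var
  Source, tok: AnsiString;
  cp, start: PAnsiChar;
  kind: Integer;

begin
  Source := 'abc 42 x_1';
  cp := PAnsiChar(Source);
  while True do
  begin
    while (cp^ > #0) and (cp^ <= #32) do
      Inc(cp);                   // whitespace is skipped outside the DFA
    if cp^ = #0 then
      Break;
    start := cp;
    kind := NextToken(cp);
    if kind = tkError then
      Inc(cp);                   // step over a character no rule accepts
    SetString(tok, start, cp - start);
    WriteLn(kind, ' ', tok);
  end;
end.
```

With this table the program should report `abc` and `x_1` as kind 3 and `42` as kind 2. Adding a token type means adding rows, not code, which is the appeal of the approach.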

Solution 4

If speed is of the essence, custom code is the answer. Check out the Windows API that will map your file into memory. You can then just use a pointer to the next character to do your tokens, marching through as required.

This is my code for doing a mapping:

    procedure TMyReader.InitialiseMapping(szFilename : string);
    var
      // nError : DWORD;
      bGood : boolean;
    begin
      bGood := False;
      m_hFile := CreateFile(PChar(szFilename), GENERIC_READ, 0, nil, OPEN_EXISTING, 0, 0);
      if m_hFile <> INVALID_HANDLE_VALUE then
      begin
        m_hMap := CreateFileMapping(m_hFile, nil, PAGE_READONLY, 0, 0, nil);
        if m_hMap <> 0 then
        begin
          m_pMemory := MapViewOfFile(m_hMap, FILE_MAP_READ, 0, 0, 0);
          if m_pMemory <> nil then
          begin
            // byte-wise pointer arithmetic; casting the pointer to Integer
            // would truncate the address in a 64-bit build
            htlArray := Pointer(PAnsiChar(m_pMemory) + m_dwDataPosition);
            bGood := True;
          end
          else
          begin
            // nError := GetLastError;
          end;
        end;
      end;
      if not bGood then
        raise Exception.Create('Unable to map token file into memory');
    end;
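For completeness, here is a rough, Windows-only sketch of the whole round trip: map the file, march a pointer through it, then unmap and close the handles. It is not the code from my reader class; `CountTokens`, the program name and the command-line handling are made up for the example, and it assumes a single-byte encoding and a non-empty file smaller than 4 GB. Note that a mapped view is not #0-terminated, so the scan has to be bounded by the file size.

```pascal
program MapScan;
{$APPTYPE CONSOLE}

uses
  Windows, SysUtils;

// Hypothetical helper: counts tokens in Len bytes starting at P.
// The end pointer replaces the usual null-terminator test.
function CountTokens(P: PAnsiChar; Len: Cardinal): Integer;
var
  cp, pEnd: PAnsiChar;
begin
  Result := 0;
  cp := P;
  pEnd := P + Len;
  while cp < pEnd do
  begin
    while (cp < pEnd) and (cp^ <= #32) do
      Inc(cp);
    if cp = pEnd then
      Break;
    Inc(Result);
    while (cp < pEnd) and (cp^ > #32) do
      Inc(cp);
  end;
end;

var
  hFile, hMap: THandle;
  pView: Pointer;
  Size: DWORD;

begin
  if ParamCount < 1 then
  begin
    WriteLn('usage: MapScan <file>');
    Halt(1);
  end;
  hFile := CreateFile(PChar(ParamStr(1)), GENERIC_READ, FILE_SHARE_READ, nil,
    OPEN_EXISTING, FILE_FLAG_SEQUENTIAL_SCAN, 0);
  if hFile = INVALID_HANDLE_VALUE then
    RaiseLastOSError;
  try
    Size := GetFileSize(hFile, nil); // low 32 bits only: files under 4 GB
    hMap := CreateFileMapping(hFile, nil, PAGE_READONLY, 0, 0, nil);
    if hMap = 0 then
      RaiseLastOSError;              // also what happens for an empty file
    try
      pView := MapViewOfFile(hMap, FILE_MAP_READ, 0, 0, 0);
      if pView = nil then
        RaiseLastOSError;
      try
        WriteLn(CountTokens(PAnsiChar(pView), Size));
      finally
        UnmapViewOfFile(pView);
      end;
    finally
      CloseHandle(hMap);
    end;
  finally
    CloseHandle(hFile);
  end;
end.
```

The nested try/finally blocks release the view and both handles in reverse order even when a later step fails.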

Solution 5

I think the biggest bottleneck is always going to be getting the file into memory. Once you have it in memory (obviously not all of it at once, but I would work with buffers if I were you), the actual parsing should be insignificant.

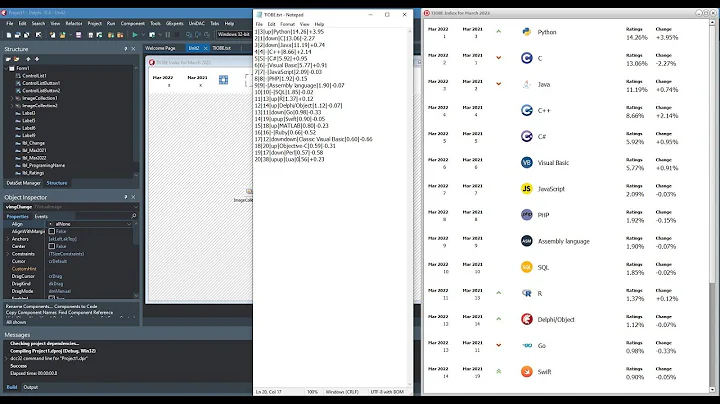

Related videos on Youtube

lkessler


Updated on June 04, 2022

Comments

-

lkessler almost 2 years

I have a huge file that I must parse line by line. Speed is of the essence.

Example of a line:

    Token-1   Here-is-the-Next-Token      Last-Token-on-Line
          ^                        ^
       Current                 Position
       Position              after GetToken

GetToken is called, returning "Here-is-the-Next-Token" and sets the CurrentPosition to the position of the last character of the token so that it is ready for the next call to GetToken. Tokens are separated by one or more spaces.

Assume the file is already in a StringList in memory. It fits in memory easily, say 200 MB.

I am worried only about the execution time for the parsing. What code will produce the absolute fastest execution in Delphi (Pascal)?

-

lkessler over 15 years

Sorry. I didn't mean the fastest way to "write" the code. I really wanted the code that would be fastest. I've now edited the question to make that obvious.

-

lkessler over 15 years

No. It fits in memory easily. Say 200 MB. Assume it's already in a StringList. I'll edit the question to clarify this.

-

Barry Kelly over 15 years

DFA state transitions can be implemented as a table, yes, but a different way to implement them is implicitly via the program counter. It usually ends up being clearer and more efficient than a table-driven DFA, which is more suited to automatic generation.

-

Barry Kelly over 15 years

Using PChar directly rather than indexing, and copying the PChar location into a local so that a register can be allocated to it, are a couple of simple optimisations you could apply to your approach. Also, determining token type can be efficiently done with a case statement rather than table+func.
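A minimal sketch of what these two comments describe, with the state kept implicitly in the program counter and the token type picked by a case statement on the first character. The names (`TTokenKind`, `NextToken`, `KindName`) and the tiny token set are assumptions for illustration, not code from any of the answers.

```pascal
program CaseLexer;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}

type
  TTokenKind = (tkEnd, tkIdent, tkNumber, tkSymbol);

const
  KindName: array[TTokenKind] of string = ('end', 'ident', 'number', 'symbol');

// Skips whitespace, classifies the next token and advances cp past it.
// Start receives the address of the token's first character.
function NextToken(var cp: PAnsiChar; out Start: PAnsiChar): TTokenKind;
var
  p: PAnsiChar; // local copy so a register can be allocated to it
begin
  p := cp;
  while (p^ > #0) and (p^ <= #32) do
    Inc(p);
  Start := p;
  case p^ of
    #0:
      Result := tkEnd;
    'A'..'Z', 'a'..'z', '_':
      begin
        Result := tkIdent;
        repeat
          Inc(p);
        until not (p^ in ['A'..'Z', 'a'..'z', '_', '0'..'9']);
      end;
    '0'..'9':
      begin
        Result := tkNumber;
        repeat
          Inc(p);
        until not (p^ in ['0'..'9']);
      end;
  else
    // anything else is treated as a one-character symbol
    Result := tkSymbol;
    Inc(p);
  end;
  cp := p;
end;

var
  Source, tok: AnsiString;
  cp, start: PAnsiChar;
  kind: TTokenKind;

begin
  Source := 'x1 := 42;';
  cp := PAnsiChar(Source);
  kind := NextToken(cp, start);
  while kind <> tkEnd do
  begin
    SetString(tok, start, cp - start);
    WriteLn(KindName[kind], ' ', tok);
    kind := NextToken(cp, start);
  end;
end.
```

Each branch of the case is one DFA state written out as straight-line code, so there is no table lookup or method-pointer call per token.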

-

lkessler over 15 years

I read my file using TFileStream.Create, Read, TEncoding.GetBufferEncoding and Encoding.GetString. This loads a StringList very fast. I understand that memory-mapped files are often faster for random access, but never for sequential access. Also I would still have to do the encoding.

-

lkessler over 15 years

Actually NOT. It took .04 seconds for a simple Read File of a 25 MB file into the Buffer and .17 seconds to Encode it (to convert ASCII to Unicode). Then it took 4.5 seconds to read the 25 million characters and parse out the parts of the line. So I need the speed in the parser.
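For anyone wanting to check numbers like these on their own machine, a rough timing harness might look like the sketch below. It is an assumption-laden illustration: the input is synthetic (about 25 million characters of 9-character tokens), `CountTokens` is a made-up stand-in for the real parser, and `Now` has coarse resolution, so treat the result as a ballpark figure only.

```pascal
program TimeParse;
{$IFDEF FPC}{$MODE DELPHI}{$ENDIF}
{$APPTYPE CONSOLE}

uses
  SysUtils, DateUtils;

// Stand-in for the parser being measured: counts tokens separated by
// characters at or below #32 in a #0-terminated string.
function CountTokens(P: PChar): Integer;
var
  cp: PChar;
begin
  Result := 0;
  cp := P;
  while True do
  begin
    while (cp^ > #0) and (cp^ <= #32) do
      Inc(cp);
    if cp^ = #0 then
      Break;
    Inc(Result);
    while cp^ > #32 do
      Inc(cp);
  end;
end;

var
  Data: string;
  i, N: Integer;
  T0: TDateTime;

begin
  // synthetic input: nine 'x' characters followed by a space, repeated
  SetLength(Data, 25000000);
  for i := 1 to Length(Data) do
    if i mod 10 = 0 then
      Data[i] := ' '
    else
      Data[i] := 'x';

  T0 := Now;
  N := CountTokens(PChar(Data));
  WriteLn(N, ' tokens in ', MilliSecondsBetween(Now, T0), ' ms');
end.
```

Timing the read, the encoding step and the parse separately in this way shows which stage actually dominates before any of them is optimised.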