What is the fastest way to transpose a matrix in C++?

Solution 1

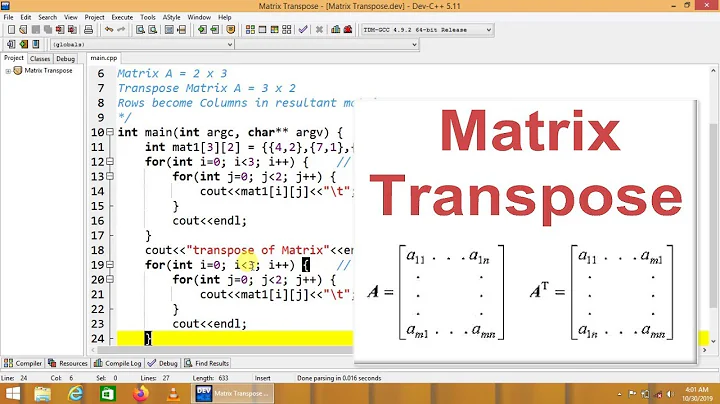

This is a good question. There are many reason you would want to actually transpose the matrix in memory rather than just swap coordinates, e.g. in matrix multiplication and Gaussian smearing.

First let me list one of the functions I use for the transpose (EDIT: please see the end of my answer where I found a much faster solution)

void transpose(float *src, float *dst, const int N, const int M) {

#pragma omp parallel for

for(int n = 0; n<N*M; n++) {

int i = n/N;

int j = n%N;

dst[n] = src[M*j + i];

}

}

Now let's see why the transpose is useful. Consider matrix multiplication C = A*B. We could do it this way.

for(int i=0; i<N; i++) {

for(int j=0; j<K; j++) {

float tmp = 0;

for(int l=0; l<M; l++) {

tmp += A[M*i+l]*B[K*l+j];

}

C[K*i + j] = tmp;

}

}

That way, however, is going to have a lot of cache misses. A much faster solution is to take the transpose of B first

transpose(B);

for(int i=0; i<N; i++) {

for(int j=0; j<K; j++) {

float tmp = 0;

for(int l=0; l<M; l++) {

tmp += A[M*i+l]*B[K*j+l];

}

C[K*i + j] = tmp;

}

}

transpose(B);

Matrix multiplication is O(n^3) and the transpose is O(n^2), so taking the transpose should have a negligible effect on the computation time (for large n). In matrix multiplication loop tiling is even more effective than taking the transpose but that's much more complicated.

I wish I knew a faster way to do the transpose (Edit: I found a faster solution, see the end of my answer). When Haswell/AVX2 comes out in a few weeks it will have a gather function. I don't know if that will be helpful in this case but I could image gathering a column and writing out a row. Maybe it will make the transpose unnecessary.

For Gaussian smearing what you do is smear horizontally and then smear vertically. But smearing vertically has the cache problem so what you do is

Smear image horizontally

transpose output

Smear output horizontally

transpose output

Here is a paper by Intel explaining that http://software.intel.com/en-us/articles/iir-gaussian-blur-filter-implementation-using-intel-advanced-vector-extensions

Lastly, what I actually do in matrix multiplication (and in Gaussian smearing) is not take exactly the transpose but take the transpose in widths of a certain vector size (e.g. 4 or 8 for SSE/AVX). Here is the function I use

void reorder_matrix(const float* A, float* B, const int N, const int M, const int vec_size) {

#pragma omp parallel for

for(int n=0; n<M*N; n++) {

int k = vec_size*(n/N/vec_size);

int i = (n/vec_size)%N;

int j = n%vec_size;

B[n] = A[M*i + k + j];

}

}

EDIT:

I tried several function to find the fastest transpose for large matrices. In the end the fastest result is to use loop blocking with block_size=16 (Edit: I found a faster solution using SSE and loop blocking - see below). This code works for any NxM matrix (i.e. the matrix does not have to be square).

inline void transpose_scalar_block(float *A, float *B, const int lda, const int ldb, const int block_size) {

#pragma omp parallel for

for(int i=0; i<block_size; i++) {

for(int j=0; j<block_size; j++) {

B[j*ldb + i] = A[i*lda +j];

}

}

}

inline void transpose_block(float *A, float *B, const int n, const int m, const int lda, const int ldb, const int block_size) {

#pragma omp parallel for

for(int i=0; i<n; i+=block_size) {

for(int j=0; j<m; j+=block_size) {

transpose_scalar_block(&A[i*lda +j], &B[j*ldb + i], lda, ldb, block_size);

}

}

}

The values lda and ldb are the width of the matrix. These need to be multiples of the block size. To find the values and allocate the memory for e.g. a 3000x1001 matrix I do something like this

#define ROUND_UP(x, s) (((x)+((s)-1)) & -(s))

const int n = 3000;

const int m = 1001;

int lda = ROUND_UP(m, 16);

int ldb = ROUND_UP(n, 16);

float *A = (float*)_mm_malloc(sizeof(float)*lda*ldb, 64);

float *B = (float*)_mm_malloc(sizeof(float)*lda*ldb, 64);

For 3000x1001 this returns ldb = 3008 and lda = 1008

Edit:

I found an even faster solution using SSE intrinsics:

inline void transpose4x4_SSE(float *A, float *B, const int lda, const int ldb) {

__m128 row1 = _mm_load_ps(&A[0*lda]);

__m128 row2 = _mm_load_ps(&A[1*lda]);

__m128 row3 = _mm_load_ps(&A[2*lda]);

__m128 row4 = _mm_load_ps(&A[3*lda]);

_MM_TRANSPOSE4_PS(row1, row2, row3, row4);

_mm_store_ps(&B[0*ldb], row1);

_mm_store_ps(&B[1*ldb], row2);

_mm_store_ps(&B[2*ldb], row3);

_mm_store_ps(&B[3*ldb], row4);

}

inline void transpose_block_SSE4x4(float *A, float *B, const int n, const int m, const int lda, const int ldb ,const int block_size) {

#pragma omp parallel for

for(int i=0; i<n; i+=block_size) {

for(int j=0; j<m; j+=block_size) {

int max_i2 = i+block_size < n ? i + block_size : n;

int max_j2 = j+block_size < m ? j + block_size : m;

for(int i2=i; i2<max_i2; i2+=4) {

for(int j2=j; j2<max_j2; j2+=4) {

transpose4x4_SSE(&A[i2*lda +j2], &B[j2*ldb + i2], lda, ldb);

}

}

}

}

}

Solution 2

This is going to depend on your application but in general the fastest way to transpose a matrix would be to invert your coordinates when you do a look up, then you do not have to actually move any data.

Solution 3

Some details about transposing 4x4 square float (I will discuss 32-bit integer later) matrices with x86 hardware. It's helpful to start here in order to transpose larger square matrices such as 8x8 or 16x16.

_MM_TRANSPOSE4_PS(r0, r1, r2, r3) is implemented differently by different compilers. GCC and ICC (I have not checked Clang) use unpcklps, unpckhps, unpcklpd, unpckhpd whereas MSVC uses only shufps. We can actually combine these two approaches together like this.

t0 = _mm_unpacklo_ps(r0, r1);

t1 = _mm_unpackhi_ps(r0, r1);

t2 = _mm_unpacklo_ps(r2, r3);

t3 = _mm_unpackhi_ps(r2, r3);

r0 = _mm_shuffle_ps(t0,t2, 0x44);

r1 = _mm_shuffle_ps(t0,t2, 0xEE);

r2 = _mm_shuffle_ps(t1,t3, 0x44);

r3 = _mm_shuffle_ps(t1,t3, 0xEE);

One interesting observation is that two shuffles can be converted to one shuffle and two blends (SSE4.1) like this.

t0 = _mm_unpacklo_ps(r0, r1);

t1 = _mm_unpackhi_ps(r0, r1);

t2 = _mm_unpacklo_ps(r2, r3);

t3 = _mm_unpackhi_ps(r2, r3);

v = _mm_shuffle_ps(t0,t2, 0x4E);

r0 = _mm_blend_ps(t0,v, 0xC);

r1 = _mm_blend_ps(t2,v, 0x3);

v = _mm_shuffle_ps(t1,t3, 0x4E);

r2 = _mm_blend_ps(t1,v, 0xC);

r3 = _mm_blend_ps(t3,v, 0x3);

This effectively converted 4 shuffles into 2 shuffles and 4 blends. This uses 2 more instructions than the implementation of GCC, ICC, and MSVC. The advantage is that it reduces port pressure which may have a benefit in some circumstances. Currently all the shuffles and unpacks can go only to one particular port whereas the blends can go to either of two different ports.

I tried using 8 shuffles like MSVC and converting that into 4 shuffles + 8 blends but it did not work. I still had to use 4 unpacks.

I used this same technique for a 8x8 float transpose (see towards the end of that answer). https://stackoverflow.com/a/25627536/2542702. In that answer I still had to use 8 unpacks but I manged to convert the 8 shuffles into 4 shuffles and 8 blends.

For 32-bit integers there is nothing like shufps (except for 128-bit shuffles with AVX512) so it can only be implemented with unpacks which I don't think can be convert to blends (efficiently). With AVX512 vshufi32x4 acts effectively like shufps except for 128-bit lanes of 4 integers instead of 32-bit floats so this same technique might be possibly with vshufi32x4 in some cases. With Knights Landing shuffles are four times slower (throughput) than blends.

Solution 4

If the size of the arrays are known prior then we could use the union to our help. Like this-

#include <bits/stdc++.h>

using namespace std;

union ua{

int arr[2][3];

int brr[3][2];

};

int main() {

union ua uav;

int karr[2][3] = {{1,2,3},{4,5,6}};

memcpy(uav.arr,karr,sizeof(karr));

for (int i=0;i<3;i++)

{

for (int j=0;j<2;j++)

cout<<uav.brr[i][j]<<" ";

cout<<'\n';

}

return 0;

}

Solution 5

transposing without any overhead (class not complete):

class Matrix{

double *data; //suppose this will point to data

double _get1(int i, int j){return data[i*M+j];} //used to access normally

double _get2(int i, int j){return data[j*N+i];} //used when transposed

public:

int M, N; //dimensions

double (*get_p)(int, int); //functor to access elements

Matrix(int _M,int _N):M(_M), N(_N){

//allocate data

get_p=&Matrix::_get1; // initialised with normal access

}

double get(int i, int j){

//there should be a way to directly use get_p to call. but i think even this

//doesnt incur overhead because it is inline and the compiler should be intelligent

//enough to remove the extra call

return (this->*get_p)(i,j);

}

void transpose(){ //twice transpose gives the original

if(get_p==&Matrix::get1) get_p=&Matrix::_get2;

else get_p==&Matrix::_get1;

swap(M,N);

}

}

can be used like this:

Matrix M(100,200);

double x=M.get(17,45);

M.transpose();

x=M.get(17,45); // = original M(45,17)

of course I didn't bother with the memory management here, which is crucial but different topic.

Related videos on Youtube

mans

Updated on September 22, 2020Comments

-

mans over 3 years

I have a matrix (relatively big) that I need to transpose. For example assume that my matrix is

a b c d e f g h i j k l m n o p q rI want the result be as follows:

a g m b h n c I o d j p e k q f l rWhat is the fastest way to do this?

-

Andy Prowl almost 11 yearsThat's called "transposing". Rotating by 90 degrees is a completely different notion.

Andy Prowl almost 11 yearsThat's called "transposing". Rotating by 90 degrees is a completely different notion. -

Some programmer dude almost 11 yearsBesides, that's not really 90 degrees is it? If it was the first two lines would be

Some programmer dude almost 11 yearsBesides, that's not really 90 degrees is it? If it was the first two lines would bem g aandn h b. -

High Performance Mark almost 11 yearsAnd the fastest way is not to rotate it but to simply swap the index order when you access the array.

High Performance Mark almost 11 yearsAnd the fastest way is not to rotate it but to simply swap the index order when you access the array. -

Damon almost 11 yearsIf Intel intrinsic macros count as "C", that would be

_MM_TRANSPOSE(). :-) -

taocp almost 11 yearsNo matter how fast it is, you have to access all the elements of the matrix anyway.

taocp almost 11 yearsNo matter how fast it is, you have to access all the elements of the matrix anyway. -

Matthieu M. almost 11 years@HighPerformanceMark: I would guess it depends, if you then wish to access the matrix repetitively in row order, having a "transposed" flag will hit you hard.

Matthieu M. almost 11 years@HighPerformanceMark: I would guess it depends, if you then wish to access the matrix repetitively in row order, having a "transposed" flag will hit you hard. -

NealB almost 11 yearsIf your matrix can be represented in linear memory (1D array) and Rows <> Columns (ie. not square), then this answer might be of some help: stackoverflow.com/a/3514733/192510

-

Marc Claesen almost 11 years@HighPerformanceMark if the matrix is stored as a 2D array, swapping indices will not work when the number of columns and rows are not equal. You will end up accessing memory outside of the array!

Marc Claesen almost 11 years@HighPerformanceMark if the matrix is stored as a 2D array, swapping indices will not work when the number of columns and rows are not equal. You will end up accessing memory outside of the array! -

Eric Postpischil almost 11 yearsTransposing matrices is notorious for the problems it causes with memory caches. If your array is large enough that the performance of a transpose is significant, and you cannot avoid transposing by simply providing an interface with swapped indices, then your best option is to use an existing library routine for transposing large matrices. Experts have already done this work, and you should use it.

-

Eric Postpischil almost 11 yearsThere is some useful information in this question. (Among other things: Making your matrix larger can make transposition faster.)

-

Admin almost 11 yearsTurns out loop tiling/blocking helps for the transpose as well. stackoverflow.com/questions/5200338/…

Admin almost 11 yearsTurns out loop tiling/blocking helps for the transpose as well. stackoverflow.com/questions/5200338/… -

Admin almost 11 yearsSo I looked into this and updated my answer. I found a solution which is much faster than what I was using using loop blocking.

Admin almost 11 yearsSo I looked into this and updated my answer. I found a solution which is much faster than what I was using using loop blocking. -

Admin almost 11 yearsI found, yet again, a faster solution using SSE, loop blocking, and OpenMP. I updated my answer.

Admin almost 11 yearsI found, yet again, a faster solution using SSE, loop blocking, and OpenMP. I updated my answer.

-

-

taocp almost 11 yearsBy invert coordinates, do you mean switch x and y axis?

taocp almost 11 yearsBy invert coordinates, do you mean switch x and y axis? -

Agentlien almost 11 yearsThis is great if it's a small matrix or you only read from it once. However, if the transposed matrix is large and needs to be reused many times, you may still to save a fast transposed version to get better memory access pattern. (+1, btw)

-

beaker almost 11 years@Agentlien: Why would A[j][i] be any slower than A[i][j]?

beaker almost 11 years@Agentlien: Why would A[j][i] be any slower than A[i][j]? -

Agentlien almost 11 years@beaker If you have a large matrix, different rows/columns may occupy different cache lines/pages. In this case, you'd want to iterate over elements in such a way that you access adjacent elements after each other. Otherwise, it can lead to every element access becoming a cache miss, which completely destroys performance.

-

Matthieu M. almost 11 years@beaker: it has to do with caching at CPU level (supposing that the matrix is a single big blob of memory), the cache lines are then effective lines of the matrix, and the prefetcher may fetch the next few lines. If you switch access, the CPU cache/prefetcher still work line by line whilst you access column by column, the performance drop can be dramatic.

Matthieu M. almost 11 years@beaker: it has to do with caching at CPU level (supposing that the matrix is a single big blob of memory), the cache lines are then effective lines of the matrix, and the prefetcher may fetch the next few lines. If you switch access, the CPU cache/prefetcher still work line by line whilst you access column by column, the performance drop can be dramatic. -

Shafik Yaghmour almost 11 years@taocp Basically, you would need some sort of flag to indicate it is transposed and then request for say

(i,j)would be mapped to(j,i) -

phoeagon almost 11 yearsI'd rather think it would be faster if you exchange the two loops, due to a smaller cache miss penalty at writing than reading.

-

NealB almost 11 yearsThis only works for a square matrix. A rectangular matrix is a whole different problem!

-

Eric Postpischil almost 11 yearsThe question asks for the fastest way. This is just a way. What makes you think it is fast, let alone fastest? For large matrices, this will thrash cache and have terrible performance.

-

Eric Postpischil almost 11 years@NealB: How do you figure that?

-

NealB almost 11 years@EricPostpischil The OP is asking about a relatively large matrix so I assume they wanted to do it "in place" to avoid allocating double the memory. When this is done the base address of the source and destination matrices the same. Transposing by flipping Row and Column indices will only work for square matrices. There are methods to get this right for rectangular matrices but they are somewhat more complex.

-

Eric Postpischil almost 11 years@NealB: Those criticisms are inapplicable to this code. This code is not incorrect for non-square matrices.

-

Admin almost 11 yearsThat code is fine for non-square matrices (although not very optimal). I think @EricPostpischil is thinking of the alogirthm for in-situ transpose. That's much harder en.wikipedia.org/wiki/….

Admin almost 11 yearsThat code is fine for non-square matrices (although not very optimal). I think @EricPostpischil is thinking of the alogirthm for in-situ transpose. That's much harder en.wikipedia.org/wiki/…. -

Eric Postpischil almost 11 years@raxman: You may have addressed the wrong person or misread the statement that the code is “not incorrect”.

-

user877329 over 9 yearsYou have an overhead from your function pointer that has to be followed for each element access.

-

Antony Thomas about 9 yearsM[i][j]=M[j][i] will only work if it were to be square matrix; else it would throw an index exception.

Antony Thomas about 9 yearsM[i][j]=M[j][i] will only work if it were to be square matrix; else it would throw an index exception. -

ulyssis2 over 7 yearsNice shot, but I am not sure 'Matrix multiplication is O(n^3)', I think it is O(n^2).

-

saurabheights over 7 years@ulyssis2 It's O(n^3), unless you use Strassen's Matrix Multiplication(O(n^2.8074)). user2088790: This is very well done. Keeping this in my personal collection. :)

saurabheights over 7 years@ulyssis2 It's O(n^3), unless you use Strassen's Matrix Multiplication(O(n^2.8074)). user2088790: This is very well done. Keeping this in my personal collection. :) -

Peter Cordes over 7 yearsYou can use

Peter Cordes over 7 yearsYou can useshufpson integer data. If you're doing a lot of shuffling, it might be worth it to do it all in the FP domain forshufps+blendps, especially if you don't have the equally-efficient AVX2vpblenddavailable. Also, on Intel SnB-family hardware, there's no extra bypass delay for usingshufpsbetween integer instructions likepaddd. (There is a bypass delay for mixingblendpswithpaddd, according to Agner Fog's SnB testing, though.) -

Z boson over 7 years@PeterCordes, I need to review domain changes again. Is there some table (maybe an answer on SO) that summaries the domain change penalty for Core2-Skylake? In any case I have given more thought to this. I see now why wim and you kept mentioning

Z boson over 7 years@PeterCordes, I need to review domain changes again. Is there some table (maybe an answer on SO) that summaries the domain change penalty for Core2-Skylake? In any case I have given more thought to this. I see now why wim and you kept mentioningvinsertf64x4in my 16x16 transpose answer instead ofvinserti64x4. If I am reading then writing the matrix then it certainly does not matter if I use the floating point domain or integer domain since the transpose is just moving data. -

Peter Cordes over 7 yearsAgner's tables list domains per-instruction for Core2 and Nehalem (and AMD I think), but not SnB-family. Agner's microarch guide just has a paragraph saying that it's down to 1c and often 0 on SnB, with some examples. Intel's optimization manual has a table I think, but I haven't tried to grok it so I don't remember how much detail it has. I do recall it not being totally obvious what category a given instruction would be in.

Peter Cordes over 7 yearsAgner's tables list domains per-instruction for Core2 and Nehalem (and AMD I think), but not SnB-family. Agner's microarch guide just has a paragraph saying that it's down to 1c and often 0 on SnB, with some examples. Intel's optimization manual has a table I think, but I haven't tried to grok it so I don't remember how much detail it has. I do recall it not being totally obvious what category a given instruction would be in. -

Peter Cordes over 7 yearsEven if you aren't just writing back to memory, it's only 1 extra clock for the whole transpose. The extra delay for each operand can be happening in parallel (or staggered fashion) as the consumer of the transpose starts to read registers written by shuffles or blends. Out-of-order execution allows the first few FMAs or whatever to start while the last few shuffles are finishing, but there's no chain of dypass delays, just an extra at most one.

Peter Cordes over 7 yearsEven if you aren't just writing back to memory, it's only 1 extra clock for the whole transpose. The extra delay for each operand can be happening in parallel (or staggered fashion) as the consumer of the transpose starts to read registers written by shuffles or blends. Out-of-order execution allows the first few FMAs or whatever to start while the last few shuffles are finishing, but there's no chain of dypass delays, just an extra at most one. -

wim over 7 yearsNicw answer! The intel 64-ia-32-architectures-optimization-manual, table 2-3, lists bypass delays for Skylake, maybe that is of interest to you. Table 2-8 for Haswell looks quite different.

-

wim over 7 yearsI think on Skylake

vinsertf64x4andvinserti64x4are interchangeable. I did not had a reason to mention one or the other. I was just thinking of 64x4 bits of data. -

Z boson about 7 yearsIn case, anyone wants to know who wrote this answer it was I. I quit SO once, got over it, and came back.

Z boson about 7 yearsIn case, anyone wants to know who wrote this answer it was I. I quit SO once, got over it, and came back. -

étale-cohomology about 7 years@ulyssis2 Naive matrix multiplication is most definitely O(n^3), and, as far as I know, compute kernels implement the naive algorithm (I think this is because Strassen's ends up doing way more operations (additions), which is bad if you can do fast products, but I could be wrong). It is an open problem whether matrix multiplication can be O(n^2) or not.

-

Jack Wasey about 6 yearsin addition, if you are passing a matrix between applications which are not both column-major, or not both row-major, transposition is required.

Jack Wasey about 6 yearsin addition, if you are passing a matrix between applications which are not both column-major, or not both row-major, transposition is required. -

Jorge Bellon over 4 yearsIt is usually a better option to rely on a linear algebra library to do the work for you. Modern day libraries such as Intel MKL, OpenBLAS, etc. provide dynamic CPU dispatching which selects the best implementation available for your hardware (for example, wider vector registers than SSE might be available: AVX AVX2, AVX512...), so you don't need to make a non-portable program to get a fast program.

-

Sopel over 3 yearsPlease note that the last SSE snippet won't work correctly if the number of rows and the number of columns are not multiples of 4. It will leave the border cells untouched.

Sopel over 3 yearsPlease note that the last SSE snippet won't work correctly if the number of rows and the number of columns are not multiples of 4. It will leave the border cells untouched. -

Doğuş over 3 yearsI'm new in C/C++, but this looks genius. Because union uses shared memory location for its members, you can read that memory differently. Thus, you get a transposed matrix without doing a new array allocation. Am I right?

Doğuş over 3 yearsI'm new in C/C++, but this looks genius. Because union uses shared memory location for its members, you can read that memory differently. Thus, you get a transposed matrix without doing a new array allocation. Am I right? -

jezza almost 2 yearsI don't think this is correct. This just prints the elements in the same order with a different row size. The transpose requires swapping rows and columns. What @Doğuş refers to can be achieved as described in the main post's comments 'just swap the index order when you access the array'.