What is the point of delay slots?

Solution 1

Most processors these days use pipelines. The ideas and problems from the H&P book(s) are used everywhere. At the time of those original writings, I would assume the actual hardware matched that particular notion of a pipeline. fetch, decode, execute, write back.

Basically a pipeline is an assembly line, with four main stages in the line, so you have at most four instructions be worked on at once. Which confuses the notion of how many clocks does it take to execute an instruction, well it takes more than one clock, but if you have some/many executing in parallel then the "average" can approach or exceed one per clock.

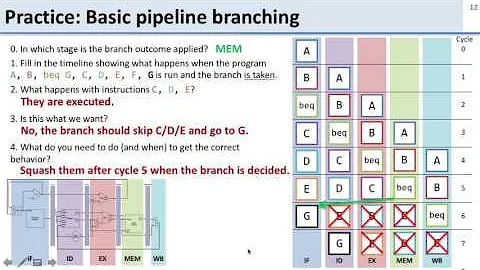

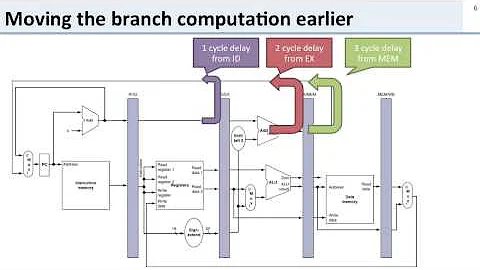

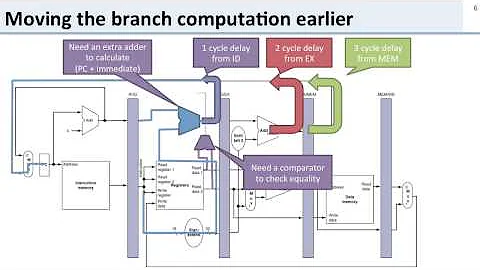

When you take a branch though the assembly line fails. The instructions in the fetch and decode stage have to be tossed, and you have to start filling again, so you take a hit of a few clocks to fetch, decode, then back to executing. The idea of the branch shadow or delay slot is to recover one of those clocks. If you declare that the instruction after a branch is always executed then when a branch is taken the instruction in the decode slot also gets executed, the instruction in the fetch slot is discarded and you have one hole of time not two. So instead of execute, empty, empty, execute, execute you now have execute, execute, empty, execute, execute... in the execute stage of the pipeline. The branch is 50% less painful, your overall average execution speed improves, etc.

ARM does not have a delay slot, but it gives the illusion of a pipeline as well, by declaring that the program counter is two instructions ahead. Any operation that relies on the program counter (pc-relative addressing) must compute the offset using a pc that is two instructions ahead, for ARM instructions this is 8 bytes for original thumb 4 bytes and when you add in thumb2 instructions it gets messy.

These are illusions at this point outside academics, the pipelines are deeper, have lots of tricks, etc, in order for legacy code to keep working, and/or not having to re-define how instructions work for each architecture change (imagine mips rev x, 1 delay slot, rev y 2 delay slots, rev z 3 slots if condition a and 2 slots if condition b and 1 slot if condition c) the processor goes ahead and executes the first instruction after a branch, and discards the other handful or dozen after as it re-fills the pipe. How deep the pipes really are is often not shared with the public.

I saw a comment about this being a RISC thing, it may have started there but CISC processors use the same exact tricks, just giving the illusion of the legacy instruction set, at times the CISC processor is no more than a RISC or VLIW core with a wrapper to emulate the legacy CISC instruction set (microcoded).

Watch the how its made show. Visualize an assembly line, each step in the line has a task. What if one step in the line ran out of blue whatsits, and to make the blue and yellow product you need the blue whatsits. And you cant get new blue whatsits for another week because someone screwed up. So you have to stop the line, change the supplies to each stage, and make the red and green product for a while, which normally could have been properly phased in without dumping the line. That is like what happens with a branch, somewhere deep in the assembly line, something causes the line to have to change, dump the line. the delay slot is a way to recover one product from having to be discarded in the line. Instead of N products coming out before the line stopped, N+1 products came out per production run. Execution of code is like bursts of production runs, you often get short, sometimes long, linear execution paths before hitting a branch to go to another short execution path, branch another short execution path...

Solution 2

Wouldn't you expect the code after a branch not to run in case the branch is taken?

But it's already too late. The whole purpose of a CPU pipeline is that you want to complete an instruction on every cycle. The only way you can achieve that is by fetching an instruction every cycle. So the code after the branch instruction has already been fetched and is in-flight before the CPU notices that the branch has to be taken.

What is the point of this?

There is no point. It's not a feature, it's merely an artifact of this kind of pipeline design.

Solution 3

Even though the instruction appears in the program after the branch, it actually runs before the branch is taken. Check out the wikipedia page about the delay slot and the branch hazard.

Solution 4

The idea of the RISC architecture is to simplify the decode and optimize the pipelines for speed. The CPU attempts to overlap instruction execution by pipelining and so several instructions are being executed at once.

The point of the delay slot specifically is to execute an instruction that has already made it through part of the pipeline and is now in a slot that would otherwise just have to be thrown away.

An optimizer could take the first instruction at the branch target and move it to the delay slot, getting it executed "for free".

The feature did not go mainstream, primarily because the world standardized on existing ISA1 designs, i.e., x86 and x86-64, but also for another reason.

The quadratic explosion in transistor counts made very sophisticated decoders possible. When the architecturally visible ISA is being translated into micro-ops anyway, small hacks like the delay slot become unimportant.

1. ISA: Instruction Set Architecture

Solution 5

In the textbook example of pipelined implementation, a CPU fetches, decodes, executes, and writes back. These stages all happen in different clock cycles so in effect, each instruction completes in 4 cycles. However while the first opcode is about to be decoded, the next loads from memory. When the CPU is fully occupied, there are parts of 4 different instructions handled simultaneously and the throughput of the CPU is one instruction per clock cycle.

When in the machine code there is a sequence:

sub r0, #1

bne loop

xxx

The processor can feed back information from write back stage of sub r0, #1 to execute stage of bne loop, but at the same time the xxx is already in the stage fetch. To simplify the necessity of unrolling the pipeline, the CPU designers choose to use a delay slot instead. After the instruction in delay slot is fetched, the fetch unit has the proper address of branch target. An optimizing compiler only rarely needs to put a NOP in the delay slot, but inserts there an instruction that is necessarily needed on both possible branch targets.

Related videos on Youtube

James

Updated on January 05, 2021Comments

-

James over 3 years

So from my understanding of delay slots, they occur when a branch instruction is called and the next instruction following the branch also gets loaded from memory. What is the point of this? Wouldn't you expect the code after a branch not to run in case the branch is taken? Is it to save time in case the branch isnt taken?

I am looking at a pipeline diagram and it seems the instruction after branch is getting carried out anyway..

-

Oliver Charlesworth about 11 yearsDo you understand the concept of a CPU pipeline?

-

Oliver Charlesworth about 11 yearsOk, then that's the thing to focus on ;) Once you are clear about how there are multiple instructions in-flight simultaneously, it should become apparent why branch delay slots can exist.

-

fjardon about 11 yearsAnother thing to consider is one of the initial goal of the RISC architecture was to reach the 1 instruction executed per cycle limit. As a jump requires two cycles there is the need to execute the instruction placed after the jump. Other architectures may execute this instruction and use complex schemes to not commit its result in order to simulate they didn't process this instruction.

-

-

James about 11 yearsso if the instruction after branch increments a value by 1 and the instruction after branch taken decrements by 1, it will increment it anyway then decrement assuming the branch is taken?

-

James about 11 yearsare delay slots then an inconvenience and not a tool? I can't see how that would be useful..

-

Oliver Charlesworth about 11 years@James: Absolutely. They're an artifact of RISC-style pipelines, and are generally a pain. But given that they exist unavoidably on these architectures, compilers and cunning assembly-programmers may take advantage of them.

-

James about 11 yearswhat if we were to place a breakpoint at the branch instruction? would the delay slot instruction still run?

-

Oliver Charlesworth about 11 years@James: I suspect that depends on the specific architecture, and the details of how it implements breakpoints.

-

James about 11 yearsone more question. couldn't we just place some dummy instruction to account for the delay slot?

-

Oliver Charlesworth about 11 years@James: Absolutely, we could just put a

nopthere (and indeed some compilers do this). But that's sub-optimal, in the sense that it becomes a wasted cycle. So compilers often look for ways to put something useful in the delay slot. -

Pekka over 7 yearsPlease stop vandalizing your content; it's pointless, as it will be restored to its old state anyway. The only thing it achieves is to make your exit from the site so much less dignified.

Pekka over 7 yearsPlease stop vandalizing your content; it's pointless, as it will be restored to its old state anyway. The only thing it achieves is to make your exit from the site so much less dignified. -

Peter Cordes over 7 yearsMore importantly, a newer microarchitecture with a longer pipeline would need multiple "branch delay" slots to hide the fetch/decode bubble introduced by a branch. Exposing micro-architectural details like the branch-delay slot works great for the first-generation CPUs, but after that it's just extra baggage for newer implementations of the same instruction set, which they have to support while actually using branch prediction to hide the bubble. A page fault or something in an instruction in the branch delay slot is tricky, because execution has to re-run it, but still take the branch.

Peter Cordes over 7 yearsMore importantly, a newer microarchitecture with a longer pipeline would need multiple "branch delay" slots to hide the fetch/decode bubble introduced by a branch. Exposing micro-architectural details like the branch-delay slot works great for the first-generation CPUs, but after that it's just extra baggage for newer implementations of the same instruction set, which they have to support while actually using branch prediction to hide the bubble. A page fault or something in an instruction in the branch delay slot is tricky, because execution has to re-run it, but still take the branch. -

Kindred over 5 years(This comment can be ignored) Is that a meme? There is no point. Yeah, we could just put a

nopoint there. -

Kindred over 5 years+1 for the An optimizing compiler only rarely needs to put a NOP in the delay slot, but I don't understand other part.