Which filesystem for large LVM of disks (8 TB)?

Solution 1

It's not a filesystem problem; it's the disks' physical limitations. Here's some data:

SATA drives are commonly specified with an unrecoverable read error (URE) rate of 1 per 10^14 bits read. That works out to roughly one unreadable sector per ~12.5 TB read, on average, even if the disks are working fine.

This means that without RAID you will lose data even if no drive fails; RAID is your only option.

If you choose RAID5 (usable capacity of n-1 disks, where n is the number of disks), it's still not enough. With a 10TB RAID5 built from 6 x 2TB HDDs, you have roughly a 20% chance of a drive failing in any given year. Once a single disk has failed, the rebuild has to read all ~10TB on the five surviving disks, so because of UREs you have only about a 50% chance of rebuilding the RAID5 and recovering 100% of your data.
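The ~50% rebuild figure can be checked with a back-of-the-envelope model. This is a minimal Python sketch, assuming the usual spec-sheet rate of one URE per 10^14 bits read and treating every bit as an independent trial (real errors cluster, so take the result as a rough estimate only); the function names are illustrative:

```python
import math

TB = 10**12  # decimal terabyte, as used on drive labels
URE_BITS = 10**14  # assumed spec: at most 1 unrecoverable read error per 10^14 bits


def p_no_ure(bytes_read: float, ure_bits: float = URE_BITS) -> float:
    """Probability of reading `bytes_read` bytes without hitting a URE.

    Models each bit as an independent trial failing with probability
    1/ure_bits; real errors cluster, so this is only a rough estimate.
    """
    bits = bytes_read * 8
    # (1 - p) ** bits, computed with log1p to avoid rounding 1 - 1e-14
    return math.exp(bits * math.log1p(-1.0 / ure_bits))


def raid5_rebuild_ok(n_disks: int, disk_bytes: float) -> float:
    """A RAID5 rebuild must read every surviving disk end to end."""
    return p_no_ure((n_disks - 1) * disk_bytes)


if __name__ == "__main__":
    print(f"Data read per expected URE: {URE_BITS / 8 / TB:.1f} TB")
    print(f"6 x 2 TB RAID5 rebuild succeeds: {raid5_rebuild_ok(6, 2 * TB):.0%}")
```

For 6 x 2 TB this prints 12.5 TB per expected URE and a rebuild success chance of about 45%, in line with the rough 50% quoted above.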

Basically, with today's high disk capacities and relatively high URE rates, you need RAID6 to be safe even against a single disk failure.

Read this: http://www.zdnet.com/blog/storage/why-raid-5-stops-working-in-2009/162

Solution 2

Do yourself a favor and use RAID for your disks; it could even be software RAID with mdadm. Also think about why you "often get errors on your disks": this is not normal, except when you use cheap desktop-class SATA drives instead of RAID-grade disks.

After that, the filesystem is not that important anymore; ext4 and XFS are both fine choices.

Solution 3

> I add new disks of greater sizes progressively

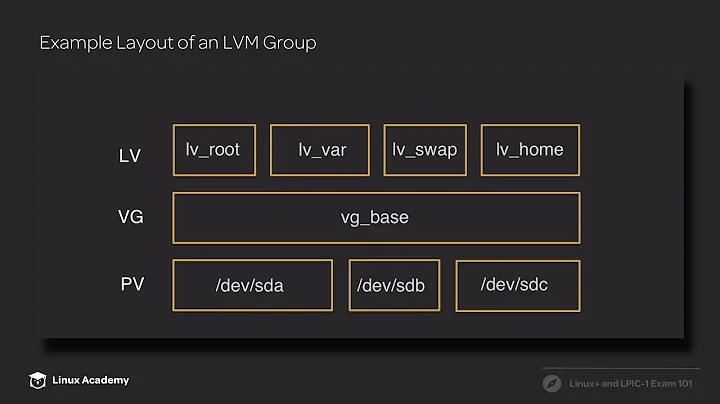

Since you are interested in using LVM and you want to handle multiple drives, the simple answer would be to use the mirror feature that is built into LVM. Add all the physical volumes to your volume group, then pass the --mirrors option when you create a logical volume. This keeps a duplicate copy of your data.

Another option might be to set up several RAID1 pairs and add all the RAID1 volumes as PVs to your VG. Then, whenever you want to expand your storage, just buy another pair of disks.
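To see how RAID1 pairs fit the "add bigger disks over time" pattern, here is a minimal capacity sketch in Python, assuming sizes in whole TB and ignoring RAID and LVM metadata overhead; the function name is hypothetical:

```python
from typing import List, Tuple


def vg_of_raid1_pairs(pairs: List[Tuple[int, int]]) -> Tuple[int, int]:
    """Return (usable_tb, wasted_tb) for a VG made of RAID1 pairs.

    Each RAID1 pair exposes the size of its smaller disk; any extra space
    on the larger disk of a mismatched pair is left unused.
    """
    usable = sum(min(a, b) for a, b in pairs)
    raw = sum(a + b for a, b in pairs)
    wasted = raw - 2 * usable  # space that has no mirror partner
    return usable, wasted


if __name__ == "__main__":
    pairs = [(2, 2), (2, 2), (2, 2)]
    print(vg_of_raid1_pairs(pairs))  # (6, 0)
    pairs.append((4, 4))  # expand later with a pair of larger disks
    print(vg_of_raid1_pairs(pairs))  # (10, 0)
    pairs.append((3, 4))  # a mismatched pair wastes the difference
    print(vg_of_raid1_pairs(pairs))  # (13, 1)
```

Each new pair adds its own capacity immediately, regardless of the sizes of the older pairs, which is what makes this layout convenient for growing storage piecemeal; the cost is that only half of the raw space is usable.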

Solution 4

I've had good luck with ZFS; check whether it's available on whatever distro you use. Fair warning: it'll probably mean rebuilding your whole system, but it gives really good performance and fault tolerance.

Solution 5

You should really be using RAID 5, 6, 10, 50, or 60. Here are some resources to get you started:

Background info about RAID

- http://en.wikipedia.org/wiki/RAID

- http://www.techrepublic.com/blog/datacenter/choose-a-raid-level-that-works-for-you/3237

How-tos & setup

- http://www.dedoimedo.com/computers/linux-raid.html

- http://www.linuxplanet.com/linuxplanet/tutorials/6514/1

- http://dtbaker.com.au/random-bits/ubuntu---howto-easily-setup-raid-5-with-lvm.html

Check out my delicious links for additional RAID links: http://delicious.com/slmingol/raid


alphatiger

Updated on September 18, 2022

Comments

-

alphatiger over 1 year

I have a Linux server with many 2 TB disks, all currently in an LVM volume group giving about 10 TB of space. I use all this space for one ext4 partition and currently have about 8.8 TB of data.

The problem is that I often get errors on my disks, and even if I replace them as soon as errors appear (that is, I copy the old disk to a new one with dd, then put the new one in the server), I often end up with about 100 MB of corrupted data. That makes e2fsck go crazy every time, and it often takes a week to get the ext4 filesystem back into a sane state.

So the question is: what filesystem would you recommend I use on my LVM? Or what would you recommend I do instead (I don't really need the LVM)?

Profile of my filesystem:

- many folders of different total sizes (some totalling 2 TB, some totalling 100 MB)

- almost 200,000 files of different sizes (3/4 of them about 10 MB, 1/4 between 100 MB and 4 GB; I can't currently get more statistics on the files because my ext4 partition has been completely wrecked for some days)

- many reads but few writes

- and I need fault tolerance (I stopped using mdadm RAID because it doesn't like having even ONE error on a whole disk; I sometimes have failing disks that I replace as soon as I can, but that means I can get corrupted data on my filesystem)

The major problem is failing disks: I can lose some files, but I can't afford to lose everything at once.

If I continue to use ext4, I've heard that I should try to make smaller filesystems and "merge" them somehow, but I don't know how.

I've heard btrfs would be nice, but I can't find any information on how it handles losing part of a disk (or a whole disk) when data is NOT replicated (`mkfs.btrfs -d single`?).

Any advice on the question will be welcome, thanks in advance!

-

Soham Chakraborty over 11 years

Exactly what disk errors do you get? That should give a clue.

-

alphatiger over 11 years

Bad sectors; often it's only one or two bad sectors on the whole disk...

-

Soham Chakraborty over 11 years

That means your disk is going bad. It has hardly anything to do with the filesystem: if the disk is bad, no filesystem you use will help much. As others have mentioned, go for RAID and/or buy enterprise disks. Also look for quality controllers.

-

alphatiger over 11 years

Yep, I know, that's why I replace disks that are going bad. Sorry if my question wasn't clear. But still, I thought that some filesystems would cope better with corrupted data...

-

Janne Pikkarainen over 11 years

You really, really should replace the faulty pieces of your hardware. This is like looking at a crash test dummy after a car has been driven into a wall at 200 km/h: "Oh look! His left leg is almost OK! The test was successful!" ... No filesystem can help you if the underlying hardware rots. XFS has a faster fsck than ext*, and after enough time passes and the filesystem matures a bit more, perhaps btrfs would work, too. Then there's ZFS, but on Linux its state is a bit sad.

-

Avio over 11 years

Please paste a S.M.A.R.T. report of your disks.

-

alphatiger over 11 years

I agree that I should ;) but I don't use RAID for several reasons. The main one is price: RAID-class disks are 2-3 times more expensive, and I can't really afford that. The second reason is that the last time I used RAID 5, I was lucky enough to get two bad disks before I could connect a new one and resync it (I didn't have any spare disks at the time and had to wait for a new one; I agree that with RAID class disks, I would have had this problem). The third reason is that as the data I have to store is growing, I add new disks of greater sizes progressively, which I cannot do with a RAID configuration.

-

alphatiger over 11 years

So I'm trying to see if there is a filesystem that someone would recommend for a configuration where I can't rely on uncorrupted data. Still, thanks for your answer!

-

alphatiger over 11 years

See my comments on SvenW's answer for why I don't really want RAID. (In fact, I have already set up multiple software RAIDs for a company that could afford it...) Still, thanks!

-

alphatiger over 11 years

I currently use Debian GNU/Linux; it seems there's a FUSE implementation, but no package (due to licensing problems). I'll probably give it a try (after compiling from source, as using FUSE is not very nice for high throughput). I don't worry about having to rebuild my whole filesystem. Thanks!

-

slm over 11 years

I've always used commodity drives for RAIDs, never ones rated for RAID use, and have never had issues with that as long as you pick a RAID level with enough redundancy (RAID 6 or RAID 60). Using a RAID 6 you need an even number. You can grow RAIDs fairly easily by replacing existing members with larger disks and then growing into the newer disks' space.
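One catch with growing by replacement is that the extra space only appears once the last (smallest) member has been swapped out, and every swap is a full resync. A minimal Python sketch of that arithmetic, assuming equal-sized members to start with and ignoring metadata overhead; the function names are hypothetical. With mdadm, the final step would be followed by growing the array (for example `mdadm --grow /dev/mdX --size=max`, where `/dev/mdX` is a placeholder) and then the PV or filesystem on top:

```python
from typing import List


def raid6_usable(members: List[int]) -> int:
    """Usable RAID6 space in TB: two members' worth goes to parity, and
    every member contributes only as much as the smallest one."""
    if len(members) < 4:
        raise ValueError("this sketch assumes at least 4 RAID6 members")
    return (len(members) - 2) * min(members)


def grow_by_replacement(members: List[int], new_size: int) -> List[int]:
    """Replace members one at a time with larger disks and record the
    usable space after each step (each step implies a full resync)."""
    members = list(members)
    history = []
    for i in range(len(members)):
        members[i] = new_size  # remove an old disk, add a larger one, resync
        history.append(raid6_usable(members))
    return history


if __name__ == "__main__":
    print(grow_by_replacement([2] * 6, 4))  # [8, 8, 8, 8, 8, 16]
```

For six 2 TB members replaced by 4 TB disks, capacity stays at 8 TB through five resyncs and jumps to 16 TB only after the sixth.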

-

bahamat over 11 years

+1 for ZFS. Traditional RAID will silently corrupt data because it's not intelligent enough to know when blocks are wrong, or how to repair them. ZFS, on the other hand, will detect corrupt blocks (via checksums) and repair them from known good mirror copies. Running ZFS under FUSE, while not ideal, will perform well enough for many workloads. That being said, you should load test your application before using this in a production environment.
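The checksum-and-repair idea can be illustrated with a toy model. This is a minimal Python sketch of the concept only, not how ZFS is implemented: `ToyMirror` is a hypothetical class holding two in-memory copies of each block plus a SHA-256 checksum stored apart from the data. A plain mirror that has only the two copies cannot tell which one is right when they differ; the separate checksum is what makes the repair possible.

```python
import hashlib
from typing import List


def checksum(data: bytes) -> bytes:
    """SHA-256 digest used as the per-block checksum in this toy model."""
    return hashlib.sha256(data).digest()


class ToyMirror:
    """Hypothetical two-way mirror that keeps a checksum for every block,
    stored apart from the data copies (a toy, not ZFS's on-disk design)."""

    def __init__(self) -> None:
        self.copies: List[List[bytes]] = [[], []]  # two simulated disks
        self.sums: List[bytes] = []  # one checksum per block

    def write(self, data: bytes) -> int:
        """Write the block to both disks and record its checksum."""
        for disk in self.copies:
            disk.append(data)
        self.sums.append(checksum(data))
        return len(self.sums) - 1

    def read(self, block: int) -> bytes:
        """Return a copy whose checksum matches, repairing any bad copies."""
        expected = self.sums[block]
        good = next(
            (d[block] for d in self.copies if checksum(d[block]) == expected), None
        )
        if good is None:
            raise OSError(f"block {block}: no copy matches its checksum")
        for disk in self.copies:
            if checksum(disk[block]) != expected:
                disk[block] = good  # self-heal: rewrite the corrupt copy
        return good


if __name__ == "__main__":
    mirror = ToyMirror()
    blk = mirror.write(b"important data")
    mirror.copies[0][blk] = b"importXnt data"  # simulate silent corruption on disk 0
    print(mirror.read(blk))       # b'important data'
    print(mirror.copies[0][blk])  # b'important data' (repaired)
```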

-

Avio over 11 years

Wait, URE means Unrecoverable Read Error, but this doesn't mean that the disk actually HAS the error. The next read may (and probably will) return the correct bit. The OS will probably just re-read the sector and obtain the correct data. You also forgot to talk about S.M.A.R.T.: before a sector is permanently damaged, S.M.A.R.T. will try to read/write data from/to it. If it detects too many failures, S.M.A.R.T. simply moves the content of the sector to another place and marks the sector as BAD, so nobody will be able to write to it again.
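Whatever does the remapping, its effects show up as counters in the S.M.A.R.T. attribute table that `smartctl -A /dev/sdX` (from smartmontools; `/dev/sdX` is a placeholder) prints. A minimal Python sketch that pulls out the raw values of the attributes usually watched for bad sectors; it assumes the common ATA table layout with RAW_VALUE as the tenth column, and the sample text is made up for illustration, not taken from the asker's disks:

```python
from typing import Dict

# Attributes that count remapped / unreadable sectors on most ATA drives
WATCHED = ("Reallocated_Sector_Ct", "Current_Pending_Sector", "Offline_Uncorrectable")


def parse_smart_attributes(text: str) -> Dict[str, int]:
    """Pull raw values of WATCHED attributes out of `smartctl -A` output.

    Assumes the usual ATA table columns:
    ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
    """
    found: Dict[str, int] = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 10 or not fields[0].isdigit():
            continue  # header, blank, or non-table line
        name, raw = fields[1], fields[9]
        if name in WATCHED and raw.isdigit():
            found[name] = int(raw)
    return found


# Illustrative sample in smartctl's format (made-up values, not a real report)
SAMPLE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  5 Reallocated_Sector_Ct   0x0033   100   100   010    Pre-fail  Always       -       8
194 Temperature_Celsius     0x0022   117   099   000    Old_age   Always       -       33
197 Current_Pending_Sector  0x0012   100   100   000    Old_age   Always       -       2
198 Offline_Uncorrectable   0x0010   100   100   000    Old_age   Offline      -       0
"""

if __name__ == "__main__":
    counts = parse_smart_attributes(SAMPLE)
    print(counts)
    if any(counts.values()):
        print("Drive reports remapped or pending sectors; check it closely.")
```

Non-zero and growing values of these counters are the usual sign that a drive is on its way out, which is the kind of evidence a pasted S.M.A.R.T. report would show.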

-

Avio over 11 years

So you are simply suggesting buying tons of disks without asking WHY his disks are so faulty. It could be a heat problem, a faulty SATA controller, bad SATA connectors, etc.

-

c2h5oh over 11 years

@Avio What I'm saying is that with 10TB of data you will have read errors due to hard disk limitations, even if all the disks, the SATA controller, the SATA connectors, etc. are in perfect condition and working according to spec. I am also saying that even if you decide to use RAID to mitigate that, you should go with RAID6, because disk capacity + URE make even RAID5 not reliable enough. Even a single drive failure on RAID5 carries a high (50% FFS!) chance of data loss.

-

Martin Schröder over 11 years

@alphatiger: The discs are only too expensive if your time and your data are too cheap.

-

Jens Timmerman over 11 years

It can be the filesystem's problem: if you use a copy-on-write filesystem like btrfs or xfs, you can very likely recover a previous version of the file, only losing the last change to the file (if it was ever changed).

-

ssc over 11 years

Another +1 for ZFS. Pretty much all the servers here are running Linux and I'm a big fan of it, but ZFS has proven so useful to me in the last 3+ years that I've actually gone through the effort of learning and setting up FreeBSD on the big storage machine to be able to use ZFS without any licensing or performance issues.

-

TMN over 11 years

I'm running it under Solaris on my old Sun workstation, and the performance is nothing short of amazing, considering the hardware (single-core Opteron @ 2.2GHz with 3 GB of memory and a pair of 250 GB SATA drives).

-

RichVel about 8 years

Agree with @c2h5oh on the unrecoverable read errors; see my answer for more.

-

Adrian W over 4 years

First, 12 TB is 1.2*10^13, which is one order of magnitude less than 10^14. Second, a URE rate of 10^14 does not mean that every 10^14th byte will be lost; that's just an average. An error can happen before 10^12 bytes have been written, and there may be no errors even after 10^16 bytes have been written, although both are unlikely.