Why do some commands not read from their standard input?

Solution 1

This is an interesting question, and it deals with a part of the Unix/Linux philosophy.

So, what is the difference between programs like grep, sed, sort on the one hand and kill, rm, ls on the other hand? I see two aspects.

The filter aspect

Programs of the first kind are also called filters. They take input, either from a file or from STDIN, modify it, and generate some output, mostly to STDOUT. They are meant to be used in a pipe, with other programs as sources and destinations.

The second kind of programs acts on an input, but the output they give is often not related to the input.

kill has no output when it works normally, and neither does rm. They just have a return value to show success. They do not normally take input from STDIN, and what output they do give mostly goes to STDOUT.

For programs like ls, the filter aspect does not work that well. ls can certainly have an input (but does not need one), and the output is closely related to that input, but it does not work as a filter. However, for that kind of program, the other aspect still works:

The semantic aspect

For filters, their input has no semantic meaning. They just read data, modify data, output data. It doesn't matter whether this is a list of numeric values, some filenames or HTML source code. The meaning of this data is only given by the code you provide to the filter: the regex for grep, the rules for awk or the Perl program.

For other programs, like kill or ls, their input has a meaning, a denotation. kill expects process numbers, ls expects file or path names. They cannot handle arbitrary data and they are not meant to. Many of them do not even need any input or parameters, like ps. They do not normally read from STDIN.

One could probably combine these two aspects: A filter is a program whose input does not have a semantic meaning for the program.
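As a small illustration of the semantic aspect, here is a sketch (assuming a POSIX shell; the sample data is made up) that feeds three unrelated kinds of data through the same filter. grep only matches text and has no notion of what the lines stand for:

```shell
# The same filter applied to numbers, file names and HTML.
# Only the pattern given on the command line decides what is kept.
numbers=$(printf '10\n25\n310\n' | grep '1')
names=$(printf 'report.pdf\nnotes.txt\n' | grep 'pdf')
markup=$(printf '<p>hi</p>\n<div>x</div>\n' | grep '<p>')

printf '%s\n' "$numbers" "$names" "$markup"
```

A program like kill could not be used this way, because it would have to interpret each line as a process ID.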

I'm sure I have read about this philosophy somewhere, but I don't remember any sources at the moment, sorry. If someone has some sources present, please feel free to edit.

Solution 2

There are two common ways to provide inputs to programs:

- provide data to STDIN of the processes

- specify command line arguments

kill uses only command line arguments. It does not read from STDIN.

Programs like grep and awk read from STDIN (if no filenames are given as command line arguments) and process the data according to their command line arguments (pattern, statements, flags, ...).

You can only pipe to STDIN of other processes, not to command line arguments.
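A harmless way to see this, sketched here with echo standing in for kill (both only look at their command line arguments), assuming a POSIX shell:

```shell
# echo ignores STDIN, so the piped text is lost and only an empty line is printed.
ignored=$(printf 'hello\n' | echo)

# cat without file arguments reads STDIN, so the piped text comes through.
passed=$(printf 'hello\n' | cat)

printf 'echo saw: [%s]\n' "$ignored"
printf 'cat saw:  [%s]\n' "$passed"
```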

The common rule is that programs use STDIN to process an arbitrary amount of data. Any extra input parameters, of which there are usually only a few, are passed as command line arguments. If the command line can get very long, for example for long awk program texts, there is often the possibility to read these from extra program files (the -f option of awk).
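A minimal sketch of that split between data and parameters, assuming a POSIX shell and awk; the temporary program file is only for illustration:

```shell
# Put the awk program text into a file instead of on the command line.
prog=$(mktemp)
printf '{ sum += $1 } END { print sum }\n' > "$prog"

# The data arrives on STDIN; the program is named by the -f argument.
total=$(printf '1\n2\n3\n' | awk -f "$prog")
echo "$total"

rm -f "$prog"
```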

To use the STDOUT of programs as command line arguments, use $(...) or, in case of lots of data, xargs. find can also do this directly with -exec ... {} +.
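The three approaches can be sketched side by side. This assumes a POSIX shell and works in a throwaway directory; the file names are invented for the example:

```shell
# Scratch directory so that nothing real is deleted.
dir=$(mktemp -d)
touch "$dir/a.tmp" "$dir/b.tmp" "$dir/keep.txt"

# 1. Command substitution: the output of find becomes arguments of rm.
#    Left unquoted on purpose; this breaks on names containing whitespace.
rm $(find "$dir" -name 'a.tmp')

# 2. xargs: reads names from STDIN and appends them to the rm command line.
find "$dir" -name 'b.tmp' | xargs rm

# 3. find -exec ... {} +: find builds the argument list itself.
touch "$dir/c.tmp"
find "$dir" -name '*.tmp' -exec rm {} +

remaining=$(ls "$dir")
echo "$remaining"
rm -r "$dir"
```

Only keep.txt should be left at the end, since each of the three forms handed the matching file names to rm as arguments.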

For completeness: To write command line arguments to STDOUT, use echo.

Solution 3

There are no "rules" as such. Some programs take input from STDIN, and some do not. If a program can take input from STDIN, it can be piped to, if not, it can't.

You can normally tell whether a program will take input or not by thinking about what it does. If the program's job is to somehow manipulate the contents of a file (e.g. grep, sed, awk etc.), it normally takes input from STDIN. If its job is to manipulate the file itself (e.g. mv, rm, cp) or a process (e.g. kill, lsof) or to return information about something (e.g. top, find, ps), then it doesn't.

Another way of thinking about it is the difference between arguments and input. For example:

mv foo bar

In the command above, mv has no input as such. What it has been given is two arguments. It does not know or care what is in either of the files, it just knows those are its arguments and it should manipulate them.

On the other hand

sed -e 's/foo/bar/' < file
--- -- ------------   ----
 |   |       |          |-> input
 |   |       |------------> argument
 |   |--------------------> option/flag/switch
 |------------------------> command

Here, sed has been given input as well as an argument. Since it takes input, it can read it from STDIN and it can be piped to.

It gets more complicated when an argument can be the input. For example

cat file

Here, file is the argument that was given to cat. To be precise, the file name file is the argument. However, since cat is a program that manipulates the contents of files, its input is whatever is inside file.
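A short sketch of the same point, assuming a POSIX shell and a temporary file created just for the demonstration. Whether the content reaches cat through a file name argument, a redirection, or a pipe, the result is the same:

```shell
tmp=$(mktemp)
printf 'some text\n' > "$tmp"

from_arg=$(cat "$tmp")                    # file name as argument; cat opens the file itself
from_stdin=$(cat < "$tmp")                # no argument; the shell connects the file to STDIN
from_pipe=$(printf 'some text\n' | cat)   # no argument; STDIN is a pipe

printf '%s\n' "$from_arg" "$from_stdin" "$from_pipe"
rm -f "$tmp"
```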

This can be illustrated using strace, a program that tracks the system calls made by processes. If we run cat foo via strace, we can see that the file foo is opened:

$ strace cat foo 2>&1 | grep foo

execve("/bin/cat", ["cat", "foo"], [/* 44 vars */]) = 0

open("foo", O_RDONLY)

The first line above shows that the program /bin/cat was called and its arguments were cat and foo (the first argument is always the program itself). Later on, the argument foo was opened in read only mode. Now, compare this with

$ strace ls foo 2>&1 | grep foo

execve("/bin/ls", ["ls", "foo"], [/* 44 vars */]) = 0

stat("foo", {st_mode=S_IFREG|0644, st_size=0, ...}) = 0

lstat("foo", {st_mode=S_IFREG|0644, st_size=0, ...}) = 0

write(1, "foo\n", 4foo

Here also, ls took itself and foo as arguments. However, there is no open call, so the argument is not treated as input. Instead, ls makes the stat system call (which is not the same thing as the stat command) to get information about the file foo.

In summary: if the command you're running reads its input, you can pipe to it. If it doesn't, you can't.


Comments

-

VooXe almost 2 years

I wonder when we should use a pipeline and when we shouldn't.

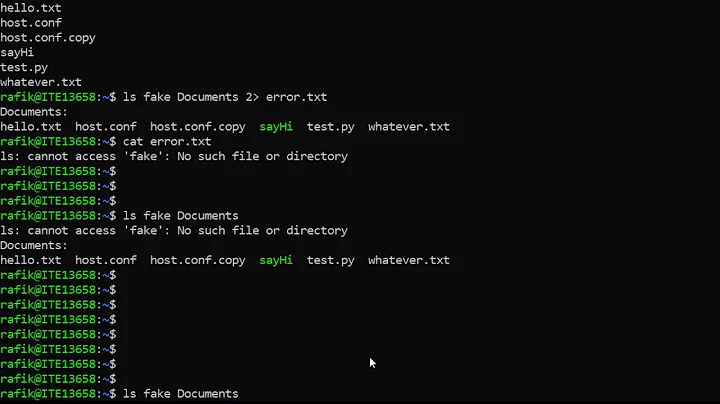

Say, for instance, we want to kill certain processes that are handling pdf files. The following will not work using a pipeline:

ps aux | grep pdf | awk '{print $2}' | kill

Instead, we can only do it in the following ways:

kill $(ps aux | grep pdf | awk '{print $2}')

or

ps aux | grep pdf | awk '{print $2}' | xargs kill

According to man bash (version 4.1.2):

The standard output of command is connected via a pipe to the standard input of command2.

For the above scenario:

- the stdin of grep is the stdout of ps. That works.

- the stdin of awk is the stdout of grep. That works.

- the stdin of kill is the stdout of awk. That doesn't work.

The stdin of the following command always gets its input from the previous command's stdout.

- Why doesn't it work with kill or rm?

- What's the difference between the input of kill, rm and the input of grep, awk?

- Are there any rules?

-

terdon almost 10 years

This is not an answer but you might want to have a look at the pgrep, pkill and killall commands.

-

VooXe almost 10 years

@terdon: I just used the above scenario to show the pipeline issue. I understand that pgrep and the rest can achieve this perfectly :)


-

VooXe almost 10 years

How do we know whether a command will only take arguments and not STDIN? Is there a systematic or programmatic way, rather than guessing or reading the man page? By only reading the man page, I couldn't get any specific clues to be sure whether the command can or cannot take STDIN, as STDIN is also part of the arguments in the way a man page presents them. For instance, gzip's SYNOPSIS doesn't say it must take a FILENAME as input. I am looking for a more systematic way to determine that.

-

Emmanuel almost 10 years

There is also the "-" argument which means "stdin" (or "stdout") for some commands.

-

T. Verron almost 10 years

Won't xargs precisely allow you to "pipe to command line arguments"?

-

YoloTats.com almost 10 years

@T.Verron yes, this is the task of xargs. It calls the command more than once if necessary (command line size is limited) and has lots of other options.

-

mhars almost 10 years

The text of the description will describe how you can use the program. For instance, gzip says: "The gzip program compresses and decompresses files using Lempel-Ziv coding (LZ77). If no files are specified, gzip will compress from standard input, or decompress to standard output." If a man page does not mention standard input, it won't use it.

-

Olivier Dulac almost 10 years

@sylye: "For instance, gzip, in the SYNOPSIS, it didn't say it must take a FILENAME as input": it doesn't say so because it doesn't need a FILENAME as input. gzip can compress the file(s) in its argument list, and can also compress stdin (and output the compressed result on stdout). tar is the same. Example:

ssh user@remote "tar cf - /some/dir | gzip -c -" >some_dir_from_remote.tar.gz

-

terdon almost 4 years

@Prvt_Yadav that pipes stderr (standard error) instead of stdout