Why does changing the sum order returns a different result?

Solution 1

Maybe this question is stupid, but why does simply changing the order of the elements affects the result?

It will change the points at which the values are rounded, based on their magnitude. As an example of the kind of thing that we're seeing, let's pretend that instead of binary floating point, we were using a decimal floating point type with 4 significant digits, where each addition is performed at "infinite" precision and then rounded to the nearest representable number. Here are two sums:

1/3 + 2/3 + 2/3 = (0.3333 + 0.6667) + 0.6667

= 1.000 + 0.6667 (no rounding needed!)

= 1.667 (where 1.6667 is rounded to 1.667)

2/3 + 2/3 + 1/3 = (0.6667 + 0.6667) + 0.3333

= 1.333 + 0.3333 (where 1.3334 is rounded to 1.333)

= 1.666 (where 1.6663 is rounded to 1.666)

We don't even need non-integers for this to be a problem:

10000 + 1 - 10000 = (10000 + 1) - 10000

= 10000 - 10000 (where 10001 is rounded to 10000)

= 0

10000 - 10000 + 1 = (10000 - 10000) + 1

= 0 + 1

= 1

This demonstrates possibly more clearly that the important part is that we have a limited number of significant digits - not a limited number of decimal places. If we could always keep the same number of decimal places, then with addition and subtraction at least, we'd be fine (so long as the values didn't overflow). The problem is that when you get to bigger numbers, smaller information is lost - the 10001 being rounded to 10000 in this case. (This is an example of the problem that Eric Lippert noted in his answer.)

It's important to note that the values on the first line of the right hand side are the same in all cases - so although it's important to understand that your decimal numbers (23.53, 5.88, 17.64) won't be represented exactly as double values, that's only a problem because of the problems shown above.

Solution 2

Here's what's going on in binary. As we know, some floating-point values cannot be represented exactly in binary, even if they can be represented exactly in decimal. These 3 numbers are just examples of that fact.

With this program I output the hexadecimal representations of each number and the results of each addition.

public class Main{

public static void main(String args[]) {

double x = 23.53; // Inexact representation

double y = 5.88; // Inexact representation

double z = 17.64; // Inexact representation

double s = 47.05; // What math tells us the sum should be; still inexact

printValueAndInHex(x);

printValueAndInHex(y);

printValueAndInHex(z);

printValueAndInHex(s);

System.out.println("--------");

double t1 = x + y;

printValueAndInHex(t1);

t1 = t1 + z;

printValueAndInHex(t1);

System.out.println("--------");

double t2 = x + z;

printValueAndInHex(t2);

t2 = t2 + y;

printValueAndInHex(t2);

}

private static void printValueAndInHex(double d)

{

System.out.println(Long.toHexString(Double.doubleToLongBits(d)) + ": " + d);

}

}

The printValueAndInHex method is just a hex-printer helper.

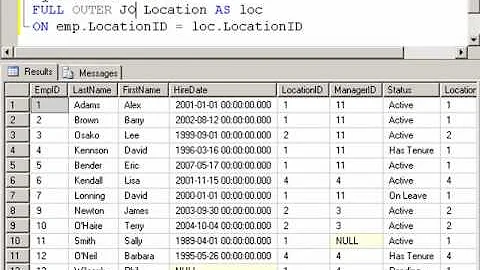

The output is as follows:

403787ae147ae148: 23.53

4017851eb851eb85: 5.88

4031a3d70a3d70a4: 17.64

4047866666666666: 47.05

--------

403d68f5c28f5c29: 29.41

4047866666666666: 47.05

--------

404495c28f5c28f6: 41.17

4047866666666667: 47.050000000000004

The first 4 numbers are x, y, z, and s's hexadecimal representations. In IEEE floating point representation, bits 2-12 represent the binary exponent, that is, the scale of the number. (The first bit is the sign bit, and the remaining bits for the mantissa.) The exponent represented is actually the binary number minus 1023.

The exponents for the first 4 numbers are extracted:

sign|exponent

403 => 0|100 0000 0011| => 1027 - 1023 = 4

401 => 0|100 0000 0001| => 1025 - 1023 = 2

403 => 0|100 0000 0011| => 1027 - 1023 = 4

404 => 0|100 0000 0100| => 1028 - 1023 = 5

First set of additions

The second number (y) is of smaller magnitude. When adding these two numbers to get x + y, the last 2 bits of the second number (01) are shifted out of range and do not figure into the calculation.

The second addition adds x + y and z and adds two numbers of the same scale.

Second set of additions

Here, x + z occurs first. They are of the same scale, but they yield a number that is higher up in scale:

404 => 0|100 0000 0100| => 1028 - 1023 = 5

The second addition adds x + z and y, and now 3 bits are dropped from y to add the numbers (101). Here, there must be a round upwards, because the result is the next floating point number up: 4047866666666666 for the first set of additions vs. 4047866666666667 for the second set of additions. That error is significant enough to show in the printout of the total.

In conclusion, be careful when performing mathematical operations on IEEE numbers. Some representations are inexact, and they become even more inexact when the scales are different. Add and subtract numbers of similar scale if you can.

Solution 3

Jon's answer is of course correct. In your case the error is no larger than the error you would accumulate doing any simple floating point operation. You've got a scenario where in one case you get zero error and in another you get a tiny error; that's not actually that interesting a scenario. A good question is: are there scenarios where changing the order of calculations goes from a tiny error to a (relatively) enormous error? The answer is unambiguously yes.

Consider for example:

x1 = (a - b) + (c - d) + (e - f) + (g - h);

vs

x2 = (a + c + e + g) - (b + d + f + h);

vs

x3 = a - b + c - d + e - f + g - h;

Obviously in exact arithmetic they would be the same. It is entertaining to try to find values for a, b, c, d, e, f, g, h such that the values of x1 and x2 and x3 differ by a large quantity. See if you can do so!

Solution 4

This actually covers much more than just Java and Javascript, and would likely affect any programming language using floats or doubles.

In memory, floating points use a special format along the lines of IEEE 754 (the converter provides much better explanation than I can).

Anyways, here's the float converter.

http://www.h-schmidt.net/FloatConverter/

The thing about the order of operations is the "fineness" of the operation.

Your first line yields 29.41 from the first two values, which gives us 2^4 as the exponent.

Your second line yields 41.17 which gives us 2^5 as the exponent.

We're losing a significant figure by increasing the exponent, which is likely to change the outcome.

Try ticking the last bit on the far right on and off for 41.17 and you can see that something as "insignificant" as 1/2^23 of the exponent would be enough to cause this floating point difference.

Edit: For those of you who remember significant figures, this would fall under that category. 10^4 + 4999 with a significant figure of 1 is going to be 10^4. In this case, the significant figure is much smaller, but we can see the results with the .00000000004 attached to it.

Solution 5

Floating point numbers are represented using the IEEE 754 format, which provides a specific size of bits for the mantissa (significand). Unfortunately this gives you a specific number of 'fractional building blocks' to play with, and certain fractional values cannot be represented precisely.

What is happening in your case is that in the second case, the addition is probably running into some precision issue because of the order the additions are evaluated. I haven't calculated the values, but it could be for example that 23.53 + 17.64 cannot be precisely represented, while 23.53 + 5.88 can.

Unfortunately it is a known problem that you just have to deal with.

Related videos on Youtube

Marlon Bernardes

Full-stack software engineer, passionate about well-written code, adept of agile methodologies, automated tests and continuous integration tools. Always willing to learn and always willing to help!

Updated on March 11, 2022Comments

-

Marlon Bernardes about 2 years

Why does changing the sum order returns a different result?

23.53 + 5.88 + 17.64=47.0523.53 + 17.64 + 5.88=47.050000000000004Both Java and JavaScript return the same results.

I understand that, due to the way floating point numbers are represented in binary, some rational numbers (like 1/3 - 0.333333...) cannot be represented precisely.

Why does simply changing the order of the elements affect the result?

-

Bakuriu over 10 yearsSum of real numbers is associative and commutative. Floating-points aren't real numbers. In fact you just proved that their operations are not commutative. It's pretty easy to show that they aren't associative too (e.g.

(2.0^53 + 1) - 1 == 2.0^53 - 1 != 2^53 == 2^53 + (1 - 1)). Hence, yes: be wary when choosing the order of sums and other operations. Some languages provide a built-in to perform "high-precision" sums (e.g. python'smath.fsum), so you might consider using these functions instead of the naive sum algorithm. -

RBerteig over 10 yearsThe surprise to me is that Java and Javascript appear to have actually evaluated the two sums in the same order. I'm not certain that the two languages were required to do that. I know that C does not specify the order in which the sums will be performed.

-

Chris Cirefice over 10 years@RBerteig That can be determined by examining the language's order of operations for arithmetic expressions and, unless their representation of floating point numbers in memory is different, the results will be the same if their operator precedence rules are the same. Another point of note: I wonder how long it took the devs who develop banking applications to figure this out? Those extra 0000000000004 cents really add up!

Chris Cirefice over 10 years@RBerteig That can be determined by examining the language's order of operations for arithmetic expressions and, unless their representation of floating point numbers in memory is different, the results will be the same if their operator precedence rules are the same. Another point of note: I wonder how long it took the devs who develop banking applications to figure this out? Those extra 0000000000004 cents really add up! -

Bayquiri over 10 years@Bakuriu, I don't think a witness of non-commutativity has been presented yet (and I conjecture there is none!). The original expressions require both associativity and commutativity to be equivalent.

-

Happy Green Kid Naps over 10 years@RBerteig -- C does specify the order of evaluation of OP's two expressions.

-

Daniel Pryden over 10 yearsThis question seems to come up on SO every few weeks. For further reading, take a look at: stackoverflow.com/questions/6699066/…

-

Daniel Pryden over 10 years@ChrisCirefice: if you have 0.00000004 cents, you're doing it wrong. You should never use a binary floating point type for financial calculations.

-

Chris Cirefice over 10 years@DanielPryden Ah alas, it was a joke... just throwing around the idea that people who really need to get this type of problem resolved had one of the most important jobs that you know, holds the monetary status of the people and all that. I was being very sarcastic...

Chris Cirefice over 10 years@DanielPryden Ah alas, it was a joke... just throwing around the idea that people who really need to get this type of problem resolved had one of the most important jobs that you know, holds the monetary status of the people and all that. I was being very sarcastic... -

Sven over 10 years

Sven over 10 years -

RBerteig over 10 years@HappyGreenKidNaps, the C standard is full of weasel words about visibility of side effects. As long as the operations are side effect free and there are no sequence points at which all side effects must be completed, C permits the actual

+operators to be evaluated in any order that does not violate precedence. The phrase "as if performed" appears in a number of places. This is traditional in C so that novel optimizations are possible to allow operations to be performed as fast as possible on a wide range of hardware. -

Brian over 10 yearsVery dry (and old, but still relevant): What Every Computer Scientist Should Know About Floating-Point Arithmetic

-

-

Zong over 10 yearsI think this answer avoids the real reason. "there's that secondary step where floating point numbers can get off". Clearly, this is true, but what we want to explain is why.

Zong over 10 yearsI think this answer avoids the real reason. "there's that secondary step where floating point numbers can get off". Clearly, this is true, but what we want to explain is why. -

Prateek over 10 years

Prateek over 10 yearsMay extend this later - out of time right now!waiting eagerly for it @Jon -

Cruncher over 10 yearsHow do you define a large quantity? Are we talking on the order of 1000ths? 100ths? 1's???

-

Eric Lippert over 10 years@Cruncher: Compute the exact mathematical result and the x1 and x2 values. Call the exact mathematical difference between the true and computed results e1 and e2. There are now several ways to think about error size. The first is: can you find a scenario in which either | e1 / e2 | or | e2 / e1 | are large? Like, can you make the error of one ten times the error of the other? The more interesting one though is if you can make the error of one a significant fraction of the size of the correct answer.

-

Kevin Hsu over 10 yearsI realize he's talking about runtime, but I wonder: If the expression was a compile-time (say, constexpr) expression, are compilers smart enough to minimize the error?

-

Grady Player over 10 yearswhen I say that I will get back to an answer later the community is slightly less kind to me <enter some sort of light hearted emoticon here to show I am joking and not a jerk> ... will get back to this later.

-

Eric Lippert over 10 years@kevinhsu in general no, the compiler is not that smart. Of course the compiler could choose to do the operation in exact arithmetic if it so chose, but it usually does not.

-

frozenkoi over 10 yearsCouldn't the error be not only 'enormous' but even infinite? I.e. When adding a to a large number a number tiny enough that the difference gets ignored (due to not being representable), adding an infinite amount of such tiny numbers keep increasing the error?

frozenkoi over 10 yearsCouldn't the error be not only 'enormous' but even infinite? I.e. When adding a to a large number a number tiny enough that the difference gets ignored (due to not being representable), adding an infinite amount of such tiny numbers keep increasing the error? -

Jon Skeet over 10 years@ZongZhengLi: While it's certainly important to understand that, it's not the root cause in this case. You could write a similar example with values which are represented exactly in binary, and see the same effect. The problem here is maintaining large scale information and small scale information at the same time.

-

Jon Skeet over 10 yearsThe scales being different is the important part. You could write (in decimal) the exact values that are being represented in binary as the inputs, and still have the same problem.

-

Jon Skeet over 10 yearsHadn't seen this when I extended my earlier answer - I've now given an example of the +/- alteration in my "4 significant decimal digits" type as a demonstration that it's not just about starting with inexact representations.

-

Jon Skeet over 10 years@frozenkoi: Yes, the error can be infinite very easily. For example, consider the C#:

double d = double.MaxValue; Console.WriteLine(d + d - d - d); Console.WriteLine(d - d + d - d);- the output is Infinity then 0. -

Zong over 10 years@JonSkeet Yes, thanks for correction. I guess it's more correct to say the intermediate values cannot be exactly represented as floating point values.

Zong over 10 years@JonSkeet Yes, thanks for correction. I guess it's more correct to say the intermediate values cannot be exactly represented as floating point values. -

Jon Skeet over 10 years@ZongZhengLi: Yes, that would definitely fit.

-

Caleb Jares over 10 yearsThis is indeed strange. Are floating point numbers truncated instead of rounded?

Caleb Jares over 10 yearsThis is indeed strange. Are floating point numbers truncated instead of rounded? -

Jon Skeet over 10 years@CalebJares: Nope, they're rounded - have edited to fix this.

-

Buksy over 10 yearsI don't understand in what scenario would 10001 be rounded to 1000 ?? What language & datatype could allow this?

-

Jon Skeet over 10 years@Buksy: Rounded to 10000 - because we're dealing with a datatype which can only store 4 significant digits. (so x.xxx * 10^n)

-

meteors over 10 yearsbut @JonSkeet having a datatype that can store only 4 significant digit should cause an overflow and not round the number to the highest possible. I understood the argument regarding trailing digits of floating point number but am not getting the 1001 getting rounded to 1000. Can you elaborate a bit on that?

meteors over 10 yearsbut @JonSkeet having a datatype that can store only 4 significant digit should cause an overflow and not round the number to the highest possible. I understood the argument regarding trailing digits of floating point number but am not getting the 1001 getting rounded to 1000. Can you elaborate a bit on that? -

Jon Skeet over 10 years@meteors: No, it doesn't cause an overflow - and you're using the wrong numbers. It's 10001 being rounded to 10000, not 1001 being rounded to 1000. To make it clearer, 54321 would be rounded to 54320 - because that only has four significant digits. There's a big difference between "four significant digits" and "a maximum value of 9999". As I said before, you're basically representing x.xxx * 10^n, where for 10000, x.xxx would be 1.000, and n would be 4. This is just like

doubleandfloat, where for very large numbers, consecutive representable numbers are more than 1 apart. -

ADTC over 10 years@rgettman As a programmer, I like your answer better

ADTC over 10 years@rgettman As a programmer, I like your answer better=)+1 for your hex-printer helper... that's really neat! -

meteors over 10 years@JonSkeet thanks got it now. Anyway 1000 and 1001 was just a typo consider it a system with 3 significant digits representation.

meteors over 10 years@JonSkeet thanks got it now. Anyway 1000 and 1001 was just a typo consider it a system with 3 significant digits representation. -

andy over 10 years@Jon Skeet maybe that's the real difference between binary operations and arithmetic operations. the latter one is accurate.

andy over 10 years@Jon Skeet maybe that's the real difference between binary operations and arithmetic operations. the latter one is accurate.