Why does GCC generate such radically different assembly for nearly the same C code?

Solution 1

Updated to sync with the OP's edit

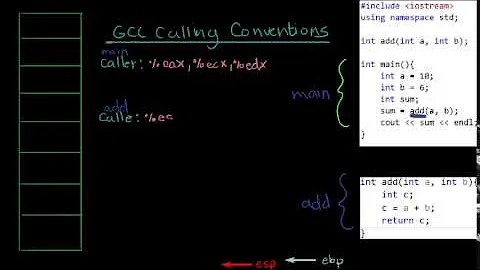

By tinkering with the code, I've managed to see how GCC optimizes the first case.

Before we can understand why they are so different, first we must understand how GCC optimizes fast_trunc_one().

Believe it or not, fast_trunc_one() is being optimized to this:

int fast_trunc_one(int i) {

int mantissa, exponent;

mantissa = (i & 0x07fffff) | 0x800000;

exponent = 150 - ((i >> 23) & 0xff);

if (exponent < 0) {

return (mantissa << -exponent); /* diff */

} else {

return (mantissa >> exponent); /* diff */

}

}

This produces the exact same assembly as the original fast_trunc_one() - register names and everything.

Notice that there are no xors in the assembly for fast_trunc_one(). That's what gave it away for me.

How so?

Step 1: sign = -sign

First, let's take a look at the sign variable. Since sign = i & 0x80000000;, there are only two possible values that sign can take:

sign = 0sign = 0x80000000

Now recognize that in both cases, sign == -sign. Therefore, when I change the original code to this:

int fast_trunc_one(int i) {

int mantissa, exponent, sign, r;

mantissa = (i & 0x07fffff) | 0x800000;

exponent = 150 - ((i >> 23) & 0xff);

sign = i & 0x80000000;

if (exponent < 0) {

r = mantissa << -exponent;

} else {

r = mantissa >> exponent;

}

return (r ^ sign) + sign;

}

It produces the exact same assembly as the original fast_trunc_one(). I'll spare you the assembly, but it is identical - register names and all.

Step 2: Mathematical reduction: x + (y ^ x) = y

sign can only take one of two values, 0 or 0x80000000.

- When

x = 0, thenx + (y ^ x) = ythen trivial holds. - Adding and xoring by

0x80000000is the same. It flips the sign bit. Thereforex + (y ^ x) = yalso holds whenx = 0x80000000.

Therefore, x + (y ^ x) reduces to y. And the code simplifies to this:

int fast_trunc_one(int i) {

int mantissa, exponent, sign, r;

mantissa = (i & 0x07fffff) | 0x800000;

exponent = 150 - ((i >> 23) & 0xff);

sign = i & 0x80000000;

if (exponent < 0) {

r = (mantissa << -exponent);

} else {

r = (mantissa >> exponent);

}

return r;

}

Again, this compiles to the exact same assembly - register names and all.

This above version finally reduces to this:

int fast_trunc_one(int i) {

int mantissa, exponent;

mantissa = (i & 0x07fffff) | 0x800000;

exponent = 150 - ((i >> 23) & 0xff);

if (exponent < 0) {

return (mantissa << -exponent); /* diff */

} else {

return (mantissa >> exponent); /* diff */

}

}

which is pretty much exactly what GCC generates in the assembly.

So why doesn't the compiler optimize fast_trunc_two() to the same thing?

The key part in fast_trunc_one() is the x + (y ^ x) = y optimization. In fast_trunc_two() the x + (y ^ x) expression is being split across the branch.

I suspect that might be enough to confuse GCC to not make this optimization. (It would need to hoist the ^ -sign out of the branch and merge it into the r + sign at the end.)

For example, this produces the same assembly as fast_trunc_one():

int fast_trunc_two(int i) {

int mantissa, exponent, sign, r;

mantissa = (i & 0x07fffff) | 0x800000;

exponent = 150 - ((i >> 23) & 0xff);

sign = i & 0x80000000;

if (exponent < 0) {

r = ((mantissa << -exponent) ^ -sign) + sign; /* diff */

} else {

r = ((mantissa >> exponent) ^ -sign) + sign; /* diff */

}

return r; /* diff */

}

Solution 2

This is the nature of compilers. Assuming they will take the fastest or best path, is quite false. Anyone that implies that you don't need to do anything to your code to optimize because "modern compilers" fill in the blank, do the best job, make the fastest code, etc. Actually I saw gcc get worse from 3.x to 4.x on arm at least. 4.x might have caught up to 3.x by this point, but early on it produced slower code. With practice you can learn how to write your code so the compiler doesn't have to work as hard and as a result produces more consistent and expected results.

The bug here is your expectations of what will be produced, not what was actually produced. If you want the compiler to generate the same output, feed it the same input. Not mathematically the same, not kinda the same, but actually the same, no different paths, no sharing or distributing operations from one version to the other. This is a good exercise in understanding how to write your code and seeing what compilers do with it. Don't make the mistake of assuming that because one version of gcc for one processor target one day produced a certain result that that is a rule for all compilers and all code. You have to use many compilers and many targets to get a feel for what is going on.

gcc is pretty nasty, I invite you to look behind the curtain, look at the guts of gcc, try to add a target or modify something yourself. It is barely held together by duct tape and bailing wire. An extra line of code added or removed in critical places and it comes crumbling down. The fact that it has produced usable code at all is something to be pleased about, instead of worrying about why it didnt meet other expectations.

did you look at what different versions of gcc produce? 3.x and 4.x in particular 4.5 vs 4.6 vs 4.7, etc? and for different target processors, x86, arm, mips, etc or different flavors of x86 if that is the native compiler you use, 32 bit vs 64 bit, etc? And then llvm (clang) for different targets?

Mystical has done an excellent job in the thought process required to work through the problem of analyzing/optimizing the code, expecting a compiler to come up with any of that is, well, not expected of any "modern compiler".

Without getting into the math properties, code of this form

if (exponent < 0) {

r = mantissa << -exponent; /* diff */

} else {

r = mantissa >> exponent; /* diff */

}

return (r ^ -sign) + sign; /* diff */

is going to lead the compiler to A: implement it in that form, perform the if-then-else then converge on common code to finish up and return. or B: save a branch since this is the tail end of the function. Also not bother with using or saving r.

if (exponent < 0) {

return((mantissa << -exponent)^-sign)+sign;

} else {

return((mantissa << -exponent)^-sign)+sign;

}

Then you can get into as Mystical pointed out the sign variable disappears all together for the code as written. I wouldn't expect the compiler to see the sign variable go away so you should have done that yourself and not forced the compiler to try to figure it out.

This is a perfect opportunity to dig into the gcc source code. It appears you have found a case where the optimizer saw one thing in one case then another thing in another case. Then take the next step and see if you can't get gcc to see that case. Every optimization is there because some individual or group recognized the optimization and intentionally put it there. For this optimization to be there and work every time someone has to put it there (and then test it, and then maintain it into the future).

Definitely do not assume that less code is faster and more code is slower, it is very easy to create and find examples of that not being true. It might more often than not be the case of less code being faster than more code. As I demonstrated from the start though you can create more code to save branching in that case or looping, etc and have the net result be faster code.

The bottom line is you fed a compiler different source and expected the same results. The problem is not the compiler output but the expectations of the user. It is fairly easy to demonstrate for a particular compiler and processor, the addition of one line of code that makes a whole function dramatically slower. For example why does changing a = b + 2; to a = b + c + 2; cause _fill_in_the_blank_compiler_name_ generate radically different and slower code? The answer of course being the compiler was fed different code on the input so it is perfectly valid for the compiler to generate different output. (even better is when you swap two unrelated lines of code and cause the output to change dramatically) There is no expected relationship between the complexity and size of the input to the complexity and size of the output. Feed something like this into clang:

for(ra=0;ra<20;ra++) dummy(ra);

It produced somewhere between 60-100 lines of assembler. It unrolled the loop. I didn't count the lines, if you think about it, it has to add, copy the result to the input to the function call, make the function call, three operations minimum. so depending on the target that is probably 60 instructions at least, 80 if four per loop, 100 if five per loop, etc.

Solution 3

Mysticial has already given a great explanation, but I thought I'd add, FWIW, that there's really nothing fundamental about why a compiler would make the optimization for one and not the other.

LLVM's clang compiler, for example, gives the same code for both functions (except for the function name), giving:

_fast_trunc_two: ## @fast_trunc_one

movl %edi, %edx

andl $-2147483648, %edx ## imm = 0xFFFFFFFF80000000

movl %edi, %esi

andl $8388607, %esi ## imm = 0x7FFFFF

orl $8388608, %esi ## imm = 0x800000

shrl $23, %edi

movzbl %dil, %eax

movl $150, %ecx

subl %eax, %ecx

js LBB0_1

shrl %cl, %esi

jmp LBB0_3

LBB0_1: ## %if.then

negl %ecx

shll %cl, %esi

LBB0_3: ## %if.end

movl %edx, %eax

negl %eax

xorl %esi, %eax

addl %edx, %eax

ret

This code isn't as short as the first gcc version from the OP, but not as long as the second.

Code from another compiler (which I won't name), compiling for x86_64, produces this for both functions:

fast_trunc_one:

movl %edi, %ecx

shrl $23, %ecx

movl %edi, %eax

movzbl %cl, %edx

andl $8388607, %eax

negl %edx

orl $8388608, %eax

addl $150, %edx

movl %eax, %esi

movl %edx, %ecx

andl $-2147483648, %edi

negl %ecx

movl %edi, %r8d

shll %cl, %esi

negl %r8d

movl %edx, %ecx

shrl %cl, %eax

testl %edx, %edx

cmovl %esi, %eax

xorl %r8d, %eax

addl %edi, %eax

ret

which is fascinating in that it computes both sides of the if and then uses a conditional move at the end to pick the right one.

The Open64 compiler produces the following:

fast_trunc_one:

movl %edi,%r9d

sarl $23,%r9d

movzbl %r9b,%r9d

addl $-150,%r9d

movl %edi,%eax

movl %r9d,%r8d

andl $8388607,%eax

negl %r8d

orl $8388608,%eax

testl %r8d,%r8d

jl .LBB2_fast_trunc_one

movl %r8d,%ecx

movl %eax,%edx

sarl %cl,%edx

.Lt_0_1538:

andl $-2147483648,%edi

movl %edi,%eax

negl %eax

xorl %edx,%eax

addl %edi,%eax

ret

.p2align 5,,31

.LBB2_fast_trunc_one:

movl %r9d,%ecx

movl %eax,%edx

shll %cl,%edx

jmp .Lt_0_1538

and similar, but not identical, code for fast_trunc_two.

Anyway, when it comes to optimization, it's a lottery — it is what it is... It isn't always easy to know why you code gets compiled any particular way.

Related videos on Youtube

orlp

Computer Science PhD student at CWI Amsterdam. Cryptography, information theory, compression, computer graphics, low-level optimization, discrete mathematics, algorithms & data structures - it all interests me! Favourite languages: Rust, Python, C++, C. Achievements: Gold C++ badge #238 Gold Python badge #236 Gold C badge #225 #1021 to reach 100,000 reputation Pattern-defeating quicksort

Updated on July 23, 2022Comments

-

orlp almost 2 years

While writing an optimized

ftolfunction I found some very odd behaviour inGCC 4.6.1. Let me show you the code first (for clarity I marked the differences):fast_trunc_one, C:

int fast_trunc_one(int i) { int mantissa, exponent, sign, r; mantissa = (i & 0x07fffff) | 0x800000; exponent = 150 - ((i >> 23) & 0xff); sign = i & 0x80000000; if (exponent < 0) { r = mantissa << -exponent; /* diff */ } else { r = mantissa >> exponent; /* diff */ } return (r ^ -sign) + sign; /* diff */ }fast_trunc_two, C:

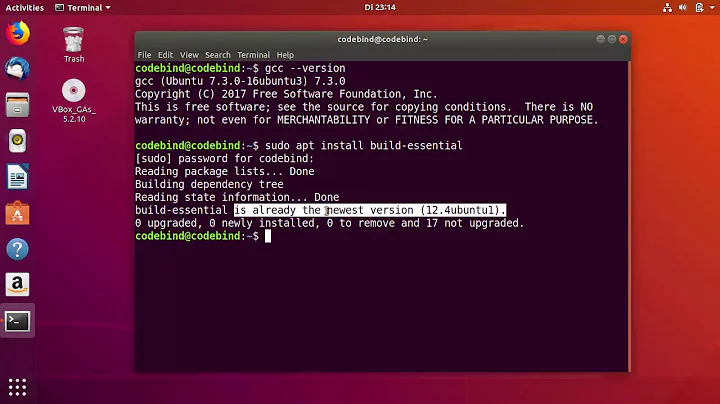

int fast_trunc_two(int i) { int mantissa, exponent, sign, r; mantissa = (i & 0x07fffff) | 0x800000; exponent = 150 - ((i >> 23) & 0xff); sign = i & 0x80000000; if (exponent < 0) { r = (mantissa << -exponent) ^ -sign; /* diff */ } else { r = (mantissa >> exponent) ^ -sign; /* diff */ } return r + sign; /* diff */ }Seems the same right? Well GCC disagrees. After compiling with

gcc -O3 -S -Wall -o test.s test.cthis is the assembly output:fast_trunc_one, generated:

_fast_trunc_one: LFB0: .cfi_startproc movl 4(%esp), %eax movl $150, %ecx movl %eax, %edx andl $8388607, %edx sarl $23, %eax orl $8388608, %edx andl $255, %eax subl %eax, %ecx movl %edx, %eax sarl %cl, %eax testl %ecx, %ecx js L5 rep ret .p2align 4,,7 L5: negl %ecx movl %edx, %eax sall %cl, %eax ret .cfi_endprocfast_trunc_two, generated:

_fast_trunc_two: LFB1: .cfi_startproc pushl %ebx .cfi_def_cfa_offset 8 .cfi_offset 3, -8 movl 8(%esp), %eax movl $150, %ecx movl %eax, %ebx movl %eax, %edx sarl $23, %ebx andl $8388607, %edx andl $255, %ebx orl $8388608, %edx andl $-2147483648, %eax subl %ebx, %ecx js L9 sarl %cl, %edx movl %eax, %ecx negl %ecx xorl %ecx, %edx addl %edx, %eax popl %ebx .cfi_remember_state .cfi_def_cfa_offset 4 .cfi_restore 3 ret .p2align 4,,7 L9: .cfi_restore_state negl %ecx sall %cl, %edx movl %eax, %ecx negl %ecx xorl %ecx, %edx addl %edx, %eax popl %ebx .cfi_restore 3 .cfi_def_cfa_offset 4 ret .cfi_endprocThat's an extreme difference. This actually shows up on the profile too,

fast_trunc_oneis around 30% faster thanfast_trunc_two. Now my question: what is causing this?-

orlp about 12 yearsFor testing purposes I created a gist here where you can easily copy/paste the source and see if you can reproduce the bug on other systems/versions of GCC.

-

zwol about 12 yearsPut the test cases in a directory of their own. Compile them with

-S -O3 -da -fdump-tree-all. This will create many snapshots of the intermediate representation. Walk through them (they're numbered) side by side and you should be able to find the missing optimization in the first case. -

Mads about 12 yearsI'll venture a guess and say you have almost answered your own question by your gcc flag -O3 . Try setting that to -O0 and then you'll notice they are more alike. So it's because of the optimization. I can't answer to how the specifics works, but I remember back when we wrote an optimizer for our compiler at the university we used a lot crazy tricks to optimize our output.

-

zwol about 12 yearsSuggestion two: change all

inttounsigned intand see if the difference vanishes. -

Sebastian Dressler about 12 yearsWrapping ASM difference into words: in

Sebastian Dressler about 12 yearsWrapping ASM difference into words: infast_trunc_twosignis directly used within the shift operation, this is not the case withinfast_trunc_one. Thus the XOR is directly included within the sign, but I'm not able to say why. -

zwol about 12 yearsObligatory editorial comment:

-O3is-O2plus a small handful of optimizations, all of which are irrelevant in this case, and all of which almost always make your code SLOWER. It is meant to be used only on files containing critical inner loops that benefit from hyper-aggressive inlining and unrolling. -

orlp about 12 years@Zack: problem persists if every variable except

exponentis changed to unsigned. (exponentcan not be changed to unsigned because it will break the algorithm). Problem also persists with -O2. -

DCoder about 12 yearsThe two functions seem to be doing slightly different math. While the results might be the same, the expression

DCoder about 12 yearsThe two functions seem to be doing slightly different math. While the results might be the same, the expression(r + shifted) ^ signis not the same asr + (shifted ^ sign). I guess that's confusing the optimizer? FWIW, MSVC 2010 (16.00.40219.01) produces listings that are almost identical to each other: gist.github.com/2430454 -

orlp about 12 years@Zack: I ran a build with

-S -O3 -da -fdump-tree-all. Full results uploaded here: nclabs.org/downloads/wtf.zip -

zwol about 12 yearsSorry, I don't have time to look at it for you. Maybe someone else will.

-

orlp about 12 years@DCoder: Oh damn! I didn't spot that. It isn't the explanation for the difference though. Let me update the question with a new version where this is ruled out.

-

Mysticial about 12 years@nightcracker Apparently, my answer was for revision 2. Your current revision seems to have broken my answer since you flipped the two examples and changed in code. Do you want to flip them back? Or should I just update my answer?

Mysticial about 12 years@nightcracker Apparently, my answer was for revision 2. Your current revision seems to have broken my answer since you flipped the two examples and changed in code. Do you want to flip them back? Or should I just update my answer? -

Daniel Fischer about 12 yearsJust sayin: if

Daniel Fischer about 12 yearsJust sayin: ifi < 0,-signis undefined behaviour. -

orlp about 12 years@DanielFischer: ah yes, in the original version (from which I extracted this as an example)

iis unsigned, so no worries there. -

Gunther Piez about 12 yearsStrange, I remember to have read exact these two algorithms somewhere

-

orlp about 12 years@drhirsch: this is a mocked up version of an algorithm that assumes IEEE754 float to implement the truncate function in software. This is because a regular cast often generates code to save, change and restore the processors rounding mode. This is very slow and clears the integer pipeline, so this version might be a lot faster. While optimizing for GCC I found this weird behaviour. Here's a working version: gist.github.com/f29a0a7813df398fc494 Here's the XOR trick that made GCC do weird things: graphics.stanford.edu/~seander/bithacks.html#ConditionalNegate

-

-

Richard J. Ross III about 12 yearsThis answer really doesn't make sense. If

Richard J. Ross III about 12 yearsThis answer really doesn't make sense. Iffast_trunc_two()is the function getting optimized to this, shouldn't it be faster thanfast_trunc_one()? -

Mysticial about 12 yearsI haven't attempted to answer the speed difference - only why it produces that shorter assembly. As for why it's slower, I'm taking a look into that now.

Mysticial about 12 yearsI haven't attempted to answer the speed difference - only why it produces that shorter assembly. As for why it's slower, I'm taking a look into that now. -

Mysticial about 12 yearsEdit, it looks like I've answered revision two. The current revision flipped the two examples and changed the code a bit... this is confusing.

Mysticial about 12 yearsEdit, it looks like I've answered revision two. The current revision flipped the two examples and changed the code a bit... this is confusing. -

orlp about 12 years@Mysticial: I'm sorry, I had to fix an issue which flipped it around. But to prevent further confusion I won't edit it anymore. So please edit your answer to the current version.

-

Mysticial about 12 years@nightcracker No worries. I've updated my answer to sync with the current version.

Mysticial about 12 years@nightcracker No worries. I've updated my answer to sync with the current version. -

orlp about 12 years@Mysticial: your final statement is no longer true with the new version, making your answer void (it doesn't answer the most important question, "Why does GCC generate such radically different assembly".)

-

Mysticial about 12 yearsAnswer updated again. I'm not sure if it's satisfying enough. But I don't think I can do much better without knowing exactly how the relevant GCC optimization passes work.

Mysticial about 12 yearsAnswer updated again. I'm not sure if it's satisfying enough. But I don't think I can do much better without knowing exactly how the relevant GCC optimization passes work. -

Gunther Piez about 12 yearsGood answer. Even I didn't spot the

(r ^ sign) + sign == requality at the first glance ;-) -

orlp about 12 yearsIs the compiler you won't name some top-secret supercompiler?

-

Janus Troelsen about 12 yearsthe Top Secret compiler is probably Intel

icc. I only have the 32-bit variant but it produces code very similar to this. -

Filip Navara about 12 yearsI also believe it's ICC. The compiler knows that the processor is capable of instruction level parallelism and thus both branches can be computed simultaneously. The overhead of conditional move is much lower than overhead of false branch prediction.

-

R.. GitHub STOP HELPING ICE almost 10 years@Mysticial: Strictly speaking, as long as signed type is wrongly being used in this code, pretty much all of the transformations the compiler is making here are in cases where the behavior is undefined...

-

Peter - Reinstate Monica over 7 yearsWhy did you vandalize your answer? Oded seemed to disagree with the edit as well ;-).

Peter - Reinstate Monica over 7 yearsWhy did you vandalize your answer? Oded seemed to disagree with the edit as well ;-). -

trinity420 over 5 years@PeterA.Schneider all his answers seem to have been vandalized on the same date. I think someone with his (stolen?) account data did it.

trinity420 over 5 years@PeterA.Schneider all his answers seem to have been vandalized on the same date. I think someone with his (stolen?) account data did it.

![HOW TO CONVERT .C FILE TO [ (ASM/S) (.I FILE) (.O FILE) ] FROM GCC](https://i.ytimg.com/vi/P_7VMYjHoeM/hqdefault.jpg?sqp=-oaymwEcCOADEI4CSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLD8AZzhC4avhoYVHB368QXD5lqIMg)