Why does GCC use multiplication by a strange number in implementing integer division?

Solution 1

Integer division is one of the slowest arithmetic operations you can perform on a modern processor, with latency up to the dozens of cycles and bad throughput. (For x86, see Agner Fog's instruction tables and microarch guide).

If you know the divisor ahead of time, you can avoid the division by replacing it with a set of other operations (multiplications, additions, and shifts) which have the equivalent effect. Even if several operations are needed, it's often still a heck of a lot faster than the integer division itself.

Implementing the C / operator this way instead of with a multi-instruction sequence involving div is just GCC's default way of doing division by constants. It doesn't require optimizing across operations and doesn't change anything even for debugging. (Using -Os for small code size does get GCC to use div, though.) Using a multiplicative inverse instead of division is like using lea instead of mul and add

As a result, you only tend to see div or idiv in the output if the divisor isn't known at compile-time.

For information on how the compiler generates these sequences, as well as code to let you generate them for yourself (almost certainly unnecessary unless you're working with a braindead compiler), see libdivide.

Solution 2

Dividing by 5 is the same as multiplying 1/5, which is again the same as multiplying by 4/5 and shifting right 2 bits. The value concerned is CCCCCCCCCCCCCCCD in hex, which is the binary representation of 4/5 if put after a hexadecimal point (i.e. the binary for four fifths is 0.110011001100 recurring - see below for why). I think you can take it from here! You might want to check out fixed point arithmetic (though note it's rounded to an integer at the end).

As to why, multiplication is faster than division, and when the divisor is fixed, this is a faster route.

See Reciprocal Multiplication, a tutorial for a detailed writeup about how it works, explaining in terms of fixed-point. It shows how the algorithm for finding the reciprocal works, and how to handle signed division and modulo.

Let's consider for a minute why 0.CCCCCCCC... (hex) or 0.110011001100... binary is 4/5. Divide the binary representation by 4 (shift right 2 places), and we'll get 0.001100110011... which by trivial inspection can be added the original to get 0.111111111111..., which is obviously equal to 1, the same way 0.9999999... in decimal is equal to one. Therefore, we know that x + x/4 = 1, so 5x/4 = 1, x=4/5. This is then represented as CCCCCCCCCCCCD in hex for rounding (as the binary digit beyond the last one present would be a 1).

Solution 3

In general multiplication is much faster than division. So if we can get away with multiplying by the reciprocal instead we can significantly speed up division by a constant

A wrinkle is that we cannot represent the reciprocal exactly (unless the division was by a power of two but in that case we can usually just convert the division to a bit shift). So to ensure correct answers we have to be careful that the error in our reciprocal does not cause errors in our final result.

-3689348814741910323 is 0xCCCCCCCCCCCCCCCD which is a value of just over 4/5 expressed in 0.64 fixed point.

When we multiply a 64 bit integer by a 0.64 fixed point number we get a 64.64 result. We truncate the value to a 64-bit integer (effectively rounding it towards zero) and then perform a further shift which divides by four and again truncates By looking at the bit level it is clear that we can treat both truncations as a single truncation.

This clearly gives us at least an approximation of division by 5 but does it give us an exact answer correctly rounded towards zero?

To get an exact answer the error needs to be small enough not to push the answer over a rounding boundary.

The exact answer to a division by 5 will always have a fractional part of 0, 1/5, 2/5, 3/5 or 4/5 . Therefore a positive error of less than 1/5 in the multiplied and shifted result will never push the result over a rounding boundary.

The error in our constant is (1/5) * 2-64. The value of i is less than 264 so the error after multiplying is less than 1/5. After the division by 4 the error is less than (1/5) * 2−2.

(1/5) * 2−2 < 1/5 so the answer will always be equal to doing an exact division and rounding towards zero.

Unfortunately this doesn't work for all divisors.

If we try to represent 4/7 as a 0.64 fixed point number with rounding away from zero we end up with an error of (6/7) * 2-64. After multiplying by an i value of just under 264 we end up with an error just under 6/7 and after dividing by four we end up with an error of just under 1.5/7 which is greater than 1/7.

So to implement divison by 7 correctly we need to multiply by a 0.65 fixed point number. We can implement that by multiplying by the lower 64 bits of our fixed point number, then adding the original number (this may overflow into the carry bit) then doing a rotate through carry.

Solution 4

Here is link to a document of an algorithm that produces the values and code I see with Visual Studio (in most cases) and that I assume is still used in GCC for division of a variable integer by a constant integer.

http://gmplib.org/~tege/divcnst-pldi94.pdf

In the article, a uword has N bits, a udword has 2N bits, n = numerator = dividend, d = denominator = divisor, ℓ is initially set to ceil(log2(d)), shpre is pre-shift (used before multiply) = e = number of trailing zero bits in d, shpost is post-shift (used after multiply), prec is precision = N - e = N - shpre. The goal is to optimize calculation of n/d using a pre-shift, multiply, and post-shift.

Scroll down to figure 6.2, which defines how a udword multiplier (max size is N+1 bits), is generated, but doesn't clearly explain the process. I'll explain this below.

Figure 4.2 and figure 6.2 show how the multiplier can be reduced to a N bit or less multiplier for most divisors. Equation 4.5 explains how the formula used to deal with N+1 bit multipliers in figure 4.1 and 4.2 was derived.

In the case of modern X86 and other processors, multiply time is fixed, so pre-shift doesn't help on these processors, but it still helps to reduce the multiplier from N+1 bits to N bits. I don't know if GCC or Visual Studio have eliminated pre-shift for X86 targets.

Going back to Figure 6.2. The numerator (dividend) for mlow and mhigh can be larger than a udword only when denominator (divisor) > 2^(N-1) (when ℓ == N => mlow = 2^(2N)), in this case the optimized replacement for n/d is a compare (if n>=d, q = 1, else q = 0), so no multiplier is generated. The initial values of mlow and mhigh will be N+1 bits, and two udword/uword divides can be used to produce each N+1 bit value (mlow or mhigh). Using X86 in 64 bit mode as an example:

; upper 8 bytes of dividend = 2^(ℓ) = (upper part of 2^(N+ℓ))

; lower 8 bytes of dividend for mlow = 0

; lower 8 bytes of dividend for mhigh = 2^(N+ℓ-prec) = 2^(ℓ+shpre) = 2^(ℓ+e)

dividend dq 2 dup(?) ;16 byte dividend

divisor dq 1 dup(?) ; 8 byte divisor

; ...

mov rcx,divisor

mov rdx,0

mov rax,dividend+8 ;upper 8 bytes of dividend

div rcx ;after div, rax == 1

mov rax,dividend ;lower 8 bytes of dividend

div rcx

mov rdx,1 ;rdx:rax = N+1 bit value = 65 bit value

You can test this with GCC. You're already seen how j = i/5 is handled. Take a look at how j = i/7 is handled (which should be the N+1 bit multiplier case).

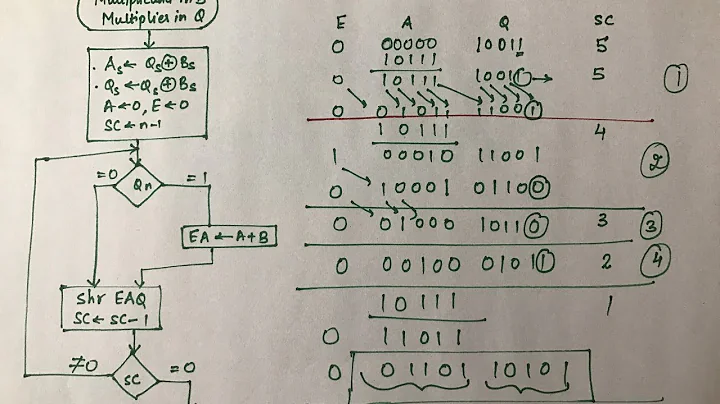

On most current processors, multiply has a fixed timing, so a pre-shift is not needed. For X86, the end result is a two instruction sequence for most divisors, and a five instruction sequence for divisors like 7 (in order to emulate a N+1 bit multiplier as shown in equation 4.5 and figure 4.2 of the pdf file). Example X86-64 code:

; rbx = dividend, rax = 64 bit (or less) multiplier, rcx = post shift count

; two instruction sequence for most divisors:

mul rbx ;rdx = upper 64 bits of product

shr rdx,cl ;rdx = quotient

;

; five instruction sequence for divisors like 7

; to emulate 65 bit multiplier (rbx = lower 64 bits of multiplier)

mul rbx ;rdx = upper 64 bits of product

sub rbx,rdx ;rbx -= rdx

shr rbx,1 ;rbx >>= 1

add rdx,rbx ;rdx = upper 64 bits of corrected product

shr rdx,cl ;rdx = quotient

; ...

To explain the 5 instruction sequence, a simple 3 instruction sequence could overflow. Let u64() mean upper 64 bits (all that is needed for quotient)

mul rbx ;rdx = u64(dvnd*mplr)

add rdx,rbx ;rdx = u64(dvnd*(2^64 + mplr)), could overflow

shr rdx,cl

To handle this case, cl = post_shift-1. rax = multiplier - 2^64, rbx = dividend. u64() is upper 64 bits. Note that rax = rax<<1 - rax. Quotient is:

u64( ( rbx * (2^64 + rax) )>>(cl+1) )

u64( ( rbx * (2^64 + rax<<1 - rax) )>>(cl+1) )

u64( ( (rbx * 2^64) + (rbx * rax)<<1 - (rbx * rax) )>>(cl+1) )

u64( ( (rbx * 2^64) - (rbx * rax) + (rbx * rax)<<1 )>>(cl+1) )

u64( ( ((rbx * 2^64) - (rbx * rax))>>1) + (rbx*rax) )>>(cl ) )

mul rbx ; (rbx*rax)

sub rbx,rdx ; (rbx*2^64)-(rbx*rax)

shr rbx,1 ;( (rbx*2^64)-(rbx*rax))>>1

add rdx,rbx ;( ((rbx*2^64)-(rbx*rax))>>1)+(rbx*rax)

shr rdx,cl ;((((rbx*2^64)-(rbx*rax))>>1)+(rbx*rax))>>cl

Related videos on Youtube

qiubit

Updated on March 08, 2022Comments

-

qiubit about 2 years

I've been reading about

divandmulassembly operations, and I decided to see them in action by writing a simple program in C:File division.c

#include <stdlib.h> #include <stdio.h> int main() { size_t i = 9; size_t j = i / 5; printf("%zu\n",j); return 0; }And then generating assembly language code with:

gcc -S division.c -O0 -masm=intelBut looking at generated

division.sfile, it doesn't contain any div operations! Instead, it does some kind of black magic with bit shifting and magic numbers. Here's a code snippet that computesi/5:mov rax, QWORD PTR [rbp-16] ; Move i (=9) to RAX movabs rdx, -3689348814741910323 ; Move some magic number to RDX (?) mul rdx ; Multiply 9 by magic number mov rax, rdx ; Take only the upper 64 bits of the result shr rax, 2 ; Shift these bits 2 places to the right (?) mov QWORD PTR [rbp-8], rax ; Magically, RAX contains 9/5=1 now, ; so we can assign it to jWhat's going on here? Why doesn't GCC use div at all? How does it generate this magic number and why does everything work?

-

Jabberwocky over 7 yearsgcc optimizes divisions by constants, try divisions by 2,3,4,5,6,7,8 and you will most likely see very different code for each case.

-

Some programmer dude over 7 yearsTry reading the values from the user to see some actual division instructions.

Some programmer dude over 7 yearsTry reading the values from the user to see some actual division instructions. -

qiubit over 7 yearshm, that's strange, I turned off optimizations with

-O0and it still optimizes? -

chux - Reinstate Monica over 7 yearsNote: Magic number

chux - Reinstate Monica over 7 yearsNote: Magic number-3689348814741910323converts toCCCCCCCCCCCCCCCDas auint64_tor just about (2^64)*4/5. -

Clifford over 7 years@qiubit : The compiler will nor perversely generate inefficient code just because optimisation is disabled. A trivial "optimisation" that does not involve code reordering or variable elimination will be performed regardless for example. Essentially a single source statement will translate to the most efficient code for that operation in isolation. The compiler optimisation takes into account the surrounding code rather then just the single statement.

Clifford over 7 years@qiubit : The compiler will nor perversely generate inefficient code just because optimisation is disabled. A trivial "optimisation" that does not involve code reordering or variable elimination will be performed regardless for example. Essentially a single source statement will translate to the most efficient code for that operation in isolation. The compiler optimisation takes into account the surrounding code rather then just the single statement. -

Jester over 7 yearsRead this awesome article: Labor of Division

-

Cody Gray over 7 yearsSome compilers actually will perversely generate inefficient code because optimization is disabled. In particular, they'll do it to make debugging easy, like the ability to set breakpoints on individual lines of code. GCC is, in fact, rather unusual in that it doesn't have a true "no optimizations" mode, because many of its optimizations are constitutively turned on. This is an example of where you can see that with GCC. Clang, on the other hand, and MSVC, will emit a

divinstruction at-O0. (cc @ clifford) -

Peter Cordes over 7 yearsThe key thing here is that this is just one of several possible ways to implement a single C operator with the same inputs as the C abstract machine. It has no impact at all on debugging because it's not optimizing across multiple statements or anything like that. There are architectures without hardware division instructions, so I wonder if

Peter Cordes over 7 yearsThe key thing here is that this is just one of several possible ways to implement a single C operator with the same inputs as the C abstract machine. It has no impact at all on debugging because it's not optimizing across multiple statements or anything like that. There are architectures without hardware division instructions, so I wonder ifgcc -O0has this trick enabled (for all architectures) so division by constants can be compiled sanely on those targets. -

Peter Cordes over 7 yearsBTW, using

Peter Cordes over 7 yearsBTW, using-Os(optimize for small code) will get gcc to use DIV instead of a modular multiplicative inverse: godbolt.org/g/FPB74p. Clang still uses a multiplicative inverse, even when it takes many instructions. It's barely an increase in code-size for small constants like 13, though. (See both gcc and clang for /13 and /12345 in that godbolt link, as functions that take args and return a value, so they don't optimize away the division like yourmain()example.) -

jamesqf over 7 yearsWhat I don't really understand here is why the compiler is generating code to do (an optimized) division at all. The values are constants, so the result could be computed during compilation, no? To see an actual generic division instruction, I'd suggest making the program read in values for i and j.

-

Sneftel over 7 years@jamesqf Operating on constants is the sort of thing that, if you compile with

-O0, GCC assumes you want for some reason. There's very little method to that, though. -

dan04 over 7 yearsGCC ought to provide

dan04 over 7 yearsGCC ought to provide-O-1and-O-2options for deliberately generating inefficient code ;-) -

Jason C over 7 years@fuz I don't know if this precise question duplicated elsewhere, but it is answered implicitly as part of stackoverflow.com/questions/40354978's answers. It's also answered directly at stackoverflow.com/a/12909900/616460 (and that answer is actually a bit more interesting than the ones here, I think, as it describes how to find the magic numbers). It's also pretty easy to find on Google (that last answer was the first search result for "gcc integer division assembler")...

Jason C over 7 years@fuz I don't know if this precise question duplicated elsewhere, but it is answered implicitly as part of stackoverflow.com/questions/40354978's answers. It's also answered directly at stackoverflow.com/a/12909900/616460 (and that answer is actually a bit more interesting than the ones here, I think, as it describes how to find the magic numbers). It's also pretty easy to find on Google (that last answer was the first search result for "gcc integer division assembler")... -

Jason C over 7 years

Jason C over 7 years -

jamesqf over 7 years@Sneftel: Sure, I get the no optimization thing, but then why is it doing the optimization of replacing DIV by shifts &c? Very little method, indeed :-)

-

user253751 over 7 years@CodyGray However, this particular optimization doesn't affect any debugger I'm aware of. Of course you'd expect "no optimizations" to avoid moving lines of code around or interleaving them, but this doesn't do that.

-

Loupax over 7 yearsAnd then you realize that humans optimize their division with lookup tables...

-

phuclv over 7 years

phuclv over 7 years -

rcgldr over 7 yearsFor i/7, the code is a bit more complicated: mov r8, i | mov rax, 2635249153387078803 | mul r8 | sub r8, rdx | shr r8, 1 | add rdx, r8 | shr rdx, 2 | mov rax,rdx .

rcgldr over 7 yearsFor i/7, the code is a bit more complicated: mov r8, i | mov rax, 2635249153387078803 | mul r8 | sub r8, rdx | shr r8, 1 | add rdx, r8 | shr rdx, 2 | mov rax,rdx . -

plugwash almost 6 years"What I don't really understand here is why the compiler is generating code to do (an optimized) division at all." AIUI gcc at O0 will optimise within a statement but not between statements. If we change the division to 9/5 it gets optimised out. If we change the division so both inputs are variables it generates a div instruction.

-

rcgldr about 4 yearsNote that if trying to replace the assembly divide instruction, which for example, divides a 128 bit integer by a 64 bit integer, the "magic" multiplier will be 128 or 129 bits, requiring 4 multiplies, then right shifting the upper 128 bits of the 256 bit product. For 129 bit multiplier, it's still 4 multiplies, with a subtract, shift right 1 bit, add, shift right some fixed number of bits. This is still useful on processors like current X86 where the multiply is more than 4 times as fast as divide.

rcgldr about 4 yearsNote that if trying to replace the assembly divide instruction, which for example, divides a 128 bit integer by a 64 bit integer, the "magic" multiplier will be 128 or 129 bits, requiring 4 multiplies, then right shifting the upper 128 bits of the 256 bit product. For 129 bit multiplier, it's still 4 multiplies, with a subtract, shift right 1 bit, add, shift right some fixed number of bits. This is still useful on processors like current X86 where the multiply is more than 4 times as fast as divide.

-

-

fuz over 7 yearsActually not. If I recall correctly, the slowest arithmetic operation on modern intel processors are

fbstpandfbldif I recall correctly. -

fuz over 7 yearsHuch? When I first read your answer I was pretty sure it read "Integer division is the slowest operation..."

-

Cody Gray over 7 yearsI'm not sure it's fair to lump together FP and integer operations in a speed comparison, @fuz. Perhaps Sneftel should be saying that division is the slowest integer operation you can perform on a modern processor? Also, some links to further explanations of this "magic" have been provided in comments. Do you think they'd be appropriate to collect in your answer for visibility? 1, 2, 3

-

Peter Cordes over 7 yearsBecause the sequence of operations is functionally identical ... this is always a requirement, even at

Peter Cordes over 7 yearsBecause the sequence of operations is functionally identical ... this is always a requirement, even at-O3. The compiler has to make code that gives correct results for all possible input values. This only changes for floating point with-ffast-math, and AFAIK there are no "dangerous" integer optimizations. (With optimization enabled, the compiler might be able to prove something about the possible range of values which lets it use something that only works for non-negative signed integers for example.) -

Peter Cordes over 7 yearsThe real answer is that gcc -O0 still transforms code through internal representations as part of turning C into machine code. It just happens that modular multiplicative inverses are enabled by default even at

Peter Cordes over 7 yearsThe real answer is that gcc -O0 still transforms code through internal representations as part of turning C into machine code. It just happens that modular multiplicative inverses are enabled by default even at-O0(but not with-Os). Other compilers (like clang) will use DIV for non-power-of-2 constants at-O0. related: I think I included a paragraph about this in my Collatz-conjecture hand-written asm answer -

Sneftel over 7 years@PeterCordes My point about "functionally identical" was WRT the grouped instructions themselves. No reordering or hoisting or anything involved; just a set of instructions that are identical to the div.

-

Peter Cordes over 7 yearsRight yeah, I figured that out while replying to the OP's comments on the question. It's just another way to implement the C

Peter Cordes over 7 yearsRight yeah, I figured that out while replying to the OP's comments on the question. It's just another way to implement the C/operator, and doesn't need to optimize between C statements or do anything that would affect debugging. But note that neither the C standard nor the gcc documentation guarantees that there's a mode that maps C statements to target asm in any kind of simple way. -

Peter Cordes over 7 yearsI made an edit which removes the "functionally identical" wording which I think different people might interpret different ways. It's your answer, so please review my edit. (I changed first para to "integer operation", because interrupts and main-memory round trips are even slower).

Peter Cordes over 7 yearsI made an edit which removes the "functionally identical" wording which I think different people might interpret different ways. It's your answer, so please review my edit. (I changed first para to "integer operation", because interrupts and main-memory round trips are even slower). -

Peter Cordes over 7 yearsAlso, are you sure about using IDIV for signed division by constants? I don't think gcc

Peter Cordes over 7 yearsAlso, are you sure about using IDIV for signed division by constants? I don't think gcc-O2or-O3would ever do that, unless there are divisors for which no inverse exists. gcc6.2-O0uses IDIV even when it takes a ton of instructions: godbolt.org/g/GmWfg8. I think you should just say that you get DIV and IDIV for non-constant divisors. -

abligh over 7 years@user2357112 feel free to post your own answer, but I don't agree. You can think of the multiply as a 64.0 bit by 0.64 bit multiply giving a 128 bit fixed point answer, of which the lowest 64 bits are discarded, then a division by 4 (as I point out in the first para). You may well be able to come up with an alternative modular arithmetic answer which explains the bit movements equally well, but I'm pretty sure this works as an explanation.

-

plugwash over 7 yearsThe value is actually "CCCCCCCCCCCCCCCD" The last D is important, it makes sure that when the result is truncated exact divisions come out with the right answer.

-

user2357112 over 7 yearsNever mind. I didn't see that they're taking the upper 64 bits of the 128-bit multiplication result; it's not something you can do in most languages, so I didn't initially realize it was happening. This answer would be much improved by an explicit mention of how taking the upper 64 bits of the 128-bit result is equivalent to multiplying by a fixed-point number and rounding down. (Also, it'd be good to explain why it has to be 4/5 instead of 1/5, and why we have to round 4/5 up instead of down.)

-

abligh over 7 years@plugwash thanks fixed - I was being lazy in typing, but have now made the rounding point.

-

John Dvorak over 7 years@plugwash I'm still not entirely sure how this correct truncation is ensured. Do you happen to have a reference handy?

John Dvorak over 7 years@plugwash I'm still not entirely sure how this correct truncation is ensured. Do you happen to have a reference handy? -

plugwash over 7 yearsIf the number you multiply by is slightly less than 4/5 you WILL get wrong results after truncation of the final answer for any number that is exactly divisible by 5.

-

plugwash over 7 yearsIf it's slightly more than 4/5 then things get messier, you would have to work out the worst case error and then check if that error was large enough to cause incorrect rounding.

-

abligh over 7 years@plugwash: IE the error is less than 2^-63 in the multiplicand, so even multiplying it by 2^64, it's lost if shifted right 2?

-

plugwash over 7 yearsAfaict you would have to work out how big an error is needed to throw a division by 5 upwards across a rounding boundry, then compare that to the worst case error in your caclulation. Presumablly the gcc developers have done so and concluded that it will always give the correct results.

-

plugwash over 7 yearsActually you probablly only need to check the 5 highest possible input values, if those round correctly everything else should too.

-

Sneftel over 7 years@PeterCordes No, that's my mistake. I vaguely remembered that there were some numbers which created problems for the multiplicative inverse method, but I was mistaken.

-

Sneftel over 7 years@PeterCordes And yeah, I think GCC (and lots of other compilers) have forgotten to come up with a good rationale for "what sorts of optimizations apply when optimization is disabled". Having spent the better part of a day tracking down an obscure codegen bug, I'm a bit annoyed about that just at the moment.

-

dan04 over 7 years@Sneftel: That's probably just because the number of application developers who actively complain to the compiler developers about their code running faster than expected is relatively small.

dan04 over 7 years@Sneftel: That's probably just because the number of application developers who actively complain to the compiler developers about their code running faster than expected is relatively small. -

Peter Cordes over 7 yearsThis answer turned modular multiplicative inverses from "math that looks more complicated than I want to take the time for" into something that makes sense. +1 for the easy-to-understand version. I've never needed to do anything other than just use compiler-generated constants, so I've only skimmed other articles explaining the math.

Peter Cordes over 7 yearsThis answer turned modular multiplicative inverses from "math that looks more complicated than I want to take the time for" into something that makes sense. +1 for the easy-to-understand version. I've never needed to do anything other than just use compiler-generated constants, so I've only skimmed other articles explaining the math. -

plugwash over 7 yearsI don't see anything to do with modular arithmetic in the code at all. Dunno where some other commenters are getting that from.

-

Peter Cordes over 7 yearsIt's modulo 2^n, like all integer math in a register. en.wikipedia.org/wiki/…

Peter Cordes over 7 yearsIt's modulo 2^n, like all integer math in a register. en.wikipedia.org/wiki/… -

plugwash over 7 yearsNo operation in the sequence can possiblly wrap since the output of the multiplication is double the size of the inputs. So modulo behaviour is irrelevent.

-

plugwash over 7 yearsIf he was taking the lower 64 bits of the muliply rather than the upper 64 bits we would be talking about modular arithmetic but he isn't, so we aren't.

-

Peter Cordes over 7 yearsMaybe I'm misusing the terminology, but I thought this was the proper name for the technique. Further googling shows that other discussion of the technique usually just calls it "multiplicative inverses". However, Granlund & Montgomery's paper about the technique and implementing it in gcc does say "The multiplicative inverse

Peter Cordes over 7 yearsMaybe I'm misusing the terminology, but I thought this was the proper name for the technique. Further googling shows that other discussion of the technique usually just calls it "multiplicative inverses". However, Granlund & Montgomery's paper about the technique and implementing it in gcc does say "The multiplicative inversedinvofdoddmodulo 2^N can be found...". I think the "modular" comes in because it only has to work for inputs from 0 to 2^n - 1, rather than for any mathematical integer. I agree there's no modulo when using it. -

Justin Time - Reinstate Monica over 7 years@Sneftel MSVC is more cautious about doing some micro-optimisations when you don't explicitly tell it to optimise code; for at least some versions, this appears to be one of them.

Justin Time - Reinstate Monica over 7 years@Sneftel MSVC is more cautious about doing some micro-optimisations when you don't explicitly tell it to optimise code; for at least some versions, this appears to be one of them. -

harold over 7 years@PeterCordes modular multiplicative inverses are used for exact division, afaik they're not useful for general division

-

Peter Cordes over 7 years@harold: So is there a specific name for this technique of doing fixed-width-integer division by multiplying with a "magic" number and using the high half of the result? Is it just "multiplicative inverse"? I was hoping for something more specific, which wouldn't apply to the similar floating-point optimization.

Peter Cordes over 7 years@harold: So is there a specific name for this technique of doing fixed-width-integer division by multiplying with a "magic" number and using the high half of the result? Is it just "multiplicative inverse"? I was hoping for something more specific, which wouldn't apply to the similar floating-point optimization. -

harold over 7 years@PeterCordes multiplication by fixed-point reciprocal? I don't know what everyone calls it but I'd probably call it that, it's fairly descriptive

-

rcgldr over 7 yearsIn the case of some divisors, such as j = i / 7, a 65 bit multiplier is needed. The code that deals with this case is a bit more complicated.

rcgldr over 7 yearsIn the case of some divisors, such as j = i / 7, a 65 bit multiplier is needed. The code that deals with this case is a bit more complicated. -

Peter Cordes over 7 yearsThat paper describes implementing it in gcc, so I think it's a safe assumption that the same algo is still used.

Peter Cordes over 7 yearsThat paper describes implementing it in gcc, so I think it's a safe assumption that the same algo is still used. -

Ed Grimm about 5 yearsThat paper dated 1994 describes implementing it in gcc, so there's been time for gcc to update its algorithm. Just in case others don't have the time to check to see what the 94 in that URL means.

Ed Grimm about 5 yearsThat paper dated 1994 describes implementing it in gcc, so there's been time for gcc to update its algorithm. Just in case others don't have the time to check to see what the 94 in that URL means.