Why does Unicode have big or little endian but UTF-8 doesn't?

Solution 1

Note: Windows uses the term "Unicode" for UCS-2 due to historical reasons – originally that was the only way to encode Unicode codepoints into bytes, so the distinction didn't matter. But in modern terminology, both examples are Unicode, but the first is specifically UCS-2 or UTF-16 and the second is UTF-8.

UCS-2 had big-endian and little-endian because it directly represented the codepoint as a 16-bit 'uint16_t' or 'short int' number, like in C and other programming languages. It's not so much an 'encoding' as a direct memory representation of the numeric values, and as an uint16_t can be either BE or LE on different machines, so is UCS-2. The later UTF-16 just inherited the same mess for compatibility.

(It probably could have been defined for a specific endianness, but I guess they felt it was out of scope or had to compromise between people representing different hardware manufacturers or something. I don't know the actual history.)

Meanwhile, UTF-8 is a variable-length encoding, which can use anywhere from 1 to 6 bytes to represent a 31-bit value. The byte representation has no relationship to the CPU architecture at all; instead there is a specific algorithm to encode a number into bytes, and vice versa. The algorithm always outputs or consumes bits in the same order no matter what CPU it is running on.

Solution 2

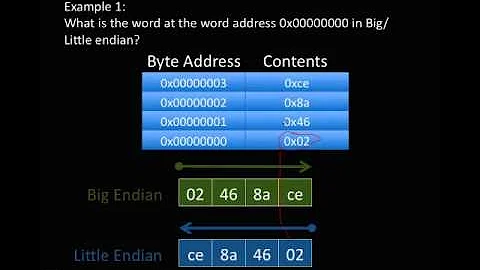

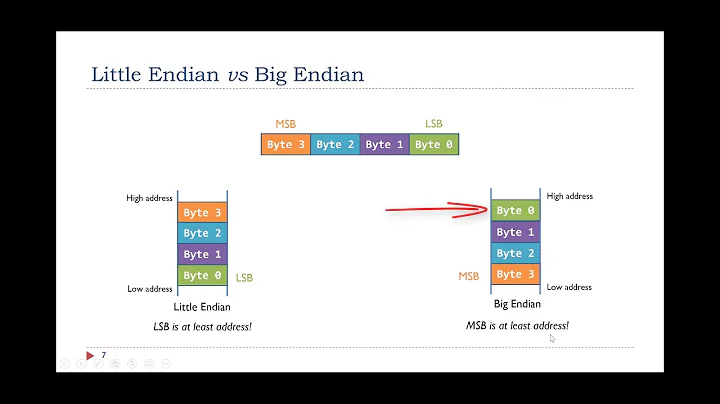

Exactly the same reason why an array of bytes (char[] in C or byte[] in many other languages) doesn't have any associated endianness but arrays of other types larger than byte do. It's because endianness is the way you store a value that's represented by multiple bytes into memory. If you have just a single byte then you only have 1 way to store it into memory. But if an int is comprised of 4 bytes with index 1 to 4 then you can store it in many different orders like [1, 2, 3, 4], [4, 3, 2, 1], [2, 1, 4, 3], [3, 1, 2, 4]... which is little endian, big endian, mixed endian...

Unicode has many different encodings called Unicode Transformation Format with the major ones being UTF-8, UTF-16 and UTF-32. UTF-16 and UTF-32 work on a unit of 16 and 32 bits respectively, and obviously when you store 2 or 4 bytes into byte-addressed memory you must define an order of the bytes to read/write. UTF-8 OTOH work on a byte unit, hence there's no endianness in it

Solution 3

Here is the official, primary source material (published in March, 2020):

"The Unicode® Standard, Version 13.0"

Chapter 2: General Structure (page 39 of the document; page 32 of the PDF)

2.6 Encoding Schemes

The discussion of Unicode encoding forms (ed. UTF-8, UTF-16, and UTF-32) in the previous section was concerned with the machine representation of Unicode code units. Each code unit is represented in a computer simply as a numeric data type; just as for other numeric types, the exact way the bits are laid out internally is irrelevant to most processing. However, interchange of textual data, particularly between computers of different architectural types, requires consideration of the exact ordering of the bits and bytes involved in numeric representation. Integral data, including character data, is serialized for open interchange into well-defined sequences of bytes. This process of byte serialization allows all applications to correctly interpret exchanged data and to accurately reconstruct numeric values (and thereby character values) from it. In the Unicode Standard, the specifications of the distinct types of byte serializations to be used with Unicode data are known as Unicode encoding schemes.

Byte Order. Modern computer architectures differ in ordering in terms of whether the most significant byte or the least significant byte of a large numeric data type comes first in internal representation. These sequences are known as “big-endian” and “little-endian” orders, respectively. For the Unicode 16- and 32-bit encoding forms (UTF-16 and UTF32), the specification of a byte serialization must take into account the big-endian or little-endian architecture of the system on which the data is represented, so that when the data is byte serialized for interchange it will be well defined.

A character encoding scheme consists of a specified character encoding form plus a specification of how the code units are serialized into bytes. The Unicode Standard also specifies the use of an initial byte order mark (BOM) to explicitly differentiate big-endian or little-endian data in some of the Unicode encoding schemes. (See the “Byte Order Mark” subsection in Section 23.8, Specials.)

When a higher-level protocol supplies mechanisms for handling the endianness of integral data types, it is not necessary to use Unicode encoding schemes or the byte order mark. In those cases Unicode text is simply a sequence of integral data types.

For UTF-8, the encoding scheme consists merely of the UTF-8 code units (= bytes) in sequence. Hence, there is no issue of big- versus little-endian byte order for data represented in UTF-8. However, for 16-bit and 32-bit encoding forms, byte serialization must break up the code units into two or four bytes, respectively, and the order of those bytes must be clearly defined. Because of this, and because of the rules for the use of the byte order mark, the three encoding forms of the Unicode Standard result in a total of seven Unicode encoding schemes, as shown in Table 2-4.

The endian order entry for UTF-8 in Table 2-4 is marked N/A because UTF-8 code units are 8 bits in size, and the usual machine issues of endian order for larger code units do not apply. The serialized order of the bytes must not depart from the order defined by the UTF-8 encoding form. Use of a BOM is neither required nor recommended for UTF-8, but may be encountered in contexts where UTF-8 data is converted from other encoding forms that use a BOM or where the BOM is used as a UTF-8 signature. See the “Byte Order Mark” subsection in Section 23.8, Specials, for more information.

Please also see the following related information:

- UNICODE CHARACTER ENCODING MODEL (UTR # 17): Character Encoding Scheme (CES)

- Unicode's FAQ on "UTF-8, UTF-16, UTF-32 & BOM"

Related videos on Youtube

Tiina

Need help on idiomatic English! You are more than welcome to correct English I used in my posts!

Updated on September 18, 2022Comments

-

Tiina over 1 year

Tiina over 1 yearUNICODE uses 2 bytes for one character, so it has big or little endian difference. For example, the character 哈 is

54 C8in hex. And its UTF-8 therefore is:11100101 10010011 10001000UTF-8 uses 3 bytes to present the same character, but it does not have big or little endian. Why?

-

Solomon Rutzky almost 3 yearsTo be fair, the term "Unicode" as an encoding is only used in Windows to refer specifically to UTF-16 Little Endian. Hence, "Unicode" doesn't use bytes or have endianness as it's not an encoding for the vast majority of the usage of that term. It might help to update the question to state "UTF-16" instead of "UNICODE" to be clearer for most readers, especially those who don't work in Windows enough to know how it misused the term "Unicode".

Solomon Rutzky almost 3 yearsTo be fair, the term "Unicode" as an encoding is only used in Windows to refer specifically to UTF-16 Little Endian. Hence, "Unicode" doesn't use bytes or have endianness as it's not an encoding for the vast majority of the usage of that term. It might help to update the question to state "UTF-16" instead of "UNICODE" to be clearer for most readers, especially those who don't work in Windows enough to know how it misused the term "Unicode". -

oakad almost 3 yearsLogically, though, UTF-8 is simply mandated by the standard to be "network byte order", aka big endian, on the wire. Indeed, other varieties of "prefix vli" encoding exist which are little endian on the wire.

-

Solomon Rutzky almost 3 yearsAlso, please see Unicode's FAQ on "UTF-8, UTF-16, UTF-32 & BOM" as it should answer many questions you might have 😺.

Solomon Rutzky almost 3 yearsAlso, please see Unicode's FAQ on "UTF-8, UTF-16, UTF-32 & BOM" as it should answer many questions you might have 😺. -

Mast almost 3 yearsThe field of Unicode has become so convoluted and complicated, it wouldn't surprise me if there were multiple reasons.

Mast almost 3 yearsThe field of Unicode has become so convoluted and complicated, it wouldn't surprise me if there were multiple reasons. -

Solomon Rutzky almost 3 years@Mast There is only one reason: the concept of ordering cannot apply to single items (in this case: bytes). Please see my answer for the official explanation.

Solomon Rutzky almost 3 years@Mast There is only one reason: the concept of ordering cannot apply to single items (in this case: bytes). Please see my answer for the official explanation. -

Mark Ransom almost 3 years@SolomonRutzky Microsoft didn't misuse the term "Unicode", they were simply an early adopter back when UCS-2 was the only way to encode it. Their usage made sense when they adopted it. Their sin was to keep that terminology long after other encodings came into widespread use.

-

Admin almost 2 years@SolomonRutzky you are wrong. If UTF-8 encodes multi-byte characters its no longer "single bytes". And it must use big-endian format for network comparability. For 2 bytes:

Admin almost 2 years@SolomonRutzky you are wrong. If UTF-8 encodes multi-byte characters its no longer "single bytes". And it must use big-endian format for network comparability. For 2 bytes:110xxxxx 10xxxxxxfor 3 bytes1110xxxx 10xxxxxx 10xxxxxx, etc. Big-endianness is encoded in a way that most significant bits are encoded in first byte of the sequence, and lower significance bits in later bytes.

-

-

Solomon Rutzky almost 3 yearsHi there. Some notes: 1) "Unicode" in Windows world is specifically UTF-16 Little Endian given that they also have a "BigEndianUnicode" that is UTF-16 BE. 2) UTF-16 is also variable-length due to supplementary characters being comprised of surrogate pairs of two UTF-16 code units. And 3) For all practical purposes, UTF-8 uses a max of 4 bytes given that the Unicode Standard states explicitly that there is a hard limit of encoding just the 1,114,111 max code points defined by Unicode (i.e. the UTF-16 limit).

Solomon Rutzky almost 3 yearsHi there. Some notes: 1) "Unicode" in Windows world is specifically UTF-16 Little Endian given that they also have a "BigEndianUnicode" that is UTF-16 BE. 2) UTF-16 is also variable-length due to supplementary characters being comprised of surrogate pairs of two UTF-16 code units. And 3) For all practical purposes, UTF-8 uses a max of 4 bytes given that the Unicode Standard states explicitly that there is a hard limit of encoding just the 1,114,111 max code points defined by Unicode (i.e. the UTF-16 limit). -

Jörg W Mittag almost 3 yearsTechnically speaking, UTF-8 works on octets, not bytes. A byte is simply the smallest individually addressable unit of memory. On many popular architectures, a byte is 8 bits, but that is in no way guaranteed. While 6-bit, 7-bit, 9-bit, 10-bit, 11-bit, and 12-bit bytes are mostly a historic curiosity at this point, there are architectures in use today that have e.g. 16-bit, 24-bit, and 32-bit bytes, as well as architectures that have variable-sized bytes and architectures that don't have bytes at all (or have 1-bit bytes, however you want to look at it).

-

user1937198 almost 3 yearsAnd if you implementing unicode on a 9 bit architecture, then maybe datatracker.ietf.org/doc/html/rfc4042 could be relevant, but realistically you are probably going to just pretend you have 8 bit bytes with UTF-8 and ignore the extra bit.

-

user3840170 almost 3 yearsUTF-16 is an encoding. It’s just not an encoding into bytes, but 16-bit words. Encoding those words into bytes is where endianness comes into play.

user3840170 almost 3 yearsUTF-16 is an encoding. It’s just not an encoding into bytes, but 16-bit words. Encoding those words into bytes is where endianness comes into play. -

v.oddou almost 3 yearsI think we can say that endianness should never come into play. it only does when we want to serialize machine format as is. which there is only a case for, for performance reasons. otherwise you never have to care about it. endianness is just an opaque internal representation of words in the electronics. A serialized format, especially of octets, abstracts away endianness through specifying how the bytes are laid down. so when you read you reconstruct the memory objects appropriately (without thinking about it).

v.oddou almost 3 yearsI think we can say that endianness should never come into play. it only does when we want to serialize machine format as is. which there is only a case for, for performance reasons. otherwise you never have to care about it. endianness is just an opaque internal representation of words in the electronics. A serialized format, especially of octets, abstracts away endianness through specifying how the bytes are laid down. so when you read you reconstruct the memory objects appropriately (without thinking about it). -

rubenvb almost 3 yearsNote that in recent Windows 10 versions (1903 to be exact), the "ASCII" Win32 API also accepts valid UTF-8 if you set up the process codepage correctly: docs.microsoft.com/en-us/windows/uwp/design/globalizing/….

-

Voo almost 3 years@Solomon "Unicode" in Windows refers to UCS2 not UTF-16 most of the time (there are I think some newer APIs that are UTF-16 compliant, but the vast majority aren't). When talking about various or fixed length encoding in regards to Unicode people generally mean unicode codepoints and not glyphs.. after all you yourself state that UTF-8 is limited to 4 byte in the next sentence - if we were talking about glyphs the maximum size would be waaaayy longer. (In practice one should care about glyphs not codepoints though I agree which makes the fact that UTF-16 is fixed length rather irrelevant)

-

marshal craft almost 3 yearsI looked up this on msdn if that helps.

-

Solomon Rutzky almost 3 yearsHello Marshal. You mention documentation, so please provide links to exactly what you read so that others may review. Also, endianness is very much not Windows-specific. Endianness is the ordering of bytes within multi-byte datatypes (e.g. 16, 32, and 64 bit ints). A single byte does not have order; it just is. Since UTF-8 uses 8 bit code units, the concept of endianness can't apply. UTF-8 is multiple, single-byte units whereas UTF-32 is a single, multi-byte unit. UTF-16 is multiple, multi-byte units. Please see phuclv's answer (correct) answer.

Solomon Rutzky almost 3 yearsHello Marshal. You mention documentation, so please provide links to exactly what you read so that others may review. Also, endianness is very much not Windows-specific. Endianness is the ordering of bytes within multi-byte datatypes (e.g. 16, 32, and 64 bit ints). A single byte does not have order; it just is. Since UTF-8 uses 8 bit code units, the concept of endianness can't apply. UTF-8 is multiple, single-byte units whereas UTF-32 is a single, multi-byte unit. UTF-16 is multiple, multi-byte units. Please see phuclv's answer (correct) answer. -

Solomon Rutzky almost 3 years@v.oddou It is incorrect, or at least misleading, to state that we never, or even rarely, need to care about endianness. Any time data is shared (via file, over the network, etc) it is important for the consumer to understand how to interpret the stream of bytes. Reading with the wrong endianness results in data loss, just like reading with the wrong code page. This is why Byte Order Marks are so important: they explicitly define the correct way to interpret the bytes that follow, reducing potential misinterpretation (outside of processes that don't understand BOMs).

Solomon Rutzky almost 3 years@v.oddou It is incorrect, or at least misleading, to state that we never, or even rarely, need to care about endianness. Any time data is shared (via file, over the network, etc) it is important for the consumer to understand how to interpret the stream of bytes. Reading with the wrong endianness results in data loss, just like reading with the wrong code page. This is why Byte Order Marks are so important: they explicitly define the correct way to interpret the bytes that follow, reducing potential misinterpretation (outside of processes that don't understand BOMs). -

Solomon Rutzky almost 3 years@Voo "Unicode" in Windows is UTF-16 LE. A) "Save As..." dialogs, choosing "save with encoding", offers both Unicode (CP 1200) and Unicode (Big-Endian ; CP 1201) (see docs.microsoft.com/en-us/windows/win32/intl/…). B) UCS-2 hasn't been an encoding since Unicode 2.0 in 1996; it just indicates a process not interpreting surrogate pairs into their supplementary code point. Please see unicode.org/faq/utf_bom.html#utf16-11 and unicode.org/versions/Unicode13.0.0/appC.pdf#G1823 (section C.2 Encoding Forms in ISO/IEC 10646 on page 11).

Solomon Rutzky almost 3 years@Voo "Unicode" in Windows is UTF-16 LE. A) "Save As..." dialogs, choosing "save with encoding", offers both Unicode (CP 1200) and Unicode (Big-Endian ; CP 1201) (see docs.microsoft.com/en-us/windows/win32/intl/…). B) UCS-2 hasn't been an encoding since Unicode 2.0 in 1996; it just indicates a process not interpreting surrogate pairs into their supplementary code point. Please see unicode.org/faq/utf_bom.html#utf16-11 and unicode.org/versions/Unicode13.0.0/appC.pdf#G1823 (section C.2 Encoding Forms in ISO/IEC 10646 on page 11). -

user1686 almost 3 yearsdocs.microsoft.com/en-us/cpp/cpp/… "In the Microsoft compiler, [wchar_t] represents a 16-bit wide character used to store Unicode encoded as UTF-16LE, the native character type on Windows operating systems."

-

Mark Ransom almost 3 years"you aren't supposed to worry about endianess with utf-8 on Windows" - the "on Windows" part is redundant, you don't need to worry about UTF-8 endianness no matter which OS you encounter it on. That's one of its beauties.

-

Voo almost 3 years@Solomon I'm painfully aware what UCS2 is. My point is exactly that many win32 APIs don't handle surrogate pairs. Yes the text controls and similar APIs can handle them these days, but goodness there are a lot of problematic APIs out there (and it's dubious if they could be fixed even if Microsoft wanted to given backcomp constraints - the file system at the very least it's a lost cause). This has even lead to such tragic things such as WTF-8, which if you never heard of or had need for you can call yourself lucky.

-

plugwash almost 3 yearsWTF-8 seems to be mozilla's fancy name for "UTF-8 with lone surrogates allowed", a concept that has been around quite a bit longer than said mozilla spec document (for example python has supported it since version 3.1 in 2009)

-

supercat almost 3 years@user1937198: Gaaah! I have no beef with the existence of 36-bit architectures, but code for such platforms which needs to exchange data with other platforms should process it as a stream of octets. Having such systems use other formats for data that will be exchanged with other systems makes them less useful rather than more useful.

-

Luaan almost 3 yearsIt's not really about the registers (though of course it affects which endianness you choose), it's just the fact that in UTF-16, the smallest unit is 16 bits, in UTF-32 it's 32 bits and in UTF-8 it's 8 bits. We've managed to almost perfectly solve how to store 8-bit numbers in a multi-platform way, but the war over 16 and 32 bit numbers is still ongoing :) Or said another, way, UTF-8 is a stream of bytes - it already has a fixed order. UTF-16 is a stream of 16-bit numbers, again ordered; but they way we actually store the individual 16-bit numbers still isn't uniform.

-

Deduplicator almost 3 yearsRegisters don't have an endianness at all. By the time data arrives there, endianness is done for. The whole processor probably has a slight or strong preference for little- or big-endian though, as avoiding it is not economic. Some can be configured (at least for integral numbers), floating point can be different, instructions can be different, and then there are some weird ones which are neither.

-

kbolino almost 3 years@supercat note the date on that RFC

-

marshal craft almost 3 yearsI expected this. It's funny how hateful you are to things which are more sophisticated then you, even when you immediately steal from it.

-

marshal craft almost 3 yearsI posted a Linux perspective answer here one time, and then disproportionate amount of up votes. This answer is perfectly fine. If I read a Linux system specific answer that's fine. This answer is great for a Windows developer using utf-8. I do not need to post document, it's easily found. Don't bother commenting further, with the same lines of argument cause i do not care, block ban what ever but just save your self the time maybe or don't.

-

marshal craft almost 3 yearsNot if you had said, in addition, the utf standard that's fine, that is great stuff, but that doesn't discredit this answer. I mean i fully support standards. And Windows is hugely beneficial to standards, including the utf-8 standards. You know a lot of people though are trying to bridge the gap, and develop, and need a conducive explanation of whats going on, say on Windows. This answer does that by basically saying the o.s. Handles it for you in a constructive useful manner. I saw the other answers and nobody was saying anything remotely close.

-

marshal craft almost 3 yearsAll too often, i see the same thing. I post an answer that functionaly explains what the issue is. Here endianess is abstracted away up and to the interface of the stream in or out of the file. There is natural topology to it. I explain this, nobody else did. All the sudden the light bulb turns on, you tell me now the whole utf-8 today does this. Well you didn't say anything, you just going on about Wikipedia endianess definition. You figure it out cause of my answer. I get down votes, which i would be completely ashamed of such behavior but your not. And if that's not all

-

marshal craft almost 3 yearsI get a little bit of moron Linux evangelicalism, fan boy stuff on top of it too. And for completely analogous reason. Windows ounce again comes up with just one random competent solution here, and you and all your crownies steal it and make a b.s. Standard, that really Windows wrote. It the same way with Windows driver and uefi. But at end of day you can't do no real stuff by your self, nobody that could, would act so childish and jealously as you do.

-

marshal craft almost 3 yearsAnd you know this is like 100th time I've had this convo, so if I'm overly harsh, i apologize, i don't have the time to go through and edit the way i said everything to make it friendly. But you people need to hear it. It good for you. I get nothing. I know i don't get nothing before i even started this answer. And if i had the same mentality as you, towards Linux that would be bad for me. Linux does do a lot. One thing that is bad jn recent years is intel, and uefi, and secure boot. And one organization to make a strongest fight against it, has been Linux. A lot of Windows users may be

-

marshal craft almost 3 yearsHaving to migrate to Linux the way things are going. But Linux relies on Windows. And there might not be the hardware for anyone if this stuff continues. You people have to wake up. Unless some wonder boy kid starts up a micro cap x64 architecture cpu company, we might all be out.

-

marshal craft almost 3 yearsCompanies like Microsoft that paved the way for internet, healthy ecosystem of oems, don't last long. Ounce those people retire, move on, what we like about a company is gone. We take a lot for granted. And unless you want to be doing all your computjng with 1 finger on a 8 bit shit pie, I'd wake up if i were you.

-

David42 almost 3 years@Deduplicator I didn't say registers have endianness. The endianness actually resides in the machine language instructions which copy words between registers and RAM. For example, x86 processors can load a 16 and 32 bit little-endian word into a register in a single instruction, but several instructions are required to load a bit-endian word from RAM.

David42 almost 3 years@Deduplicator I didn't say registers have endianness. The endianness actually resides in the machine language instructions which copy words between registers and RAM. For example, x86 processors can load a 16 and 32 bit little-endian word into a register in a single instruction, but several instructions are required to load a bit-endian word from RAM. -

supercat almost 3 years@kbolino: Heh. Most of the other Foolish April RFCs I've noticed put less effort into appearing serious.

-

Voo almost 3 years@plugwash Oh definitely - the whole problem has been around since UTF-16 and surrogates came up, so definitely much longer. It's just one example of the problems caused because many (win32, well the problem is bigger than just Windows, but that's the topic at hand) APIs allow invalid UTF-16 or handle surrogates correctly.

-

David42 almost 3 yearsJust for the record, UTF-8 doesn't come from Windows or Linux. The inventors are Dave Prosser of Unix System Laboratories and Ken Thompson of Bell Labs. The problem with your answer is not that you mentioned Windows or cited Windows documentation as a source. The problem is that the words "on Windows" at the end of the third sentence seem to suggest that UTF-8 might be different on other operating systems. The question asked is why is UTF-8 the same everywhere. You seem to imply that this may not be so. That is incorrect. Delete the stray "on Windows" to fix your answer.

David42 almost 3 yearsJust for the record, UTF-8 doesn't come from Windows or Linux. The inventors are Dave Prosser of Unix System Laboratories and Ken Thompson of Bell Labs. The problem with your answer is not that you mentioned Windows or cited Windows documentation as a source. The problem is that the words "on Windows" at the end of the third sentence seem to suggest that UTF-8 might be different on other operating systems. The question asked is why is UTF-8 the same everywhere. You seem to imply that this may not be so. That is incorrect. Delete the stray "on Windows" to fix your answer.