Why is a boolean 1 byte and not 1 bit of size?

Solution 1

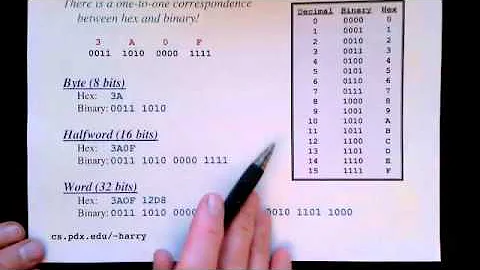

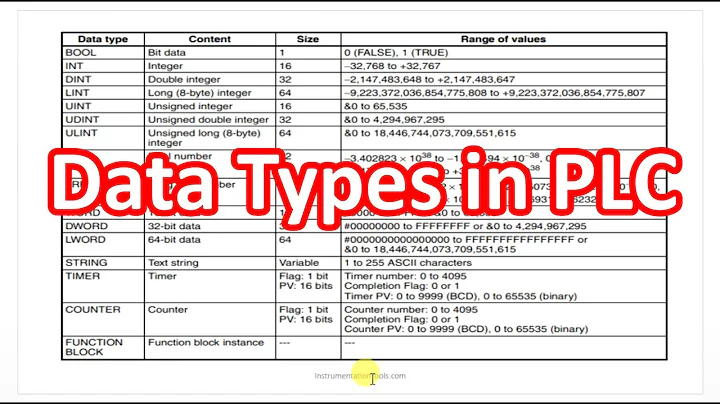

Because the CPU can't address anything smaller than a byte.

Solution 2

From Wikipedia:

Historically, a byte was the number of bits used to encode a single character of text in a computer and it is for this reason the basic addressable element in many computer architectures.

So byte is the basic addressable unit, below which computer architecture cannot address. And since there doesn't (probably) exist computers which support 4-bit byte, you don't have 4-bit bool etc.

However, if you can design such an architecture which can address 4-bit as basic addressable unit, then you will have bool of size 4-bit then, on that computer only!

Solution 3

Back in the old days when I had to walk to school in a raging blizzard, uphill both ways, and lunch was whatever animal we could track down in the woods behind the school and kill with our bare hands, computers had much less memory available than today. The first computer I ever used had 6K of RAM. Not 6 megabytes, not 6 gigabytes, 6 kilobytes. In that environment, it made a lot of sense to pack as many booleans into an int as you could, and so we would regularly use operations to take them out and put them in.

Today, when people will mock you for having only 1 GB of RAM, and the only place you could find a hard drive with less than 200 GB is at an antique shop, it's just not worth the trouble to pack bits.

Solution 4

The easiest answer is; it's because the CPU addresses memory in bytes and not in bits, and bitwise operations are very slow.

However it's possible to use bit-size allocation in C++. There's std::vector specialization for bit vectors, and also structs taking bit sized entries.

Solution 5

Because a byte is the smallest addressible unit in the language.

But you can make bool take 1 bit for example if you have a bunch of them eg. in a struct, like this:

struct A

{

bool a:1, b:1, c:1, d:1, e:1;

};

Related videos on Youtube

Asm

Updated on December 12, 2021Comments

-

Asm over 2 years

In C++,

- Why is a boolean 1 byte and not 1 bit of size?

- Why aren't there types like a 4-bit or 2-bit integers?

I'm missing out the above things when writing an emulator for a CPU

-

Matthieu M. over 13 yearsIn C++ you can "pack" the data by using bit-fields.

Matthieu M. over 13 yearsIn C++ you can "pack" the data by using bit-fields.struct Packed { unsigned int flag1 : 1; unsigned int flag2: 1; };. Most compilers will allocate a fullunsigned int, however they deal with the bit-twiddling by themselves when you read / write. Also they deal by themselves with the modulo operations. That is aunsigned small : 4attribute has a value between 0 and 15, and when it should get to 16, it won't overwrite the preceding bit :) -

Peter Cordes over 2 yearsBut note / beware that it's not thread-safe for different threads to write adjacent bitfields in the same object. It is thread-safe for them to write separate

Peter Cordes over 2 yearsBut note / beware that it's not thread-safe for different threads to write adjacent bitfields in the same object. It is thread-safe for them to write separateboolmembers of a struct/class. This means compilers are allowed to implement bitfield writes by loading the containing word, doing some bit-manipulation, then just storing the whole word (not doing an atomic CAS). Related: C++ memory model and race conditions on char arrays - that's why word-addressable machines can't use 1-bytecharin a C11 or C++11 implementaiton.

-

Hogan over 13 yearsNot sure I would agree that bitwise operations are slow. ands, nots, xors etc are very fast. It is typically the implementation of the bitwise operations that are slow. At the machine level they are quite fast. Branching... now that is slow.

-

Pedro Loureiro over 13 yearsJust to make it more clear, if you create a vector of booleans and put 24 booleans into it, it will be taking 3 bytes only (3*8). If you put another boolean in, it will take another byte. Yet, if you push another boolean, it won't take any extra bytes because it uses the "free" bits in the last byte

-

Pedro Loureiro over 13 yearsyeah, I also doubt bitewise operations are slow :)

-

John Dibling over 13 yearsThe bit vectors do not create bit-sized allocations. they create byte-sized allocations. It is not possible to allocate a single bit.

John Dibling over 13 yearsThe bit vectors do not create bit-sized allocations. they create byte-sized allocations. It is not possible to allocate a single bit. -

Martin York over 13 yearsFind it unlikely that bit operations are slow. But std::vector<bool> is slow (now considered a mistake in most circles (but we can't undo it)).

Martin York over 13 yearsFind it unlikely that bit operations are slow. But std::vector<bool> is slow (now considered a mistake in most circles (but we can't undo it)). -

sukru over 13 yearsReading a single bit in a bit vector requires three operations: shift, and, and another shift again. Writing is two. Whereas individual bytes can be accessed with a single one.

-

Paul Tomblin over 13 yearsI believe even the MIPS cpu will give you access to an individual byte, although there is a performance penalty.

-

Ryan Li over 13 years@Paul: Yes you are right, but generally the word-specific

lw/sware much more widely used. -

Gene Bushuyev over 13 yearsDon't know about MIPS, but IA-64 architecture allows only access on 64-bit boundary.

Gene Bushuyev over 13 yearsDon't know about MIPS, but IA-64 architecture allows only access on 64-bit boundary. -

fredoverflow over 13 yearsActually, the four x86 instructions

bt,bts,btrandbtccan address single bits! -

Sam over 13 yearsI think

btaddresses a byte offset and then tests the bit at a given offset, regardless, when specifying an address you go in bytes...bit offset literals would get a bit wordy (excuse the pun). -

fredoverflow over 13 years@six: You can load the beginning of an array in one register and then the relative "bit offset" into a second. The bit offset is not limited to "within one byte", it can be any 32 bit number.

-

Sam over 13 yearsGotcha, I hadn't used

btwith a register, only with an 8 bit immediate. -

Steve Jessop over 13 years"you will have int of size 4-bit then, on that computer only" - no you won't, because the standard forbids CHAR_BIT from being less than 8. If the addressable unit on the architecture is less than 8 bits, then a C++ implementation will just have to present a memory model that's different from the underlying hardware's memory model.

-

Nawaz over 13 years@Steve : oops... I overlooked that. Removed

Nawaz over 13 years@Steve : oops... I overlooked that. Removedintandcharfrom my post. -

Maxim Egorushkin over 13 yearsWell, yes and no. We do have bitfields, and we could have a bitfield pointer, that is address + bit number. Obviously, such a pointer would not be convertible to void* because of the extra storage requirement for the bit number.

-

Armstrongest over 13 yearsExcept when dealing with Flags. Things like Setting multiple options on something... eg. 00000001 + 00000100 = 00000101.

Armstrongest over 13 yearsExcept when dealing with Flags. Things like Setting multiple options on something... eg. 00000001 + 00000100 = 00000101. -

Steve Jessop over 13 yearsyou can't have a 4-bit

booleither, because thecharis the smallest addressable unit in C++, regardless of what the architecture can address with its own opcodes.sizeof(bool)must have a value of at least 1, and adjacentboolobjects must have their own addresses in C++, so the implementation just has to make them bigger and waste memory. That's why bit fields exist as a special case: the bitfield members of a struct aren't required to be separately addressable, so they can be smaller than achar(although the whole struct still can't be). -

Nawaz over 13 years@ Steve Jessop : that seems interesting. could you please give me the reference from the language specification where it says

Nawaz over 13 years@ Steve Jessop : that seems interesting. could you please give me the reference from the language specification where it sayscharis the smallest addressable unit in C++? -

Steve Jessop over 13 yearsclosest specific statement is probably 3.9/4: "The object representation of an object of type T is the sequence of N unsigned char objects taken up by the object of type T, where N equals sizeof(T)". Obviously

sizeof(bool)can't be 0.5 :-) I suppose an implementation could legally provide sub-byte pointers as an extension, but "ordinary" objects like bool, allocated in ordinary ways, have to do what the standard says. -

Jay over 13 years@Atomix: I almost never do this anymore. If I need two flags, I create two boolean fields. I used to write code where I'd pack flags like that and then write "if flags & 0x110 != 0 then" or the like, but this is cryptic and these days I generally make separate fields and write "if fooFlag || barFlag" instead. I wouldn't rule out the possibility of cases where packing flags like that is better for some reason, but it's no longer necessary to save memory like it used to be.

-

Armstrongest over 13 yearsYeah, I was thinking of flags that are already baked into a language. For example, in C# I'm sure RegexOptions uses a flag to set options:

Armstrongest over 13 yearsYeah, I was thinking of flags that are already baked into a language. For example, in C# I'm sure RegexOptions uses a flag to set options:RegexOptions.CultureInvariant + RegexOptions.IgnoreCase -

Jay over 13 years@Atomix: Oh, sure. MS Windows SDK had tons of these built in, so you had no choice but to deal with them.

-

einpoklum about 8 yearsActually, it is quite worth your trouble to pack bits, if you want your computation to be fast - on that large amount of data you store in memory. Packing booleans isn't just for smaller storage - it means you can read your boolean input arrays 8 times faster (in terms of bandwidth) as when they're unpacked, and that's often quite significant. Also, you can use bit operations, like popc (population count) which speeds up your work on the CPU itself.

-

Jay about 8 years@einpoklum If you are writing large arrays of booleans to a file, yes, bandwidth savings could be significant. But it would have to be a binary file, not human readable, which is rather the opposite direction from where we're going these days. Well, I suppose if you're writing an XML file, instead of separate tags for each boolean you could write a single flag field as an integer, but that would be rather contrary to the philosophy of XML. Similarly, you could pack booleans into an int before writing to a DB. IMHO the gain of a small storage and bandwidth saving would be far outweighed ...

-

Jay about 8 years... by the loss in ease of comprehension and maintainability from having a single "flag" field that the programmer has to pick apart rather than separate fields for each flag with a friendly name. (Do DB engines pack booleans? I'll have to check on this.) If you had a thousand booleans in every record, yes, it could be worth it. But more often we have two or three.

-

einpoklum about 8 years@Jay: I didn't say anything about a file. Think in-memory processing of large amounts of data.

-

Jay about 8 years@einpoklum In memory, I'd think you'd have to have truly huge numbers of booleans for the time to move them around to be a significant percentage of CPU time in any real application. And for that to even be relevant you'd have to be moving or copying them, not just building an array and processing it. So sure, if you have an app where you're manipulating a million booleans, making copies of the list, shuffling it around, whatever, then yes, might possibly be worth it. But for the more normal case where you have, like 20 in the whole app? No.

-

einpoklum about 8 yearsTruly huge number of booleans is what you work with every day if you do: DBMSes, machine learning, scientific simulations, and a whole host of other things. And - just working on them means copying them - from memory into cache. A million bools is nothing, think billions.

-

Jay about 8 years@einpoklum (shrug) I guess the projects you work on are different from mine. I certainly store millions of Booleans on a DB, but I typically load only a tiny fraction of them into memory at a time.

-

gEdringer almost 8 yearsWhat a waste of resources though.

gEdringer almost 8 yearsWhat a waste of resources though. -

Paul Tomblin almost 8 years@gEdringer if you're trying to cram as much information into sub-byte fields as you can fit, there are always bitfields.

-

André van Schoubroeck about 6 yearsNot only computers are being programmed, we have microcontrollers as well, and then we are indeed talking about kilobytes of ram

-

Jay about 6 years@AndrévanSchoubroeck Fair enough. If you're working on some highly constrained environment, AND you need to manipulate large numbers of booleans simultaneously, then packing them could be worthwhile.

-

Alexis Wilke almost 5 yearsI think 8 bits was also chosen for the instruction set. That gives you 256 possible instructions which is enough to implement a good CPU (like the 6502 and Z80). Less bits and you have to use tricks or multiple bytes to implement instructions which makes the CPU more complicated.

-

Peter Cordes about 4 years@PedroLoureiro: correct, it's not that bitwise operations are slow, it's that read-modify-write to update one bit in a byte is much worse than write-only to store a whole byte (or word on machines without efficient byte stores). It takes extra operations, and RMW would create a false dependency between adjacent bits in a word, preventing out-of-order execution of what would otherwise be independent work.

Peter Cordes about 4 years@PedroLoureiro: correct, it's not that bitwise operations are slow, it's that read-modify-write to update one bit in a byte is much worse than write-only to store a whole byte (or word on machines without efficient byte stores). It takes extra operations, and RMW would create a false dependency between adjacent bits in a word, preventing out-of-order execution of what would otherwise be independent work. -

Peter Cordes about 4 yearsCPU cache is always "highly constrained", like 32k L1d cache, 256k L2 cache. Having your working set fit in cache is a very big deal. Packing bitmaps is a very good thing for a big Sieve of Eratosthenes, but yes if even unpacked

Peter Cordes about 4 yearsCPU cache is always "highly constrained", like 32k L1d cache, 256k L2 cache. Having your working set fit in cache is a very big deal. Packing bitmaps is a very good thing for a big Sieve of Eratosthenes, but yes if even unpackedbool[]will fit in cache then it's typically better at small sizes. If you use SIMD to handle multiple elements at once, doing 128 bools per 16-byte SIMD vector instead of 16 is a nice speedup (for bitwise AND, or popcount). Or with AVX512, you can compare into a mask, or load a chunk of bitmap from memory and use it as a mask for SIMD vector elements. -

Jay about 4 years@PeterCordes Maybe some of you folks work on very different kinds of apps than I do. I work mostly on "business apps". So I might say, read the item record for the product the customer ordered, it has a boolean for "is this item taxable", if true add it's cost to the taxable cost counter before calculating sales tax, if false don't. Maybe there's another boolean or two for other attributes of an item. That sort of thing. It is very, very rare for me to manipulate arrays of booleans. Rather, I might have 5 distinct boolean values in the whole program. ...

-

Jay about 4 years... They're almost always manipulated individually, not in any sort of groups. So packing and unpacking them would be more work for the CPU, not less. If you have an app where you have some large array of booleans and you have to AND array A with array B bitwise, yes, very different story. I'm struggling to recall a time when I ever wrote such an app, but it's certainly not inconceivable. For IT departments I've worked in, though, that would be a rare to non-existant case.

-

Peter Cordes about 4 yearsIn that case yes, separate booleans, one per byte, is a good design for modern CPUs. The last code I worked on that dealt with arrays of masks was data analysis for flow cytometry; you get 4 to 20 separate measurements per cell, over millions of cells, and want to look for correlations. So you might use one column as X, another as Y, and select rows inside a box or something. Then use that mask along with another mask on a different X Y pair. Then do whatever stats on the matching elements that pass both masks. Packed masks work great with SIMD

Peter Cordes about 4 yearsIn that case yes, separate booleans, one per byte, is a good design for modern CPUs. The last code I worked on that dealt with arrays of masks was data analysis for flow cytometry; you get 4 to 20 separate measurements per cell, over millions of cells, and want to look for correlations. So you might use one column as X, another as Y, and select rows inside a box or something. Then use that mask along with another mask on a different X Y pair. Then do whatever stats on the matching elements that pass both masks. Packed masks work great with SIMD -

Peter Cordes about 4 yearsOther use-cases for packed bits include Linux system calls to set thread affinity, like

Peter Cordes about 4 yearsOther use-cases for packed bits include Linux system calls to set thread affinity, likesched_setaffinity(2)which takes an opaque type that represents the set of all CPU numbers this thread can be scheduled on. The actual implementation is a bitmask = array of packed bits. -

Peter Cordes about 4 yearsAlso related: Howard Hinnant's article: On

Peter Cordes about 4 yearsAlso related: Howard Hinnant's article: Onvector<bool>talks about the fact that a bit-array is a potentially useful data structure, it's merely unfortunate that it's calledvector<bool>in C++. -

Jay about 4 years@PeterCordes Yes, absolutely, if I had a set of booleans that were logically the "same idea" so that I naturally think of them as an "array" in some sense, and if I'm then going to mask or filter them or otherwise perform bitwise operations on them, then packing them into bytes might make good sense. As I said earlier, I'm hard pressed to think of the last time I worked on an application where those conditions applied, but you give a couple of good examples, and I'm sure with a little imagination one could think of others.

-

phuclv over 3 yearssome CPUs/MCUs like 8051 do have bit-addressable memory and can address single bits. Besides many architectures like ARM or PowerPC have instructions to access bitfields so you can access single bits efficiently

phuclv over 3 yearssome CPUs/MCUs like 8051 do have bit-addressable memory and can address single bits. Besides many architectures like ARM or PowerPC have instructions to access bitfields so you can access single bits efficiently -

Paul Tomblin over 3 years@phuclv unless you can make a pointer to it, you can't make it a datatype.

-

Peter Cordes over 2 years@PaulTomblin: you're correct, DEC Alpha is the only ISA in recent memory with byte-addressable memory but without byte actual byte load/store instructions. (See Can modern x86 hardware not store a single byte to memory? for details).

Peter Cordes over 2 years@PaulTomblin: you're correct, DEC Alpha is the only ISA in recent memory with byte-addressable memory but without byte actual byte load/store instructions. (See Can modern x86 hardware not store a single byte to memory? for details). -

Peter Cordes over 2 years@GeneBushuyev: Wrong for IA-64. csee.umbc.edu/portal/help/architecture/aig.pdf#page=41 confirms that IA-64

Peter Cordes over 2 years@GeneBushuyev: Wrong for IA-64. csee.umbc.edu/portal/help/architecture/aig.pdf#page=41 confirms that IA-64ldinstructions supported an access size of 1, 2, 4, or 8 bytes. (For sizes less than 64-bit, the result is zero-extended into a 64-bit reg, like a normal RISC rather than x86 partial-registers.) Since IA-64 was designed by Intel with hopes of taking over from x86 (via emulation, or in early CPUs via hardware support for an IA-32 mode), unaligned word load/store is also optionally supported (even in IA-64 mode).