Why is RAID 1+6 not a more common layout?

Solution 1

Generally I'd say RAID 1+0 will tend to be more widely used than 1+5 or 1+6 because RAID 1+0 is reliable enough and provides marginally better performance and more usable storage.

I think most people would consider the failure of a full RAID 1 pair within a RAID 1+0 group an incredibly rare event that's worth breaking out the backups for, and probably aren't too enthusiastic about getting less than 50% of their raw disk capacity as usable space.

If you need better reliability than RAID 1+0, then go for it! But most people probably don't need that.

Solution 2

The practical answer lies somewhere at the intersection of hardware RAID controller specifications, average disk sizes, drive form-factors and server design.

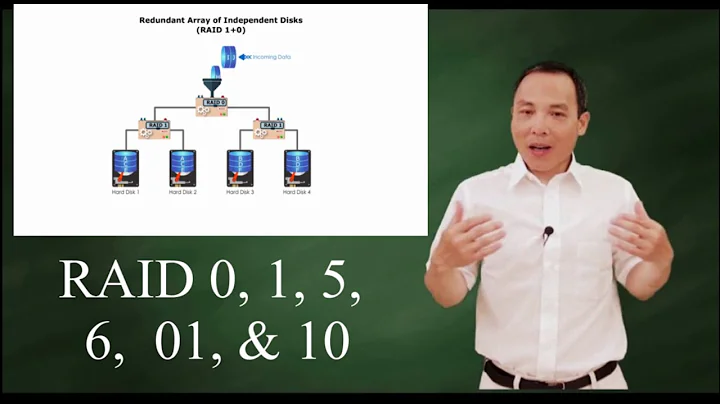

Most hardware RAID controllers are limited in the RAID levels they support. Here are the RAID options for an HP ProLiant Smart Array controller:

`[raid=0|1|1adm|1+0|1+0adm|5|50|6|60]`

Note: "adm" is just triple mirroring.

LSI RAID controllers support RAID 0, 1, 5, 6, 10, 50, and 60.

So beyond RAID 10, these controllers only offer RAID 50 and 60 as nested levels. LSI (whose controllers Dell sells as PERC) and HP make up most of the enterprise server storage adapter market. That's the major reason you don't see something like RAID 1+6, or RAID 61, in the field.

Beyond that consideration, nested RAID levels beyond RAID 10 require a relatively large number of disks. Given the increasing drive capacities available today (with 3.5" nearline SAS and SATA drives), coupled with the fact that many server chassis are designed around 8 x 2.5" drive cages, there isn't much of an opportunity to physically configure RAID 1+6, or RAID 61.

The areas where you may see something like RAID 1+6 would be large-chassis software RAID solutions. Linux MD RAID or ZFS are definitely capable of it. But at that scale, drive failure can be mitigated by hot or cold spare disks. RAID reliability isn't much of an issue these days, provided you avoid toxic combinations of RAID level and hardware (e.g. RAID 5 with 6TB disks). In addition, read and write performance would be abstracted by tiering and caching layers. Average storage workloads typically benefit from one or the other.

So in the end, it seems as though the need/demand just isn't there.

Solution 3

You get diminishing returns on reliability. RAID 6 is pretty unlikely to suffer a compound failure even on nasty SATA drives with an unrecoverable bit error rate (UBER) of 1 in 10^14 bits read. On FC/SAS drives your UBER is 1 in 10^16, and you get considerably more performance too.
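To put rough numbers on those UBER figures, here is a minimal sketch that estimates the chance of hitting at least one unrecoverable read error (URE) while reading a given amount of data, for example the surviving drives during a rebuild. It assumes every bit fails independently with probability 1/UBER and uses decimal terabytes; the 6 TB read size is purely illustrative, not a figure from this answer.

```python
import math

TB = 10**12  # decimal terabyte in bytes, as drive capacities are usually quoted


def p_unrecoverable_read(bytes_read: float, uber: float) -> float:
    """Probability of at least one unrecoverable read error (URE).

    Assumes every bit read fails independently with probability 1/uber,
    e.g. uber=1e14 for a drive rated at "1 in 10^14 bits".
    """
    bits = bytes_read * 8
    # 1 - (1 - 1/uber)**bits, written with log1p/expm1 so it stays
    # accurate when 1/uber is tiny
    return -math.expm1(bits * math.log1p(-1.0 / uber))


for uber in (1e14, 1e16):
    p = p_unrecoverable_read(6 * TB, uber)
    print(f"read 6 TB at 1 in {uber:.0e} bits: P(>=1 URE) = {p:.4f}")
```

A single URE during a rebuild is what hurts RAID 5; a RAID 6 group with one failed drive still has its second parity to correct it, which is why a RAID 6 compound failure stays unlikely.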

RAID group reliability doesn't protect you against accidental deletion (so you need the backups anyway).

Beyond certain levels of RAIDing, your odds of a compound failure on disks become lower than the odds of a compound failure of supporting infrastructure (power, network, aircon leak, etc.).

Write penalty. Each incoming write on your RAID 61 will trigger 12 I/O operations (done naively). RAID 6 is already painful in 'low tier' scenarios in terms of random-write IOPS per TB (and in higher tiers, your failure rate is 100x better anyway).
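The write-penalty arithmetic can be sketched as back-end I/Os per front-end random write. The penalty values are the rule-of-thumb figures used in this thread (RAID 10: 2, 3-way mirror: 3, RAID 6: 6, naive RAID 1+6: 12); the 150 IOPS per drive is a hypothetical figure chosen only for illustration, and write-back caching or full-stripe writes will do better in practice.

```python
# Rule-of-thumb write penalties (back-end I/Os per small random write),
# as quoted in this thread; caching and full-stripe writes reduce them.
WRITE_PENALTY = {
    "RAID 10": 2,
    "3-way mirror": 3,
    "RAID 6": 6,
    "RAID 1+6 (naive)": 12,
}


def random_write_iops(drives: int, iops_per_drive: float, penalty: int) -> float:
    """Front-end random-write IOPS the whole drive set can sustain."""
    return drives * iops_per_drive / penalty


# Hypothetical example: 12 drives at an assumed 150 random IOPS each
for layout, penalty in WRITE_PENALTY.items():
    print(f"{layout:18} {random_write_iops(12, 150, penalty):6.0f} write IOPS")
```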

It's not a '25% reduction'; it's a further 25% reduction on top of the mirroring. Your 16TB is turning into 6TB, so you're getting 37.5% usable storage. You need nearly 3x as many disks per unit of capacity, and nearly 3x as much datacentre space. You would probably get more reliability by simply making smaller RAID 6 sets. I haven't done the number crunching, but try, for example, the sums for RAID 6 in 3x 3+2 sets (15 drives, less storage overhead than your RAID 10). Or do 3-way mirrors instead.
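The capacity side of this trade-off is easy to tabulate. This sketch assumes identical drives and treats each layout as one parity group whose members may themselves be mirror sets; striping several identical groups together (such as 3x 3+2) leaves the usable fraction unchanged. The layout names and group sizes are illustrative.

```python
from fractions import Fraction


def usable_fraction(members: int, parity: int, copies: int = 1) -> Fraction:
    """Usable / raw capacity for identical drives.

    members: drives (or mirror sets) in one parity group
    parity:  parity members in that group (0 for plain striping)
    copies:  drives per mirror set (1 = no mirroring)
    """
    return Fraction(members - parity, members * copies)


layouts = {
    "RAID 10 (pairs)": usable_fraction(8, 0, 2),
    "RAID 1+6 (8 pairs)": usable_fraction(8, 2, 2),
    "RAID 6, 3+2 sets": usable_fraction(5, 2),
    "3-way mirrors": usable_fraction(4, 0, 3),
}

for name, f in layouts.items():
    print(f"{name:20} usable {float(f):6.1%}  raw drives per usable drive {float(1 / f):.2f}")
```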

Having said that, it's more common than you think to do it for multi-site DR. I run replicated storage arrays where I've got RAID 5/6/DP RAID groups replicated asynchronously or synchronously to a DR site. (Don't do sync if you can possibly avoid it: it looks good, but it's actually horrible.)

With my NetApps, that's a MetroCluster with some mirrored aggregates. With my VMAXes we use Symmetrix Remote Data Facility (SRDF). And my 3PARs do Remote Copy.

It's expensive, but provides 'data centre catching fire' levels of DR.

Regarding triple mirrors: I've used them, not as direct RAID resilience measures, but rather as full clones as part of a backup strategy. Sync a third mirror, split it, mount it on a separate server and back that up using entirely different infrastructure. And sometimes rotate the third mirror as a recovery option.

The point I'm trying to make is that in my direct experience as a storage admin - in a ~40,000 spindle estate (yes, we're replacing tens of drives daily) - we've had to go to backups for a variety of reasons in the last 5 years, but none of them have been RAID group failure. We do debate the relative merits and acceptable recovery time, recovery point and outage windows. And underpinning all of this is ALWAYS the cost of the extra resilience.

Our arrays all do media scrubbing and failure prediction, and aggressively spare and test drives.

Even if there were a suitable RAID implementation, the cost-benefit just isn't there. The money spent on the storage space would be better invested in a longer retention period or a more frequent backup cycle. Or faster comms. Or just generally faster spindles, because even with identical resilience numbers, faster rebuilding of spares improves your compound failure probability.
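The point about faster rebuilds can be made concrete with the usual constant-failure-rate approximation. This is a sketch under loud assumptions: drive lifetimes are independent and exponentially distributed, and the 1,000,000-hour MTTF and the 11 surviving drives are hypothetical. Real failures are correlated (same batch, same age), so treat the absolute numbers as optimistic; the relative effect of halving the rebuild window is the point.

```python
import math


def p_second_failure(remaining_drives: int, rebuild_hours: float, mttf_hours: float) -> float:
    """Chance that another drive fails before a rebuild completes.

    Assumes independent, exponentially distributed drive lifetimes
    (constant failure rate 1/mttf_hours per drive).
    """
    rate = remaining_drives / mttf_hours        # combined failures per hour
    return -math.expm1(-rate * rebuild_hours)   # 1 - exp(-rate * t)


MTTF = 1_000_000  # hypothetical vendor-style MTTF in hours
slow = p_second_failure(11, 24, MTTF)
fast = p_second_failure(11, 12, MTTF)
print(f"24 h rebuild: {slow:.2e}  12 h rebuild: {fast:.2e}  ratio {slow / fast:.2f}")
```

For small probabilities the risk scales almost linearly with the rebuild window, so halving rebuild time roughly halves the chance of a compound failure.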

So I would offer this answer to your question:

You do not see RAID 1+6 and 1+5 very often, because the cost benefit simply doesn't stack up. Given a finite amount of money, and given a need to implement a backup solution in the first place, all you're doing is spending money to reduce your outage frequency. There are better ways to spend that money.

Solution 4

Modern and advanced systems don't implement shapes like that because they're excessively complicated, completely unnecessary, and contrary to any semblance of efficiency.

As others have pointed out, the ratio of raw space to usable space is essentially 3:1. That is essentially three copies (two redundant copies). Because of the calculation cost of "raid6" (twice over, if mirrored), and the resulting loss of IOPS, this is very inefficient. In ZFS, which is very well designed and tuned, the equivalent solution, capacity-wise, would be to create a stripe of 3-way mirrors.

As an example, instead of a mirror of 6-way raid6/raidz2 shapes (12 drives total), which would be very inefficient (and is not something ZFS has any mechanism to implement), you would have 4x 3-way mirrors (also 12 drives). And instead of 1 drive's worth of IOPS, you would have 4 drives' worth of IOPS. Especially with virtual machines, that is a vast difference. The total bandwidth for the two shapes may be very similar in sequential reads/writes, but the stripe of 3-way mirrors would definitely be more responsive with random reads/writes.

To sum up: raid1+6 is just generally impractical, inefficient, and unsurprisingly not anything anyone serious about storage would consider developing.

To clarify the IOPS disparity: With a mirror of raid6/raidz2 shapes, with each write, all 12 drives must act as one. There is no ability for the total shape to split the activity up into multiple actions that multiple shapes can perform independently. With a stripe of 3-way mirrors, each write may be something that only one of the 4 mirrors must deal with, so another write that comes in doesn't have to wait for the whole omnibus shape to deal with before looking at further actions.
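The two 12-drive shapes compared above can be written down side by side. This is a toy model, not a ZFS API: it assumes, as this answer does, that small random I/O scales with the number of independent top-level groups (vdevs), each delivering roughly one drive's worth of random IOPS. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Layout:
    name: str
    vdevs: int            # independent top-level groups that can serve I/O in parallel
    drives_per_vdev: int
    data_per_vdev: int    # drives' worth of usable capacity in each vdev

    @property
    def drives(self) -> int:
        return self.vdevs * self.drives_per_vdev

    @property
    def usable_drives(self) -> int:
        return self.vdevs * self.data_per_vdev


# Both shapes use 12 drives and give 4 drives' worth of usable space.
mirror_of_raid6 = Layout("mirror of two 6-wide RAID 6", vdevs=1, drives_per_vdev=12, data_per_vdev=4)
stripe_of_3way = Layout("stripe of 4 three-way mirrors", vdevs=4, drives_per_vdev=3, data_per_vdev=1)

for lay in (mirror_of_raid6, stripe_of_3way):
    # Rule of thumb: random IOPS ~ number of vdevs x one drive's IOPS
    print(f"{lay.name:30} drives={lay.drives} usable={lay.usable_drives} "
          f"random IOPS ~ {lay.vdevs}x one drive")
```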

Solution 5

Since no one has said it directly enough: RAID 6 write performance is not marginally worse. It is horrible beyond description when put under load.

Sequential writing is OK, and as long as caching, write merging, etc. are able to cover it up, it looks OK. Under high load, things look bad, and this is the main reason a 1+5/6 setup is almost never used.

James Haigh

Updated on September 18, 2022

Comments

-

James Haigh almost 2 years

Why are the nested RAID levels 1+5 or 1+6 almost unheard of? The nested RAID levels Wikipedia article is currently missing their sections. I don't understand why they are not more common than RAID 1+0, especially when compared to RAID 1+0 triple mirroring.

It is apparent that rebuilding time is becoming increasingly problematic as drive capacities are increasing faster than their performance or reliability. I'm told that RAID 1 rebuilds quicker and that a RAID 0 array of RAID 1 pairs avoids the issue, but surely so would a RAID 5 or 6 array of RAID 1 pairs. I'd at least expect them to be a common alternative to RAID 1+0.

For 16 of 1TB drives, here are my calculations of the naïve probability of resorting to backup, i.e. with the simplifying assumption that the drives are independent and fail with equal probability:

Cumulative probability of resorting to backup, in thousandths (m = 0.001, i.e. milli), by number of failed drives:

| RAID | Storage | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1+0 | 8TB | 0 | 67 | 200 | 385 | 590 | 776 | 910 | 980 | 1000 | 1000 | 1000 |
| 1+5 | 7TB | 0 | 0 | 0 | 15 | 77 | 217 | 441 | 702 | 910 | 1000 | 1000 |
| 1+6 | 6TB | 0 | 0 | 0 | 0 | 0 | 7 | 49 | 179 | 441 | 776 | 1000 |

If this is correct, then it's quite clear that RAID 1+6 is exceptionally more reliable than RAID 1+0 for only a 25% reduction in storage capacity.

As is the case in general, the theoretical write throughput (not counting seek times) is storage capacity / array size × number of drives × write throughput of the slowest drive in the array (RAID levels with redundancy have a higher write amplification for writes that don't fill a stripe, but this depends on chunk size), and the theoretical read throughput is the sum of the read throughputs of the drives in the array (except that RAID 0, RAID 5, and RAID 6 can still be theoretically limited by the slowest, 2nd slowest, and 3rd slowest drive read throughputs respectively). I.e., assuming identical drives, that would be respectively 8×, 7×, or 6× maximum write throughput and 16× maximum read throughput.
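The first table can be reproduced under the same naive assumption (every set of k failed drives equally likely) by counting failure patterns: choose which RAID 1 pairs lose both drives and which lose only one. This is a sketch of one way to do the count, not necessarily how the figures above were derived; the function name is hypothetical, and `tolerated_pairs` is 0, 1 or 2 for a RAID 0, 5 or 6 top level.

```python
from fractions import Fraction
from math import comb


def p_backup_pairs(pairs: int, tolerated_pairs: int, failed: int) -> Fraction:
    """P(data loss) when `failed` of 2*pairs drives fail, all subsets equally likely.

    The top level (RAID 0/5/6) survives up to `tolerated_pairs` RAID 1 pairs
    in which both drives have failed.
    """
    total = comb(2 * pairs, failed)
    lost = 0
    for full in range(tolerated_pairs + 1, pairs + 1):  # pairs with both drives dead
        single = failed - 2 * full                       # pairs with exactly one dead drive
        if single < 0:
            break
        # which pairs are fully dead, which are half dead, and which half died
        lost += comb(pairs, full) * comb(pairs - full, single) * 2 ** single
    return Fraction(lost, total)


rows = {
    name: [round(1000 * p_backup_pairs(8, tol, k)) for k in range(1, 12)]
    for name, tol in (("1+0", 0), ("1+5", 1), ("1+6", 2))
}
for name, row in rows.items():
    print(name, row)
```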

Furthermore, consider a RAID 0 quadruple of RAID 1 triples, i.e. RAID 1+0 triple mirroring of 12 drives, and a RAID 6 sextuple of RAID 1 pairs, i.e. RAID 1+6 of 12 drives. Again, these are identical 1TB drives. Both layouts have the same number of drives (12), the same amount of storage capacity (4TB), the same proportion of redundancy (2/3), the same maximum write throughput (4×), and the same maximum read throughput (12×). Here are my calculations (so far):

Cumulative probability of resorting to backup, in thousandths, by number of failed drives:

| RAID | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1+0 (4×3) | 0 | 0 | 18 | ? | ? | ? | ? | ? | 1000 |
| 1+6 (6×2) | 0 | 0 | 0 | 0 | 0 | 22 | 152 | 515 | 1000 |

Yes, this may look like overkill, but where triple mirroring is used to split off a clone for backup, RAID 1+6 can be used just as well, simply by freezing and removing 1 drive from each of all but 2 of the RAID 1 pairs. While doing so, it still has far better reliability when degraded than the degraded RAID 1+0 array. Here are my calculations for 12 drives degraded by 4 in this manner:

Cumulative probability of resorting to backup, in thousandths, by number of failed drives (parenthesised entries are the 4 drives removed for backup):

| RAID | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|
| 1+0 (4×3) | (0) | (0) | (0) | (0) | 0 | 143 | 429 | 771 | 1000 |
| 1+6 (6×2) | (0) | (0) | (0) | (0) | 0 | 0 | 71 | 414 | 1000 |

Read throughput, however, could be degraded down to 6× during this time for RAID 1+6, whereas RAID 1+0 is only reduced to 8×. Nevertheless, if a drive fails while the array is in this degraded state, the RAID 1+6 array would have a 50–50 chance of staying at about 6× or being limited further to 5×, whereas the RAID 1+0 array would be limited down to a 4× bottleneck. Write throughput should be pretty unaffected (it may even increase if the drives taken for backup were the limiting slowest drives).
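For the 12-drive comparisons, the drive counts are small enough to enumerate every failure combination directly, which also fills in the '?' entries and covers the degraded case where drives are pulled for backup. This is a brute-force sketch; the function name, drive ids, grouping and which drive is pulled from each set are illustrative assumptions (by symmetry, the choice of drive within a set doesn't matter).

```python
from fractions import Fraction
from itertools import combinations


def p_backup_enum(groups, tolerated, failed, pre_removed=()):
    """Brute-force P(data loss) for a RAID 1+x layout built from mirror `groups`.

    groups:      list of tuples of drive ids; each tuple is one RAID 1 set
    tolerated:   dead RAID 1 sets the top level survives (0 = RAID 0, 2 = RAID 6)
    failed:      number of further drive failures, every subset equally likely
    pre_removed: drives already pulled (e.g. split off for backup)
    """
    removed = set(pre_removed)
    live = [d for g in groups for d in g if d not in removed]
    lost = total = 0
    for dead in combinations(live, failed):
        gone = removed.union(dead)
        dead_sets = sum(all(d in gone for d in g) for g in groups)
        lost += dead_sets > tolerated
        total += 1
    return Fraction(lost, total)


def per_mille(groups, tolerated, ks, pre_removed=()):
    return [round(1000 * p_backup_enum(groups, tolerated, k, pre_removed)) for k in ks]


triples = [tuple(range(i, i + 3)) for i in range(0, 12, 3)]  # RAID 1+0, 4x3
pairs = [tuple(range(i, i + 2)) for i in range(0, 12, 2)]    # RAID 1+6, 6x2

print("1+0 (4x3)", per_mille(triples, 0, range(1, 10)))
print("1+6 (6x2)", per_mille(pairs, 2, range(1, 10)))

# Degraded by 4: one drive from each triple, or from 4 of the 6 pairs;
# k counts failures after those 4 removals
split_10 = [g[0] for g in triples]
split_16 = [g[0] for g in pairs[:4]]
print("1+0 degraded", per_mille(triples, 0, range(1, 6), split_10))
print("1+6 degraded", per_mille(pairs, 2, range(1, 6), split_16))
```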

In fact, both can be thought of as ‘triple mirroring’ because the degraded RAID 1+6 array is capable of splitting off an additional RAID 6 group of 4 drives. In other words, this 12-drive RAID 1+6 layout can be divided into 3 degraded (but functional) RAID 6 arrays!

So is it just that most people haven't gone into the maths in detail? Will we be seeing more RAID 1+6 in the future?

-

JamesRyan over 9 years: Your throughput calculation doesn't seem to have taken into account the write amplification needed to create the parity.

-

James Haigh over 9 years: @JamesRyan: Yes, I have indeed considered that the parity needs writing. That's what the “storage capacity / array size” is for – the reciprocal of this is the write amplification factor, not including further write amplification associated with solid-state drives. Note that this includes the write amplification of the RAID 1 redundancy as well. Basically, the write amplification factor is equal to the reciprocal of 1 minus the proportion of redundancy. So 50% redundancy gives a write amplification factor of 2; 62.5% (10/16) redundancy gives a write amplification factor of ~2.67 (16/6).

-

JamesRyan over 9 years: No, that is incorrect. Each RAID6 write takes 6 IOs and each RAID1 write takes 2 IOs; these are multiplicative. So in RAID 1+6 each write will take 12 IOs, while for RAID 10 it is 2 IOs. Write throughput on 12 drives will be 1x for RAID1+6 and 6x for RAID10!

-

James Haigh over 9 years: @JamesRyan: Oh, I see where you're going with this now – for writes that are less than a full stripe, the write amplification factor can double for RAID 1+6, thus halving the maximum write throughput. For a full stripe, yes, there are 12 writes in the 6×2 example, but you forget that this is for 4 chunks' worth of data. For 4, 3, 2, 1 chunks' worth respectively, the write amplification factors are (6×2)/4 = 3, (5×2)/3 = ~3.33, (4×2)/2 = 4, (3×2)/1 = 6, giving maximum write throughputs of 4×, 3.6×, 3×, 2×. For RAID 1+0 4×3 it's (4×3)/4, (3×3)/3, (2×3)/2, (1×3)/1, giving a constant 4×. …

-

James Haigh over 9 years: …However, this depends on chunk size and the size of writes, which I've now noted in the question. If writes are typically not filling the stripes then it may be worth decreasing the chunk size.

-

JamesRyan over 9 years: No, writing 4 chunks at once does not magically increase drive throughput by 4 :) A less-than-optimal chunk size can only lower throughput from the theoretical maximum.

-

James Haigh over 9 years: “the write amplification factor can double for RAID 1+6” – Well, this can apply to RAID levels with parities in general, depending on the number of drives. As the number of drives tends to infinity, single parity converges to an absolute maximum of double the write amplification for single-chunk writes (worst case) compared to writes that fill a stripe; double parity converges to triple in the worst case.

-

JamesRyan over 9 years: I think you are quoting something without understanding it. What you are saying specifically about write amplification is technically correct only because RAID6 requires 3 writes, the same as a 2nd mirror. But what you are missing is that parity in RAID6 also requires reads. The disk IOs limit throughput, not just the disk writes.

-

James Haigh over 9 years: @JamesRyan: Not magically, no. If you're getting 1/3 of 12×, i.e. writing a 4-chunk stripe occupies all 12 drives with a chunk each, then yes, you get 4×. Writes that fill a stripe only have to write the parities once, rather than writing each chunk separately, which would require the parities to be rewritten for each chunk (and would also require reads if the other chunks aren't cached somewhere). This will lower throughput from the theoretical maximum, but can be avoided by choosing a sensible chunk size.

-

James Haigh over 9 years: “But what you are missing is that parity in RAID6 also requires reads.” – Only when not filling a stripe. Again, this depends on chunk size and the size of writes.

-

JamesRyan over 9 years: You don't get 4 chunks written for the price of one. Throughput per drive is dependent on the amount of data, so that has already been cancelled out. The number of reads does not depend on chunk size; reads are always required to calculate parity. It might be helpful if you linked where you are getting these ideas from.

-

JamesRyan over 9 years: I think basically you have read someone generalising the throughput calculation and are trying to apply it as if it is a rule when it isn't. And it certainly doesn't work for nested situations that are multiplicative.

-

James Haigh over 9 years: @JamesRyan: What? These are my own calculations! Based on the examples that I've given! I have taken into consideration the nesting (which of course I'm aware of, given that I'm asking about why a particular nested layout isn't used over other nested layouts) and I understand the multiplicative nature. You're just wasting my time now. And yours. And possibly other people's. I still need to complete the calculations for 5 more probabilities in the 2nd example (which are more tricky than the others), but it's clear that they'll all be greater than 0.018 (1/11 × 2/10) and less than 1.

-

JamesRyan over 9 years: You stated, based on your calculations, that RAID1+6 has the same write throughput as RAID10 with triples. In reality RAID1+6 has not even remotely the write throughput of RAID10, so your calculations or the assumptions they are based on are wrong. I was trying to help you understand why; if you refuse to listen then we might be wasting our time, but it is you who is wasting it.

-

James Haigh over 9 years: @JamesRyan: “no that is incorrect.” – Actually we were both incorrect, but there's some truth in what we both say. It turns out that write amplification is far more complicated when considering chunk and write sizes. I was talking solely about write throughput amplification (regarding large writes), whereas you may be talking about write seek amplification (significant for small writes). Sorry for the confusion! I hadn't noticed 'til now that these 2 things behave very differently mathematically – see my answer for details.

-

James Haigh over 9 years: “For 4, 3, 2, 1 chunks-worth respectively, the write amplification factors are (6×2)/4 = 3, (5×2)/3 = ~3.33, (4×2)/2 = 4, (3×2)/1 = 6, giv…” – Correction: This was forgetting reads; taking the reads into consideration, the write throughput amplification factors are (6×2 + 0)/4 = 3, (5×2 + 1)/3 = ~3.67, (4×2 + 2)/2 = 5, (3×2 + 2)/1 = 8, giving maximum write throughputs of 4×, ~3.27×, 2.4×, 1.5×. I derive the general RAID 1+6 write throughput amplification factor formula to be

$$\frac{(v + 2)\,n_1 + \min\left(2,\ n_6 - (v + 2)\right)}{v}$$

where $v$ is the virtual number of chunks, and $n_6 \times n_1$ is the RAID 1+6 layout (6×2).
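The corrected formula can be checked numerically. This sketch simply evaluates it as written for the 6×2 example and converts the factor into a maximum write throughput in drives' worth (total drives divided by the factor); the function names are hypothetical. It models throughput for a write of v chunks, not the per-request seek cost that JamesRyan is describing.

```python
from fractions import Fraction


def wta_raid16(v: int, n6: int, n1: int) -> Fraction:
    """Write throughput amplification for RAID 1+6, evaluated as in the formula.

    v:  chunks of new data in the write (1 .. n6 - 2)
    n6: RAID 6 members; n1: drives per RAID 1 set
    """
    if not 1 <= v <= n6 - 2:
        raise ValueError("v must be between 1 and n6 - 2")
    # (data + 2 parity chunks) written to every mirror copy, plus capped reads
    return Fraction((v + 2) * n1 + min(2, n6 - (v + 2)), v)


def max_write_throughput(v: int, n6: int, n1: int) -> Fraction:
    """Drives' worth of write throughput: total drives / amplification."""
    return Fraction(n6 * n1) / wta_raid16(v, n6, n1)


for v in (4, 3, 2, 1):
    a = wta_raid16(v, 6, 2)
    print(f"v={v}: amplification {float(a):.2f}, "
          f"max write throughput {float(max_write_throughput(v, 6, 2)):.2f}x")
```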

-

-

James Haigh over 9 years: The issue that I have with RAID 1+0 is that it has a bad ratio of reliability to storage. If RAID 6 were arbitrarily extensible to any number of parities (below n - 1), then for the same drives you could achieve both increased storage and better reliability than RAID 1+0. For the example above, if it were possible to have RAID 6 with 4 parities, you'd have 50% more storage and maximum write throughput than RAID 1+0, yet have exceptionally higher reliability. RAID 6 with 3 or 4 parities would have a good reliability–storage trade-off.

-

ravi yarlagadda over 9 years: @JamesHaigh RAID 6 vs RAID 1+0 is a much different discussion than RAID 1+6 vs RAID 1+0; you kinda changed the subject. ZFS's raidz3 seems like it'd be up your alley? Anyway, to your point, there are some performance advantages that RAID 1+0 maintains over RAID 6, such as small single-block writes needing to touch a far smaller number of drives (and back to raidz3, ZFS handles this intelligently by writing multiple full copies instead of writing to all disks for small writes).

-

James Haigh over 9 years: Sorry, yes, I think that this is really what I'm chasing. Since that last comment I've been writing a new question specifically about RAID with 3 or more parities. That would be better than RAID 1+6, I think. It would also be more flexible and simpler to get the desired trade-off. You may want to continue this over on that question.

-

Sobrique over 9 years: RAID 6 can't be linearly extended, because it doesn't work that way. The syndrome computation for the second parity won't trivially scale to a third parity. But you can quite easily do smaller RAID 6 groups: there's no real reason you need to do 14+2, and you could instead do 2+2 or 4+2 and gain a lot of reliability.

Sobrique over 9 years: There's a demand in the form of array replication. I know several sites that do multi-site DR, which is practically speaking RAID 10 or 5 or 6 replicated to a remote (RAID 10 or 5 or 6) site. In no small part that's because, beyond a certain level of disk reliability, your processors, controllers, networks, power, aircon and datacentre-catching-fire are bigger threats to your reliability.

ewwhite over 9 years: I don't think the OP even considered replication or multi-site use.

Sobrique over 9 years: No, probably not. As you say, there's just no demand because it's overkill. It's the only use case I can think of where it isn't overkill, though :)

James Haigh over 9 years: “RAID group reliability doesn't protect you against accidental deletion. (so you need the backups anyway)” – I didn't imply that this makes backups unnecessary (I'm well aware that RAID is not a backup). I actually imply the converse by saying “cumulative probabilities of resorting to backup” – I'm taking it as given that backups are standard practice. I agree with this point; however, it is presented as countering my reasoning about RAID 1+6, which doesn't make sense.

-

James Haigh over 9 years: “RAID 61” – RAID 6+1 would be a RAID 1 array of RAID 6 arrays. That's a reversed nesting, and I think it would have much less reliability. I.e., what happens if 3 drives fail in the same nested RAID 6 array? Doesn't that whole nested RAID 6 array need rebuilding? The same drives nested as RAID 1+6 would sustain those same 3 drive failures without taking any working drives offline.

-

James Haigh over 9 years: “beyond certain levels of RAIDing, your odds of a compound failure on disks becomes lower than compound failure of supporting infrastructure (power, network, aircon leak, etc.)”; “it's a further 25% reduction” – True and true, it's an overkill nesting layout. But then why on Earth would anyone use a RAID 0 array of RAID 1 triples? Thanks for reminding me about RAID 1+0 triple mirroring! “I haven't done the number crunching”; “Or doing 3 way mirrors instead.” – You really should do some calculations before giving a supporting case as a counterexample. These calculations should be explored…

-

Sobrique over 9 years: 1 - Because your risk is no longer 'data loss' so much as 'temporary outage', which means the cost-benefit is reduced. 2 - Take your pick. I have used mirrored R6 groups for DR. 3 - Because your approximations no longer hold. They're fine for per-drive comparisons, but as your failure rate tends to zero, other factors become more significant. These are much harder to model naively, so I'm not even going to try.

James Haigh over 9 years: Sure, these risks for RAID 1+6 are much lower than many other risks, but what I'm trying to get at is that it's more ‘risk-efficient’ compared to other layouts. I've added the 12-drive RAID 1+0 triple mirroring example to the question – this is an excellent example because it is a square-on comparison of the probabilities that demonstrates the better risk efficiency of RAID 1+6, with the performance characteristics pretty much identical provided that it's implemented/configured correctly. If people feel the need for triple mirroring then maybe RAID 1+6 isn't overkill for some applications.

-

James Haigh over 9 years: Oh, I see what you mean. The triple mirrors allow you to simply remove a clone and do a backup. Okay, I didn't think of that. I was only thinking about backups done at a higher level, such as taking an atomic snapshot on a copy-on-write filesystem then copying that snapshot elsewhere. Can you not use RAID 1+6 for multisite mirroring in the same way that you did for RAID 6+1? I.e. have multisite RAID 1 arrays in a RAID 6 array. (Btw., I've been interpreting ‘DR’ as ‘disaster recovery’, but it could be a couple of other things. I take it that by ‘RG’ you mean ‘RAID group’. Can you confirm these?)

-

Sobrique over 9 years: Yes, those are what I meant. A RAID5 or 6 that you then mirror to another RAID5 or 6 makes sense. I'm not sure how you'd do it the other way around, as you'd need multiple sites involved in the RAID parity calc.

James Haigh over 9 years: I've just realised that RAID 1+6 also allows you to split off for backup, simply by freezing and removing 1 drive from each of all but 2 of the RAID 1 pairs, and while doing so, it still has far better reliability when degraded than the degraded RAID 1+0 array! :-D (Also, I've just noticed and read your edit.)

-

fgbreel over 9 years: Backblaze has used RAID6 for a long time because it has little overhead for storing the parity.

-

Sobrique over 9 years: Actually you'd need all your RAID 1 pairs, to avoid having to reconstruct your RAID group. And you still have the RAID-6 write penalty: 12 now, because of mirroring, rather than the 3 of a triple mirror. We already eschew RAID6 for a lot of scenarios because of write penalty; it's just not worth it unless you're running low-grade disks.

Sobrique over 9 years: You're misunderstanding what write penalty is. For a single overwrite, you must read your target block and your two parity blocks, compute parity, then write back to your two parity devices and your target block. Thus 6 IOs per 'write'. This is not a software or implementation limitation. You can mitigate it with good write caching, but only partially.
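The 6-I/O count for a single-chunk overwrite falls out of how RAID 6 updates P and Q incrementally. Below is a minimal sketch with one byte per drive, assuming GF(2^8) with the polynomial 0x11D and generator 2 (the convention described for Linux md RAID 6); the stripe layout and function names are hypothetical. A write that fills the whole stripe would instead compute P and Q from the new data alone, with no reads.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply in GF(2^8) modulo x^8 + x^4 + x^3 + x^2 + 1 (0x11D)."""
    r = 0
    while b:
        if b & 1:
            r ^= a          # addition in GF(2^8) is XOR
        a <<= 1
        if a & 0x100:
            a ^= 0x11D      # reduce back into 8 bits
        b >>= 1
    return r


def gf_pow2(i: int) -> int:
    """g^i with generator g = 2."""
    r = 1
    for _ in range(i):
        r = gf_mul(r, 2)
    return r


def full_stripe_parity(data):
    """P = XOR of the data; Q = XOR of g^i * D_i."""
    p = q = 0
    for i, d in enumerate(data):
        p ^= d
        q ^= gf_mul(gf_pow2(i), d)
    return p, q


def small_write(stripe, i, new):
    """Overwrite data chunk i in place; return the back-end I/Os it needed."""
    old, p, q = stripe["data"][i], stripe["p"], stripe["q"]  # 3 reads
    delta = old ^ new                                         # change in D_i
    stripe["data"][i] = new                                   # 3 writes
    stripe["p"] = p ^ delta
    stripe["q"] = q ^ gf_mul(gf_pow2(i), delta)
    return [f"read D{i}", "read P", "read Q", f"write D{i}", "write P", "write Q"]


data = [0x12, 0x34, 0x56, 0x78]
p, q = full_stripe_parity(data)
stripe = {"data": list(data), "p": p, "q": q}
ios = small_write(stripe, 2, 0xAB)
print(len(ios), ios)
```

Because GF(2^8) multiplication distributes over XOR, updating P and Q with the delta gives the same parity as recomputing the whole stripe, which is why the other data chunks never need to be read.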

James Haigh over 9 years: So synonymous with write amplification. Please see my comments in the question's main comment section about that. Particularly this one, i.e. when writing a full stripe you can just clobber the whole stripe, including the parity.

-

Basil over 9 years: I've (briefly) configured something like a RAID 6+1: a NetApp local SyncMirror will create an identical copy of itself and multiplex reads across both plexes, while mirroring writes. It's mostly used for migrating NetApp V-Series to new back-end LUNs; however, if I wanted to double my reliability, I could do that with this.

-

Sobrique over 9 years: I'm aware of the purpose of write caching. The assumption that you can ignore the problem, however, is mistaken. It's a partial solution to the problem.

JamesRyan over 9 years: Please state your source for this information. A practical test with large or tiny writes does not concur with the performance you have suggested.

-

James Haigh over 9 years: @JamesRyan: This is not second-hand information. The theoretical outcomes are derived from the fundamentals of how standard RAID levels work. All that's needed for the theory is an understanding of how RAID works and an understanding of logic and mathematical derivation. If these calculations were done by someone else then I would of course state this and provide links for reference if possible. Note that there are many ways in which a practical RAID 1+6 implementation can be suboptimal, but different implementations will vary. What I'd like to know is why your practical test doesn't concur.

-

James Haigh over 9 years: @JamesRyan: Please could you give more details on what implementation you used, what drives you used, in which configurations, with what benchmarking methods? Did you try both a RAID 6 array of 6 RAID 1 pairs and a RAID 0 array of 4 RAID 1 triples with the same 12 drives and chunk size? Was it a software RAID?

-

killermist over 9 years: I agree, but that's primarily because what you said is just a super summed-up version of what I said. And of course I agree with myself.

-

killermist over 9 years: @JamesHaigh What you seem to be wanting is a 12-way raidz8. Based on the logic that goes into parity calculations, that's going to peg out processors in perpetuity even with trivial data. Single parity is essentially XOR (easy). Dual parity is something to do with squares (not hard, but not easy). Triple parity is cube-based or similar (hard). 4, 5, 6, 7, or 8 parity demands even larger (by an exponential scale) calculations (which might need quantum computers to keep up with). Just remember that as the shape grows, there is ZERO increase in IOPS. For media, who cares? For VMs, it kills.

-

maaartinus over 6 years: @killermist You're wrong on at least two points. The computational complexity does not grow, with one exception: XOR is a trivial case, much simpler than the other functions involved. Error-correcting codes are well known and have been in use for many decades. The load is pretty trivial for a contemporary CPU, and even more so since carry-less multiplication is a CPU instruction.

-

killermist over 5 years: @maaartinus Prove your assertion. Let's start with the simplest case: a 3-drive raidz (raid5). A xor B = C. How do you add more parity (D) in a CPU-trivial way? Square and sqrt are almost trivial, but far more complicated than xor. Then how would you calculate a third parity (E)? And how could that possibly be processor-trivial? With only immaculate A+E, you must be able to recreate the immaculate data, and then be able to reproduce B, C, and D. A xor B xor C = 0. If D=0, A xor D = [not data], and A [something] D [MUST equal] [data]. Let's not even get into E or F. How's that trivial?

-

maaartinus over 5 years: @killermist I'd bet there's no squaring, no sqrt and no floating-point operations in any parity calculation, though I don't know all types of ECC. Most of them use some Galois field multiplication. As a trivial second (and third, etc.) parity, you could use more dimensions, but that's not optimal. You don't have to guess how expensive sqrt is, as you don't use it.

-

killermist over 5 years: @maaartinus Please elaborate. A [something-1] D = [data]. This something-1 ain't trivial. A [something-2] E = [data]. Something-2 very totally isn't trivial.
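To make the Galois-field point in this exchange concrete, here is a minimal sketch of RAID 6 recovering two lost data drives from P and Q, one byte per drive. It assumes GF(2^8) with polynomial 0x11D and generator 2 (the common Linux md RAID 6 convention); the function names are hypothetical. Only XOR and GF(2^8) multiplication/inversion are involved, with no squares or square roots. This is not any particular implementation's code, and production code typically uses lookup tables or SIMD instead of bit-by-bit multiplication.

```python
def gf_mul(a: int, b: int) -> int:
    """Multiply in GF(2^8) modulo x^8 + x^4 + x^3 + x^2 + 1 (0x11D)."""
    r = 0
    while b:
        if b & 1:
            r ^= a          # addition in GF(2^8) is XOR
        a <<= 1
        if a & 0x100:
            a ^= 0x11D      # reduce back into 8 bits
        b >>= 1
    return r


def gf_pow(a: int, n: int) -> int:
    r = 1
    for _ in range(n):
        r = gf_mul(r, a)
    return r


def gf_inv(a: int) -> int:
    # The multiplicative group has 255 elements, so a^254 == a^-1 for a != 0
    return gf_pow(a, 254)


def pq(data):
    """P = XOR of all data bytes; Q = XOR of g^i * D_i with g = 2."""
    p = q = 0
    for i, d in enumerate(data):
        p ^= d
        q ^= gf_mul(gf_pow(2, i), d)
    return p, q


def recover_two(damaged, x, y, p, q):
    """Rebuild data bytes x and y (marked None in `damaged`) from the rest, P and Q."""
    p_rest, q_rest = pq([0 if d is None else d for d in damaged])
    a = p ^ p_rest                     # D_x + D_y
    b = q ^ q_rest                     # g^x D_x + g^y D_y
    gx, gy = gf_pow(2, x), gf_pow(2, y)
    # b + g^y * a == (g^x + g^y) * D_x, so divide by (g^x + g^y)
    dx = gf_mul(b ^ gf_mul(gy, a), gf_inv(gx ^ gy))
    return dx, a ^ dx


data = [0x11, 0x22, 0x33, 0x44, 0x55, 0x66]
p, q = pq(data)
damaged = list(data)
damaged[1] = damaged[4] = None         # two drives lost
print(recover_two(damaged, 1, 4, p, q))
```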