Why is using hexadecimal in computing better than using octal?

Solution 1

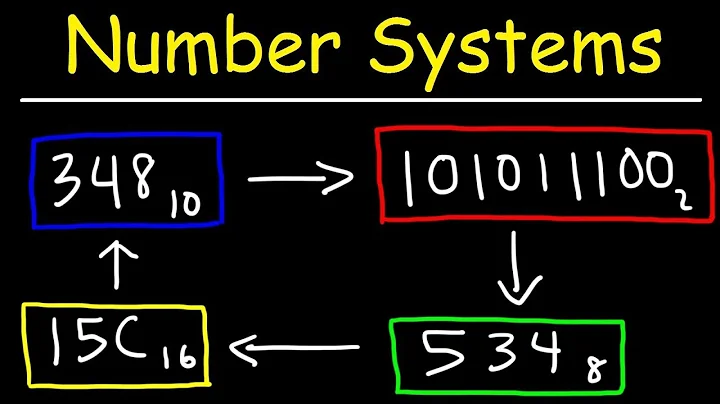

Well, the computer itself doesn't store values in hexadecimal; it stores them in binary. We do choose to *represent* them as hexadecimal digits, though, for one main reason: it's the easiest, most concise way to represent byte-oriented data. Each hex digit stands for exactly four bits, so one byte is always exactly two hex digits:

0110 1011 becomes 6B in hex
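To make the nibble-to-digit mapping concrete, here is a minimal Python sketch. The helper name `byte_to_hex` is illustrative, not something from the answer. It splits a byte into its high and low four-bit halves and looks each one up as a single hex digit, then checks the result against Python's built-in formatting.

```python
HEX_DIGITS = "0123456789ABCDEF"

def byte_to_hex(value):
    """Convert one byte (0-255) to two hex digits by splitting it into nibbles."""
    if not 0 <= value <= 0xFF:
        raise ValueError("expected a single byte (0-255)")
    high = value >> 4    # upper four bits -> first hex digit
    low = value & 0x0F   # lower four bits -> second hex digit
    return HEX_DIGITS[high] + HEX_DIGITS[low]

b = 0b0110_1011
print(byte_to_hex(b))    # 6B
print(format(b, "02X"))  # 6B (built-in formatting agrees)
```

Because each digit depends only on its own four bits, you can read or edit either half of the byte without touching the other.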

Octal groups the bits in threes. Since a byte's 8 bits don't divide evenly by 3, the digit boundaries can't line up with byte boundaries:

01 101 011 becomes 153 in octal

Note that the most significant octal digit of a byte covers only two bits, so it will never be greater than 3.
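The same byte can be converted to octal by peeling off three bits at a time from the least significant end, as in this minimal Python sketch. The helper name `byte_to_octal` is hypothetical. It also checks every possible byte to confirm that the leading octal digit tops out at 3.

```python
def byte_to_octal(value):
    """Convert one byte to octal by taking 3-bit groups from the least significant end."""
    if not 0 <= value <= 0xFF:
        raise ValueError("expected a single byte (0-255)")
    digits = []
    for _ in range(3):                      # 8 bits need ceil(8 / 3) = 3 octal digits
        digits.append(str(value & 0b111))   # lowest three bits
        value >>= 3
    return "".join(reversed(digits))

print(byte_to_octal(0b01101011))  # 153
# The leading digit only ever sees the top two bits of the byte:
print(max(int(byte_to_octal(v)[0]) for v in range(256)))  # 3
```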

Solution 2

From Wikipedia:

> All modern computing platforms, however, use 16-, 32-, or 64-bit words, further divided into eight-bit bytes. On such systems three octal digits per byte would be required, with the most significant octal digit representing two binary digits (plus one bit of the next significant byte, if any). Octal representation of a 16-bit word requires 6 digits, but the most significant octal digit represents (quite inelegantly) only one bit (0 or 1). This representation offers no way to easily read the most significant byte, because it's smeared over four octal digits. Therefore, hexadecimal is more commonly used in programming languages today, since two hexadecimal digits exactly specify one byte.
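The quoted claims about 16-bit words can be checked directly. Below is a small Python sketch; the word `0xABCD` and the helper `octal_digits_touched_by` are illustrative choices, not part of the quote. It prints the same word in hex and octal, and lists which octal digit positions contain at least one bit of the high byte.

```python
def octal_digits_touched_by(mask, width=16):
    """Positions (0 = least significant) of the octal digits that hold any bit set in mask."""
    n_digits = -(-width // 3)  # ceiling division: 16 bits need 6 octal digits
    return [i for i in range(n_digits) if (mask >> (3 * i)) & 0b111]

word = 0xABCD
print(format(word, "04X"))  # ABCD: the high byte is simply the first two digits, "AB"
print(format(word, "06o"))  # 125715: the high byte 0xAB is not readable anywhere
print(format(0xFFFF, "o"))  # 177777: the leading octal digit can only be 0 or 1
print(octal_digits_touched_by(0xFF00))  # [2, 3, 4, 5]: the high byte spans four digits
```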


Luke Vo

Updated on September 18, 2022

Comments

-

Luke Vo over 1 year

What benefits does hexadecimal provide? And is octal really less common than hexadecimal?

-

Franz Wong over 9 years

As an aside, the dotted-decimal IP address 192.168.0.1 is the unsigned int 3232235521, which is how it's actually stored (which translates into the binary you've posted, but without the dots).
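The arithmetic behind that number can be sketched in a few lines of Python. The function name `ipv4_to_uint32` is hypothetical. It shifts each octet into the next 8 bits of a 32-bit value, and the hex form then shows each octet as exactly two digits, which ties back to the original question.

```python
def ipv4_to_uint32(address):
    """Pack a dotted-decimal IPv4 address into one unsigned 32-bit integer."""
    octets = [int(part) for part in address.split(".")]
    if len(octets) != 4 or not all(0 <= o <= 255 for o in octets):
        raise ValueError(f"not a dotted-decimal IPv4 address: {address!r}")
    value = 0
    for octet in octets:
        value = (value << 8) | octet  # each octet occupies the next 8 bits
    return value

n = ipv4_to_uint32("192.168.0.1")
print(n)                 # 3232235521
print(format(n, "08X"))  # C0A80001: C0=192, A8=168, 00=0, 01=1
print(format(n, "o"))    # 30052000001: octet boundaries don't line up
```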