Why won't environment variables added to .profile show up in screen?

Solution 1

The answer is:

I should set them in .pam_environment

See here https://help.ubuntu.com/community/EnvironmentVariables

I imagine this is one of the most common questions; I don't know why nobody gave me the proper answer when I asked it.

Solution 2

From the Bash manual:

When bash is invoked as an interactive login shell, or as a non-interactive shell with the --login option, it first reads and executes commands from the file /etc/profile, if that file exists. After reading that file, it looks for ~/.bash_profile, ~/.bash_login, and ~/.profile, in that order, and reads and executes commands from the first one that exists and is readable.

That means if you have a .bash_profile or .bash_login in your home folder, bash won't read your .profile. Additionally, when bash is not started as a login shell, .profile won't be read either.
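The lookup order quoted above can be checked with a short script. This is only a sketch: first_login_file is a hypothetical helper name, not part of bash, and it only tests whether the files are readable, in the order the manual gives. The login_shell option queried at the end is a real bash shopt.

```bash
# Hypothetical helper: print the first per-user login file bash would read.
# The body runs in a subshell (note the parentheses) so its variables stay local.
first_login_file() (
    home_dir="${1:-$HOME}"
    for name in .bash_profile .bash_login .profile; do
        if [ -r "$home_dir/$name" ]; then
            printf '%s\n' "$home_dir/$name"
            exit 0
        fi
    done
    exit 1
)

# login_shell is a read-only shopt that bash sets when it starts as a login shell.
if [ -n "${BASH_VERSION:-}" ] && shopt -q login_shell; then
    echo "login shell; per-user file read: $(first_login_file || echo none)"
else
    echo "not a bash login shell; this shell did not read ~/.profile itself"
fi
```

Running it once from a console login and once inside screen should show whether the two shells differ in login status.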

Solution 3

echo $0

will print the name of the script being executed when it is run inside a shell script. In a terminal, it prints the name the shell was invoked with, which tells you the type of shell being used.

You are using the bash shell. You need to add this line

export LD_LIBRARY_PATH=/home/dspies/workspace/hdf5-1.8.11-linux-shared/lib

to your ~/.bash_profile file.
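One caveat, following from the Bash manual excerpt in Solution 2: once ~/.bash_profile exists, bash no longer reads ~/.profile at login. A minimal sketch of a ~/.bash_profile that keeps both in effect might look like this; it assumes you want ~/.profile sourced first, and the library path is the one from this answer.

```bash
# Sketch of a ~/.bash_profile (assumption: ~/.profile should still apply).
# Bash stops at the first login file it finds, so source ~/.profile explicitly.
if [ -r "$HOME/.profile" ]; then
    . "$HOME/.profile"
fi

# The variable from the answer above.
export LD_LIBRARY_PATH=/home/dspies/workspace/hdf5-1.8.11-linux-shared/lib
```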

EDIT:

You can find the login profile script names for different shells here.

EDIT2:

I also got two different results when I ran echo $0 on two different machines: bash on one and -bash on the other. I asked about that here, and this was the answer:

Processes with a - at the beginning of arg 0 have been run via login, or by exec -l in bash.

After reading that answer, I ran help exec, which says: "If the first argument is '-l', then place a dash in the zeroth arg passed to FILE, as login does."
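The effect of exec -l on $0 can be seen directly. This is a small sketch that assumes bash is on the PATH; the variable names are only illustrative. --noprofile is used so the login-style shell does not run any startup files during the demonstration.

```bash
# $0 as seen by a plain child bash.
plain_arg0=$(bash -c 'echo "$0"')

# $0 as seen by a bash started through exec -l, which prefixes arg 0 with a dash.
login_arg0=$(bash -c 'exec -l bash --noprofile -c "echo \$0"')

echo "plain:   $plain_arg0"
echo "exec -l: $login_arg0"
```

The second value should start with a dash, matching the -bash seen on a console login.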

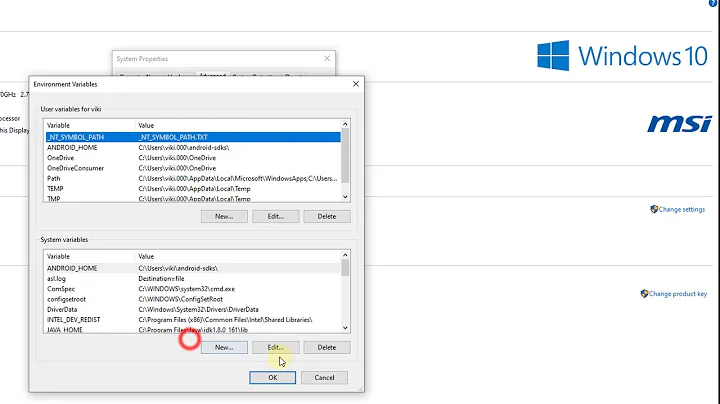

user2220127

Updated on September 18, 2022

Comments

-

user2220127 over 1 year

I am trying to build a new index, but I have run into some issues in Kibana. The Discover page has displayed "Searching..." for several hours now, on just a handful of data points. I think there may be an issue with the formatting?

The indices page shows that Average, Maximum, and Minimum are all numbers (analyzed = false, indexed = true). InstanceID, MetricName, and Region are all strings (analyzed = true, indexed = true). Timestamp is a date.

Does any of this indicate what the issue may be?

Edit: Additional Info from Warning: "Doc values are not enabled on this field. This may lead to excess heap consumption when visualizing" ...AND... "This is an analyzed string field. Analyzed strings are highly unique and can use a lot of memory to analyze"

Edit 2: A few notes: My mappings do not seem to be working properly.

Invoke-RestMethod "$URI/mytestindex/t2credbal" -Method post -body '{ "mytestindex": { "mappings": { "t2credbal": { "properties": { "timestamp": {"type":"date"}, "minimum": {"type":"number", "index":"no", "fielddata": { "format": "doc_values" } }, "maximum": {"type":"number", "index":"no", "fielddata": { "format": "doc_values" } }, "average": {"type":"number", "index":"no", "fielddata": { "format": "doc_values" } } } } } } }'

When I run the command above, my fields show up as mytestindex.mappings.t2credbal.properties.timestamp, instead of just timestamp.

My values are reported as Records.Timestamp (per the command below), with the proper field type, so I do not feel that specifying the mappings is strictly necessary in my case. However, Kibana is not able to search and analyze the data, although it is listed in Elasticsearch exactly as I anticipated.

$json= {Records: [ { "Minimum": 280.91, "Maximum": 280.97, "Average": 280.94416666666672, "Timestamp": "2015-04-27T13:12:00Z", "InstanceID": "i-65e2b951", "MetricName": "CPUCreditBalance", "Region": "eu-west-1" }

Invoke-RestMethod "$URI/mytestindex/t2credbal/" -Method Post -Body $json -ContentType 'application/json'

EDIT #3

I adjusted the timestamp format to one that has worked for me in my other index; however I am getting the following errors upon trying to visualize the timestamp field:

Error: Request to Elasticsearch failed: {"error":"SearchPhaseExecutionException[Failed to execute phase [query], all shards failed; shardFailures {[S73SynuOQzW4NKbwPN7tTg][mytestindex][0]: SearchParseException[[mytestindex][0]: query[ConstantScore(*:*)],from[-1],size[0]: Parse Failure [Failed to parse source [{\"size\":0,\"query\":{\"query_string\":{\"analyze_wildcard\":true,\"query\":\"*\"}},\"aggs\":{\"1\":{\"date_histogram\":{\"field\":\"Records.Timestamp\",\"interval\":\"0ms\",\"pre_zone\":\"-04:00\",\"pre_zone_adjust_large_interval\":true,\"min_doc_count\":1,\"extended_bounds\":{\"min\":1430158024806,\"max\":1430158924806}}}}}]]]; nested: ElasticsearchIllegalArgumentException[Zero or negative time interval not supported]; }{[S73SynuOQzW4NKbwPN7tTg][mytestindex][1]: SearchParseException[[mytestindex][1]: query[ConstantScore(*:*)],from[-1],size[0]: Parse Failure [Failed to parse source [{\"size\":0,\"query\":{\"query_string\":{\"analyze_wildcard\":true,\"query\":\"*\"}},\"aggs\":{\"1\":{\"date_histogram\":{\"field\":\"Records.Timestamp\",\"interval\":\"0ms\",\"pre_zone\":\"-04:00\",\"pre_zone_adjust_large_interval\":true,\"min_doc_count\":1,\"extended_bounds\":{\"min\":1430158024806,\"max\":1430158924806}}}}}]]]; nested: ElasticsearchIllegalArgumentException[Zero or negative time interval not supported]; }{[S73SynuOQzW4NKbwPN7tTg][mytestindex][2]: SearchParseException[[mytestindex][2]: query[ConstantScore(*:*)],from[-1],size[0]: Parse Failure [Failed to parse source [{\"size\":0,\"query\":{\"query_string\":{\"analyze_wildcard\":true,\"query\":\"*\"}},\"aggs\":{\"1\":{\"date_histogram\":{\"field\":\"Records.Timestamp\",\"interval\":\"0ms\",\"pre_zone\":\"-04:00\",\"pre_zone_adjust_large_interval\":true,\"min_doc_count\":1,\"extended_bounds\":{\"min\":1430158024806,\"max\":1430158924806}}}}}]]]; nested: ElasticsearchIllegalArgumentException[Zero or negative time interval not supported]; }{[S73SynuOQzW4NKbwPN7tTg][mytestindex][3]: SearchParseException[[mytestindex][3]: query[ConstantScore(*:*)],from[-1],size[0]: Parse Failure [Failed to parse source [{\"size\":0,\"query\":{\"query_string\":{\"analyze_wildcard\":true,\"query\":\"*\"}},\"aggs\":{\"1\":{\"date_histogram\":{\"field\":\"Records.Timestamp\",\"interval\":\"0ms\",\"pre_zone\":\"-04:00\",\"pre_zone_adjust_large_interval\":true,\"min_doc_count\":1,\"extended_bounds\":{\"min\":1430158024806,\"max\":1430158924806}}}}}]]]; nested: ElasticsearchIllegalArgumentException[Zero or negative time interval not supported]; }{[S73SynuOQzW4NKbwPN7tTg][mytestindex][4]: SearchParseException[[mytestindex][4]: query[ConstantScore(*:*)],from[-1],size[0]: Parse Failure [Failed to parse source [{\"size\":0,\"query\":{\"query_string\":{\"analyze_wildcard\":true,\"query\":\"*\"}},\"aggs\":{\"1\":{\"date_histogram\":{\"field\":\"Records.Timestamp\",\"interval\":\"0ms\",\"pre_zone\":\"-04:00\",\"pre_zone_adjust_large_interval\":true,\"min_doc_count\":1,\"extended_bounds\":{\"min\":1430158024806,\"max\":1430158924806}}}}}]]]; nested: ElasticsearchIllegalArgumentException[Zero or negative time interval not supported]; }]"}
at http://myurl.com/index.js?_b=5930:42978:38
at Function.Promise.try (http://myurl.com/index.js?_b=5930:46205:26)
at http://myurl.com/index.js?_b=5930:46183:27
at Array.map (native)
at Function.Promise.map (http://myurl.com/index.js?_b=5930:46182:30)
at callResponseHandlers (http://myurl.com/index.js?_b=5930:42950:22)
at http://myurl.com/index.js?_b=5930:43068:16
at wrappedCallback (http://myurl.com/index.js?_b=5930:20873:81)
at wrappedCallback (http://myurl.com/index.js?_b=5930:20873:81)
at http://myurl.com/index.js?_b=5930:20959:26

Edit #4

Fixed the timestamp format. Verified that the results are displayed as expected using the _search? syntax. Completely blew out and recreated my index, verified that all field names and types are correct. The Discover screen now displays "no results" instead of "Searching...". When I change the time interval from 15 minutes to any other value, I get the following:

Discover: Cannot read property 'indexOf' of undefined
TypeError: Cannot read property 'indexOf' of undefined
at Notifier.error (myurl/index.js?_b=5930:45607:23)
at Notifier.bound (myurl/index.js?_b=5930:32081:21)
at myurl/index.js?_b=5930:118772:18
at wrappedCallback (myurl/index.js?_b=5930:20873:81)
at myurl/index.js?_b=5930:20959:26
at Scope.$eval (myurl/index.js?_b=5930:22002:28)
at Scope.$digest (myurl/index.js?_b=5930:21814:31)
at Scope.$apply (myurl/index.js?_b=5930:22106:24)
at done (myurl/index.js?_b=5930:17641:45)
at completeRequest (myurl/index.js?_b=5930:17855:7)

-

gosalia almost 11 years

What is the output of echo $0?

-

dspyz almost 11 years

From within a terminal, it's just "bash"; in CTRL-ALT-F1, it's "-bash"; and in screen, it's "/bin/bash". Why? What is $0?

-

Nir Alfasi about 9 years

Did you specify which analyzer to use? If so, which one? Can you find the fields you want by accessing _search? (doing a lite search)

-

user2220127 about 9 years

I did not specify one... I'm pretty new to ES, so I don't know much about them yet. The sample datapoint I posted above is from a _search result.

-

Nir Alfasi about 9 years

This is not enough information. If you want, you can update your post with full details: index mapping and a few curl commands to insert data. This way it can be reproducible. Further, which versions of elasticsearch and kibana are you using?

-

user2220127 about 9 years

More edits made. Specifying mappings does not seem to be necessary, as Elasticsearch is properly analyzing the fields and listing them as the correct type in Kibana (string, number). Attempting to specify them seems to just create more issues.

-

user2220127 about 9 years

Also, I think the Timestamp field may be the source of the issue. Kibana shows 0 results when I attempt to visualize that field... I will try playing with the formatting on that.

-

Nir Alfasi about 9 years

Unless it's code you posted, "Timestamp": "\/Date(1430066400000)\/", is not a valid timestamp. If you refuse to post the mapping of this index, it will not be easy to help you.

-

user2220127 about 9 years

I converted the timestamp to the "Timestamp": "2015-04-27T12:20:00" format, but I am getting date interval errors now. See OP for full message.

-

Nir Alfasi about 9 years

Elasticsearch field names are case sensitive. Your mapping shows timestamp while you try to index Timestamp. I'm not sure if that's the core of the issue, but it's definitely worth testing. BTW, the same goes for the rest of the fields, e.g. minimum vs. Minimum.

-

user2220127 about 9 years

@alfasin thanks for all of your suggestions so far. The issue I am running into now seems to be a Kibana-related bug. Shards: 5 Successful: 5 Failed: 0

-

Nir Alfasi about 9 years

"Shards: 5 Successful: 5 Failed: 0" - related to which request?

-

user2220127 about 9 years

Sorry if I'm not making sense... After fixing the date format issues I was having, I used the /_search? API in Elasticsearch to pull my records. They all looked correct, and I confirmed that all 5 shards were successful, with no failures. Kibana no longer shows "Searching..."; it just says 0 results when I go to Discover, but then I get the error message above (in the OP) when I change the time picker to any interval other than Last 15 minutes.

-

-

user2220127 about 9 years

To follow up, I have completely resolved the issues. Kibana did not like me entering the stats as sub-types of "Records", so I adjusted my script to POST my JSON objects individually, using a foreach statement. By entering the data in the format { "Minimum": 279.29, "Maximum": 279.43, "Average": 279.35833333333335, "Timestamp": "2015-04-30T08:17:00Z", "InstanceID": "i-d0be991a", "MetricName": "CPUCreditBalance", "Region": "ap-southeast-1" }, I was able to display the data as intended.

-

Ajit Goel over 8 years

I have the same problem but the solution above did not work.