Windows TCP Window Scaling Hitting plateau too early

Solution 1

Have you tried enabling Compound TCP (CTCP) in your Windows 7/8 clients.

Please read:

Increasing Sender-Side Performance for High-BDP Transmission

http://technet.microsoft.com/en-us/magazine/2007.01.cableguy.aspx

...

These algorithms work well for small BDPs and smaller receive window sizes. However, when you have a TCP connection with a large receive window size and a large BDP, such as replicating data between two servers located across a high-speed WAN link with a 100ms round-trip time, these algorithms do not increase the send window fast enough to fully utilize the bandwidth of the connection.

To better utilize the bandwidth of TCP connections in these situations, the Next Generation TCP/IP stack includes Compound TCP (CTCP). CTCP more aggressively increases the send window for connections with large receive window sizes and BDPs. CTCP attempts to maximize throughput on these types of connections by monitoring delay variations and losses. In addition, CTCP ensures that its behavior does not negatively impact other TCP connections.

...

CTCP is enabled by default in computers running Windows Server 2008 and disabled by default in computers running Windows Vista. You can enable CTCP with the

netsh interface tcp set global congestionprovider=ctcpcommand. You can disable CTCP with thenetsh interface tcp set global congestionprovider=nonecommand.

Edit 6/30/2014

to see if CTCP is really "on"

> netsh int tcp show global

i.e.

PO said:

If I understand this correctly, this setting increases the rate at which the congestion window is enlarged rather than the maximum size it can reach

CTCP aggressively increases the send window

http://technet.microsoft.com/en-us/library/bb878127.aspx

Compound TCP

The existing algorithms that prevent a sending TCP peer from overwhelming the network are known as slow start and congestion avoidance. These algorithms increase the amount of segments that the sender can send, known as the send window, when initially sending data on the connection and when recovering from a lost segment. Slow start increases the send window by one full TCP segment for either each acknowledgement segment received (for TCP in Windows XP and Windows Server 2003) or for each segment acknowledged (for TCP in Windows Vista and Windows Server 2008). Congestion avoidance increases the send window by one full TCP segment for each full window of data that is acknowledged.

These algorithms work well for LAN media speeds and smaller TCP window sizes. However, when you have a TCP connection with a large receive window size and a large bandwidth-delay product (high bandwidth and high delay), such as replicating data between two servers located across a high-speed WAN link with a 100 ms round trip time, these algorithms do not increase the send window fast enough to fully utilize the bandwidth of the connection. For example, on a 1 Gigabit per second (Gbps) WAN link with a 100 ms round trip time (RTT), it can take up to an hour for the send window to initially increase to the large window size being advertised by the receiver and to recover when there are lost segments.

To better utilize the bandwidth of TCP connections in these situations, the Next Generation TCP/IP stack includes Compound TCP (CTCP). CTCP more aggressively increases the send window for connections with large receive window sizes and large bandwidth-delay products. CTCP attempts to maximize throughput on these types of connections by monitoring delay variations and losses. CTCP also ensures that its behavior does not negatively impact other TCP connections.

In testing performed internally at Microsoft, large file backup times were reduced by almost half for a 1 Gbps connection with a 50ms RTT. Connections with a larger bandwidth delay product can have even better performance. CTCP and Receive Window Auto-Tuning work together for increased link utilization and can result in substantial performance gains for large bandwidth-delay product connections.

Solution 2

Clarifying the Problem:

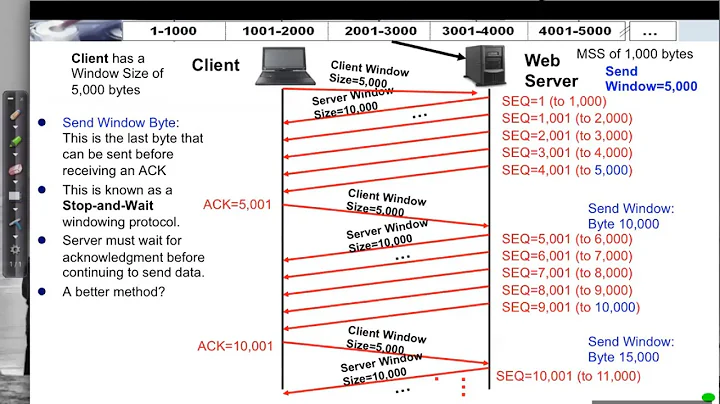

TCP has two windows:

- The receive window: How many bytes are left in the buffer. This is flow control imposed by the receiver. You can see the size of the receive window in the wireshark since it is made up of the window size and windowing scaling factor inside the TCP header. Both sides of the TCP connection will advertise their receive window, but generally the one you care about is the one receiving the bulk of the data. In your case, it is the "server" since the client is uploading to the server

- The congestion window. This is flow control imposed by the Sender. This is maintained by the operating system and does not show up in the TCP header. It controls the rate how fast data will be sent.

In the capture file you provided. We can see that the receive buffer is never overflowing:

My analysis is that the sender isn't sending fast enough because the send window (aka the congestion control window) isn't opening up enough to satisfy the RWIN of the receiver. So in short the receiver says "Give me More", and when Windows is the sender it isn't sending fast enough.

This is evidenced by the fact that in the above graph the RWIN stays open, and with the round trip time of .09 seconds and a RWIN of of ~ 500,000 bytes we can expect a max throughput according to the bandwidth delay product to be (500000/0.09) * 8 =~ 42 Mbit/s (and you are only getting about ~5 in your win to Linux capture).

How to Fix it?

I don't know. interface tcp set global congestionprovider=ctcp sounds like the right thing to do to me because it would increase the send window (which is another term for the congestion window). You said that is isn't working. So just to make sure:

- Did you reboot after enabling this?

- Is Chimney offload on? If it is maybe try turning it off as an experiment. I don't know what exactly gets offloaded when this enabled, but if controlling the send window is one of them, maybe congestionprovider has no effect when this is enabled... I'm just guessing...

- Also, I think this may be pre windows 7, but you might try adding and playing with the two registry keys called DefaultSendWindow and DefaultReceiveWindow in HKEY_LOCAL_MACHINE-System-CurrentControlSet-Services-AFD-Parameters. If these even work, you probably beed it have ctcp off.

- Yet another guess, try checking out

netsh interface tcp show heuristics. I think that might be RWIN, but it doesn't say, so maybe play with disabling/enabling that in case it impacts the send window. - Also, make sure your drivers are up to date on your test client. Maybe something is just broken.

I would try all these experiments with all you offloading features off to start with to eliminate the possibility that the network drivers are doing some rewriting/modifying of things (keep an eye CPU while offloading is disabled). The TCP_OFFLOAD_STATE_DELEGATED struct seems to at least imply that CWnd offloading is at least possible.

Solution 3

There's been some great info here by @Pat and @Kyle. Definitely pay attention to @Kyle's explanation of the TCP receive and send windows, I think there has been some confusion around that. To confuse matters further, iperf uses the term "TCP window" with the -w setting which is kind of an ambiguous term with regards to the receive, send, or overall sliding window. What it actually does is set the socket send buffer for the -c (client) instance and the socket receive buffer on the -s (server) instance. In src/tcp_window_size.c:

if ( !inSend ) {

/* receive buffer -- set

* note: results are verified after connect() or listen(),

* since some OS's don't show the corrected value until then. */

newTCPWin = inTCPWin;

rc = setsockopt( inSock, SOL_SOCKET, SO_RCVBUF,

(char*) &newTCPWin, sizeof( newTCPWin ));

} else {

/* send buffer -- set

* note: results are verified after connect() or listen(),

* since some OS's don't show the corrected value until then. */

newTCPWin = inTCPWin;

rc = setsockopt( inSock, SOL_SOCKET, SO_SNDBUF,

(char*) &newTCPWin, sizeof( newTCPWin ));

}

As Kyle mentions, the issue isn't with the receive window on the Linux box, but the sender isn't opening up the send window enough. It's not that it doesn't open up fast enough, it just caps at 64k.

The default socket buffer size on Windows 7 is 64k. Here's what the documentation says about the socket buffer size in relation to throughput at MSDN

When sending data over a TCP connection using Windows sockets, it is important to keep a sufficient amount of data outstanding (sent but not acknowledged yet) in TCP in order to achieve the highest throughput. The ideal value for the amount of data outstanding to achieve the best throughput for the TCP connection is called the ideal send backlog (ISB) size. The ISB value is a function of the bandwidth-delay product of the TCP connection and the receiver's advertised receive window (and partly the amount of congestion in the network).

Ok, blah blah blah, Now here we go:

Applications that perform one blocking or non-blocking send request at a time typically rely on internal send buffering by Winsock to achieve decent throughput. The send buffer limit for a given connection is controlled by the SO_SNDBUF socket option. For the blocking and non-blocking send method, the send buffer limit determines how much data is kept outstanding in TCP. If the ISB value for the connection is larger than the send buffer limit, then the throughput achieved on the connection will not be optimal.

The average throughput of your most recent iperf test using the 64k window is 5.8Mbps. That's from Statistics > Summary in Wireshark, which counts all the bits. Likely, iperf is counting TCP data throughput which is 5.7Mbps. We see the same performance with the FTP test as well, ~5.6Mbps.

The theoretical throughput with a 64k send buffer and 91ms RTT is....5.5Mbps. Close enough for me.

If we look at your 1MB window iperf test, the tput is 88.2Mbps (86.2Mbps for just TCP data). The theoretical tput with a 1MB window is 87.9Mbps. Again, close enough for government work.

What this demonstrates is that the send socket buffer directly controls the send window and that, coupled with the receive window from the other side, controls throughput. The advertised receive window has room, so we're not limited by the receiver.

Hold up, what about this autotuning business? Doesn't Windows 7 handle that stuff automatically? As has been mentioned, Windows does handle auto-scaling of the receive window, but it can also dynamically handle the send buffer too. Let's go back to the MSDN page:

Dynamic send buffering for TCP was added on Windows 7 and Windows Server 2008 R2. By default, dynamic send buffering for TCP is enabled unless an application sets the SO_SNDBUF socket option on the stream socket.

iperf uses SO_SNDBUF when using the -w option, so dynamic send buffering would be disabled. However, if you don't use -w then it doesn't use SO_SNDBUF. Dynamic send buffering should be on by default, but you can check:

netsh winsock show autotuning

The documentation says you can disable it with:

netsh winsock set autotuning off

But that didn't work for me. I had to make a registry change and set this to 0:

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\AFD\Parameters\DynamicSendBufferDisable

I don't think disabling this will help; it's just an FYI.

Why isn't your send buffer scaling above the default 64k when sending data to a Linux box with plenty of room in the receive window? Great question. Linux kernels also have an autotuning TCP stack. Like T-Pain and Kanye doing an autotune duet together, it just might not sound good. Perhaps there's some issue with those two autotuning TCP stacks talking to each other.

Another person had a problem just like yours and was able to fix it with a registry edit to increase the default send buffer size. Unfortunately, that doesn't seem to work anymore, at least it didn't for me when I tried it.

At this point, I think it's clear the limiting factor is the send buffer size on the Windows host. Given that it doesn't seem to be dynamically growing properly, what's a girl to do?

You can:

- Use applications that allow you to set the send buffer i.e. window option

- Use a local Linux proxy

- Use a remote Windows proxy?

- Open a case with Microsofhahahahahahaha

- Beer

Disclaimer: I have spent many many hours researching this and it is correct to the best of my knowledge and google-fu. But I wouldn't swear on my mother's grave (she's still alive).

Solution 4

Once you have the TCP stack tuned, you might still have a bottleneck in the Winsock layer. I have found that configuring Winsock (Ancillary Function Driver in the registry) makes a huge difference for upload speeds (pushing data to the server) in Windows 7. Microsoft has acknowledged a bug in the TCP autotuning for non-blocking sockets -- just the kind of socket that browsers use ;-)

Add DWORD key for DefaultSendWindow and set it to the BDP or higher. I am using 256000.

HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\services\AFD\Parameters\DefaultSendWindow

Changing the Winsock setting for downloads might help - add a key for DefaultReceiveWindow.

You can experiment with various socket level settings by using the Fiddler Proxy and commands to adjust the client and server socket buffer sizes:

prefs set fiddler.network.sockets.Server_SO_SNDBUF 65536

fiddler.network.sockets.Client_SO_SNDBUF

fiddler.network.sockets.Client_SO_RCVBUF

fiddler.network.sockets.Server_SO_SNDBUF

fiddler.network.sockets.Server_SO_RCVBUF

Solution 5

Having read all the analysis in the answers, this problem very much sounds like you might be running Windows7/2008R2 aka Windows 6.1

The networking stack (TCP/IP & Winsock) in Windows 6.1 was horribly flawed and had a whole host of bugs and performance problems which Microsoft eventually addressed over many years of hotfixing since the initial release of 6.1.

The best way to apply these hotfixes is to manually sift through all the relevant pages on support.microsoft.com and manually request and download the LDR versions of the network stack hotfixes (there are many dozens of these).

To find the relevant hotfixes, you must use www.bing.com with the following search query

site:support.microsoft.com 6.1.7601 tcpip.sys

You also need to understand how LDR/GDR hotfix trains work in Windows 6.1

I generally used to maintain my own list of LDR fixes (not just network stack fixes) for Windows 6.1 and then proactively applied these fixes to any Windows 6.1 server/client that I came across. It was a very time-consuming task to regularly check for new LDR hotfixes.

Luckily, Microsoft has stopped the practice of LDR hotfixes with newer OS versions and bugfixes are now available through automatic update services from Microsoft.

UPDATE: Just one example of many network bugs in Windows7SP1 - https://support.microsoft.com/en-us/kb/2675785

UPDATE 2: Here's another hotfix that adds a netsh switch to force Window scaling after the second retransmission of a SYN packet (by default, window scaling is disabled after 2 SYN packets are retransmitted) https://support.microsoft.com/en-us/kb/2780879

Related videos on Youtube

SmallClanger

Updated on September 18, 2022Comments

-

SmallClanger over 1 year

Scenario: We have a number of Windows clients regularly uploading large files (FTP/SVN/HTTP PUT/SCP) to Linux servers that are ~100-160ms away. We have 1Gbit/s synchronous bandwidth at the office and the servers are either AWS instances or physically hosted in US DCs.

The initial report was that uploads to a new server instance were much slower than they could be. This bore out in testing and from multiple locations; clients were seeing stable 2-5Mbit/s to the host from their Windows systems.

I broke out

iperf -son a an AWS instance and then from a Windows client in the office:iperf -c 1.2.3.4[ 5] local 10.169.40.14 port 5001 connected with 1.2.3.4 port 55185 [ 5] 0.0-10.0 sec 6.55 MBytes 5.48 Mbits/seciperf -w1M -c 1.2.3.4[ 4] local 10.169.40.14 port 5001 connected with 1.2.3.4 port 55239 [ 4] 0.0-18.3 sec 196 MBytes 89.6 Mbits/secThe latter figure can vary significantly on subsequent tests, (Vagaries of AWS) but is usually between 70 and 130Mbit/s which is more than enough for our needs. Wiresharking the session, I can see:

-

iperf -cWindows SYN - Window 64kb, Scale 1 - Linux SYN, ACK: Window 14kb, Scale: 9 (*512)

-

iperf -c -w1MWindows SYN - Windows 64kb, Scale 1 - Linux SYN, ACK: Window 14kb, Scale: 9

Clearly the link can sustain this high throughput, but I have to explicity set the window size to make any use of it, which most real world applications won't let me do. The TCP handshakes use the same starting points in each case, but the forced one scales

Conversely, from a Linux client on the same network a straight,

iperf -c(using the system default 85kb) gives me:[ 5] local 10.169.40.14 port 5001 connected with 1.2.3.4 port 33263 [ 5] 0.0-10.8 sec 142 MBytes 110 Mbits/secWithout any forcing, it scales as expected. This can't be something in the intervening hops or our local switches/routers and seems to affect Windows 7 and 8 clients alike. I've read lots of guides on auto-tuning, but these are typically about disabling scaling altogether to work around bad terrible home networking kit.

Can anyone tell me what's happening here and give me a way of fixing it? (Preferably something I can stick in to the registry via GPO.)

Notes

The AWS Linux instance in question has the following kernel settings applied in

sysctl.conf:net.core.rmem_max = 16777216 net.core.wmem_max = 16777216 net.core.rmem_default = 1048576 net.core.wmem_default = 1048576 net.ipv4.tcp_rmem = 4096 1048576 16777216 net.ipv4.tcp_wmem = 4096 1048576 16777216I've used

dd if=/dev/zero | ncredirecting to/dev/nullat the server end to rule outiperfand remove any other possible bottlenecks, but the results are much the same. Tests withncftp(Cygwin, Native Windows, Linux) scale in much the same way as the above iperf tests on their respective platforms.Edit

I've spotted another consistent thing in here that might be relevant:

This is the first second of the 1MB capture, zoomed in. You can see Slow Start in action as the window scales up and the buffer gets bigger. There's then this tiny plateau of ~0.2s exactly at the point that the default window iperf test flattens out forever. This one of course scales to much dizzier heights, but it's curious that there's this pause in the scaling (Values are 1022bytes * 512 = 523264) before it does so.

Update - June 30th.

Following up on the various responses:

- Enabling CTCP - This makes no difference; window scaling is identical. (If I understand this correctly, this setting increases the rate at which the congestion window is enlarged rather than the maximum size it can reach)

- Enabling TCP timestamps. - No change here either.

- Nagle's algorithm - That makes sense and at least it means I can probably ignore that particular blips in the graph as any indication of the problem.

- pcap files: Zip file available here: https://www.dropbox.com/s/104qdysmk01lnf6/iperf-pcaps-10s-Win%2BLinux-2014-06-30.zip (Anonymised with bittwiste, extracts to ~150MB as there's one from each OS client for comparison)

Update 2 - June 30th

O, so following op on Kyle suggestion, I've enabled ctcp and disabled chimney offloading: TCP Global Parameters

---------------------------------------------- Receive-Side Scaling State : enabled Chimney Offload State : disabled NetDMA State : enabled Direct Cache Acess (DCA) : disabled Receive Window Auto-Tuning Level : normal Add-On Congestion Control Provider : ctcp ECN Capability : disabled RFC 1323 Timestamps : enabled Initial RTO : 3000 Non Sack Rtt Resiliency : disabledBut sadly, no change in the throughput.

I do have a cause/effect question here, though: The graphs are of the RWIN value set in the server's ACKs to the client. With Windows clients, am I right in thinking that Linux isn't scaling this value beyond that low point because the client's limited CWIN prevents even that buffer from being filled? Could there be some other reason that Linux is artificially limiting the RWIN?

Note: I've tried turning on ECN for the hell of it; but no change, there.

Update 3 - June 31st.

No change following disabling heuristics and RWIN autotuning. Have updated the Intel network drivers to the latest (12.10.28.0) with software that exposes functioanlity tweaks viadevice manager tabs. The card is an 82579V Chipset on-board NIC - (I'm going to do some more testing from clients with realtek or other vendors)

Focusing on the NIC for a moment, I've tried the following (Mostly just ruling out unlikely culprits):

- Increase receive buffers to 2k from 256 and transmit buffers to 2k from 512 (Both now at maximum) - No change

- Disabled all IP/TCP/UDP checksum offloading. - No change.

- Disabled Large Send Offload - Nada.

- Turned off IPv6, QoS scheduling - Nowt.

Update 3 - July 3rd

Trying to eliminate the Linux server side, I started up a Server 2012R2 instance and repeated the tests using

iperf(cygwin binary) and NTttcp.With

iperf, I had to explicitly specify-w1mon both sides before the connection would scale beyond ~5Mbit/s. (Incidentally, I could be checked and the BDP of ~5Mbits at 91ms latency is almost precisely 64kb. Spot the limit...)The ntttcp binaries showed now such limitation. Using

ntttcpr -m 1,0,1.2.3.5on the server andntttcp -s -m 1,0,1.2.3.5 -t 10on the client, I can see much better throughput:Copyright Version 5.28 Network activity progressing... Thread Time(s) Throughput(KB/s) Avg B / Compl ====== ======= ================ ============= 0 9.990 8155.355 65536.000 ##### Totals: ##### Bytes(MEG) realtime(s) Avg Frame Size Throughput(MB/s) ================ =========== ============== ================ 79.562500 10.001 1442.556 7.955 Throughput(Buffers/s) Cycles/Byte Buffers ===================== =========== ============= 127.287 308.256 1273.000 DPCs(count/s) Pkts(num/DPC) Intr(count/s) Pkts(num/intr) ============= ============= =============== ============== 1868.713 0.785 9336.366 0.157 Packets Sent Packets Received Retransmits Errors Avg. CPU % ============ ================ =========== ====== ========== 57833 14664 0 0 9.4768MB/s puts it up at the levels I was getting with explicitly large windows in

iperf. Oddly, though, 80MB in 1273 buffers = a 64kB buffer again. A further wireshark shows a good, variable RWIN coming back from the server (Scale factor 256) that the client seems to fulfil; so perhaps ntttcp is misreporting the send window.Update 4 - July 3rd

At @karyhead's request, I've done some more testing and generated a few more captures, here: https://www.dropbox.com/s/dtlvy1vi46x75it/iperf%2Bntttcp%2Bftp-pcaps-2014-07-03.zip

- Two more

iperfs, both from Windows to the same Linux server as before (1.2.3.4): One with a 128k Socket size and default 64k window (restricts to ~5Mbit/s again) and one with a 1MB send window and default 8kb socket size. (scales higher) - One

ntttcptrace from the same Windows client to a Server 2012R2 EC2 instance (1.2.3.5). here, the throughput scales well. Note: NTttcp does something odd on port 6001 before it opens the test connection. Not sure what's happening there. - One FTP data trace, uploading 20MB of

/dev/urandomto a near identical linux host (1.2.3.6) using Cygwinncftp. Again the limit is there. The pattern is much the same using Windows Filezilla.

Changing the

iperfbuffer length does make the expected difference to the time sequence graph (much more vertical sections), but the actual throughput is unchanged.-

TomTom almost 10 yearsA rare instance of a well researched problem that is not obviously in the documentation. Nice - let's hope someone finds a solution (because somehow I think I can use that too).

-

Brian almost 10 yearsTry turning on RFC 1323 Timestamps as they are disabled by default in Windows while Linux has them enabled by default).

netsh int tcp set global timestamps=enabled -

Greg Askew almost 10 yearsThe 200 ms delay is probably the Nagle algorithm in action. As data is received by TCP on a particular connection, it sends an acknowledgement back only if one of the following conditions is true: No acknowledgement was sent for the previous segment received; A segment is received, but no other segment arrives within 200 milliseconds for that connection.

-

Kyle Brandt almost 10 yearsAny chance of putting up some packet captures from one of the slower senders somewhere?

Kyle Brandt almost 10 yearsAny chance of putting up some packet captures from one of the slower senders somewhere? -

SmallClanger almost 10 yearsI've updated my OP with the results of these tests and links to representative capture files.

-

Kyle Brandt almost 10 years@SmallClanger: Regarding "Could there be some other reason that Linux is artificially limiting the RWIN?". I'm not positive, but I would agree with your theory that the RWIN doesn't increases because it is never getting low. It would be silly to do so, because that would mean more memory usage when there is no reason to take more memory for the receive buffer.

Kyle Brandt almost 10 years@SmallClanger: Regarding "Could there be some other reason that Linux is artificially limiting the RWIN?". I'm not positive, but I would agree with your theory that the RWIN doesn't increases because it is never getting low. It would be silly to do so, because that would mean more memory usage when there is no reason to take more memory for the receive buffer. -

Kyle Brandt almost 10 years@SmallClanger: Also look at "Expert Analysis" in the two captures. In the Linux-to-Linux one, you see Zero window messages, duplicate acks etc. This is actually what one would hope to see as TCP gets pushed to its limit and then enforces some flow control. In the Windows to Linux case, everything is clean because TCP isn't "stress tested".

Kyle Brandt almost 10 years@SmallClanger: Also look at "Expert Analysis" in the two captures. In the Linux-to-Linux one, you see Zero window messages, duplicate acks etc. This is actually what one would hope to see as TCP gets pushed to its limit and then enforces some flow control. In the Windows to Linux case, everything is clean because TCP isn't "stress tested". -

Kyle Brandt almost 10 years@SmallClanger: Oh, and to help clarify, my graph if I understand it correctly displays the RWIN as it currently is over time. In other words, it is "currently available bytes" in the buffer. So the RWIN always has plenty of space.

Kyle Brandt almost 10 years@SmallClanger: Oh, and to help clarify, my graph if I understand it correctly displays the RWIN as it currently is over time. In other words, it is "currently available bytes" in the buffer. So the RWIN always has plenty of space. -

Kyle Brandt almost 10 years@SmallClanger: Another guess, try playing with

Kyle Brandt almost 10 years@SmallClanger: Another guess, try playing withnetsh int tcp show heuristics/netsh interface tcp show heuristics disabled|enabled -

hookenz almost 10 yearsAre these windows 7 clients and beyond? It's crap. Disabling autotuning usually fixed it for me. But this patch looks like it's not normally installed and appears to address the issue you describe. support.microsoft.com/kb/983528.

-

hookenz almost 10 yearsnetsh interface tcp set global autotuning=disabled usually works for me.

-

SmallClanger almost 10 years@Matt This was one of the first things I tried. As I understand it the autotuning is purely to do with the RWIN and downstream bandwidth. (This does seem to be similarly restricted, but changing autotune settings had no effect).

-

Greg Askew almost 10 years@SmallClanger: What is the version of tcpip.sys on your test system?

-

SmallClanger almost 10 years@GregAskew 6.1.7601.22648 on the Win7SP1 client. 6.3.9600.17088 On the Win8.1 client.

-

Kyle Brandt almost 10 yearsAt the rate we are all going it looks like the correct answer is going to be "Switch all your clients to Linux" ;-)

Kyle Brandt almost 10 yearsAt the rate we are all going it looks like the correct answer is going to be "Switch all your clients to Linux" ;-) -

SmallClanger almost 10 years@KyleBrandt - I'd probably accept "lol, use linux, nub" as an answer. It seems like the path of least resistance at the moment :)

-

André Borie over 8 years@SmallClanger did you ever find a solution ? I'm facing the same problem.

-

SmallClanger over 8 years@AndréBorie - I haven't. This issue that was blocked by this (for me) has gone away and I haven't had time to work through some of the later registry-based solutions suggested here. FWIW, I'm sure CTCP is the correct route, but I think there are TCP implementation issues with certain software packages that are bottlenecking even when CTCP is correctly set up.

-

-

Ryan Ries almost 10 yearsJust as a complement to this answer, the Powershell equivalent in Server 2012/Win8.1 is

Ryan Ries almost 10 yearsJust as a complement to this answer, the Powershell equivalent in Server 2012/Win8.1 isSet-NetTCPSettingwith the-CongestionProviderparameter... which accepts CCTP, DCTCP, and Default. Windows client and servers use different default congestion providers. technet.microsoft.com/en-us/library/hh826132.aspx -

Pat almost 10 yearsI have reported your "answer" because yours it's not an answer; I immediately got down-voted; now I see how "people" is up-voting your "no-answer"... really funny

-

SmallClanger almost 10 yearsI see what you're getting at, but it doesn't seem to apply. For the sake of it, I ran a 30 minute

iperfand the Window still never scaled beyond ~520kb. Something else is limiting the CWND before this aggressive algorithm can show any benefits. -

Pat almost 10 yearsthere's an old (already fixed) Vista bug that presented this kind of problems when transmitting non-HTML protocols. Does your problem looks exactly the same when transferring the same file by HTML or let say by FTP?

-

SmallClanger almost 10 years@Pat - It does. SVN Commits (via HTTP and HTTPS) and FTP transfers to another system on AWS also exhibit the same limits.

-

Pat almost 10 yearshow about Win client's firewall? can you test with firewall completely off? see here: ask.wireshark.org/questions/2365/tcp-window-size-and-scaling

-

Kyle Brandt almost 10 years@Pat: You can click the vote number too see the breakdown of Upvotes/Downvotes. Currently you have no downvotes on your answer. My answer doesn't solve his problem (but no answer does yet), it does explain and localize the problem (hopefully correctly!) which is an important step in troubleshooting.

Kyle Brandt almost 10 years@Pat: You can click the vote number too see the breakdown of Upvotes/Downvotes. Currently you have no downvotes on your answer. My answer doesn't solve his problem (but no answer does yet), it does explain and localize the problem (hopefully correctly!) which is an important step in troubleshooting. -

Pat almost 10 years@ Kyle Brandt if you accept yours is not an answer I wonder why it is not "automatically" removed without any further consideration?? and you are wrong; I got a down-vote (unupvote) "as soon" as I reported your "answer"; the one has not been removed yet. It seems you play by "special" rules here.

-

SmallClanger almost 10 years@Pat If it helps, Kyle's non-answer has been incredibly helpful. I now have a clearer idea of which buffers are being limited and I feel I'm a little closer to a proper solution as a result. Sometimes questions such as this can be a collaborative effort that, with a bit of judicious editing can become a proper Q and a proper A.

-

Pat almost 10 years@SmallClanger with all due respect, SF has a set of rules that should be followed by all its users including Kyle Brandt; if his is not an answer it must be erased or moved as a comment no matter how many friends he has among the "moderators" club.

-

SmallClanger almost 10 yearsFantasic input; thank you. I am using iperf 2.0.4, I'll experiment with the settings and update my OP with some new caps, too.

-

karyhead almost 10 yearsOk, I've updated my "answer" based on more research and your recent tests

-

SmallClanger almost 10 yearsThanks. At least in part it's nice to know I'm not just going mad. I've read a few blogs/threads from the XP/2003 days that recommend those registry settings, but they were written before Vista/2008 and I'm pretty sure they're ignored in Vista onwards. I think I actually will raise a ticket with MS about this (wish me luck)

-

karyhead almost 10 yearsThe receive window on your Windows server on the Win-to-Win test does behave quite differently than Linux i.e. it's much more dynamic. Perhaps that's a factor in terms of the dynamic send buffer working properly. I tried disabling the autotuning receive window on Linux (/proc/sys/net/ipv4/tcp_moderate_rcvbuf) and set it to the max (/proc/sys/net/ipv4/tcp_rmem), but it didn't increase tput for me. You could try that. Good luck with MSFT. I'd suggest trying to escalate the case as quickly as you can. You're probably gonna need to get past the first few levels; it may need engineering attention.

-

karyhead almost 10 yearsAnd lastly. This has been super interesting to research as I don't (didn't) know a lot about TCP on Windows. I hope you'll follow up if you find anything out from MSFT. If you don't mind, I'm gonna write this up for my packet analysis site packetbomb.com.

-

SmallClanger almost 10 yearsIf the issue is down to a lot of user-space software simply having too low a ceiling on socket buffer allocations, the question then becomes "Is it the job of the OS to intervene in these case? Could it even?". I'll follow if MS have come back with a useful answer.

-

Pat almost 10 yearsI have found comments on several forums about "netsh" commands not working as advertized in 7/8, and people being forced to manually enter the corresponding registry entries; I wonder if something like that might be happening with the CTCP option.

-

SmallClanger about 9 yearsGreat additional bit of information. Do you have a reference link for the MS bug, by any chance?

-

SmallClanger almost 8 yearsEnterprise 1511 is what we're targeting.

-

Christoph Wegener almost 8 yearsI see. It's quite difficult to decide on a branch with Windows 10 since there are so many. I have already run into one issue with Windows 10 where I couldn't use a particular feature because I was on an LTSB branch. I wish Microsoft had reduced the number of available branches overall and instead improved their documentation about what fixes and features are included in each build....