word2vec - what is best? add, concatenate or average word vectors?

Solution 1

I found an answer in the Stanford lecture "Deep Learning for Natural Language Processing" (Lecture 2, March 2016). It's available here. At minute 46, Richard Socher states that the common way is to average the two word vectors.

Solution 2

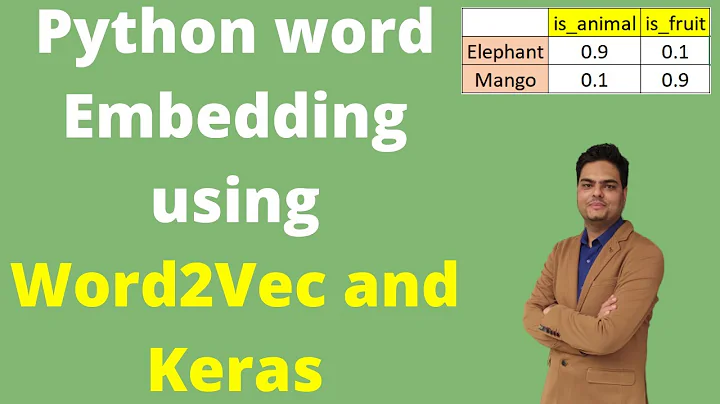

You should read this research work at least once to get the whole idea of combining word embeddings using different algebraic operators. It is my own research.

In this paper you can also see the other methods to combine word vectors.

In short, L1-normalized averaged word vectors and summed word vectors are good representations.
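As a rough illustration of the two representations named above, here is a minimal NumPy sketch. It assumes "L1-normalized average" means taking the mean of the word vectors and dividing by its L1 norm, which is only one possible reading; the paper may define it differently. The function names and the toy vectors are hypothetical.

```python
import numpy as np

def sum_vector(word_vectors):
    """Element-wise sum of all word vectors (one row per word)."""
    return np.sum(word_vectors, axis=0)

def l1_normalized_average(word_vectors):
    """Mean of the word vectors, scaled so its absolute values sum to 1.

    Assumption: this is one reading of "L1-normalized average".
    """
    mean = np.mean(word_vectors, axis=0)
    l1_norm = np.sum(np.abs(mean))
    # Guard against an all-zero mean to avoid division by zero.
    return mean / l1_norm if l1_norm > 0 else mean

# Toy 2-dimensional "word vectors" for a three-word sentence (made-up values).
sentence = np.array([[1.0, 2.0],
                     [3.0, -2.0],
                     [2.0, 3.0]])

summed = sum_vector(sentence)
averaged = l1_normalized_average(sentence)
print(summed, averaged)
```

Both results point in the same direction here; they differ only in scale, which matters for a downstream model only if it is sensitive to vector magnitude.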


Lemon

Updated on June 04, 2022

Comments

-

Lemon almost 2 years

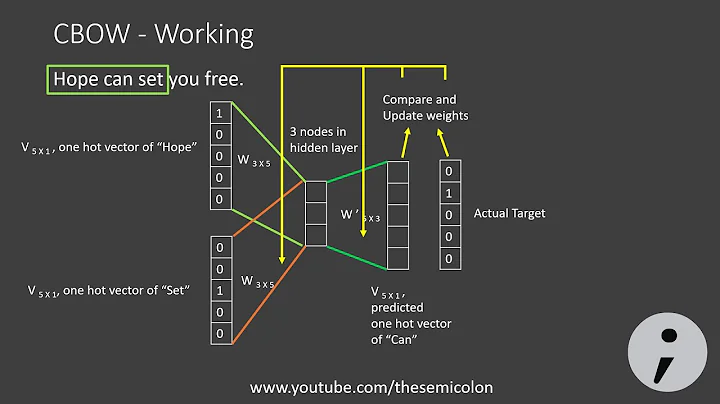

I am working on a recurrent language model. To learn word embeddings that can be used to initialize my language model, I am using gensim's word2vec model. After training, the word2vec model holds two vectors for each word in the vocabulary: the word embedding (rows of input/hidden matrix) and the context embedding (columns of hidden/output matrix).

As outlined in this post there are at least three common ways to combine these two embedding vectors:

- summing the context and word vector for each word

- summing & averaging

- concatenating the context and word vector
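For concreteness, the three combinations can be sketched with NumPy as below. The two matrices are random stand-ins, not trained embeddings. In gensim 4.x the word vectors should be available as `model.wv.vectors` and, when negative sampling is used, the context vectors as `model.syn1neg`; treat those attribute names as an assumption to check against your gensim version.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, dim = 5, 4

# Random stand-ins for the two matrices, one row per vocabulary word.
# Assumption: with a trained gensim model these would come from
# model.wv.vectors (word embeddings) and model.syn1neg (context embeddings).
word_vecs = rng.normal(size=(vocab_size, dim))
context_vecs = rng.normal(size=(vocab_size, dim))

# 1. Sum: keeps the dimensionality.
summed = word_vecs + context_vecs

# 2. Average: the sum scaled by 1/2.
averaged = (word_vecs + context_vecs) / 2.0

# 3. Concatenation: doubles the dimensionality.
concatenated = np.concatenate([word_vecs, context_vecs], axis=1)

print(summed.shape, averaged.shape, concatenated.shape)
```

Note that the sum and the average differ only by a constant factor of 2, so they give identical cosine similarities between words; only concatenation changes the embedding size the language model has to accept.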

However, I couldn't find proper papers or reports on the best strategy. So my questions are:

- Is there a common solution whether to sum, average or concatenate the vectors?

- Or does the best way depend entirely on the task in question? If so, what strategy is best for a word-level language model?

- Why combine the vectors at all? Why not use the "original" word embeddings for each word, i.e. those contained in the weight matrix between the input and hidden neurons?

Related (but unanswered) questions:

-

de1, over 6 years ago: You might want to add what you are trying to do, e.g. build a sentence or paragraph level vector. (Gensim, for example, offers doc2vec for that.)

-

Lemon, over 6 years ago: I want to initialize my recurrent language model with the word embeddings produced by gensim. So my goal is to learn an embedding for each word in my vocabulary. After training the word2vec model, I can use the original embeddings or modify them further (as outlined in the post). I want to know which strategy yields the "best" word embeddings.

-

de1, over 6 years ago: In the first post you linked, the question is about creating a sentence vector, i.e. combining the word vectors into a single vector representing the sentence (or paragraph). That is where the question about how to combine the vectors seems to be most relevant. Is that what you want to do?

-

Lemon, over 6 years ago: Not sure whether I understand your question. I am building a language model that is fed with sequential words and trained to predict the next word in a sentence. Each input word is mapped to an embedding. I use gensim to learn these word embeddings. My goal is to get the best possible word embeddings.

-

de1, over 6 years ago: Okay, then it doesn't sound like you are trying to do that. As far as I know, the combination of vectors you referred to is used to create a single vector out of a number of vectors, not to improve the word vectors themselves. But perhaps someone else knows better. To get better vectors you could look into the training data, the size of the embedding, or alternative methods such as GloVe. Including the type of each word within the sentence could also potentially improve the vectors (see Sense2Vec).

-

Lemon, over 6 years ago: I think you have misunderstood my question. The word2vec model holds two word vectors for each word - one from each weight matrix. My question is about why and how to combine these two vectors for individual words. I know about other techniques for creating word vectors and/or how to tweak the word2vec model. But my question is specifically related to word2vec and its output matrices.

-

de1, over 6 years ago: I may very well have, and I can delete this answer later. You mentioned predicting the next word before, but I probably missed the part about your model holding two vectors per word. I outlined reasons for combining vectors to create a sentence vector, but your use case seems to be different. It might be worth expanding your question to explain your use case in more detail.

-

Lemon, over 6 years ago: My model isn't holding two vectors for each word. The word2vec model is!

-

dter, about 5 years ago: First of all, please state that you're the primary author (i.e. conflict of interest). Secondly, it would be useful to summarize the relevant parts rather than just linking to your paper here.

-

Nomiluks, about 5 years ago: Actually, the work he is interested in is in the research paper, and I have explained that. There is no reason to downvote this answer. It's related to the post.

-

dnk8n, almost 3 years ago: He does say "average or concatenate". In this context, is "concatenate" a synonym for "average"? Or does he mean that one can choose either the "mean" or the "sum" of the two vectors?

-

EnesZ, over 2 years ago: Nice paper, but the question is about combining the two word2vec vectors of one particular word, not about combining the word vectors of a given sentence.