Writing a tokenizer in Python

Solution 1

As tokenizing is easy in Python, I'm wondering what your module is planned to provide. When starting a piece of software, a good design comes from thinking about the usage scenarios rather than from considering data structures first.

Your examples of the expected output are a bit confusing. I assume you want each tokenizer to return its name on the left side and a list of tokens on the right side. I played around a bit to achieve similar results, but used lists for easier handling:

```python
import re

# some tokenizers

def tokzr_WORD(txt):
    # split words
    return ('WORD', re.findall(r'(?ms)\W*(\w+)', txt))

def tokzr_SENT(txt):
    # split sentences
    return ('SENTENCE', re.findall(r'(?ms)\s*(.*?(?:\.|\?|!))', txt))

def tokzr_QA(txt):
    # split (Q, A) sequences into a flat list [q1, a1, q2, a2, ...]
    l_qa = []
    for m in re.finditer(r'(?ms)^[\s#\-\*]*(?:Q|Question)\s*:\s*(?P<QUESTION>\S.*?\?)[\s#\-\*]+(?:A|Answer)\s*:\s*(?P<ANSWER>\S.*?)$', txt):
        for k in ['QUESTION', 'ANSWER']:
            l_qa.append(m.group(k))
    return ('QA', l_qa)

def tokzr_QA_non_canonical(txt):  # Note: not supported by tokenize_recursively() as not canonical.
    # split (Q, A) sequences, keeping the group name with each token
    l_qa = []
    for m in re.finditer(r'(?ms)^[\s#\-\*]*(?:Q|Question)\s*:\s*(?P<QUESTION>\S.*?\?)[\s#\-\*]+(?:A|Answer)\s*:\s*(?P<ANSWER>\S.*?)$', txt):
        for k in ['QUESTION', 'ANSWER']:
            l_qa.append((k, m.group(k)))
    return l_qa

dict_tokzr = {  # control string: tokenizer function
    'WORD'    : tokzr_WORD,
    'SENTENCE': tokzr_SENT,
    'QA'      : tokzr_QA,
}

# the core function

def tokenize_recursively(l_tokzr, work_on, lev=0):
    if isinstance(work_on, str):
        ctrl, work_on = dict_tokzr[l_tokzr[0]](work_on)  # tokenize
    else:
        ctrl, work_on = work_on[0], work_on[1:]  # get right part
    ret = [ctrl]
    if len(l_tokzr) == 1:
        ret.append(work_on)  # add right part
    else:
        for wo in work_on:  # dive into tree
            t = tokenize_recursively(l_tokzr[1:], wo, lev + 1)
            ret.append(t)
    return ret

# just for printing

def nestedListLines(aList, ind=' ', d=0):
    """Return a multi-line string representation of aList, indenting each nesting level with ind."""
    sRet = '\n' + d * ind + '['
    nested = 0
    for i, e in enumerate(aList):
        if i:
            sRet += ', '
        if isinstance(e, list):
            sRet += nestedListLines(e, ind, d + 1)
            nested = 1
        else:
            sRet += ('\n' + (d + 1) * ind + repr(e)) if nested else repr(e)
    sRet += ('\n' + d * ind + ']') if nested else ']'
    return sRet

# main()

inp1 = """
* Question: I want try something. Should I?
* Answer : I'd assume so. Give it a try.
"""
inp2 = inp1 + 'Q: What is a good way to achieve this? A: I am not so sure. I think I will use Python.'

print(repr(tokzr_WORD(inp1)))
print(repr(tokzr_SENT(inp1)))
print(repr(tokzr_QA(inp1)))
print(repr(tokzr_QA_non_canonical(inp1)))  # Really this way?
print()

for ctrl, inp in [  # example control sequences
    ('SENTENCE-WORD', inp1),
    ('QA-SENTENCE', inp2),
]:
    res = tokenize_recursively(ctrl.split('-'), inp)
    print(nestedListLines(res))
```

By the way, Python/Lib/tokenize.py (the tokenizer for Python source code itself) might be worth a look to see how it handles things.
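For a quick look at what that module produces, here is a small sketch using the standard library's `tokenize.generate_tokens()`. The helper name `python_tokens` is made up for illustration and is not part of the module.

```python
import io
import tokenize

def python_tokens(source):
    """Return (token_type_name, token_string) pairs for a piece of Python source."""
    readline = io.StringIO(source).readline
    return [
        (tokenize.tok_name[tok.type], tok.string)
        for tok in tokenize.generate_tokens(readline)
    ]

if __name__ == "__main__":
    for name, text in python_tokens("answer = 6 * 7\n"):
        print(name, repr(text))
```

Each token carries a type name next to its text, which is close in spirit to the (name, tokens) pairs returned by the tokenizers above.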

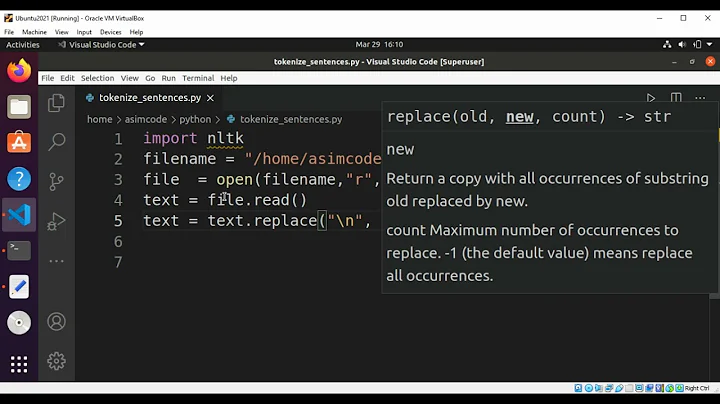

Solution 2

If I understand the question correctly, then I do think you should reinvent the wheel. I would implement state machines for the different types of tokenization you want and use Python dictionaries for saving the tokens.

http://en.wikipedia.org/wiki/Finite-state_machine

Here is an example state machine that takes a sentence with spaces and prints out the words. Of course, you could do this specific example in easier ways! But with state machines you generally get linear-time performance and can customize them easily.

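```python
text = "the quick brown fox"  # example input
state = "start"
i = 0         # current position in text
word = []     # characters of the word being built

while True:
    if state == "start":
        if i == len(text):
            state = "end"
        elif text[i] == " ":
            state = "new word"
            i = i - 1  # undo the increment below; the "new word" step moves past the space
        else:
            word.append(text[i])
    elif state == "new word":
        print(''.join(word))
        del word[:]
        state = "start"
    elif state == "end":
        print(''.join(word))
        break
    i = i + 1
```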
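One possible customization, sketched as a hypothetical `fsm_words` function (not the answer author's code): it collects the words in a list instead of printing them, and treats any run of whitespace as a single separator, so it does not emit empty words for repeated or trailing spaces as the loop above does. Each character is visited once, so it still runs in linear time.

```python
def fsm_words(text):
    """Split text into words with a two-state finite-state machine.

    'between': skipping whitespace; 'in_word': collecting characters.
    """
    words = []
    current = []
    state = "between"
    for ch in text:
        if state == "between":
            if not ch.isspace():
                current.append(ch)  # first character of a new word
                state = "in_word"
        else:  # state == "in_word"
            if ch.isspace():
                words.append("".join(current))  # word finished
                current = []
                state = "between"
            else:
                current.append(ch)
    if state == "in_word":
        words.append("".join(current))  # flush the last word
    return words
```

For example, `fsm_words("the quick  brown fox ")` gives the four words without any empty strings.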

http://docs.python.org/2/library/collections.html#collections.Counter

Then you can, for example, use this Python data structure to store your tokens. I think it's perfectly suited for your needs!
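A minimal sketch of storing tokens in a `Counter`, assuming a plain `\w+` regex as a stand-in word tokenizer (not NLTK) and lowercasing so that "I" and "i" are counted together; `count_tokens` is a hypothetical name.

```python
import re
from collections import Counter

def count_tokens(text):
    """Count lowercased word tokens with collections.Counter."""
    words = re.findall(r"\w+", text.lower())
    return Counter(words)

if __name__ == "__main__":
    counts = count_tokens("I am not so sure. I think I will use Python.")
    print(counts.most_common(1))  # [('i', 3)]
```

Since `Counter` is a `dict` subclass, it also fits the "use dictionaries for the tokens" idea above.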

Hope this was some help.


Comments

-

Legend over 1 year

I want to design a custom tokenizer module in Python that lets users specify what tokenizer(s) to use for the input. For instance, consider the following input:

Q: What is a good way to achieve this? A: I am not so sure. I think I will use Python.

I want to be able to provide NLTK's sentence tokenization, `sent_tokenize()`, as an option because it works well in many situations and I don't want to reinvent the wheel. In addition, I also want to provide a finer-grained tokenization builder (something along the lines of a rule engine). Let me explain. Assume that I provide a couple of tokenizers:

```
SENTENCE  # Tokenizes the given input by using sent_tokenize()
WORD      # Tokenizes the given input by using word_tokenize()
QA        # Tokenizes using a custom regular expression. E.g., Q: (.*?) A: (.*?)
```

I want to support rules as follows:

- QA -> SENTENCE: Apply the QA tokenizer first, followed by the sentence tokenizer

- QA: Apply just the QA tokenizer

Therefore, the expected output is as follows:

1. QA -> SENTENCE

```
[
  ('QUESTION', ('SENTENCE', 'What is a good way to achieve this?')),
  ('ANSWER', ('SENTENCE', 'I am not so sure', 'I think I will use Python'))
]
```

2. QA

```
[
  ('QUESTION', 'What is a good way to achieve this?'),
  ('ANSWER', 'I am not so sure. I think I will use Python')
]
```

What is a good design to achieve this efficiently?
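One possible design for the rules and expected output above is sketched below, under several assumptions: the input holds a single `Q: ... A: ...` pair, a rule starts with QA and has at most one further step, and regex stand-ins replace NLTK's `sent_tokenize()` and `word_tokenize()` so the sketch runs without NLTK. `TOKENIZERS`, `qa_pairs`, `run_rule` and the stand-in tokenizers are hypothetical names. Unlike the hand-written expected output, this version keeps the sentence-final periods.

```python
import re

def sentences(text):
    """Regex stand-in for NLTK's sent_tokenize(): split after '.', '?' or '!'."""
    return [s for s in re.split(r"(?<=[.?!])\s+", text.strip()) if s]

def words(text):
    """Regex stand-in for NLTK's word_tokenize()."""
    return re.findall(r"\w+", text)

# Registry of tokenizers that may follow QA in a rule (name -> function).
TOKENIZERS = {"SENTENCE": sentences, "WORD": words}

def qa_pairs(text):
    """Split 'Q: ... A: ...' text into [('QUESTION', q), ('ANSWER', a)]."""
    m = re.search(r"Q:\s*(.*?)\s*A:\s*(.*)", text, re.DOTALL)
    if m is None:
        return []
    return [("QUESTION", m.group(1).strip()), ("ANSWER", m.group(2).strip())]

def run_rule(rule, text):
    """Run a rule such as 'QA' or 'QA -> SENTENCE' on text.

    Only rules that start with QA and have at most one further step are handled.
    """
    names = [part.strip().upper() for part in rule.split("->")]
    if names[0] != "QA" or len(names) > 2:
        raise ValueError("unsupported rule: %r" % rule)
    pairs = qa_pairs(text)
    if len(names) == 1:
        return pairs
    name = names[1]
    if name not in TOKENIZERS:
        raise ValueError("unknown tokenizer: %r" % name)
    step = TOKENIZERS[name]
    # Apply the second tokenizer to each labeled part: (label, (name, tok1, tok2, ...))
    return [(label, (name,) + tuple(step(part))) for label, part in pairs]
```

Keeping the tokenizers in a name-to-function dictionary means a new step (for example NLTK's `sent_tokenize`) can be registered without touching `run_rule`.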

-


Jared about 11 years

What's wrong with just writing a function that takes input functions and applies them to the given text? You can include `word_tokenize`, `sent_tokenize`, and `regexp_tokenize`.

-
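Jared's suggestion can be sketched as a function that takes the tokenizer functions themselves, in order, and applies each one to every piece produced by the previous one. With NLTK installed you could pass `nltk.sent_tokenize` and `nltk.word_tokenize`; the hypothetical `split_sentences` and `split_words` below are regex stand-ins so the sketch runs without NLTK.

```python
import re

def apply_tokenizers(text, tokenizers):
    """Apply tokenizers in order; each one runs on every piece the previous one produced.

    Returns a nested list with one extra level of nesting per tokenizer after the first.
    Assumes at least one tokenizer is given.
    """
    first, rest = tokenizers[0], tokenizers[1:]
    pieces = first(text)
    if not rest:
        return pieces
    return [apply_tokenizers(piece, rest) for piece in pieces]

def split_sentences(text):
    # Stand-in sentence splitter: break after '.', '?' or '!' followed by whitespace.
    return [s for s in re.split(r"(?<=[.?!])\s+", text.strip()) if s]

def split_words(text):
    # Stand-in word splitter.
    return re.findall(r"\w+", text)
```

Passing callables avoids a name registry altogether; the trade-off is that rules can no longer be written as plain strings such as "QA -> SENTENCE".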

Mikaël Mayer about 11 years

Shouldn't QA -> SENTENCE look like `('QUESTION', ('SENTENCE', 'What is a good way to achieve this?')),`

-

Legend about 11 years

@MikaëlMayer: You're right! Thank you. Updated my question.

-


Asclepius about 11 years

You can probably compile and reuse the compiled regexes.

-
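A sketch of that idea applied to the QA pattern from `tokzr_QA` in Solution 1: the pattern is compiled once at import time into a module-level object. Python's `re` module already caches recently used patterns, so the speed-up is usually modest, but a single compiled object also keeps the pattern in one place instead of duplicating it. `QA_RE` and `tokzr_QA_compiled` are hypothetical names.

```python
import re

# Compiled once; same pattern and flags (MULTILINE | DOTALL) as tokzr_QA's '(?ms)' regex.
QA_RE = re.compile(
    r'^[\s#\-\*]*(?:Q|Question)\s*:\s*(?P<QUESTION>\S.*?\?)'
    r'[\s#\-\*]+(?:A|Answer)\s*:\s*(?P<ANSWER>\S.*?)$',
    re.MULTILINE | re.DOTALL,
)

def tokzr_QA_compiled(txt):
    """Same output shape as tokzr_QA, but reusing the precompiled QA_RE."""
    l_qa = []
    for m in QA_RE.finditer(txt):
        l_qa.extend([m.group('QUESTION'), m.group('ANSWER')])
    return ('QA', l_qa)
```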

Legend almost 11 years

+1 First of all, thank you very much for this. I have a couple of follow-up questions: 1. Do you have suggestions on how to handle large input? Operating on chunks does not make sense to me due to regex boundaries. 2. Is there a way to convert the non-canonical tokenizer into a canonical one? Given some input, it should first find the QUESTION and ANSWER and then individually apply the subsequent parts.

-


thoku almost 11 years

For 1.: (I don't know about regex boundaries.) To feed large data, maybe you want to consider both a) file iteration like `with open(fn) as f: for line in f:` and b) functions returning with `yield`. For 2.: Couldn't you just adapt `tokzr_QA()` to your special needs?
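thoku's two suggestions can be combined into a generator that iterates over a file line by line and yields sentences as soon as they are complete. To address the boundary concern, the text after the last sentence end is carried over and joined with the next line instead of being tokenized prematurely. This is a sketch under simple assumptions: a sentence ends at '.', '?' or '!' followed by whitespace, abbreviations such as "e.g." are not handled, the buffer grows without limit if no sentence end ever appears, and `stream_sentences` is a hypothetical name.

```python
import io
import re

# A sentence ends at '.', '?' or '!' that is followed by whitespace.
SENTENCE_END = re.compile(r"[.?!](?=\s)")

def stream_sentences(lines):
    """Yield complete sentences from an iterable of text lines (e.g. an open file).

    Text after the last sentence end is kept in a buffer and joined with the
    next line, so a sentence that spans a line break is not cut in two.
    """
    buffer = ""
    for line in lines:
        buffer += line
        start = 0
        for m in SENTENCE_END.finditer(buffer):
            sentence = " ".join(buffer[start:m.end()].split())  # normalize whitespace
            if sentence:
                yield sentence
            start = m.end()
        buffer = buffer[start:]
    rest = " ".join(buffer.split())
    if rest:
        yield rest  # trailing text without a sentence end

if __name__ == "__main__":
    text = "Q: What is a good way\nto achieve this? A: I am not\nso sure. I think I will use Python.\n"
    # With a real file: with open(fn) as f: for s in stream_sentences(f): ...
    for sentence in stream_sentences(io.StringIO(text)):
        print(sentence)
```

Because it is a generator, only the current line and the unfinished sentence are held in memory at a time.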
