XFS Can't Read the Superblock

It turns out this wasn't the potential data-loss disaster I was beginning to fear. When I noticed that the array was inactive but couldn't be assembled, I stopped it:

# mdadm -S /dev/md0

mdadm: stopped /dev/md0

Then I tried to assemble it:

# mdadm --assemble /dev/md0 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1

mdadm: /dev/md0 assembled from 10 drives - not enough to start the array while not clean - consider --force.
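The message is about the array being dirty, not about too few drives: an 11-member RAID6 can run with up to two members missing. As I understand mdadm's assembly check, a RAID4/5/6 array that was not stopped cleanly (for example after the power cut described in the question) is only started when every member is present, since parity may not match the data on stripes that were being written at that moment. The Python sketch below models that rule; `enough_to_start` is a hypothetical name, and this is a simplification, not mdadm's actual code.

```python
def enough_to_start(level: int, raid_disks: int, available: int, clean: bool) -> bool:
    """Simplified, hypothetical model of when a parity RAID array is started.

    Only RAID4, RAID5 and RAID6 are covered.
    """
    # How many members each parity level can lose and still serve data.
    tolerance = {4: 1, 5: 1, 6: 2}
    if level not in tolerance:
        raise ValueError(f"RAID level {level} is not covered by this sketch")
    if clean:
        # A cleanly stopped array may start degraded, down to its fault tolerance.
        return available >= raid_disks - tolerance[level]
    # A dirty array (e.g. after a power cut) is only started with every member
    # present, because parity written just before the crash may be inconsistent.
    return available >= raid_disks


if __name__ == "__main__":
    # This post: RAID6, 11 members, 10 present, not clean after the hard reset.
    print(enough_to_start(6, 11, 10, clean=False))  # False, hence "consider --force"
    print(enough_to_start(6, 11, 10, clean=True))   # True
```

Passing --force tells mdadm to assemble despite this check, which is the route taken further down.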

Still a little scary. Let's see what /proc/mdstat has to say:

# cat /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [multipath]

md0 : inactive sdb1[0](S) sdk1[10](S) sdf1[9](S) sdg1[8](S) sdh1[7](S) sdi1[6](S) sdj1[5](S) sde1[4](S) sdd1[3](S) sdc1[2](S)

19535119360 blocks
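Each member on the md0 line is written as device[role], optionally followed by flags such as (S) for spare or (F) for faulty. As far as I know, md lists every member of an array it has not started as (S), so ten "spares" here most likely reflects the inactive state rather than ten demoted drives; that fits with the same devices coming back as active members once the array is force-assembled. A small Python sketch for pulling such a line apart (`parse_md_line` and `Member` are hypothetical names):

```python
import re
from typing import List, NamedTuple, Tuple


class Member(NamedTuple):
    device: str
    role: int
    flags: str  # "S" = spare, "F" = faulty, "" = no flag


# A member token looks like "sdb1[0]" or "sdb1[0](S)".
MEMBER_RE = re.compile(r"(\w+)\[(\d+)\](?:\((\w+)\))?")


def parse_md_line(line: str) -> Tuple[str, str, List[Member]]:
    """Split a /proc/mdstat array line into (array name, state, members)."""
    name, _, rest = line.partition(" : ")
    state, _, member_text = rest.partition(" ")
    # Tokens without [role] (such as "raid6" on an active line) are skipped.
    members = [
        Member(dev, int(role), flags)
        for dev, role, flags in MEMBER_RE.findall(member_text)
    ]
    return name.strip(), state, members


SAMPLE = ("md0 : inactive sdb1[0](S) sdk1[10](S) sdf1[9](S) sdg1[8](S) sdh1[7](S) "
          "sdi1[6](S) sdj1[5](S) sde1[4](S) sdd1[3](S) sdc1[2](S)")

if __name__ == "__main__":
    name, state, members = parse_md_line(SAMPLE)
    spares = [m.device for m in members if m.flags == "S"]
    print(name, state, f"{len(spares)} of {len(members)} members flagged (S)")
    # md0 inactive 10 of 10 members flagged (S)
```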

All... spares...? OK, scared again. Stop it again:

# mdadm -S /dev/md0

mdadm: stopped /dev/md0

And try what it suggests, using --force:

# mdadm --assemble /dev/md0 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1 --force

mdadm: /dev/md0 has been started with 10 drives (out of 11).

10 out of 11, since one is sitting on the shelf next to the computer. So far, so good:

# cat /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [multipath]

md0 : active raid6 sdb1[0] sdk1[10] sdf1[9] sdg1[8] sdh1[7] sdi1[6] sdj1[5] sde1[4] sdd1[3] sdc1[2]

17581607424 blocks level 6, 64k chunk, algorithm 2 [11/10] [U_UUUUUUUUU]
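The end of that status line is the quickest health check. As I read the /proc/mdstat format, [11/10] means 11 configured members with 10 currently working, and each character of [U_UUUUUUUUU] stands for one role slot in order, U for up and _ for missing. The hypothetical helper below extracts the gaps; for this array it reports role 1, which matches the member list jumping from sdb1[0] to sdc1[2], i.e. the slot of the drive on the shelf.

```python
import re
from typing import List, Tuple

# Matches the "[configured/working] [U_...]" tail of an mdstat status line.
STATUS_RE = re.compile(r"\[(\d+)/(\d+)\]\s+\[([U_]+)\]")


def missing_roles(status_line: str) -> Tuple[int, int, List[int]]:
    """Return (configured, working, missing role numbers) from a status line."""
    match = STATUS_RE.search(status_line)
    if match is None:
        raise ValueError("no [n/m] [U_...] status found")
    configured, working = int(match.group(1)), int(match.group(2))
    # Each character is one role slot: 'U' = in sync, '_' = missing or failed.
    missing = [role for role, ch in enumerate(match.group(3)) if ch == "_"]
    return configured, working, missing


if __name__ == "__main__":
    line = "17581607424 blocks level 6, 64k chunk, algorithm 2 [11/10] [U_UUUUUUUUU]"
    print(missing_roles(line))  # (11, 10, [1])
```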

Breathing a sigh of relief, I ran one final test:

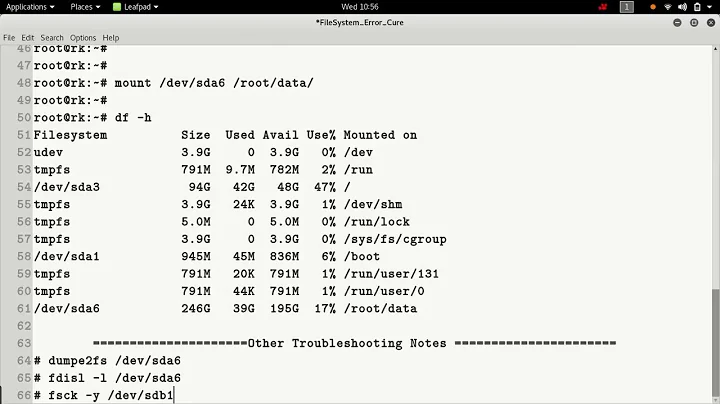

# mount /dev/md0 /mnt/data

# df -ahT

Filesystem    Type   Size  Used Avail Use% Mounted on
/dev/root     ext4    73G  6.9G   63G  10% /
proc          proc      0     0     0    - /proc
sysfs         sysfs     0     0     0    - /sys
usbfs         usbfs     0     0     0    - /proc/bus/usb
tmpfs         tmpfs  1.7G     0  1.7G   0% /dev/shm
/dev/md0      xfs     15T   14T  1.5T  91% /mnt/data
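The sizes in these outputs are consistent with each other, which is a useful sanity check before trusting the array again. Assuming /proc/mdstat counts 1 KiB blocks and the ten present members are all the same size, the inactive total of 19535119360 blocks gives 1953511936 KiB per member. RAID6 spends two members' worth on parity, so 11 members yield exactly the 17581607424 blocks reported for the running array. The 15T that df -h shows for XFS, however, fits the old 10-member layout (8 data members, about 14.55 TiB) rather than the 11-member one (about 16.37 TiB), in line with the question's note that the file system had not been grown yet. A short worked check in Python (`raid6_usable_kib` is a hypothetical helper):

```python
KIB_PER_TIB = 1024 ** 3  # 1 TiB = 1024^3 KiB


def raid6_usable_kib(members: int, member_kib: int) -> int:
    """Usable capacity of a RAID6 array: two members' worth goes to parity."""
    if members <= 2:
        raise ValueError("RAID6 needs more members than its two parity slots")
    return (members - 2) * member_kib


# Figures from the /proc/mdstat output above (assumed to be 1 KiB blocks).
inactive_total_kib = 19535119360  # sum over the 10 members present
member_kib = inactive_total_kib // 10

if __name__ == "__main__":
    print(member_kib)                        # 1953511936
    print(raid6_usable_kib(11, member_kib))  # 17581607424, matches the active array
    for members in (10, 11):
        tib = raid6_usable_kib(members, member_kib) / KIB_PER_TIB
        # prints 14.55 TiB for 10 members and 16.37 TiB for 11
        print(f"{members} members: {tib:.2f} TiB")
```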

Relief all around. I need a drink...


David

Updated on September 18, 2022


I awoke this morning to find an email from my RAID host (Linux software RAID) telling me that a drive had failed. It's consumer hardware, so that's not a big deal; I have cold spares. However, when I got to the server, the whole thing was unresponsive. Eventually I figured I had no choice but to cut the power and restart.

The system came up, the failed drive is still marked as failed, and /proc/mdstat looks correct. However, it won't mount /dev/md0 and tells me:

mount: /dev/md0: can't read superblock

Now I'm starting to worry. So I try xfs_check and xfs_repair. The former tells me:

xfs_check: /dev/md0 is invalid (cannot read first 512 bytes)

and the latter:

Phase 1 - find and verify superblock...

superblock read failed, offset 0, size 524288, ag 0, rval 0

fatal error -- Invalid argument

Now I'm getting scared. So far my Googling has been to no avail. I'm not in panic mode just yet, because I've been scared before and it has always worked out within a few days. I can still pop in my cold spare tonight, let it rebuild (which takes about 36 hours), and then see if the file system is in a more usable state. I could maybe even reshape the array back down from the current 11 drives to 10 (since I haven't grown the file system yet) and see if that helps, although that takes the better part of a week.

But while I'm at work, before I can do any of this at home tonight, I'd like to seek the help of experts here.

Does anybody more knowledgeable about file systems and RAID have any recommendations? Maybe there's something I can do over SSH from here to further diagnose the file system problem, or even perchance repair it?

Edit:

Looks like /proc/mdstat is actually offering a clue:

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [multipath]

md0 : inactive sdk1[10] sdh1[7] sdj1[5] sdg1[8] sdi1[6] sdc1[2] sdd1[3] sde1[4] sdf1[9] sdb1[0]

19535119360 blocks

Inactive? So I try to assemble the array:

# mdadm --assemble /dev/md0 /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1 /dev/sdg1 /dev/sdh1 /dev/sdi1 /dev/sdj1 /dev/sdk1

mdadm: device /dev/md0 already active - cannot assemble it

It's already active? Even though /proc/mdstat is telling me that it's inactive?