yum lockfile is held by another user

I'm pretty sure you're running into this issue because you are using Ansible 2.8, which now fails outright when a yum package install finds the lock held. An easy workaround is to set the lock_timeout parameter to 100 or more, as the default is 0.

- name: Install yum utils
  yum:
    name:
      - yum-utils
      - "@Development tools"
    lock_timeout: 180

Unfortunately, the trouble with this is that when you have a lot of Ansible tasks that install yum packages, you need to add this parameter to every single task. I've been looking for a way to set it globally, but no joy so far. Hope that helps!
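One possible way to avoid repeating the parameter, assuming Ansible 2.7 or later, is the module_defaults keyword, which sets default arguments for a module across a play or block. The sketch below is untested against this exact setup; the host pattern and task names are placeholders, and newer Ansible releases may expect the fully qualified key ansible.builtin.yum instead of yum.

```yaml
# Sketch only: "all" and the task names are illustrative placeholders.
- hosts: all
  become: true
  module_defaults:
    yum:
      lock_timeout: 180   # applied to every yum task in this play
  tasks:
    - name: Install yum utils
      yum:
        name:
          - yum-utils
          - "@Development tools"

    - name: Install another package without repeating lock_timeout
      yum:
        name: git
        state: present
```

A value set directly on a task still overrides the play-level default, so individual tasks can use a different timeout if needed.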

Links: https://github.com/ansible/ansible/issues/57189 https://docs.ansible.com/ansible/latest/modules/yum_module.html


RabT

Updated on September 18, 2022

Comments

-

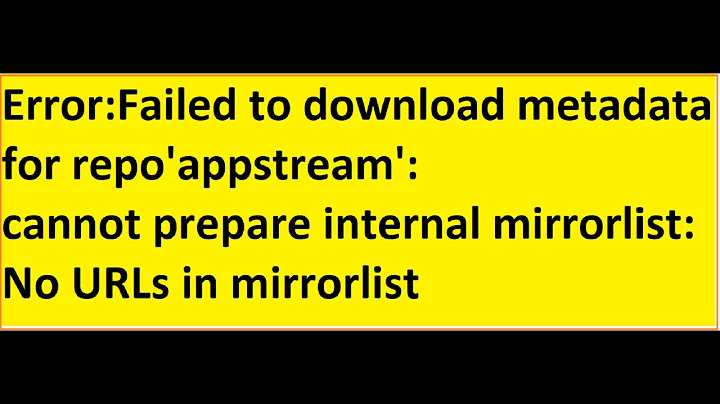

RabT almost 2 years

Amazon Linux 2 instances are operated upon by an Ansible playbook which performs multiple yum tasks one after the other. What specific changes need to be made to the syntax below in order for the successive yum tasks to run without stopping due to process conflicts?

Currently, the second yum task below is failing because Ansible does not know how to handle hearing that the preceding yum task has not yet let go of the yum lockfile.

Here is the current error message that appears when the second yum task below is called:

TASK [remove any previous versions of specific stuff] ************************************************************************************************************************************
fatal: [10.1.0.232]: FAILED! => {"changed": false, "msg": "yum lockfile is held by another process"}

The two successive yum tasks are currently written as follows:

- name: Perform yum update of all packages
  yum:
    name: '*'
    state: latest

- name: remove any previous versions of specific stuff
  yum:
    name: thing1, thing2, thing3, thing4, thing5, thing6
    state: absent

I imagine the solution is just to add something telling Ansible to wait until the first task's yum lock has been released. But what syntax should be used for this?

Zeitounator about 5 years

I have never encountered such an issue with Ansible. Are you sure a process refreshing the yum/rpm db (not related to your Ansible run) is not launched automatically on your host right after a change has been made? If you really need to cope with this, the wait_for module could help (see options path and state).
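The wait_for suggestion might look roughly like the task below, placed between the two yum tasks. This is a sketch under assumptions: /var/run/yum.pid is assumed to be the lock file path on the host (verify it on your system), and the 180-second timeout is an arbitrary value.

```yaml
# Sketch only: the lock file path and timeout are assumptions.
- name: Wait until the yum lock file is gone
  wait_for:
    path: /var/run/yum.pid   # assumed yum lock location
    state: absent            # succeed once the file no longer exists
    timeout: 180             # give up after 180 seconds
```

If the file is still present when the timeout expires, the task fails, which makes a stuck lock visible instead of letting the next yum task hit it.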

RabT about 5 years

Thank you and +1 for seeming to answer my question. I am marking this as answered because the problem does not seem to appear when I re-run the playbook after a few strategically placed additions of lock_timeout: 180. If the problem re-appears with repeated runs of the playbook, I will revisit here. Welcome to the site.
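For illustration, the two tasks from the question with lock_timeout: 180 added might look like this. Which tasks actually received the parameter is not stated, so adding it to both is an assumption.

```yaml
# Sketch only: placement of lock_timeout on both tasks is assumed.
- name: Perform yum update of all packages
  yum:
    name: '*'
    state: latest
    lock_timeout: 180   # wait up to 180 seconds for the yum lock

- name: remove any previous versions of specific stuff
  yum:
    name: thing1, thing2, thing3, thing4, thing5, thing6
    state: absent
    lock_timeout: 180
```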