Airflow tasks get stuck in "queued" status and never start running

Solution 1

Tasks getting stuck is, most likely, a bug. At the moment (<= 1.9.0alpha1) it can happen when a task cannot even start up on the (remote) worker, for example because the worker is overloaded or dependencies are missing.

This patch should resolve that issue.

It is worth investigating why your tasks do not reach the RUNNING state. Setting itself to this state is the first thing a task does. Normally the worker logs before it starts executing, and it also reports any errors. You should be able to find these entries in the task log.

Edit: As was mentioned in the comments on the original question, one example of Airflow not being able to run a task is when it cannot write to required locations. This prevents the task from proceeding, so it gets stuck. The patch fixes this by having the scheduler fail the task.
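Since a task that cannot write to a required location (such as its log folder) never reaches RUNNING, checking write access directly can rule this cause in or out. Below is a minimal sketch, assuming you supply the directories to check yourself; the helper name unwritable_dirs is hypothetical and not part of Airflow. Rather than only inspecting permission bits, it actually tries to create a file in each directory, as the user the check runs as.

```python
import os
import tempfile
from typing import Iterable, List


def unwritable_dirs(paths: Iterable[str]) -> List[str]:
    """Return the directories from `paths` the current user cannot write to.

    A directory counts as writable only if it exists and a temporary file
    can actually be created (and removed) inside it.
    """
    problems = []
    for path in paths:
        if not os.path.isdir(path):
            # Missing directories are reported as problems too.
            problems.append(path)
            continue
        try:
            # Create a throwaway file; it is deleted when the block exits.
            with tempfile.NamedTemporaryFile(dir=path):
                pass
        except OSError:
            problems.append(path)
    return problems
```

Run it as the same user the Airflow worker runs as. For example, unwritable_dirs(["/usr/local/airflow/logs"]) (a hypothetical path; adjust it to your AIRFLOW_HOME) returns that path if the worker user cannot write there.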

Solution 2

We have a workaround and want to share it here before 1.9 is officially released. Thanks to Bolke de Bruin for the updates in 1.9. In our situation, before 1.9 (we are currently using 1.8.1), the workaround is to have another DAG running that clears any task that stays in the queued state for more than 30 minutes.
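The cleanup DAG itself isn't shown here, but the decision it has to make can be sketched in plain Python: given queued task instances and the time each one entered the queue, pick those that have been waiting longer than 30 minutes. QueuedTask and its fields are hypothetical stand-ins, not Airflow's schema; in a real DAG the records would come from Airflow's metadata database, and the clearing would be done through Airflow's own clear mechanism, which this sketch does not call.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass
class QueuedTask:
    # Hypothetical record; field names are illustrative only.
    dag_id: str
    task_id: str
    state: str
    queued_at: datetime


def stale_queued(tasks: List[QueuedTask],
                 max_wait: timedelta = timedelta(minutes=30),
                 now: Optional[datetime] = None) -> List[QueuedTask]:
    """Return tasks still in the 'queued' state for longer than max_wait."""
    now = now or datetime.now(timezone.utc)
    return [t for t in tasks
            if t.state == "queued" and now - t.queued_at > max_wait]
```

Passing now explicitly makes the selection easy to test; in the periodic cleanup DAG you would leave it as the current time.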

Solution 3

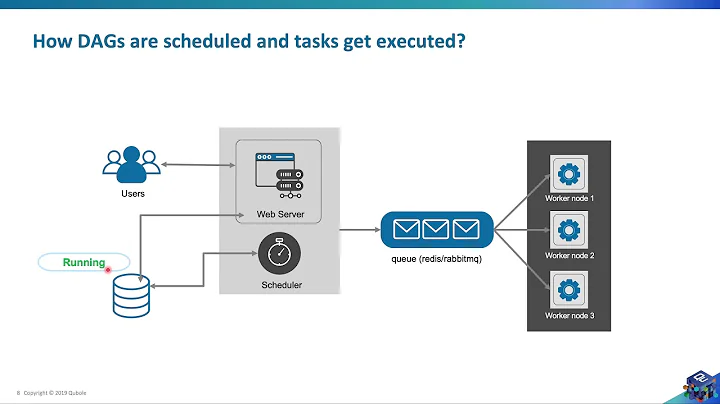

Please try running the airflow scheduler and airflow worker commands.

I think airflow worker executes each task, while airflow scheduler handles what happens between two tasks.
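To make the division of labour concrete: with the CeleryExecutor, the scheduler only queues tasks and separate worker processes pick them up, so both commands must be running; with the LocalExecutor or SequentialExecutor, the scheduler runs the tasks itself. The sketch below encodes that, assuming the Airflow 1.x CLI names used in this thread (Airflow 2 uses "airflow celery worker" instead). The function name is hypothetical.

```python
from typing import List

# Airflow 1.x CLI commands (Airflow 2 renamed the worker to "airflow celery worker").
SCHEDULER = "airflow scheduler"
CELERY_WORKER = "airflow worker"


def required_processes(executor: str) -> List[str]:
    """Return the long-running commands needed for queued tasks to execute.

    The webserver is deliberately left out: it only serves the UI and is
    not needed for tasks to move from queued to running.
    """
    if executor == "CeleryExecutor":
        # The scheduler sends tasks to the broker; a worker must consume them.
        return [SCHEDULER, CELERY_WORKER]
    if executor in ("LocalExecutor", "SequentialExecutor"):
        # The scheduler launches the task processes itself.
        return [SCHEDULER]
    raise ValueError(f"unknown executor: {executor}")
```

With CeleryExecutor, a running scheduler but no running worker is exactly the situation where tasks sit in "queued" indefinitely.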

Solution 4

I have been working with the same Docker image (puckel). My issue was resolved by:

Replacing

result_backend = db+postgresql://airflow:airflow@postgres/airflow

with

celery_result_backend = db+postgresql://airflow:airflow@postgres/airflow

I think this is fixed in puckel's latest version: the change was reverted around February 2018, and your comment was made in January.
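To see which of the two key names an airflow.cfg actually defines, you can read it with Python's configparser. This sketch assumes the setting lives in the [celery] section, as in the Airflow 1.x configs discussed here. It only reports what is present; which name your Airflow version expects should be confirmed against that version's default config. The function name is hypothetical.

```python
import configparser
from typing import Optional


def result_backend_key(cfg_text: str) -> Optional[str]:
    """Return the first known result-backend key defined in [celery], if any."""
    # interpolation=None: airflow.cfg values may contain literal '%' characters.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(cfg_text)
    if not parser.has_section("celery"):
        return None
    for key in ("celery_result_backend", "result_backend"):
        if parser.has_option("celery", key):
            return key
    return None
```

For example, `with open("airflow.cfg") as f: print(result_backend_key(f.read()))` prints the key name in use, or None if neither is set.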

Solution 5

In my case, all Airflow tasks got stuck and none of them were running. Below are the steps I took to fix it:

- Kill all airflow processes, using

  $ kill -9 <pid>

- Kill all celery processes, using

  $ pkill celery

- Increase the worker_concurrency (celery), parallelism and dag_concurrency settings in the airflow.cfg file.
- Start airflow. First check whether the airflow webserver has started automatically (in my case it runs through Gunicorn); otherwise start it using

  $ airflow webserver &

- Start the airflow scheduler

  $ airflow scheduler

- Start the airflow worker

  $ airflow worker

- Try to run a job.
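The three settings raised in the steps above cap concurrency at different levels: parallelism across the whole installation, dag_concurrency per DAG, and worker_concurrency per Celery worker. As a simplified model (it ignores pools, queues, max_active_runs and task-level limits), the number of task instances of one DAG that can run at once is the smallest of these caps, and the remaining tasks have to wait. The sketch below shows that arithmetic; the function name is hypothetical.

```python
def max_running_tasks(parallelism: int, dag_concurrency: int,
                      workers: int, worker_concurrency: int) -> int:
    """Upper bound on simultaneously running task instances of a single DAG.

    Simplified model: ignores pools, queues, max_active_runs and task-level
    limits, and assumes Celery workers are the only place tasks run.
    """
    # Total execution slots offered by all Celery workers together.
    worker_slots = workers * worker_concurrency
    return min(parallelism, dag_concurrency, worker_slots)
```

With parallelism = 3 and dag_concurrency = 3, as in the setup described in the comments below, at most 3 of the fan-out tasks run at a time however many worker slots exist. Raising only one of the three values has no effect while another one is the binding limit.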


Comments


Norio Akagi (almost 2 years ago): I'm using Airflow v1.8.1 and run all components (worker, web, flower, scheduler) on Kubernetes & Docker. I use the Celery Executor with Redis, and my tasks look like:

(start) -> (do_work_for_product1)
        ├ -> (do_work_for_product2)
        ├ -> (do_work_for_product3)
        ├ …

So the start task has multiple downstream tasks. I set up the concurrency-related configuration as below:

parallelism = 3
dag_concurrency = 3
max_active_runs = 1

Then, when I run this DAG manually (I'm not sure whether this also happens on a scheduled run), some downstream tasks get executed, but others get stuck in "queued" status.

If I clear the task from the Admin UI, it gets executed. There is no worker log (after processing the first few downstream tasks, it just doesn't output any log).

Web server's log (not sure whether "worker exiting" is related):

/usr/local/lib/python2.7/dist-packages/flask/exthook.py:71: ExtDeprecationWarning: Importing flask.ext.cache is deprecated, use flask_cache instead.
  .format(x=modname), ExtDeprecationWarning
[2017-08-24 04:20:56,496] [51] {models.py:168} INFO - Filling up the DagBag from /usr/local/airflow_dags
[2017-08-24 04:20:57 +0000] [27] [INFO] Handling signal: ttou
[2017-08-24 04:20:57 +0000] [37] [INFO] Worker exiting (pid: 37)

There is no error log on the scheduler either, and the number of tasks that get stuck changes every time I try this.

Because I also use Docker, I'm wondering if this is related: https://github.com/puckel/docker-airflow/issues/94 But so far, no clue.

Has anyone faced a similar issue, or does anyone have an idea of what I can investigate?


Chengzhi (over 6 years ago): Hi @Norio, we are having a similar issue: tasks are in the queue, but the scheduler seems to have forgotten some of them. When I run airflow scheduler again, they get picked up. I am also using 1.8.1, Kubernetes and Docker, but with LocalExecutor; same issue here.

Norio Akagi (over 6 years ago): @Chengzhi Thank you for the info. I use this shell script github.com/apache/incubator-airflow/blob/… to automatically restart the scheduler without relying on k8s's back-off, so in my case the scheduler should be periodically re-spawned, yet some tasks are never picked up... very weird.


Chengzhi (over 6 years ago): Super, thanks for sharing. Very weird; I will keep you in the loop if I find something, but it looks like this is the solution for now.


fernandosjp (over 6 years ago): I'm having a similar problem with Airflow using LocalExecutor. My setup is similar to the one @Chengzhi has. Did anyone find anything to try here?


fernandosjp (over 6 years ago): Just resolved my case, which doesn't seem to be like yours but is worth sharing. I was working with the logs/ folder and, without my noticing, the ownership of all its folders changed. Because of this, Airflow was not able to write to the log files and tasks kept getting stuck in the queued state. Changing ownership of all files back to the airflow user brought the application back to normal:

sudo chown -R airflow:airflow logs/

Nick (over 6 years ago): Please look at the stuck/queued task instance logs and provide those. We see this in our environment, and it seems to be due to a DagBag import timeout that happens when the server is overly busy. See also this bug: issues.apache.org/jira/browse/…


l0n3r4n83r (over 6 years ago): We are seeing this issue with 1.9.0: tasks get queued but never go to the running state unless triggered manually from the UI.


ugur (over 5 years ago): I am using the puckel images, and I want to run some commands on the containers, for instance airflow dag_state spark_python, but I encounter the following error: airflow.exceptions.AirflowConfigException: error: cannot use sqlite with the CeleryExecutor. Do you have any idea why I cannot run it?

Pierre (about 4 years ago): Do you have the code of this DAG available somewhere, by any chance? I also have this issue, and it will take some time before I can migrate to a newer version of Airflow.


poorva (over 3 years ago): This might not resolve the problem, but at least by running these commands you can find out what the problem is, for example if they error out.

