Are 2^n and n*2^n in the same time complexity?

Solution 1

You will have to go to the formal definition of the big O (O) in order to answer this question.

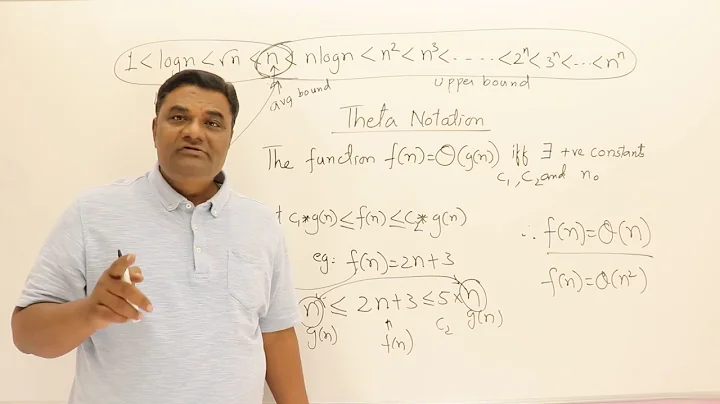

The definition is that f(x) belongs to O(g(x)) if and only if the limit limsupx → ∞ (f(x)/g(x)) exists i.e. is not infinity. In short this means that there exists a constant M, such that value of f(x)/g(x) is never greater than M.

In the case of your question let f(n) = n ⋅ 2n and let g(n) = 2n. Then f(n)/g(n) is n which will still grow infinitely. Therefore f(n) does not belong to O(g(n)).

Solution 2

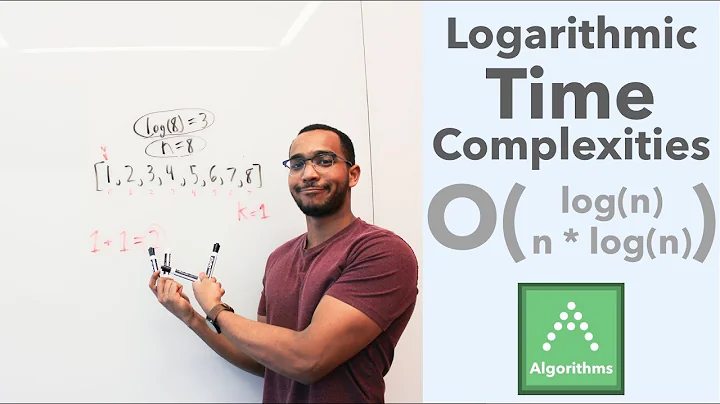

A quick way to see that n⋅2ⁿ is bigger is to make a change of variable. Let m = 2ⁿ. Then n⋅2ⁿ = ( log₂m )⋅m (taking the base-2 logarithm on both sides of m = 2ⁿ gives n = log₂m ), and you can easily show that m log₂m grows faster than m.

Solution 3

I agree that n⋅2ⁿ is not in O(2ⁿ), but I thought it should be more explicit since the limit superior usage doesn't always hold.

By the formal definition of Big-O: f(n) is in O(g(n)) if there exist constants c > 0 and n₀ ≥ 0 such that for all n ≥ n₀ we have f(n) ≤ c⋅g(n). It can easily be shown that no such constants exist for f(n) = n⋅2ⁿ and g(n) = 2ⁿ. However, it can be shown that g(n) is in O(f(n)).

In other words, n⋅2ⁿ is lower bounded by 2ⁿ. This is intuitive. Although they are both exponential and thus are equally unlikely to be used in most practical circumstances, we cannot say they are of the same order because 2ⁿ necessarily grows slower than n⋅2ⁿ.

Solution 4

I do not argue with other answers that say that n⋅2ⁿ grows faster than 2ⁿ. But n⋅2ⁿ grows is still only exponential.

When we talk about algorithms, we often say that time complexity grows is exponential.

So, we consider to be 2ⁿ, 3ⁿ, eⁿ, 2.000001ⁿ, or our n⋅2ⁿ to be same group of complexity with exponential grows.

To give it a bit mathematical sense, we consider a function f(x) to grow (not faster than) exponentially if exists such constant c > 1, that f(x) = O(cx).

For n⋅2ⁿ the constant c can be any number greater than 2, let's take 3. Then:

n⋅2ⁿ / 3ⁿ = n ⋅ (2/3)ⁿ and this is less than 1 for any n.

So 2ⁿ grows slower than n⋅2ⁿ, the last in turn grows slower than 2.000001ⁿ. But all three of them grow exponentially.

Solution 5

You asked "is the second in the same order as the first? Does the additional n multiplication there matter?" These are two different questions with two different answers.

n 2^n grows asymptotically faster than 2^n. That's that question answered.

But you could ask "if algorithm A takes 2^n nanoseconds, and algorithm B takes n 2^n nanoseconds, what is the biggest n where I can find a solution in a second / minute / hour / day / month / year? And the answers are n = 29/35/41/46/51/54 vs. 25/30/36/40/45/49. Not much difference in practice.

The size of the biggest problem that can be solved in time T is O (ln T) in both cases.

Related videos on Youtube

matty-d

Updated on June 15, 2021Comments

-

matty-d almost 3 years

Resources I've found on time complexity are unclear about when it is okay to ignore terms in a time complexity equation, specifically with non-polynomial examples.

It's clear to me that given something of the form n2 + n + 1, the last two terms are insignificant.

Specifically, given two categorizations, 2n, and n*(2n), is the second in the same order as the first? Does the additional n multiplication there matter? Usually resources just say xn is in an exponential and grows much faster... then move on.

I can understand why it wouldn't since 2n will greatly outpace n, but because they're not being added together, it would matter greatly when comparing the two equations, in fact the difference between them will always be a factor of n, which seems important to say the least.

-

Jack about 10 yearsIn my opinion, given that NLogN is regarded strictly slower than N, but most people don't really care by how much, it is safe to say N2^N is simply slower than 2^N, but not "slower enough" for people to care..

-

matty-d about 10 years@tobias_k, I understand this point, but consider the example of O(n!). Would an extra n term really be different? O(n!) is to O(n*n!) as O(n!) is to O((n+1)!) aka the same graph simply shifted. The growth is the same though... In this case even though one is strictly large, is the growth different? isn't this what time complexity cares about?

-

C.B. about 10 years@JackWu but most people don't really care by how much until you have to sort hundreds of millions of records with nlogn instead of n :)

-

chepner about 10 yearsIn fact,

n! = o((n+1)!), that is, it grows strictly slower asymptotically. -

Raphael about 10 yearsNote that this has nothing to with complexity theory, it's "just" about aymptotics. Also, this kind of questions is probably better off on Computer Science.

Raphael about 10 yearsNote that this has nothing to with complexity theory, it's "just" about aymptotics. Also, this kind of questions is probably better off on Computer Science. -

supercat about 10 years@C.B.: An algorithm which is O(N) but has a constant that's 30 times as large as one that's O(NlgN) will never significantly outperform the O(NlgN) algorithm for any plausible size of N.

-

Patrick M about 10 yearsOff topic, but doesn't stackoverflow support mathjax? I haven't learned that syntax yet, or I'd be editing up a storm...

Patrick M about 10 yearsOff topic, but doesn't stackoverflow support mathjax? I haven't learned that syntax yet, or I'd be editing up a storm... -

Anders about 10 yearsYou have to look at the graph, Jack Wu. O(n log n) grows faster than O(n) for large enough n AND larger values of n. But as we usually let the function represent time, or memory, use depending on number of element, we want them to be low.

-

Bernhard Barker about 10 years@PatrickM No, MathJax isn't activated on Stack Overflow (it does work on, for example, Computer Science).

Bernhard Barker about 10 years@PatrickM No, MathJax isn't activated on Stack Overflow (it does work on, for example, Computer Science).

-

-

matty-d about 10 yearsYes, I should have remembered this. I guess I'm used to using limits for proving little o and normally am able to use my intuition to solve big O or big theta related questions. Well done sir!

-

Alden about 10 yearsFor a slightly easier to read definition, see here

-

chepner about 10 yearsFormally speaking, you can't take the limit of

O(f(x)/g(x)); big-O notification is shorthand for a set of functions, not a single function whose value you can limit. However, I think it's true that you can show thatf(x) = O(g(x))iflim(x->infinity) f(x)/g(x)exists. -

matty-d about 10 yearsYeah I think you just need to remove that O

-

Ivaylo Strandjev about 10 years@chepner matty-d is right I just put

Oby error. Of course what I have written previously was nonsense. Also I know that f(x) belongs to O(g(x)) if lim(x->infinity) f(x)/g(x) exists. That is why I put it in my answer. -

user2357112 about 10 yearsThe limit does not have to exist; the ratio only has to be bounded above by a constant for sufficiently large x. For example, 2+sin(x) is in O(1), but (2+sin(x))/1 does not approach a limit as x->infinity.

-

David Eisenstat about 10 yearsThe definition would be correct with

lim supinstead oflim. -

John P about 10 yearsThank you! This is the best answer in my opinion. Proofs based on formal definitions are correct, but if you have a stumbling block of some sort to get over, a very comfortable and familiar analogy will do the job the best and the fastest.

John P about 10 yearsThank you! This is the best answer in my opinion. Proofs based on formal definitions are correct, but if you have a stumbling block of some sort to get over, a very comfortable and familiar analogy will do the job the best and the fastest. -

Ivaylo Strandjev about 10 years@user2357112 you are right of course. Initially I thought that using the compkete definition will make my answer more complicated and hard to understand, but I will fix the definition to be more precise. Great example btw.

-

tobias_k about 10 years

f(n) = 2*2^nI think you meantn*2^n? -

Pierre Arlaud about 10 yearsStupid question, what is

Pierre Arlaud about 10 yearsStupid question, what islg? Logarithm in base 2? -

chepner about 10 yearsIt's a lazy abbreviation. In computer science, it tends to mean base 2 because it mostly results from divide-and-conquer strategies. In big-O notation, it could represent anything, because the base-x logarithm of a number differs from its base-y logarithm by only a constant factor, regardless of x and y.

-

K.Steff about 10 years@IvayloStrandjev please note that your in short description is incorrect. This must be true for a sufficiently large

x, not for all values ofx. -

Nicu Stiurca about 10 yearsI incorporated David's and Steff's comments into the definition in the answer.

-

haccks about 10 years@SchighSchagh; What is this

haccks about 10 years@SchighSchagh; What is thissupfor ? -

chepner about 10 yearsI should note in retrospect that

lgis the ISO notation for a base-10 logarithm, rather than the base-agnostic use most commonly used when discussing asymptotic run times. See en.wikipedia.org/wiki/Logarithm#Particular_bases -

Ivaylo Strandjev about 10 years@K.Steff you are right of course. Still I will not incorporate this change in my answer, as it will almost never be important when we consider complexity functions. I want to keep the answer as comprehensive as possible. In all practical examples I can think of f(x)/g(x) will never be infinite (for positive x) and thus what I've written is accurate. I don't want to write

for sufficiently large xas then I will have to explain whatsufficiently largemeans and this will add a lot of complexity. -

gnasher729 about 10 yearsIn the last example, n*2^n is greater than 2.000001^n up to n = 34,726,000. At that point 2^n is a number with more than 10 million digits, so it doesn't really matter...

-

Andrey about 10 years@gnasher729 It's just a constant which we may omit as f(n) and c*f(n) is same complexity in terms of big-O. e.g. 40'000'000*2.000001^n is greater than n*2^n right away. But you are right, it does not really matter, I would say it does not really matter once we hit exponential grows (unless we get only small values of n).

Andrey about 10 years@gnasher729 It's just a constant which we may omit as f(n) and c*f(n) is same complexity in terms of big-O. e.g. 40'000'000*2.000001^n is greater than n*2^n right away. But you are right, it does not really matter, I would say it does not really matter once we hit exponential grows (unless we get only small values of n). -

djechlin over 8 yearsOkay, sure, but I don't get why it's more obvious that m log m grows faster than m, than it is that n 2^n grows faster than 2^n.

-

chepner over 8 yearsI didn't say it was obvious, but working from the definition, you should be able to show that for any constant c, c*m <= m log m for large enough m. (Specifically, m > 2*c). Some people describe big-oh as ignoring lower-order terms, and by that informal definition, you might think that since n is lower order than 2^n, you can ignore it. (You can't, since it's a non-constant factor of the highest-order term, rather than a lower-order term as in, for example, 2^n + n).

-

NerdOnTour about 2 yearsSome have pointed out that the limit

f(x)/g(x)forx to inftyneed not exist, butf(x)/g(x)has to be bounded by someM. I'd like to add that the absolute value off(x)/g(x)has to be considered. (Of course, in case of time complexity considerations bothfandgare positive functions, so the absolute value is not necessary.)

![2.3.2 Recurrence Relation Dividing [ T(n)=T(n/2)+ n]. #2](https://i.ytimg.com/vi/XcZw01FuH18/hqdefault.jpg?sqp=-oaymwEcCOADEI4CSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLCCmcrGVfU5Vtz0LTEDmsHZkyodAg)

![L-2.4: Recurrence Relation [ T(n)= 2T(n/2) +n] | Substitution Method | Algorithm](https://i.ytimg.com/vi/VHGisohk3Ck/hq720.jpg?sqp=-oaymwEcCNAFEJQDSFXyq4qpAw4IARUAAIhCGAFwAcABBg==&rs=AOn4CLBnYduCAIpAjDBdoeuVt_6OJcGRXg)