Auto scale RTMP live streams (NGINX-RTMP)

If you are willing to switch to HLS (nginx-rtmp supports HLS), it would, in my experience, make your life easier than trying to load balance RTMP itself. Once you have HLS transcoding set up, the only thing you need is to either put a CDN in front of your web server and let that take care of the caching, or roll your own using Varnish, Squid or even nginx itself (of course there are more possibilities). HTTP caching is so widespread that I'm sure you'll find an easy solution.

If you want to stick with RTMP, though, you could set up a similar infrastructure.

Have one master ingest server and multiple edge nodes that each pull from the ingest server. This setup would be fairly scalable and should work fine for your current load.

Edit: It seems I misunderstood your question. It would probably be easiest to have an API endpoint that you can ask which RTMP server your webcam should stream to, instead of trying to load balance.
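The selection logic behind such an endpoint could be sketched roughly as follows. This is only an illustration: the function name `pick_ingest_server`, the host names and the per-server limit are made up for the example, and how the stream counts are collected is left open.

```python
from typing import Dict, Optional


def pick_ingest_server(stream_counts: Dict[str, int], max_streams: int) -> Optional[str]:
    """Fill servers one at a time instead of round robin.

    stream_counts maps a server host (hypothetical names) to its number of
    active streams, in the order the servers were launched. The first server
    that still has room is returned; None means every server is full.
    """
    for host, active in stream_counts.items():
        if active < max_streams:
            return host
    return None


# Example: the first server is full, so the camera is sent to the second one.
print(pick_ingest_server({"rtmp-1.example": 50, "rtmp-2.example": 3}, max_streams=50))
```

A `None` result would be the signal to launch another instance. Existing connections are never touched, since only new cameras ask the endpoint where to publish.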

So once your RTMP server has reached X streams (see the nginx-rtmp stat module), you launch a new instance and direct new streams to it.
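Counting the streams could look something like the sketch below. It assumes the stat module's XML output lists each stream as a `<stream>` element and marks streams that are being published with a `<publishing/>` child; check this against the output of your own stat page. The function name is made up for the example.

```python
import xml.etree.ElementTree as ET


def count_publishing_streams(stat_xml: str) -> int:
    """Count <stream> elements that contain a <publishing/> child.

    The element names are an assumption about the stat module's XML layout.
    """
    root = ET.fromstring(stat_xml)
    return sum(
        1 for stream in root.iter("stream")
        if stream.find("publishing") is not None
    )


# Trimmed-down sample in the assumed layout: cam1 is publishing, cam2 is not.
sample = (
    "<rtmp><server><application><name>live</name><live>"
    "<stream><name>cam1</name><publishing/></stream>"
    "<stream><name>cam2</name></stream>"
    "</live></application></server></rtmp>"
)
print(count_publishing_streams(sample))
```

In practice you would fetch the XML from wherever you mounted the stat handler on each server and compare the result with your limit X.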

nginx-rtmp also has redirect functionality in `on_connect` (I can't post more than two links yet, just search for on_connect on the directives wiki page): you return a 3xx response with a Location header. I am not sure whether this supports redirecting to a different node, but it would be worth a try as well, since it would avoid having to query manually before picking a server.

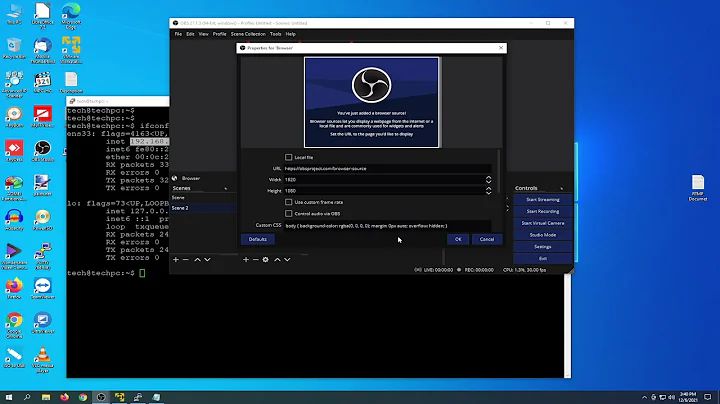

Related videos on Youtube

Junaid

Updated on September 18, 2022

Comments

Junaid over 1 year: I am using nginx-rtmp-module for live streaming. It works perfectly for 40-50 cameras on a single machine (AWS EC2 c3.large). But if I have more than 100 streams, how can I scale my servers to meet the requirement?

I have tried using ELB, but it terminates the connections once a new machine is launched, and after launching the new machine it sends incoming requests in a round-robin manner. What I want is the following:

- When the system's CPU utilization reaches 80%, launch a new server but keep the existing connections alive.

- Send new requests to the newly created server only if the first server's CPU utilization is > 80% (no round robin).

How can I achieve this? Thank you for your time.

DaveM almost 9 years: This sounds like a load balancing question, but not being an expert, I don't know how it would best be set up. I'm guessing a number of real machines or VMs, each talking to 40 cameras (then it is easy to just copy your VM when you need more cameras). The problem, as I see it, is that you probably want to be able to access all the cameras from a single IP / web address, which sounds like a load balancing or proxying trick... But I wouldn't know how to set this up. Looking forward to the answer.

Junaid almost 9 years: Thank you for the answer. I already went for the approach you mentioned (the API method). `on_connect` doesn't redirect to remote nodes, and one additional thing is that the stat page goes blank if `nginx -s reload` is called. Right now I am using `on_update`, which tells the API which camera is connected to which server. The API resolves the ID of the next connection.