Calculate the sum of the sizes of several files in Bash

Solution 1

Use stat instead of du:

#!/bin/bash

totalsize=0

while IFS= read -r i; do
    [ -f "$i" ] && totalsize=$(( totalsize + $(stat -c "%s" "$i") ))
done < <(grep -v '^#' ~/cache_temp | grep -v "dovecot.index.cache")

echo "totalsize: $totalsize bytes"
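A minimal sketch of the same loop wrapped in a function, assuming the list has one path per line. It swaps stat -c "%s" (GNU-specific; BSD and macOS stat use -f %z instead) for wc -c, which prints a byte count on both, and does the comment/dovecot.index.cache filtering with a case pattern instead of grep. The function name sum_sizes is hypothetical.

```shell
# Hypothetical helper: sum the byte sizes of the files listed in $1, one path per line.
sum_sizes() {
    local list=$1 total=0 f n
    while IFS= read -r f; do
        case $f in
            '#'*|*dovecot.index.cache*) continue ;;   # same exclusions as the grep filters
        esac
        [ -f "$f" ] || continue                       # skip missing or non-regular files
        n=$(wc -c < "$f")                             # byte count; BSD wc pads it with spaces
        total=$(( total + n ))                        # arithmetic expansion ignores the padding
    done < "$list"
    echo "$total"
}
```

Usage: sum_sizes ~/cache_temp prints a single number of bytes.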

Solution 2

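If you need to read the file names from the file, this snippet should be reasonably efficient:

xargs -d '\n' -a ~/cache_temp stat --format="%s" | paste -sd+ | bc -l

xargs prevents overflowing the argument-length limit while still passing as many file names as possible to each invocation of stat. With -d '\n' (GNU xargs), only newlines separate the names, so spaces and quotes inside them are taken literally.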
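A variant that sums with awk instead of paste and bc, assuming GNU xargs and GNU stat. The -r flag keeps xargs from running stat at all when the list is empty, and printf "%.0f" avoids some awk implementations switching to exponent notation for large totals. The function name sum_list_sizes is hypothetical.

```shell
# Hypothetical helper: sum the byte sizes of the files listed in $1 (one path per line).
# GNU xargs: -a reads arguments from the file, -d '\n' splits on newlines only,
# -r skips the stat call entirely when the list is empty.
sum_list_sizes() {
    xargs -r -d '\n' -a "$1" stat --format='%s' |
        awk '{ total += $1 } END { printf "%.0f\n", total }'   # prints 0 for an empty list
}
```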

Solution 3

According to du(1), there is a -c option whose purpose is to produce the grand total.

% du -chs * /etc/passwd
92K ABOUT-NLS
196K NEWS
12K README
48K THANKS
8,0K TODO
4,0K /etc/passwd
360K total
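To get only the grand total, in bytes, for the names listed in cache_temp, a sketch assuming GNU du: --files0-from=- reads NUL-separated names from standard input, and -b (--apparent-size --block-size=1) reports file sizes rather than disk usage in blocks. The helper name total_bytes is hypothetical. du counts a hard-linked file only once, and an empty line in the list would become an invalid zero-length name.

```shell
# Hypothetical helper: print the total apparent size, in bytes, of the files listed in $1.
total_bytes() {
    tr '\n' '\0' < "$1" |            # one path per line -> NUL-separated names
        du -cb --files0-from=- |     # per-file sizes in bytes, then a "total" line
        tail -n 1 |                  # keep only the grand-total line
        cut -f1                      # drop the tab and the word "total"
}
```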

Solution 4

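If you remove the "-h" flag from your "du" command, you'll get plain numbers instead of human-readable ones. Note that these are disk usage in blocks (1 KiB by default with GNU du), not byte sizes; GNU du -b reports apparent sizes in bytes. You can then add them with the ((a += b)) syntax: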

a=0
for i in $(find . -type f -print0 | xargs -0 du -s | awk '{print $1}'); do
    ((a += i))
done
echo "$a"

The -print0 and -0 flags to find/xargs pass file names as null-terminated strings, so names containing whitespace are not split apart.
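A sketch of the same approach that sums byte sizes instead of blocks, assuming GNU find, xargs and du. It adds xargs -r, because without it an empty match list makes xargs run a bare du, which reports on the current directory. The function name sum_tree_bytes is hypothetical.

```shell
# Hypothetical helper: sum the apparent sizes, in bytes, of all regular files under $1.
# Assumes GNU tools: du -b prints sizes in bytes, and xargs -r skips running du
# when find matches nothing (a bare du would otherwise report on ".").
sum_tree_bytes() {
    find "$1" -type f -print0 |
        xargs -0 -r du -b |
        {
            a=0
            while read -r size _; do     # du -b lines look like "<bytes><TAB><path>"
                a=$(( a + size ))        # same running total as the answer's ((a += i))
            done
            echo "$a"
        }
}
```

The total is printed inside the brace group because the loop runs in a subshell on the right-hand side of the pipe, so a would not survive past it.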

EDIT: turns out I type slower than @HBruijn comments!

Solution 5

Well... For better or worse, here's my implementation of this. I've always preferred using "while" to read lines from files.

#!/bin/bash

SUM=0

while IFS= read -r file; do
    SUM=$(( SUM + $(stat "$file" | awk '/Size:/ { print $2 }') ))
done < cache_temp

echo "$SUM"

Per janos' recommendation below:

#!/bin/bash

while IFS= read -r file; do
    stat "$file"
done < cache_temp | awk 'BEGIN { s = 0 } $1 == "Size:" { s += $2 } END { print s }'

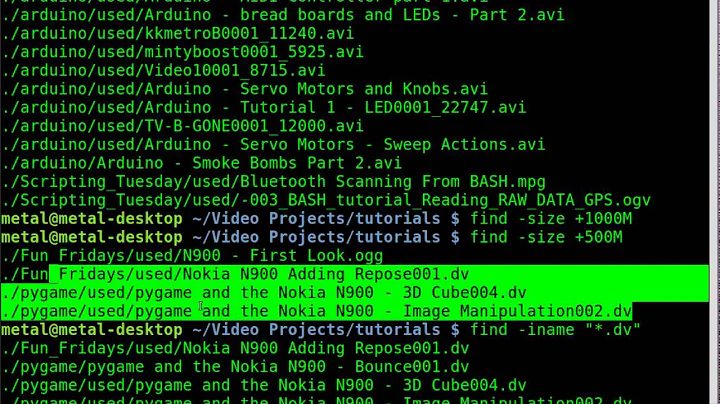

Related videos on Youtube

Piduna

Updated on September 18, 2022

Comments

Piduna over 1 year

I have a list of files in a file, cache_temp. In cache_temp:

/home/maildir/mydomain.com/een/new/1491397868.M395935P76076.nm1.mydomain.com,S=1740,W=1777
/home/maildir/mydomain.com/een/new/1485873821.M199286P14170.nm1.mydomain.com,S=440734,W=446889
/home/maildir/mydomain.com/td.pr/cur/1491397869.M704928P76257.nm1.mydomain.com,S=1742,W=1779:2,Sb
/home/maildir/mydomain.com/td.pr/cur/1501571359.M552218P73116.nm1.mydomain.com,S=1687,W=1719:2,Sa
/home/maildir/mydomain.com/td.pr/cur/1498562257.M153946P22434.nm1.mydomain.com,S=1684,W=1717:2,Sb

I have a simple script for getting the size of files from cache_temp:

#!/bin/bash
for i in `grep -v ^# ~/cache_temp | grep -v "dovecot.index.cache"`; do
    if [ -f "$i" ]; then
        size=$(du -sh "$i" | awk '{print $1}')
        echo $size
    fi
done

I have a list of sizes of files:

4,0K
4,0K
4,0K
432K
4,0K

How can I calculate the sum of them?
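If only the du -h figures are available, they can still be added by converting each suffix back to bytes. A rough awk sketch, assuming powers of 1024 (what du -h uses; du --si would use 1000) and a comma as the decimal separator. The result is only approximate because the -h figures are already rounded, so dropping -h remains the better fix. The function name sum_human_sizes is hypothetical.

```shell
# Hypothetical helper: read sizes like "4,0K" or "432K" (one per line) on stdin
# and print their approximate sum in bytes, assuming 1024-based K/M/G/T suffixes.
sum_human_sizes() {
    LC_ALL=C awk '
        {
            v = $1
            sub(/,/, ".", v)                        # "4,0K" -> "4.0K"
            unit = toupper(substr(v, length(v)))    # last character: K, M, G, T or a digit
            n = v + 0                               # numeric prefix: "4.0K" -> 4.0
            if (unit == "K")      n *= 1024
            else if (unit == "M") n *= 1024 * 1024
            else if (unit == "G") n *= 1024 * 1024 * 1024
            else if (unit == "T") n *= 1024 * 1024 * 1024 * 1024
            total += n
        }
        END { printf "%.0f\n", total }'
}
```

Usage: pipe the script's output into it, for example ./sizes.sh | sum_human_sizes, where sizes.sh is a hypothetical name for the question's script.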


HBruijn over 6 years

Don't use the -h switch for basic size calculations; taking k, M or G into account is going to be horribly complex for a simple shell script. Simply adding numbers is trivial: tldp.org/LDP/abs/html/arithexp.html

foxfabi over 6 years

Or:

(( totalsize += $(stat -c "%s" "$i") ))

foxfabi over 6 years

With good reason: mywiki.wooledge.org/BashFAQ/001

foxfabi over 6 years

And to just get the human-readable total size:

du -chs * | tail -1 | cut -f1

Xen2050 over 6 years

@glennjackman Actually the "reason" link is here mywiki.wooledge.org/DontReadLinesWithFor but both links are useful

Xen2050 over 6 years

Good option, and so close to a full answer... combined with reading files from cache_temp and maybe xargs in case of large lines & add them... I guess you'd have shearn89's answer...

tripleee over 6 years

The stat --format implies Linux, which means you have xargs -a to avoid the explicit redirection if you like.

Matthew Ife over 6 years

@tripleee Now that's a valid criticism ;) I changed the suggestion.

janos over 6 years

Please use modern $(...) subshells instead of backticks

Erik over 6 years

I see what you're saying... there's no sense running stat AND awk "wc -l cache_temp" times. Use awk once to roll everything up at the end.