Can Apache Spark run without Hadoop?

Solution 1

Spark can run without Hadoop, but some of its functionality relies on Hadoop's code (e.g. handling of Parquet files). We're running Spark on Mesos and S3, which was a little tricky to set up but works really well once done (you can read a summary of what was needed to set it up properly here).
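As a rough illustration of the S3 setup mentioned above, the sketch below assembles the Spark properties typically involved when reading `s3a://` paths without HDFS. The helper names `s3a_conf` and `to_submit_flags`, the endpoint, and the credentials are placeholders made up for this example, not the answerer's actual configuration. The S3A connector itself ships in Hadoop's `hadoop-aws` module, which is one concrete case of Spark relying on Hadoop code even when no Hadoop cluster is running.

```python
def s3a_conf(access_key, secret_key, endpoint=None):
    """Return Spark properties for the Hadoop S3A connector.

    Spark copies any property prefixed with "spark.hadoop." into the
    underlying Hadoop configuration. The function name is hypothetical.
    """
    conf = {
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
    }
    if endpoint is not None:
        # Only needed for a non-default or S3-compatible endpoint.
        conf["spark.hadoop.fs.s3a.endpoint"] = endpoint
    return conf


def to_submit_flags(conf):
    """Turn a property dict into repeated `--conf key=value` arguments."""
    flags = []
    for key in sorted(conf):
        flags += ["--conf", f"{key}={conf[key]}"]
    return flags


if __name__ == "__main__":
    # Placeholder credentials; real ones should not be hard-coded.
    print(to_submit_flags(s3a_conf("EXAMPLE_KEY", "EXAMPLE_SECRET")))
```

The resulting list can be appended to a `spark-submit` command line; the `hadoop-aws` jar and its AWS SDK dependency still have to be on the classpath for `s3a://` paths to resolve.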

(Edit) Note: since version 2.3.0, Spark also has native support for Kubernetes.

Solution 2

Spark is an in-memory distributed computing engine.

Hadoop is a framework for distributed storage (HDFS) and distributed processing (YARN).

Spark can run with or without Hadoop components (HDFS/YARN).

Distributed Storage:

Since Spark does not have its own distributed storage system, it has to depend on one of these storage systems for distributed computing.

S3 – Non-urgent batch jobs. S3 fits very specific use cases when data locality isn’t critical.

Cassandra – Perfect for streaming data analysis, but overkill for batch jobs.

HDFS – Great fit for batch jobs without compromising on data locality.

Distributed processing:

You can run Spark in three different modes: Standalone, YARN and Mesos.
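To make the modes concrete, here is a minimal sketch of how the choice of cluster manager shows up in the `--master` option of `spark-submit`. The function names and the host names are invented for the example; the URL schemes and default ports (7077 for standalone, 5050 for Mesos) follow the Spark documentation as far as I know.

```python
def master_url(manager, host=None, port=None):
    """Build the value for spark-submit's --master option.

    `manager` is one of "local", "standalone", "yarn", "mesos".
    The function name and argument layout are hypothetical.
    """
    if manager == "local":
        # Single JVM, one worker thread per core; no cluster manager at all.
        return "local[*]"
    if manager == "yarn":
        # The cluster location comes from HADOOP_CONF_DIR / YARN_CONF_DIR.
        return "yarn"
    if manager in ("standalone", "mesos"):
        if host is None:
            raise ValueError(f"{manager} mode needs a master host")
        if manager == "standalone":
            return f"spark://{host}:{port or 7077}"
        return f"mesos://{host}:{port or 5050}"
    raise ValueError(f"unknown cluster manager: {manager}")


def submit_command(manager, app, host=None, port=None):
    """Return the argument list for a basic spark-submit invocation."""
    return ["spark-submit", "--master", master_url(manager, host, port), app]


if __name__ == "__main__":
    # "master.example.internal" and "job.py" are placeholders.
    print(submit_command("standalone", "job.py", host="master.example.internal"))
```

Only the `yarn` case depends on a Hadoop component; the others leave Hadoop's resource manager out entirely.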

Have a look at the SE question below for a detailed explanation of both distributed storage and distributed processing.

Which cluster type should I choose for Spark?

Solution 3

By default, Spark does not have a storage mechanism.

To store data, it needs a fast and scalable file system. You can use S3, HDFS, or any other file system. Hadoop is an economical option due to its low cost.

Additionally, if you use Tachyon, it will boost performance with Hadoop. Hadoop is highly recommended for Apache Spark processing.

Solution 4

As per Spark documentation, Spark can run without Hadoop.

You can run it in Standalone mode, which uses Spark's built-in cluster manager instead of an external resource manager.

For a multi-node setup, you need a cluster manager (Spark's standalone manager, YARN, or Mesos) and storage that every node can reach, such as HDFS or S3.

Solution 5

Yes, Spark can run without Hadoop. All core Spark features will continue to work, but you'll miss things like easily distributing all your files (code as well as data) to all the nodes in the cluster via HDFS.

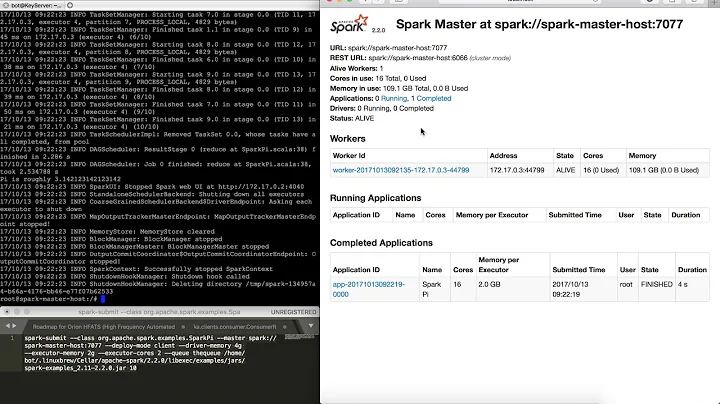

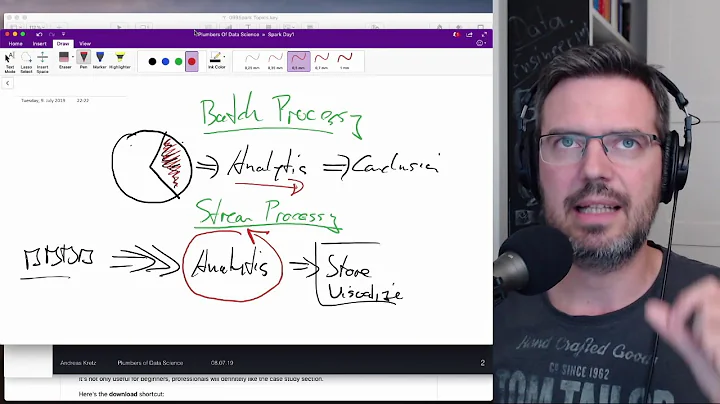

Related videos on Youtube

tourist

Updated on March 29, 2022

Comments

-

tourist about 2 years

Are there any dependencies between Spark and Hadoop?

If not, are there any features I'll miss when I run Spark without Hadoop?

-

Chris Chambers over 8 years

This is incorrect, it works fine without Hadoop in current versions.

-

NikoNyrh over 7 years

@ChrisChambers Would you care to elaborate? A comment on that issue says "In fact, Spark does require Hadoop classes no matter what", and on the downloads page the only options are a build for a specific Hadoop version or one with user-provided Hadoop. The docs also say "Spark uses Hadoop client libraries for HDFS and YARN.", and this dependency doesn't seem to be optional.

-

Jesús Zazueta almost 7 years

@NikoNyrh correct. I just tried executing the 'User provided Hadoop' download artifact and immediately got a stack trace. I also wish Spark's classpath were decoupled from core Hadoop classes. But for prototyping and testing purposes, I take no issue other than the size of the download (120 something MB) all in all. Oh well. Cheers!

-

Jesús Zazueta almost 7 years

Stack trace in question:

    $ ./spark-shell
    Exception in thread "main" java.lang.NoClassDefFoundError: org/apache/hadoop/fs/FSDataInputStream
        at org.apache.spark.deploy.SparkSubmitArguments$$anonfun$mergeDefaultSparkProperties$1.apply(SparkSubmitArguments.scala:118)
        at org.apache.spark.deploy.SparkSubmitArguments$$anonfun$mergeDefault
        at java.net.URLClassLoader.findClass(URLClassLoader.java:381)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:424)
        at java.lang.ClassLoader.loadClass(ClassLoader.java:357)
        ... 7 more
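For context on the `NoClassDefFoundError` above: the "user-provided Hadoop" build expects the Hadoop jars to be supplied through the `SPARK_DIST_CLASSPATH` environment variable, which the Spark documentation suggests setting from the output of `hadoop classpath`. The sketch below shows one way that could be wired up from Python. The function names and the example paths are hypothetical, and it assumes a `hadoop` executable is available when `detect_hadoop_classpath` is called.

```python
import os
import subprocess


def detect_hadoop_classpath(hadoop_cmd="hadoop"):
    """Ask a local Hadoop install for its classpath via `hadoop classpath`."""
    result = subprocess.run(
        [hadoop_cmd, "classpath"], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


def hadoop_free_env(hadoop_classpath, base_env=None):
    """Return a copy of the environment with SPARK_DIST_CLASSPATH set.

    Spark's launch scripts read this variable to find the Hadoop classes
    that the "user-provided Hadoop" build does not bundle.
    """
    env = dict(os.environ if base_env is None else base_env)
    env["SPARK_DIST_CLASSPATH"] = hadoop_classpath
    return env


if __name__ == "__main__":
    # "/opt/hadoop/share/hadoop/common/*" is a placeholder path.
    env = hadoop_free_env("/opt/hadoop/share/hadoop/common/*")
    print(env["SPARK_DIST_CLASSPATH"])
```

The returned dictionary can be passed as `env=` to `subprocess.run(["./spark-shell"], env=env)`; the same effect is usually achieved by exporting the variable in `conf/spark-env.sh`.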