Standalone Manager Vs. Yarn Vs. Mesos

Solution 1

Apache Spark runs in the following cluster modes:

- Local

- Standalone

- YARN

- Mesos

- Kubernetes

- Nomad

Local mode runs a Spark application on a single machine, directly on the operating system, without a cluster manager. This mode is useful for Spark application development and testing.

Standalone, YARN, Mesos, and Kubernetes are distributed modes. In a distributed environment, computing resources have to be shared among applications, so a good resource management system (resource scheduler) is needed to manage them efficiently.
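Each of these modes is selected through the master URL given to Spark (for example with `spark-submit --master`). The following is a minimal sketch of the URL forms, not a definitive reference: the helper `master_url`, the host name `cluster-host`, and the default ports are assumptions for illustration. Nomad is left out because the Spark project does not support it.

```python
def master_url(mode, host="cluster-host", port=None):
    """Return a Spark master URL for the given cluster mode.

    `host` is a placeholder; the ports are common defaults and are
    assumptions that may differ on a real cluster.
    """
    if mode == "local":
        # Run on the local machine using all available cores.
        return "local[*]"
    if mode == "standalone":
        # Spark standalone master, default port 7077.
        return f"spark://{host}:{port or 7077}"
    if mode == "yarn":
        # Spark 2.0+; the cluster location comes from the Hadoop config.
        # Older releases used "yarn-client" / "yarn-cluster" instead.
        return "yarn"
    if mode == "mesos":
        # Mesos master, default port 5050.
        return f"mesos://{host}:{port or 5050}"
    if mode == "kubernetes":
        # Kubernetes API server; 6443 is assumed here.
        return f"k8s://https://{host}:{port or 6443}"
    raise ValueError(f"unknown cluster mode: {mode}")


if __name__ == "__main__":
    for mode in ("local", "standalone", "yarn", "mesos", "kubernetes"):
        print(mode, "->", master_url(mode))
```

The same application code can usually be moved between cluster managers by changing only this URL, which is why it is normally passed at submit time rather than hard-coded.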

Standalone is good for small Spark clusters but not for bigger ones, because the Spark daemons (master and workers) run on the cluster nodes and require dedicated resources. For that reason standalone is not recommended for bigger production clusters. Standalone also supports only Spark applications and is not a general-purpose cluster manager. In an enterprise context with a variety of workloads to run, the Spark standalone cluster manager is not a good choice.

In YARN and Mesos modes, Spark runs as an application and there is no Spark daemon overhead, so either one can be used for better performance and scalability. Both YARN and Mesos are general-purpose distributed resource managers that support a variety of workloads, such as MapReduce, Spark, Flink, and Storm, with container orchestration. They are good for running large-scale enterprise production clusters.

Between YARN and Mesos, YARN is designed specifically for Hadoop workloads, whereas Mesos is designed for all kinds of workloads. YARN is an application-level scheduler and Mesos is an OS-level scheduler. It is better to use YARN if you already have a running Hadoop cluster (Apache/CDH/HDP). For a brand-new project, it is better to use Mesos (Apache, Mesosphere). The two can also be used in a colocated manner through a project called Apache Myriad.

Kubernetes - An open source system for automating deployment, scaling, and management of containerized applications, so it is used to run Spark applications in containers. Most cloud vendors, such as Google, Microsoft, and Amazon, offer Kubernetes as a managed service. An on-premises Kubernetes cluster can also run Spark applications in containers. The containers here are Docker or cgroups/Linux containers.

Nomad - Another open source system for running Spark applications. It is not officially supported by the Spark project as a cluster manager.

Of all the modes above, Apache Mesos has the best resource management capabilities.

See this link for a detailed explanation from experts of YARN vs. Mesos: http://www.quora.com/How-does-YARN-compare-to-Mesos

Solution 2

On a 3-node cluster I'd just go with the standalone manager; the overhead of the additional processes would not pay off.

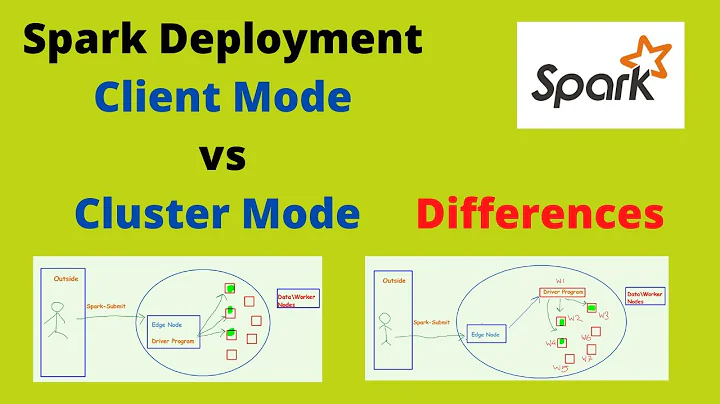

Related videos on Youtube


Updated on June 06, 2022

Comments


Abhinandan Satpute, almost 2 years: On a 3-node Spark/Hadoop cluster, which scheduler (manager) will work most efficiently? Currently I am using the standalone manager, but for each Spark job I have to explicitly specify all resource parameters (e.g. cores, memory), which I want to avoid. I have tried YARN as well, but it runs 10x slower than the standalone manager.

Would Mesos be helpful?

Cluster Details: Spark 1.2.1 and Hadoop 2.7.1
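One possible way to avoid repeating resource parameters on every job, offered here as an assumption and not something proposed in this thread, is to put cluster-wide defaults in Spark's `conf/spark-defaults.conf`, which holds one whitespace-separated `key value` pair per line. The sketch below only renders such a file; the helper name `render_spark_defaults` and the values `4g` and `8` are hypothetical, while `spark.executor.memory` and `spark.cores.max` are standard Spark properties.

```python
def render_spark_defaults(settings):
    """Render a dict of Spark properties as spark-defaults.conf text.

    Keys are sorted so the output is stable between runs.
    """
    lines = [f"{key} {value}" for key, value in sorted(settings.items())]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical defaults for a small cluster; tune for real hardware.
    defaults = {
        "spark.executor.memory": "4g",  # memory per executor
        "spark.cores.max": "8",         # total cores one application may take
    }
    print(render_spark_defaults(defaults), end="")
```

Values set this way act as defaults only: anything passed explicitly on the `spark-submit` command line or set in the application's `SparkConf` still takes precedence.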


rukletsov, almost 9 years: [Disclaimer: Not a Yarn expert] I think it strongly depends on what future workload you plan to add to your cluster. Mesos is a generic scheduler, while Yarn is more tailored for Hadoop workloads.


Ravindra babu, over 7 years: Have a look at the related SE question: stackoverflow.com/questions/28664834/…


Abhinandan Satpute, almost 9 years: Actually, in the future there will be more than 100 nodes. This is just a test environment, but I want to test everything here.


Thomas Browne, over 8 years: At what number of nodes would you say it becomes worthwhile to move from Standalone to Mesos (or Yarn)?
