Can I invoke Google to check my robots.txt?

Solution 1

You can't make them re-download your robots.txt when you want them to. Google will re-crawl it and use the new data whenever they feel it is appropriate for your site. They tend to crawl it regularly so I wouldn't expect it to take long for your updated file to be found and your pages re-crawled and re-indexed. Keep in mind that it may take some time after the new robots.txt file is found before your pages are re-crawled and even more time for them to reappear in Google's search results.

Solution 2

I faced the same problem when I started my new website www.satyabrata.com on June 16.

I had a Disallow: / in my robots.txt, exactly like Oliver. There was also a warning message in Google Webmaster Tools about blocked URLs.

The problem was solved yesterday, June 18. I did the following. I am not sure which step worked.

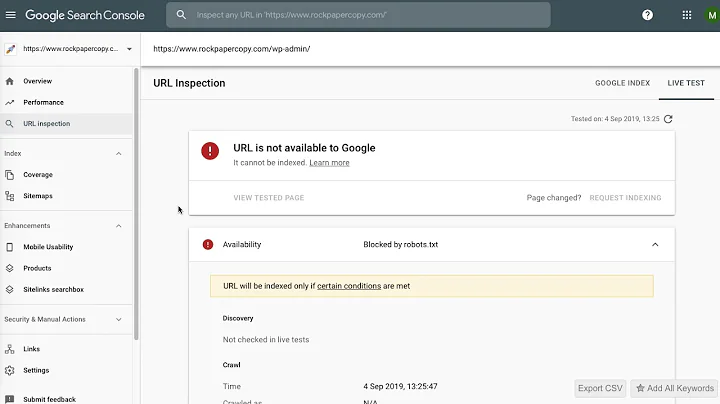

- Health -> Fetch as Google: robots.txt and the home page. Then, submit to index.

- Settings -> Preffered domain: Display URL as

www.satyabrata.com - Optimization -> Sitemaps: Added XML sitemap.

The warning message about blocked URLs is gone now and a fresh robots.txt is shown downloaded in Google Webmaster Tools.

Presently, I have only two pages indexed in Google, the home page and robots.txt. I have 10 pages on the website. I hope the rest will get indexed soon.

Related videos on Youtube

Oliver Salzburg

Never forget: :w !sudo tee % Save a file in vim when you neglected to open the file with sudo but already made changes you don't want to lose. Ctrl+X,* Evaluate globbing on your current input on the bash command line. postfix flush Pump out the postfix queue on your backup MX after you've fixed the issue with your primary MX. git tag -l | xargs -n 1 git push --delete origin; git tag | xargs git tag -d Delete all tags from a git repo - remotely and locally. sudo apt-get purge $(for tag in "linux-image" "linux-headers"; do dpkg-query -W -f'${Package}\n' "$tag-[0-9]*.[0-9]*.[0-9]*" | sort -V | awk 'index($0,c){exit} //' c=$(uname -r | cut -d- -f1,2); done) Delete old kernels https://signup.microsoft.com/productkeystart Register new Office 365 product keys for an existing tenant.

Updated on September 18, 2022Comments

-

Oliver Salzburg over 1 year

I read the answers in this question, but they still leave my question open: Does Google cache robots.txt?

I didn't find a way in the Google Webmaster Tools to invoke a re-download of my robots.txt.

Through some error, my robots.txt was replaced with:

User-agent: * Disallow: /And now all my content was removed from Google search results.

Obviously, I'm interested in correcting this as soon as possible. I already replaced the robots.txt, but I can't find a way to make Google update the cached version.

-

Admin about 12 yearsJust disallowing all your pages in robots.txt should generally not be enough to completely remove them from Google's results, as long as other sites still link to them.

Admin about 12 yearsJust disallowing all your pages in robots.txt should generally not be enough to completely remove them from Google's results, as long as other sites still link to them. -

Admin about 11 yearsHmm its a tricky one. ZenCart URLs seem to confuse the robots.txt web crawler bot and before you know it, you have blocked URLs that you don't want to be blocked. My experience is that you are better off without robots.txt, but just keeping a clean web site. I lost many web rank places due to this robots.txt error blocking of valid URLs. Because ZenCart uses dynamic URLs it seems to confuse the robots.txt web crawler resulting in blocking of URLs that you don't expect to be blocked. Not sure if it relates to the disabling of a category in ZenCart and then moving products out of that category a

Admin about 11 yearsHmm its a tricky one. ZenCart URLs seem to confuse the robots.txt web crawler bot and before you know it, you have blocked URLs that you don't want to be blocked. My experience is that you are better off without robots.txt, but just keeping a clean web site. I lost many web rank places due to this robots.txt error blocking of valid URLs. Because ZenCart uses dynamic URLs it seems to confuse the robots.txt web crawler resulting in blocking of URLs that you don't expect to be blocked. Not sure if it relates to the disabling of a category in ZenCart and then moving products out of that category a

-

-

Oliver Salzburg about 12 yearsThey re-read the file about 6 hours after I posted. Everything is back to normal by now.

-

Fiasco Labs about 12 yearsWhew! Back on track then!

-

studgeek almost 12 yearsAccording to them they check every day or so, but they probably check more often for busy sites. See webmasters.stackexchange.com/a/32949/17430.

-

studgeek almost 12 yearsI tried asking webmaster tools to fetch robots.txt, it complained it was denied by robots.txt :). So apparently that trick will not work if you have robots.txt doing a full block.

-

Kasapo over 11 yearsSame here... Request for robots.txt denied by robots.txt! Hah!

-

Fiasco Labs over 11 yearsWhelp, if you put deny on the root then I guess you're kind of SOL. In my case, it was a subfolder that was being refused, so forcing a reread of robots.txt through the mechanisms provided actually worked.

-

Oliver Salzburg over 10 yearsThat's not what this question is about

-

Stephen Ostermiller about 6 yearsI don't see what DNS or having free site has to do with robots.txt or telling Google to refetch it.

Stephen Ostermiller about 6 yearsI don't see what DNS or having free site has to do with robots.txt or telling Google to refetch it. -

Stefan Monov about 6 years@StephenOstermiller: I don't see either, but the fact is, this helped in my case.

-

Stephen Ostermiller about 6 yearsIt helped Google check your robots.txt?

Stephen Ostermiller about 6 yearsIt helped Google check your robots.txt? -

Stefan Monov about 6 years@StephenOstermiller: Yes.