Copy large file from one Linux server to another

Solution 1

Sneakernet Anyone?

Assuming this is a one-time copy, I don't suppose it's possible to just copy the file to a CD (or other media) and overnight it to the destination?

That might actually be your fastest option, since a file transfer of that size, over that connection, might not copy correctly, in which case you get to start all over again.

rsync

My second choice would be rsync, as it detects failed and partial transfers and can pick up from where it left off. Pass --partial so that an interrupted file is kept on the destination instead of being deleted.

rsync --partial --progress file1 file2 user@remotemachine:/destination/directory

The --progress flag will give you some feedback instead of just sitting there and leaving you to second-guess yourself. :-)
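Since the link in question drops transfers, it may help to re-run the command automatically until it finishes. Below is a minimal sketch of such a wrapper; retry_transfer and RETRY_DELAY are names made up for this example and are not part of rsync.

```shell
#!/bin/sh
# Re-run a command until it succeeds or the attempt budget is spent.
# retry_transfer and RETRY_DELAY are illustrative names, not rsync features.
retry_transfer() {
    rt_max=$1          # maximum number of attempts
    shift              # the remaining arguments are the command to run
    rt_attempt=1
    until "$@"; do
        if [ "$rt_attempt" -ge "$rt_max" ]; then
            echo "giving up after $rt_attempt attempts" >&2
            return 1
        fi
        rt_attempt=$((rt_attempt + 1))
        # Pause between attempts; defaults to 30 seconds.
        sleep "${RETRY_DELAY:-30}"
    done
    return 0
}
```

A call such as `retry_transfer 20 rsync --partial --progress file1 user@remotemachine:/destination/directory` would then keep retrying, and with --partial each retry can reuse what already arrived.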

Vuze (bittorrent)

Third choice would probably be to use Vuze as a torrent server and have your remote location download the file with a standard BitTorrent client. I know of others who have done this, but by the time they got it all set up and running, I could have overnighted the data.

Depends on your situation I guess.

Good luck!

UPDATE:

You know, I got to thinking about your problem a little more. Why does the file have to be a single huge tarball? Tar is perfectly capable of splitting large archives into smaller ones (to span media, for example), so why not split that huge tarball into more manageable pieces and transfer the pieces instead?

Solution 2

I've done that in the past, with a 60GB tbz2 file. I do not have the script anymore but it should be easy to rewrite it.

First, split your file into pieces of ~2GB:

split --bytes=2000000000 your_file.tgz

For each piece, compute an MD5 hash (to check integrity) and store it somewhere, then copy the pieces and their MD5 hashes to the remote site with the tool of your choice (I used a netcat-tar pipe in a screen session).

After a while, check the pieces against their MD5 hashes, then:

cat your_file* > your_remote_file.tgz

If you have also computed an MD5 of the original file, check it too. If it matches, you can untar your file and everything should be fine.

(If I find the time, I'll rewrite the script)
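In the meantime, here is a minimal sketch of the workflow described above. It is not the original script; split_with_md5 and join_and_verify are hypothetical helper names, and it assumes GNU split and md5sum are available on both ends.

```shell
#!/bin/sh
# Split a file into pieces, checksum them, then reassemble and verify.
# split_with_md5 and join_and_verify are hypothetical helper names.

split_with_md5() {
    # $1 = source file, $2 = piece size in bytes, $3 = output directory
    src=$1; size=$2; out=$3
    mkdir -p "$out" &&
    split --bytes="$size" "$src" "$out/piece." &&
    # One checksum line per piece, stored next to the pieces.
    ( cd "$out" && md5sum piece.* > pieces.md5 ) &&
    # Checksum of the whole file, for the final check after reassembly.
    md5sum < "$src" | cut -d' ' -f1 > "$out/whole.md5"
}

join_and_verify() {
    # $1 = directory holding the pieces, $2 = reassembled output file
    dir=$1; dest=$2
    # Refuse to reassemble if any piece fails its checksum.
    ( cd "$dir" && md5sum -c --quiet pieces.md5 ) &&
    cat "$dir"/piece.* > "$dest" &&
    [ "$(md5sum < "$dest" | cut -d' ' -f1)" = "$(cat "$dir/whole.md5")" ]
}
```

Run split_with_md5 on the source, copy the whole output directory across with whatever tool works, then run join_and_verify on the destination. A piece that fails its checksum can be re-sent on its own instead of restarting the whole transfer.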

Solution 3

Normally I'm a big advocate of rsync, but when transferring a single file for the first time, it doesn't seem to make much sense. If, however, you were re-transferring the file with only slight differences, rsync would be the clear winner. If you choose to use rsync anyway, I highly recommend running one end in --daemon mode to eliminate the performance-killing ssh tunnel. The man page describes this mode quite thoroughly.

My recommendation? FTP or HTTP with servers and clients that support resuming interrupted downloads. Both protocols are fast and lightweight, avoiding the ssh-tunnel penalty. Apache + wget would be screaming fast.

The netcat pipe trick would also work fine. Tar is not necessary when transferring a single large file. And the reason it doesn't notify you when it's done is that you didn't tell it to. Add a -q0 flag on the sending side (the client in the example below) so it closes the connection as soon as it reaches the end of the file, and it will behave exactly as you'd expect.

server$ nc -l -p 5000 > outfile.tgz
client$ nc -q0 server.example.com 5000 < infile.tgz

The downside to the netcat approach is that it won't allow you to resume if your transfer dies 74GB in...

Solution 4

Give netcat (sometimes called nc) a shot. The following works on a directory, but it should be easy enough to tweak for copying just one file.

On the destination box:

netcat -l -p 2342 | tar -C /target/dir -xzf -

On the source box:

tar czf - * | netcat target_box 2342

You can try removing the 'z' option from both tar commands for a bit more speed, seeing as the file is already compressed.
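If you want to convince yourself of what the two tar halves do before involving the network, you can join them with an ordinary pipe on one machine. This is only a sketch: the plain pipe stands in for the netcat connection, the 'z' option is left out as suggested above, and tar_pipe_copy is a made-up helper name.

```shell
#!/bin/sh
# Copy a directory tree through a tar stream.
# The plain pipe stands in for the netcat connection between the two boxes.
# tar_pipe_copy is a hypothetical helper name.
tar_pipe_copy() {
    # $1 = source directory, $2 = target directory (created if missing)
    mkdir -p "$2" &&
    # Left side writes the archive to stdout; right side unpacks it from stdin.
    tar -C "$1" -cf - . | tar -C "$2" -xf -
}
```

The left side of the pipe is what runs on the source box and the right side is what runs on the destination box.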

Solution 5

Although it adds a bit of overhead, BitTorrent is actually a really nice solution for transferring large files. It has a lot of nice features, like natively chunking a file and checksumming each chunk, so a corrupt chunk can be re-transmitted on its own.

A program like Azureus [now known as Vuze] contains all the pieces you will need to create, serve and download torrents in one app. Bear in mind that Azureus isn't the leanest BitTorrent solution available, and I think it requires its GUI too. There are a lot of command-line torrent tools for Linux, though.

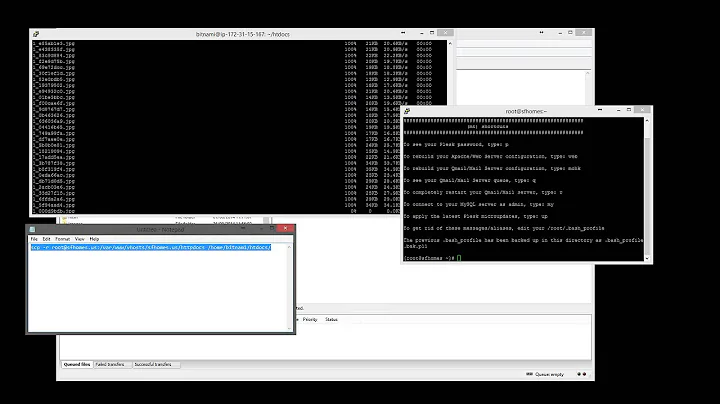

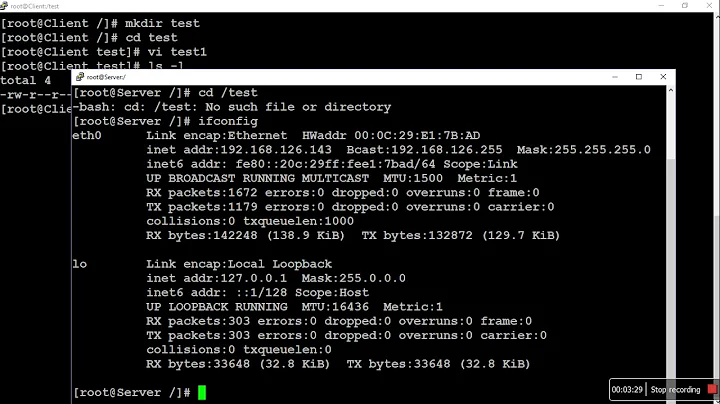

Related videos on Youtube

Nathan Milford

Updated on September 17, 2022

Comments

-

Nathan Milford almost 2 years

I'm attempting to copy a 75 gigabyte tgz (MySQL LVM snapshot) from a Linux server in our LA data center to another Linux server in our NY data center over a 10MB link.

I am getting about 20-30Kb/s with rsync or scp, and the estimated time fluctuates between 200 and 300 hours.

At the moment it is a relatively quiet link as the second data center is not yet active and I have gotten excellent speeds from small file transfers.

I've followed different TCP tuning guides I've found via Google to no avail (maybe I'm reading the wrong guides, got a good one?).

I've seen the tar+netcat tunnel tip, but my understanding is that it is only good for LOTS of small files and doesn't update you when the file is effectively finished transferring.

Before I resort to shipping a hard drive, does anyone have any good input?

UPDATE: Well... it may be the link after all :( See my tests below...

Transfers from NY to LA:

Getting a blank file.

[nathan@laobnas test]$ dd if=/dev/zero of=FROM_LA_TEST bs=1k count=4700000
4700000+0 records in
4700000+0 records out
4812800000 bytes (4.8 GB) copied, 29.412 seconds, 164 MB/s
[nathan@laobnas test]$ scp -C obnas:/obbkup/test/FROM_NY_TEST .
FROM_NY_TEST 3% 146MB 9.4MB/s 07:52 ETA

Getting the snapshot tarball.

[nathan@obnas db_backup]$ ls -la db_dump.08120922.tar.gz
-rw-r--r-- 1 root root 30428904033 Aug 12 22:42 db_dump.08120922.tar.gz
[nathan@laobnas test]$ scp -C obnas:/obbkup/db_backup/db_dump.08120922.tar.gz .
db_dump.08120922.tar.gz 0% 56MB 574.3KB/s 14:20:40 ETA

Transfers from LA to NY:

Getting a blank file.

[nathan@obnas test]$ dd if=/dev/zero of=FROM_NY_TEST bs=1k count=4700000
4700000+0 records in
4700000+0 records out
4812800000 bytes (4.8 GB) copied, 29.2501 seconds, 165 MB/s
[nathan@obnas test]$ scp -C laobnas:/obbkup/test/FROM_LA_TEST .
FROM_LA_TEST 0% 6008KB 497.1KB/s 2:37:22 ETA

Getting the snapshot tarball.

[nathan@laobnas db_backup]$ ls -la db_dump_08120901.tar.gz
-rw-r--r-- 1 root root 31090827509 Aug 12 21:21 db_dump_08120901.tar.gz
[nathan@obnas test]$ scp -C laobnas:/obbkup/db_backup/db_dump_08120901.tar.gz .
db_dump_08120901.tar.gz 0% 324KB 26.8KB/s 314:11:38 ETA

I guess I'll take it up with the folks who run our facilities; the link is labeled as an MPLS/Ethernet 10MB link. (shrug)
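As a rough sanity check on those numbers, the ETA can be recomputed from the file size and the reported rate. This is only a sketch under two assumptions: that scp's "KB" means 1024 bytes, and that the "10MB" label on the link means 10 Mbit/s. eta_hours is a made-up helper name.

```shell
#!/bin/sh
# Estimate transfer time in hours from a size in bytes and a rate in bytes/second.
# eta_hours is a hypothetical helper name.
eta_hours() {
    awk -v bytes="$1" -v rate="$2" 'BEGIN { printf "%.1f\n", bytes / rate / 3600 }'
}

# Tarball size from the ls output, at the 26.8 KB/s scp reported
# (assumption: scp's "KB" means 1024 bytes).
eta_hours 31090827509 "$(awk 'BEGIN { print 26.8 * 1024 }')"

# Same file at the nominal link rate (assumption: "10MB" means 10 Mbit/s,
# i.e. 1,250,000 bytes per second, ignoring protocol overhead).
eta_hours 31090827509 1250000
```

The first figure comes out at roughly 315 hours, in line with what scp shows, while the same file at the nominal line rate would take about 7 hours.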

-

mdpc almost 15 years

Just a comment, I recently received a release from a software vendor on a Seagate FreeAgent (USB disk) which was about 50 GBytes. The company in question did have a web presence and usually requested customers to simply download from their website. Thought it was an interesting solution and thought this might add some information to help in your decision.


Jesse almost 15 years

What kind of latency are you seeing?

-

Nathan Milford almost 15 years

About 80 ms over the link.

-

Nathan Milford almost 15 years

Yeah, now I'm just confused and frustrated. I've split it up into 50mb chunks and it still goes slowly! But rsyncing other data gets 500kb/s... there must be something terribly wrong here that I am missing...

-

lexsys almost 15 years

Inspect your traffic with tcpdump. It can help you find out what slows down the transfer.

mpbloch almost 15 years

50 GB / 1 day for next day shipping = 621+KB/s.
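For anyone who wants to redo that arithmetic with their own payload, here is a small sketch. ship_rate is a made-up helper name, and the figure only matches if "50 GB" is read as 50 x 1024^3 bytes and the result as thousands of bytes per second.

```shell
#!/bin/sh
# Effective throughput of shipping a disk: payload bytes divided by transit seconds.
# ship_rate is a hypothetical helper name.
ship_rate() {
    # $1 = payload in bytes, $2 = transit time in seconds; prints whole bytes/second
    awk -v bytes="$1" -v secs="$2" 'BEGIN { printf "%d\n", bytes / secs }'
}

# 50 GB (taken as 50 * 1024^3 bytes) delivered in one day (86400 seconds).
ship_rate $((50 * 1024 * 1024 * 1024)) 86400
```

That prints 621378 bytes per second, which is where the 621 figure comes from.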

-

-

Nathan Milford almost 15 years

I did the ol' "python -m SimpleHTTPServer" from commandlinefu on the source and wget'd the file on the destination. I still get "18.5K/s eta 15d 3h"

-

Nathan Milford almost 15 years

It is an LVM snapshot of another MySQL replica (of our main MySQL instance elsewhere). Once transferred and situated, the destination MySQL instance can simply apply the difference between that snapshot (using it as a delta) and where the master is now. That it is a MySQL backup is not relevant; it's just a big chunk of data I only need to move once.

-

Chad Huneycutt almost 15 years

+1, although probably not cost-efficient in this case. Never underestimate the bandwidth of a 747 full of hard drives :)

-

STW almost 15 years

I couldn't find the link, but a couple of years ago Google was looking at shipping crates of drives around. If you can move a crate of drives totalling 500TB from point A to point B, any way you cut it that's some mighty fine bandwidth.

-

KPWINC almost 15 years

Perhaps you are referring to this article: arstechnica.com/science/news/2007/03/…

-

Ophidian almost 15 years

+1 for rsyncd. I actually use it for transfers on my LAN because I see higher throughput compared to CIFS or NFS.

-

Nathan Milford almost 15 years

Yeah, I ended up shipping a hard drive. The real problem, or so I was told, was flow control on the switch(es).

-

mjbaybay7 over 9 years

While FTP and HTTP avoid the "ssh-tunnel penalty", the "penalty" for not encrypting the data needs to be considered.

-

Jeter-work over 7 years

BitTorrent only works better than a direct transfer if you have multiple seeders. Even if OP installs bt on multiple machines, he's only got one connection. And he's already determined that multiple small files don't go faster than one big one, which points the finger at the network connection.

-

Jeter-work over 7 years

bt only goes faster than direct transfer if there are multiple seeds. He has a single source. More importantly, he has a single source network with a bad network connection. Even copying the file to multiple locations locally and then setting up bt with multiple seeds is counterproductive because of that bad connection. Plus, making multiple copies and setting them up as seeds multiplies the copy time instead of reducing it. BT might be a workable solution if OP were trying to make a large file available to multiple recipients.