Copying faster than cp?

Solution 1

I was recently puzzled by the sometimes slow speed of cp. Specifically, how come df = pandas.read_hdf('file1', 'df') (700ms for a 1.2GB file) followed by df.to_hdf('file2') (530ms) could be so much faster than cp file1 file2 (8s)?

Digging into this:

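- cat file1 > file2 isn't any better (8.1s).
- dd bs=1500000000 if=file1 of=file2 doesn't help either (8.3s).
- rsync file1 file2 is worse (11.4s). I assumed this was its rolling checksum and block update magic kicking in because file2 existed already, although rsync defaults to whole-file transfers when both paths are local (see the comments).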

Oh, wait a second! How about unlinking (deleting) file2 first if it exists?

Now we are talking:

- rm -f file2: 0.2s (to add to any figure below).
- cp file1 file2: 1.0s.
- cat file1 > file2: 1.0s.
- dd bs=1500000000 if=file1 of=file2: 1.2s.
- rsync file1 file2: 4s.

So there you have it. Make sure the target files don't exist (or truncate them, which is presumably what pandas.to_hdf() does).
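The same idea can be scripted. Here is a minimal Python sketch, assuming a POSIX-like system; the helper name copy_fresh is made up for illustration and is not part of any library. It unlinks the destination if it exists and then copies, mirroring rm -f file2 followed by cp file1 file2.

```python
import os
import shutil


def copy_fresh(src, dst):
    """Copy src to dst, unlinking any existing dst first (like rm -f dst && cp src dst)."""
    # Guard against destroying the source when both names refer to the same file.
    if os.path.exists(dst) and os.path.samefile(src, dst):
        raise ValueError("source and destination are the same file")
    try:
        os.unlink(dst)  # equivalent of rm -f: a missing destination is not an error
    except FileNotFoundError:
        pass
    shutil.copyfile(src, dst)  # copies file contents only, not permissions or timestamps
    return dst
```

Note that unlinking replaces the destination with a new inode, so any other hard links to the old file2 keep the old contents.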

Edit: this was without emptying the cache before any of the commands, but as noted in the comments, doing so just consistently adds ~3.8s to all numbers above.

Also noteworthy: this was tried on various Linux versions (CentOS with a 2.6.18-408.el5 kernel, and Ubuntu with a 3.13.0-77-generic kernel), and on ext4 as well as ext3. Interestingly, on a MacBook running macOS 10.12.6, there is no difference and both versions (with or without an existing file at the destination) are fast.
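Since the effect depends on the OS and filesystem, it may be worth measuring on your own machine. The following is a rough Python sketch of such a comparison, not the method used for the numbers above: the function names, the 64 MiB default size, and the workdir_parent parameter are all assumptions for illustration. It does not drop the page cache, and the temporary directory must live on the filesystem you care about (the default temp location is often tmpfs, where the result says little about ext3/ext4).

```python
import os
import shutil
import tempfile
import time


def time_copy(src, dst, unlink_first):
    """Return the seconds taken to copy src to dst, optionally unlinking dst first."""
    start = time.perf_counter()
    if unlink_first and os.path.exists(dst):
        os.unlink(dst)  # the rm -f step is included in the measured time
    shutil.copyfile(src, dst)
    return time.perf_counter() - start


def compare(size_bytes=64 * 1024 * 1024, workdir_parent=None):
    """Time an overwrite-in-place copy against an unlink-then-copy of the same file."""
    with tempfile.TemporaryDirectory(dir=workdir_parent) as workdir:
        src = os.path.join(workdir, "file1")
        dst = os.path.join(workdir, "file2")
        with open(src, "wb") as f:
            f.write(os.urandom(size_bytes))
        shutil.copyfile(src, dst)  # make sure the destination already exists
        overwrite = time_copy(src, dst, unlink_first=False)
        fresh = time_copy(src, dst, unlink_first=True)
    return {"overwrite": overwrite, "unlink_first": fresh}


if __name__ == "__main__":
    for label, seconds in compare().items():
        print(f"{label}: {seconds:.3f}s")
```

A single run is noisy; repeating the measurement several times with a file size close to the real workload gives a more trustworthy picture.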

Solution 2

Copying a file on a local disk spends about 99% of its time reading from and writing to the disk. If you try to compress the data, you increase CPU load without reducing the amount of data read or written, so compression will actually slow down your copy.

rsync will help if you already have a copy of the data and want to bring it "up to date".

But if you want to create a brand new copy of a tree then you can't really do much better than your cp command.

Solution 3

On the same partition (and filesystem) you can use cp -l (a GNU cp option) to create hard links instead of copies. Hard link creation is much faster than copying things (but, of course, does not work across different disk partitions).

As a small example:

    $ time cp -r mydir mydira
    real 0m1.999s
    user 0m0.000s
    sys 0m0.490s

    $ time cp -rl mydir mydirb
    real 0m0.072s
    user 0m0.000s
    sys 0m0.007s

That's roughly a 28-fold improvement. But that test used only ~300 (rather small) files. Since creating a hard link costs about the same regardless of file size, a few bigger files should show a larger gain, and a lot of smaller files a smaller one.
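To make the trade-off concrete, here is a small Python sketch that builds a hard-linked tree, roughly in the spirit of cp -rl, and checks whether two names share one inode. The helper names link_tree and same_file are made up for illustration; shutil.copytree with copy_function=os.link is a standard-library way to link rather than copy each file.

```python
import os
import shutil


def link_tree(src_dir, dst_dir):
    """Recreate src_dir's directory structure at dst_dir, hard-linking each
    file instead of copying it. dst_dir must not exist yet and must be on
    the same filesystem as src_dir."""
    return shutil.copytree(src_dir, dst_dir, copy_function=os.link)


def same_file(path_a, path_b):
    """Return True if both names refer to the same inode on the same device."""
    a, b = os.stat(path_a), os.stat(path_b)
    return (a.st_dev, a.st_ino) == (b.st_dev, b.st_ino)
```

Because both names point at one underlying file, writing through either of them changes what the other sees, so this is not a substitute when independent copies are needed.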

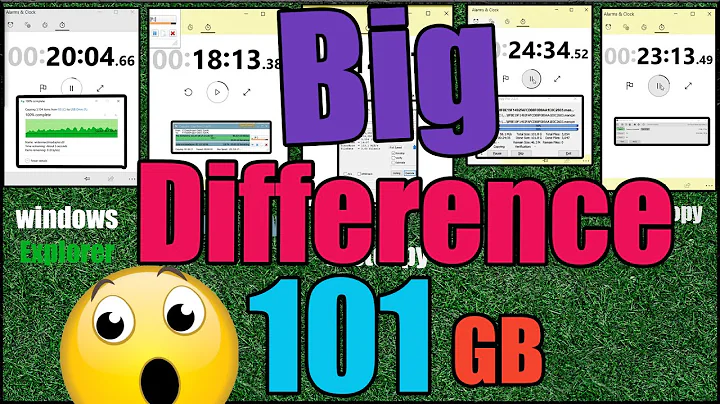

Related videos on Youtube

Raven

Updated on September 18, 2022

Comments

-

Gilles 'SO- stop being evil' (over 7 years ago): If this is on zfs, you can make a snapshot, which is practically instantaneous. The cost of the copy (both in time and in disk space) is then only paid when one of the sides is modified. I don't know what commands to use for this; I encourage someone who does to post an answer explaining how to do it.

-

Andrew Henle (over 7 years ago): If you could post the output of iostat while this copy operation is running, you might get more help from readers. Assuming you're running on Solaris from the /solaris tag, post several lines from iostat -sndzx 2. That will emit an output line every 2 seconds, with the first line being not very useful. Again, that needs to be run while your cp -r ... command is running.

-

CJ7 (over 7 years ago): It's on the same partition.

-

CJ7 (over 7 years ago): What are hard links? I need actual copies of the files to play around with.

-

Stephen Harris (over 7 years ago): Hard links make each filename map to the same file; they're not copies. If you modify the new name you modify the original.

-

grochmal (over 7 years ago): @CJ7 - Hard links are just extra inodes pointing to the same data. If you change the copy the original file is changed too.

-

CJ7 (over 7 years ago): Also I'm on Solaris and my cp does not have a -l option.

-

Bratchley (over 7 years ago): They could use CoW snapshots. That's essentially creating a new copy of the files, and you only pay for the initial snapshot operation and the subsequent increased latency for writes to the new "copies".

-

grochmal (over 7 years ago): @CJ7 - Yeah, POSIX cp will not have -l. You will need to go with find -exec ln -P ... \;. But then again, you're after copies, not hard links.

-

Stephen Harris (over 7 years ago): @Bratchley did you read the question? CoW does not meet the requirements (and it's Solaris, anyway).

-

Stephen Harris (over 7 years ago): This doesn't meet the requirements; hard links to existing files are not copies; modify the "copy" and you modify the original.

-

grochmal (over 7 years ago): Well, you might do some form of tmpfs, mount it somewhere, CoW onto it, and then edit the "copies". But (1) that's horribly far-fetched, and (2) not on Solaris.

-

Bratchley (over 7 years ago): It does meet the requirements. It creates an additional set of copies. Also Solaris quite famously supports snapshots.

-

Bratchley (over 7 years ago): @grochmal If it's a reasonably current version of Solaris, it's almost certainly going to be using ZFS, which supports snapshotting. There are then a variety of ways to get those "copies" to show up in a desired part of the filesystem.

-

grochmal (over 7 years ago): @Bratchley - I forgot that Solaris has ZFS, yeah, that's actually a good bet. I know little about ZFS though.

-

Bratchley (over 7 years ago): Ha, yeah. Solaris is the birthplace of ZFS ;-)

-

grochmal (over 7 years ago): @StephenHarris - Well, it may meet the requirements of someone who gets here from a Google search. In U&L theory it answers the question at hand. The comment discussion with the OP is an extra that shows that the answer does not meet the OP's requirements. (It's the difference between an answer to the question and the most useful answer to the OP.)

-

grochmal (over 7 years ago): @StephenHarris - Semantics are heavily subjective, and you're too heated today/yesterday. I use hard links a lot in my home directory, for example. Had I searched for different ways of doing cp, I'd have liked to see an alternative with hard links. If I remember correctly (that was several years ago), had I learned about hard links sooner, I would have used them sooner.

-

Gilles 'SO- stop being evil' (over 7 years ago): @Eric dd can only copy a single file or a whole filesystem image, but not a directory tree, which is what was asked here.

-

Eric (over 7 years ago): @gilles I suppose you could use find to run dd for a quicker copy, but you're right that I missed the recursive part of the question. I wonder if cpio or tar would be quicker than cp.

-

Andrew Henle (almost 6 years ago): Did you account for the source file contents potentially being held in cache?

-

roaima (almost 6 years ago): Rsync won't do delta magic on local copies. At best it becomes like cp; in practice it's a little slower.

-

vijay (over 3 years ago): So, why was it faster to rm first, then cp/cat rather than just plain cp/cat? Fragmentation? Something else?

-

TIm Richardson (over 3 years ago): I am not sure, but it may be related to the way overwriting a large file is implemented on certain filesystems, e.g. ext3 and ext4. BTW, I also just tested with truncate -s 0 file2 && cp file1 file2: same timing as rm -f file2 && cp file1 file2 (fast).