Dataframe: how to groupBy/count then order by count in Scala

10,356

Solution 1

You can use sort or orderBy as below

val df_count = df.groupBy("id").count()

df_count.sort(desc("count")).show(false)

df_count.orderBy($"count".desc).show(false)

Don't use collect() since it brings the data to the driver as an Array.

Hope this helps!

Solution 2

//import the SparkSession which is the entry point for spark underlying API to access

import org.apache.spark.sql.SparkSession

import org.apache.spark.sql.functions._

val pathOfFile="f:/alarms_files/"

//create session and hold it in spark variable

val spark=SparkSession.builder().appName("myApp").getOrCreate()

//read the file below API will return DataFrame of Row

var df=spark.read.format("csv").option("header","true").option("delimiter", "\t").load("file://"+pathOfFile+"db.tab")

//groupBY id column and take count of the column and order it by count of the column

df=df.groupBy(df("id")).agg(count("*").as("columnCount")).orderBy("columnCount")

//for projecting the dataFrame it will show only top 20 records

df.show

//for projecting more than 20 records eg:

df.show(50)

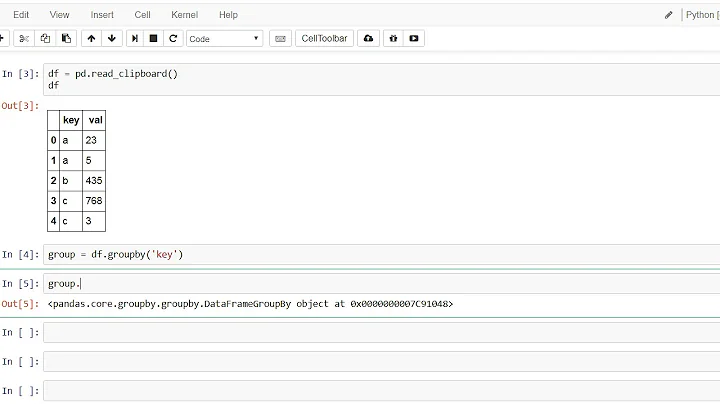

Related videos on Youtube

Author by

HISI

Updated on August 12, 2022Comments

-

HISI over 1 year

HISI over 1 yearI have a dataframe that contains a thousands of rows, what I'm looking for is to group by and count a column and then order by the out put: what I did is somthing looks like :

import org.apache.spark.sql.hive.HiveContext import sqlContext.implicits._ val objHive = new HiveContext(sc) val df = objHive.sql("select * from db.tb") val df_count=df.groupBy("id").count().collect() df_count.sort($"count".asc).show() -

HISI over 5 yearsI used this one instead:

HISI over 5 yearsI used this one instead:df.groupBy("id").count().orderBy($"count".desc).show()in only one line